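Preface:

To better sort out how the buffer data flow operates during preview, the previous article described the architecture of buffer operations under a Surface. Building on that, this article focuses on how Camera2Client and Camera3Device maintain, read, and write these video frame buffers.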

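1. The Camera3Device::convertMetadataListToRequestListLocked function

Following the preview control flow from the previous post, the data flow centers on the step that converts the CameraMetadata mPreviewRequest into a CaptureRequest in preview mode. Recall that mPreviewRequest mainly carries the index values of the OutputStreams that Camera3Device has to operate on for the current preview.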
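As a rough illustration of that conversion, the sketch below resolves a list of stream ids into the stream objects a device already tracks. It is not the AOSP code: `OutputStream`, `CaptureRequest`, and `buildRequest` are hypothetical stand-ins, and the real function works on CameraMetadata entries rather than a plain vector.

```cpp
#include <cassert>
#include <cstdint>
#include <map>
#include <vector>

// Hypothetical stand-ins for illustration; these are not the AOSP types.
struct OutputStream {
    int id;
};

struct CaptureRequest {
    std::vector<OutputStream*> outputStreams;
};

// Resolve every stream id listed in the request settings to the stream
// object the device already tracks. Returns false as soon as an id is
// unknown; in that case `out` may hold only the streams resolved so far.
bool buildRequest(const std::vector<int32_t>& streamIds,
                  std::map<int32_t, OutputStream>& knownStreams,
                  CaptureRequest* out) {
    out->outputStreams.clear();
    for (int32_t id : streamIds) {
        auto it = knownStreams.find(id);
        if (it == knownStreams.end()) {
            return false;
        }
        out->outputStreams.push_back(&it->second);
    }
    return true;
}
```

The point of the sketch is only that the request stores references to existing streams, so the later buffer operations can go through those stream objects.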

2. The Camera3Device::configureStreamsLocked function

In configureStreamsLocked, the main point of interest is how Camera3Device configures all of its current mInputStreams and mOutputStreams, which involves two phases: startConfiguration and finishConfiguration.

2.1 mOutputStreams.editValueAt(i)->startConfiguration()

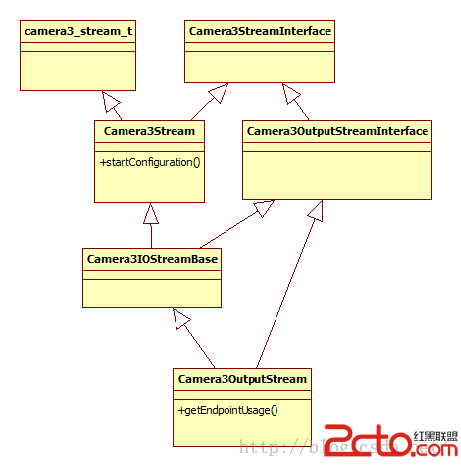

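Iterating over all output streams here ends up in Camera3Stream::startConfiguration(). It helps to look at the overall structure of Camera3OutputStream first: it involves Camera3Stream and Camera3IOStreamBase, which are shared by input and output streams. The former mostly provides buffer configuration and get/return buffer operations, while the latter tracks the number of buffers the current stream owns. The other branch, camera3_stream_t, is the entry point for exchanging stream information with the underlying Camera HAL3.

startConfiguration first checks the state of the current stream and does nothing for a stream that is already configured. The main configuration step is getEndpointUsage: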

status_t Camera3OutputStream::getEndpointUsage(uint32_t *usage) {

status_t res;

int32_t u = 0;

res = mConsumer->query(mConsumer.get(),

NATIVE_WINDOW_CONSUMER_USAGE_BITS, &u);

*usage = u;

return res;

}

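Here mConsumer is in fact the Surface created earlier. When each stream is set up through createStream, an ANativeWindow-like consumer is passed in and bound to that stream. This call retrieves the USAGE value of the buffers managed by that window; see the definitions in gralloc.h. The Gralloc module uses it to determine whether buffer operations are performed by hardware or software and for which use case, and buffers requested by different modules are allocated, defined, and handled differently inside Gralloc: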

/* buffer will be used as an OpenGL ES texture */

GRALLOC_USAGE_HW_TEXTURE = 0x00000100,

/* buffer will be used as an OpenGL ES render target */

GRALLOC_USAGE_HW_RENDER = 0x00000200,

/* buffer will be used by the 2D hardware blitter */

GRALLOC_USAGE_HW_2D = 0x00000400,

/* buffer will be used by the HWComposer HAL module */

GRALLOC_USAGE_HW_COMPOSER = 0x00000800,

/* buffer will be used with the framebuffer device */

GRALLOC_USAGE_HW_FB = 0x00001000,

/* buffer will be used with the HW video encoder */

GRALLOC_USAGE_HW_VIDEO_ENCODER = 0x00010000,

/* buffer will be written by the HW camera pipeline */

GRALLOC_USAGE_HW_CAMERA_WRITE = 0x00020000,

/* buffer will be read by the HW camera pipeline */

GRALLOC_USAGE_HW_CAMERA_READ = 0x00040000,

/* buffer will be used as part of zero-shutter-lag queue */

GRALLOC_USAGE_HW_CAMERA_ZSL = 0x00060000,

/* mask for the camera access values */

GRALLOC_USAGE_HW_CAMERA_MASK = 0x00060000,

/* mask for the software usage bit-mask */

GRALLOC_USAGE_HW_MASK = 0x00071F00,
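Because the usage value is a bit mask, individual purposes are combined with OR and tested with AND. The sketch below copies a few constants from the excerpt above under hypothetical `k...` names; the helper functions are illustrative and are not part of gralloc.h.

```cpp
#include <cassert>
#include <cstdint>

// Values copied from the gralloc.h excerpt above; the k-prefixed names are
// made up for this sketch.
constexpr uint32_t kUsageHwTexture     = 0x00000100;
constexpr uint32_t kUsageHwCameraWrite = 0x00020000;
constexpr uint32_t kUsageHwCameraRead  = 0x00040000;
constexpr uint32_t kUsageHwCameraZsl   = 0x00060000;
constexpr uint32_t kUsageHwCameraMask  = 0x00060000;

// True if the camera pipeline writes into buffers with this usage.
constexpr bool cameraWrites(uint32_t usage) {
    return (usage & kUsageHwCameraWrite) != 0;
}

// Keep only the camera-related bits of a usage value.
constexpr uint32_t cameraBits(uint32_t usage) {
    return usage & kUsageHwCameraMask;
}
```

Note that the ZSL value is simply the camera write and camera read bits set together, which is why it equals the camera mask.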

2.2 mHal3Device->ops->configure_streams(mHal3Device, &config);

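config is a camera3_stream_configuration structure. It records the number of streams in one exchange with HAL3, together with the per-stream configuration in camera3_stream_t, including the attributes of the buffers in each stream, the stream type, and so on. This information is handed to HAL3 for analysis and processing. On Qualcomm platforms, each stream exists as a Channel in the HAL3 layer.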

typedef struct camera3_stream_configuration {

uint32_t num_streams;

camera3_stream_t **streams;

} camera3_stream_configuration_t;

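stream_type is one of CAMERA3_STREAM_OUTPUT, CAMERA3_STREAM_INPUT, or CAMERA3_STREAM_BIDIRECTIONAL.

format is the pixel storage format of the buffers. HAL_PIXEL_FORMAT_IMPLEMENTATION_DEFINED is the most common value and means that the actual data format is decided by the Gralloc module.

The HAL3 implementation of the configureStreams interface will be analyzed later, when the Qualcomm implementation is covered.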
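To make the shape of this exchange concrete, here is a minimal sketch with reduced stand-in structs. `stream_t`, `stream_configuration_t`, `makeConfig`, and `fakeConfigureStreams` are assumptions made for illustration; the real structures carry more fields, and the value 7 is only the Qualcomm figure quoted later in this article.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Reduced stand-ins for the HAL structures; only a few fields are kept.
struct stream_t {
    int      stream_type;
    uint32_t width;
    uint32_t height;
    int      format;       // placeholder value, not a real pixel format
    uint32_t max_buffers;  // filled in by the HAL during configuration
};

struct stream_configuration_t {
    uint32_t   num_streams;
    stream_t** streams;
};

// Pack pointers to every stream into one configuration. The vector must
// outlive the returned struct, which only borrows the vector's storage.
stream_configuration_t makeConfig(std::vector<stream_t*>& streams) {
    stream_configuration_t config;
    config.num_streams = static_cast<uint32_t>(streams.size());
    config.streams = streams.data();
    return config;
}

// Hypothetical HAL side: pretend the HAL wants 7 buffers for each stream.
int fakeConfigureStreams(stream_configuration_t* config) {
    for (uint32_t i = 0; i < config->num_streams; i++) {
        config->streams[i]->max_buffers = 7;
    }
    return 0;
}
```

The design point worth noticing is that the configuration holds pointers, so whatever the HAL writes into max_buffers is visible on the framework's own stream objects afterwards.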

2.3 Camera3Stream::finishConfiguration

This function mainly runs two functions, configureQueueLocked and registerBuffersLocked:

status_t Camera3OutputStream::configureQueueLocked() {

status_t res;

mTraceFirstBuffer = true;

if ((res = Camera3IOStreamBase::configureQueueLocked()) != OK) {

return res;

}

ALOG_ASSERT(mConsumer != 0, "mConsumer should never be NULL");

// Configure consumer-side ANativeWindow interface

res = native_window_api_connect(mConsumer.get(),

NATIVE_WINDOW_API_CAMERA);

if (res != OK) {

ALOGE("%s: Unable to connect to native window for stream %d",

__FUNCTION__, mId);

return res;

}

res = native_window_set_usage(mConsumer.get(), camera3_stream::usage);

if (res != OK) {

ALOGE("%s: Unable to configure usage %08x for stream %d",

__FUNCTION__, camera3_stream::usage, mId);

return res;

}

res = native_window_set_scaling_mode(mConsumer.get(),

NATIVE_WINDOW_SCALING_MODE_SCALE_TO_WINDOW);

if (res != OK) {

ALOGE("%s: Unable to configure stream scaling: %s (%d)",

__FUNCTION__, strerror(-res), res);

return res;

}

if (mMaxSize == 0) {

// For buffers of known size

res = native_window_set_buffers_dimensions(mConsumer.get(),

camera3_stream::width, camera3_stream::height);

} else {

// For buffers with bounded size

res = native_window_set_buffers_dimensions(mConsumer.get(),

mMaxSize, 1);

}

if (res != OK) {

ALOGE("%s: Unable to configure stream buffer dimensions"

" %d x %d (maxSize %zu) for stream %d",

__FUNCTION__, camera3_stream::width, camera3_stream::height,

mMaxSize, mId);

return res;

}

res = native_window_set_buffers_format(mConsumer.get(),

camera3_stream::format);

if (res != OK) {

ALOGE("%s: Unable to configure stream buffer format %#x for stream %d",

__FUNCTION__, camera3_stream::format, mId);

return res;

}

int maxConsumerBuffers;

res = mConsumer->query(mConsumer.get(),

NATIVE_WINDOW_MIN_UNDEQUEUED_BUFFERS, &maxConsumerBuffers); // minimum number of buffers that must remain undequeued on the consumer side

if (res != OK) {

ALOGE("%s: Unable to query consumer undequeued"

" buffer count for stream %d", __FUNCTION__, mId);

return res;

}

ALOGV("%s: Consumer wants %d buffers, HAL wants %d", __FUNCTION__,

maxConsumerBuffers, camera3_stream::max_buffers);

if (camera3_stream::max_buffers == 0) {

ALOGE("%s: Camera HAL requested max_buffer count: %d, requires at least 1",

__FUNCTION__, camera3_stream::max_buffers);

return INVALID_OPERATION;

}

mTotalBufferCount = maxConsumerBuffers + camera3_stream::max_buffers; // at least 2 buffers

mHandoutTotalBufferCount = 0;

mFrameCount = 0;

mLastTimestamp = 0;

res = native_window_set_buffer_count(mConsumer.get(),

mTotalBufferCount);

if (res != OK) {

ALOGE("%s: Unable to set buffer count for stream %d",

__FUNCTION__, mId);

return res;

}

res = native_window_set_buffers_transform(mConsumer.get(),

mTransform);

if (res != OK) {

ALOGE("%s: Unable to configure stream transform to %x: %s (%d)",

__FUNCTION__, mTransform, strerror(-res), res);

}

return OK;

}

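If you are familiar with the SurfaceFlinger architecture, this code is fairly easy to follow. In essence, it takes the buffer attributes configured for the current stream and passes them through the ANativeWindow interface to the consumer side to maintain.

The following buffer attributes deserve attention:

For example, native_window_set_buffer_count sets the number of buffers the current window needs:

The total number of buffers for the stream is mTotalBufferCount = maxConsumerBuffers + camera3_stream::max_buffers. camera3_stream::max_buffers is decided by the underlying HAL3 device during configureStreams; on Qualcomm platforms it is defined as 7. maxConsumerBuffers is the number of buffers that must stay on the consumer side even when the producer has dequeued as many as it can, i.e. at least maxConsumerBuffers buffers remain queued and in use by the consumer. It is obtained by querying NATIVE_WINDOW_MIN_UNDEQUEUED_BUFFERS and usually defaults to 1. Each stream can therefore be thought of as backed by 8 buffers, of which at most 7 can be dequeued at the same time.

native_window_set_buffers_transform, for example, tells the consumer which transform to apply, i.e. whether the buffer is displayed rotated by 90/180/270°.

In essence, this process takes the buffer configuration requirements of the underlying HAL3 and, in turn, asks the consumer end of the buffers, BufferQueueConsumer, to set the corresponding buffer attributes.

registerBuffersLocked is a fairly important step: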
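The buffer count arithmetic can be checked with a few lines. This is a sketch, not AOSP code: `BufferBudget` and `computeBudget` are hypothetical names, and the inputs 1 and 7 in the usage note are simply the default and Qualcomm values mentioned above.

```cpp
#include <cassert>

// Hypothetical result type for this sketch.
struct BufferBudget {
    int total;        // value that would go to native_window_set_buffer_count
    int maxDequeued;  // buffers the producer side can hold at the same time
};

// Mirrors mTotalBufferCount = maxConsumerBuffers + max_buffers.
// Returns {0, 0} when the HAL reports max_buffers == 0, the case the
// framework code rejects with INVALID_OPERATION.
BufferBudget computeBudget(int minUndequeued, int halMaxBuffers) {
    if (halMaxBuffers == 0) {
        return {0, 0};
    }
    BufferBudget budget;
    budget.total = minUndequeued + halMaxBuffers;
    budget.maxDequeued = budget.total - minUndequeued;
    return budget;
}
```

With `computeBudget(1, 7)` the total is 8 and the producer can hold 7 buffers at once, which matches the limit that getBuffer enforces through max_buffers.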

status_t Camera3Stream::registerBuffersLocked(camera3_device *hal3Device) {

ATRACE_CALL();

/**

* >= CAMERA_DEVICE_API_VERSION_3_2:

*

* camera3_device_t->ops->register_stream_buffers() is not called and must

* be NULL.

*/

if (hal3Device->common.version >= CAMERA_DEVICE_API_VERSION_3_2) {

ALOGV("%s: register_stream_buffers unused as of HAL3.2", __FUNCTION__);

if (hal3Device->ops->register_stream_buffers != NULL) {

ALOGE("%s: register_stream_buffers is deprecated in HAL3.2;"

" must be set to NULL in camera3_device::ops", __FUNCTION__);

return INVALID_OPERATION;

} else {

ALOGD("%s: Skipping NULL check for deprecated register_stream_buffers", __FUNCTION__);

}

return OK;

} else {

ALOGV("%s: register_stream_buffers using deprecated code path", __FUNCTION__);

}

status_t res;

size_t bufferCount = getBufferCountLocked(); // number of buffers, i.e. mTotalBufferCount; at least 2

Vector<buffer_handle_t*> buffers;

buffers.insertAt(/*prototype_item*/NULL, /*index*/0, bufferCount);

camera3_stream_buffer_set bufferSet = camera3_stream_buffer_set();

bufferSet.stream = this; // the new buffer set points at this camera3_stream_t

bufferSet.num_buffers = bufferCount; // number of buffers in the current stream

bufferSet.buffers = buffers.editArray();

Vector<camera3_stream_buffer_t> streamBuffers;

streamBuffers.insertAt(camera3_stream_buffer_t(), /*index*/0, bufferCount);

// Register all buffers with the HAL. This means getting all the buffers

// from the stream, providing them to the HAL with the

// register_stream_buffers() method, and then returning them back to the

// stream in the error state, since they won't have valid data.

//

// Only registered buffers can be sent to the HAL.

uint32_t bufferIdx = 0;

for (; bufferIdx < bufferCount; bufferIdx++) {

res = getBufferLocked( &streamBuffers.editItemAt(bufferIdx) ); // dequeue one buffer per iteration until every buffer is out

if (res != OK) {

ALOGE("%s: Unable to get buffer %d for registration with HAL",

__FUNCTION__, bufferIdx);

// Skip registering, go straight to cleanup

break;

}

sp<Fence> fence = new Fence(streamBuffers[bufferIdx].acquire_fence);

fence->waitForever("Camera3Stream::registerBuffers"); // wait until the buffer is writable

buffers.editItemAt(bufferIdx) = streamBuffers[bufferIdx].buffer; // handle of the dequeued buffer

}

if (bufferIdx == bufferCount) {

// Got all buffers, register with HAL

ALOGV("%s: Registering %zu buffers with camera HAL",

__FUNCTION__, bufferCount);

ATRACE_BEGIN("camera3->register_stream_buffers");

res = hal3Device->ops->register_stream_buffers(hal3Device,

&bufferSet); // bind the buffers and register them with the HAL

ATRACE_END();

}

// Return all valid buffers to stream, in ERROR state to indicate

// they weren't filled.

for (size_t i = 0; i < bufferIdx; i++) {

streamBuffers.editItemAt(i).release_fence = -1;

streamBuffers.editItemAt(i).status = CAMERA3_BUFFER_STATUS_ERROR;

returnBufferLocked(streamBuffers[i], 0); // after registration, hand the buffer back; with status ERROR this ends in cancelBuffer

}

return res;

}

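a. The code makes clear that this device ops interface is only used by HAL versions earlier than CAMERA_DEVICE_API_VERSION_3_2. From 3.2 onward register_stream_buffers must be NULL and the function returns right away.

b. getBufferCountLocked returns the total number of buffers allowed for the current stream.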

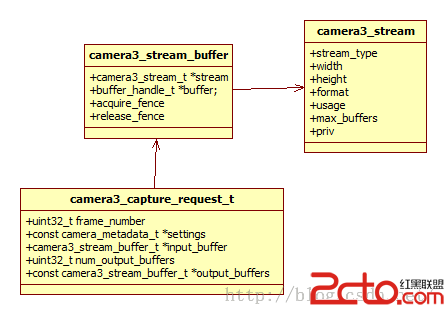

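c. camera3_stream_buffer_t, camera3_stream_buffer_set, and buffer_handle_t

The first structure to look at is camera3_stream_buffer_t, which describes the properties of each buffer in a stream. Its key member is the buffer_handle_t, the handle through which a buffer is shared between processes. In addition, acquire_fence and release_fence synchronize reads and writes of the buffer across different hardware blocks.

camera3_stream_buffer_set wraps the information about all buffers in the current stream: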

typedef struct camera3_stream_buffer_set {

/**

* The stream handle for the stream these buffers belong to

*/

camera3_stream_t *stream;

/**

* The number of buffers in this stream. It is guaranteed to be at least

* stream->max_buffers.

*/

uint32_t num_buffers;

/**

* The array of gralloc buffer handles for this stream. If the stream format

* is set to HAL_PIXEL_FORMAT_IMPLEMENTATION_DEFINED, the camera HAL device

* should inspect the passed-in buffers to determine any platform-private

* pixel format information.

*/

buffer_handle_t **buffers;

} camera3_stream_buffer_set_t;

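The three members hold, respectively, the stream this set belongs to, the number of buffers in that stream, and the list of handles of all the buffers it contains.

d. getBufferLocked obtains a buffer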

status_t Camera3OutputStream::getBufferLocked(camera3_stream_buffer *buffer) {

ATRACE_CALL();

status_t res;

if ((res = getBufferPreconditionCheckLocked()) != OK) {

return res;

}

ANativeWindowBuffer* anb;

int fenceFd;

/**

* Release the lock briefly to avoid deadlock for below scenario:

* Thread 1: StreamingProcessor::startStream -> Camera3Stream::isConfiguring().

* This thread acquired StreamingProcessor lock and try to lock Camera3Stream lock.

* Thread 2: Camera3Stream::returnBuffer->StreamingProcessor::onFrameAvailable().

* This thread acquired Camera3Stream lock and bufferQueue lock, and try to lock

* StreamingProcessor lock.

* Thread 3: Camera3Stream::getBuffer(). This thread acquired Camera3Stream lock

* and try to lock bufferQueue lock.

* Then there is circular locking dependency.

*/

sp<ANativeWindow> currentConsumer = mConsumer;

mLock.unlock();

res = currentConsumer->dequeueBuffer(currentConsumer.get(), &anb, &fenceFd);

mLock.lock();

if (res != OK) {

ALOGE("%s: Stream %d: Can't dequeue next output buffer: %s (%d)",

__FUNCTION__, mId, strerror(-res), res);

return res;

}

/**

* FenceFD now owned by HAL except in case of error,

* in which case we reassign it to acquire_fence

*/

handoutBufferLocked(*buffer, &(anb->handle), /*acquireFence*/fenceFd,

/*releaseFence*/-1, CAMERA3_BUFFER_STATUS_OK, /*output*/true);

return OK;

}

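This function mainly dequeues one buffer from the consumer side through ANativeWindow. The first time this happens for a given buffer, the consumer side actually allocates real physical memory for it.

Next, handoutBufferLocked fills in the camera3_stream_buffer structure. release_fence is set to -1, meaning there is no release fence yet. acquire_fence is the fence fd returned by dequeueBuffer, and HAL3 has to wait for it to signal before using the buffer, because returning the handle and the buffer actually becoming available are not necessarily synchronous. The buffer status is also set to CAMERA3_BUFFER_STATUS_OK.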

void Camera3IOStreamBase::handoutBufferLocked(camera3_stream_buffer &buffer,

buffer_handle_t *handle,

int acquireFence,

int releaseFence,

camera3_buffer_status_t status,

bool output) {

/**

* Note that all fences are now owned by HAL.

*/

// Handing out a raw pointer to this object. Increment internal refcount.

incStrong(this);

buffer.stream = this;

buffer.buffer = handle;

buffer.acquire_fence = acquireFence;

buffer.release_fence = releaseFence;

buffer.status = status;

// Inform tracker about becoming busy

if (mHandoutTotalBufferCount == 0 && mState != STATE_IN_CONFIG &&

mState != STATE_IN_RECONFIG) {

/**

* Avoid a spurious IDLE->ACTIVE->IDLE transition when using buffers

* before/after register_stream_buffers during initial configuration

* or re-configuration.

*

* TODO: IN_CONFIG and IN_RECONFIG checks only make sense for <HAL3.2

*/

sp<StatusTracker> statusTracker = mStatusTracker.promote();

if (statusTracker != 0) {

statusTracker->markComponentActive(mStatusId);

}

}

mHandoutTotalBufferCount++; // number of buffers currently dequeued and handed out

if (output) {

mHandoutOutputBufferCount++;

}

}

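e. hal3Device->ops->register_stream_buffers(hal3Device, &bufferSet); // bind the buffers and register them with the HAL

All buffer-related information for the stream, mainly each buffer's buffer_handle_t, is handed to the HAL3 layer to deal with. In Qualcomm's HAL3, for example, each Channel corresponds to one stream on the Camera3Device side, while the buffers of that stream are in turn represented as individual streams inside the Channel. That is Qualcomm's implementation choice.

f. After all buffers have been registered, the status of each buffer is switched from CAMERA3_BUFFER_STATUS_OK to CAMERA3_BUFFER_STATUS_ERROR. This does not mean the buffers are unusable; it marks them as carrying no valid data, so that returnBufferLocked issues a cancelBuffer for every buffer that was dequeued only for registration.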

Camera3OutputStream::returnBufferLocked->Camera3IOStreamBase::returnAnyBufferLocked->Camera3OutputStream::returnBufferCheckedLocked

status_t Camera3OutputStream::returnBufferCheckedLocked( // called when a result comes back

const camera3_stream_buffer &buffer,

nsecs_t timestamp,

bool output,

/*out*/

sp<Fence> *releaseFenceOut) {

(void)output;

ALOG_ASSERT(output, "Expected output to be true");

status_t res;

sp<Fence> releaseFence;

/**

* Fence management - calculate Release Fence

*/

if (buffer.status == CAMERA3_BUFFER_STATUS_ERROR) {

if (buffer.release_fence != -1) {

ALOGE("%s: Stream %d: HAL should not set release_fence(%d) when"

" there is an error", __FUNCTION__, mId, buffer.release_fence);

close(buffer.release_fence);

}

/**

* Reassign release fence as the acquire fence in case of error

*/

releaseFence = new Fence(buffer.acquire_fence);

} else {

res = native_window_set_buffers_timestamp(mConsumer.get(), timestamp);

if (res != OK) {

ALOGE("%s: Stream %d: Error setting timestamp: %s (%d)",

__FUNCTION__, mId, strerror(-res), res);

return res;

}

releaseFence = new Fence(buffer.release_fence);

}

int anwReleaseFence = releaseFence->dup();

/**

* Release the lock briefly to avoid deadlock with

* StreamingProcessor::startStream -> Camera3Stream::isConfiguring (this

* thread will go into StreamingProcessor::onFrameAvailable) during

* queueBuffer

*/

sp<ANativeWindow> currentConsumer = mConsumer;

mLock.unlock();

/**

* Return buffer back to ANativeWindow

*/

if (buffer.status == CAMERA3_BUFFER_STATUS_ERROR) {

// Cancel buffer

res = currentConsumer->cancelBuffer(currentConsumer.get(),

container_of(buffer.buffer, ANativeWindowBuffer, handle),

anwReleaseFence); // the path taken by registerBuffersLocked: cancel the dequeued buffer

if (res != OK) {

ALOGE("%s: Stream %d: Error cancelling buffer to native window:"

" %s (%d)", __FUNCTION__, mId, strerror(-res), res);

}

} else {

if (mTraceFirstBuffer && (stream_type == CAMERA3_STREAM_OUTPUT)) {

{

char traceLog[48];

snprintf(traceLog, sizeof(traceLog), "Stream %d: first full buffer\n", mId);

ATRACE_NAME(traceLog);

}

mTraceFirstBuffer = false;

}

res = currentConsumer->queueBuffer(currentConsumer.get(),

container_of(buffer.buffer, ANativeWindowBuffer, handle),

anwReleaseFence); // queueBuffer: send the ANativeWindowBuffer on for display

if (res != OK) {

ALOGE("%s: Stream %d: Error queueing buffer to native window:"

" %s (%d)", __FUNCTION__, mId, strerror(-res), res);

}

}

mLock.lock();

if (res != OK) {

close(anwReleaseFence);

}

*releaseFenceOut = releaseFence;

return res;

}

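For the initial registration, every buffer this function handles is in CAMERA3_BUFFER_STATUS_ERROR, so cancelBuffer is called to put all buffers back into the free state. This shows that all buffers are currently available and that nothing so far has touched the actual data flow of the buffers.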
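The effect of the status check can be modelled with a toy queue. The sketch below is an assumption-laden simplification: `ToyQueue`, `SlotState`, and `BufferStatus` are invented names, there are no fences, and the real BufferQueue has more slot states than the three shown here.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

enum class SlotState { Free, Dequeued, Queued };
enum class BufferStatus { Ok, Error };

// Toy model of a buffer queue as seen from the producer side.
class ToyQueue {
public:
    explicit ToyQueue(int count) : mSlots(count, SlotState::Free) {}

    // Take the first free slot; returns -1 if no slot is free.
    int dequeue() {
        for (std::size_t i = 0; i < mSlots.size(); i++) {
            if (mSlots[i] == SlotState::Free) {
                mSlots[i] = SlotState::Dequeued;
                return static_cast<int>(i);
            }
        }
        return -1;
    }

    // Mirrors the status check: ERROR cancels the buffer (back to Free),
    // OK queues it for the consumer.
    void returnBuffer(int slot, BufferStatus status) {
        mSlots[slot] = (status == BufferStatus::Error) ? SlotState::Free
                                                       : SlotState::Queued;
    }

    SlotState state(int slot) const { return mSlots[slot]; }

private:
    std::vector<SlotState> mSlots;
};
```

In this model the registration pass corresponds to dequeuing every slot and returning each one with `BufferStatus::Error`, after which all slots are free again, while a filled frame coming back from the HAL takes the `BufferStatus::Ok` branch.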

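3 The dequeue operation in the buffer data flow

Step 2 above mainly registers all buffer information of every stream with the underlying HAL3, laying the groundwork for the later reads and writes of buffer data.

So how do we obtain a complete frame of the video stream in preview mode?

The trigger is the Request in preview mode. As mentioned earlier, an mPreviewRequest contains at least two streams, one from the StreamingProcessor and one from the CallbackProcessor, and each stream owns a different number of buffers. To get one frame of image data from HAL3, the simplest idea is to send an available buffer address down through the output stream of the StreamingProcessor, let HAL3 fill in the data, and the Framework then has a frame.

Following this idea, recall from the previous post that Request packets are sent down to HAL3 continuously; this is where the buffer address shows up.

Part of the code in Camera3Device::RequestThread::threadLoop():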

outputBuffers.insertAt(camera3_stream_buffer_t(), 0,

nextRequest->mOutputStreams.size()); // StreamingProcessor, CallbackProcessor

request.output_buffers = outputBuffers.array();//camera3_stream_buffer_t

for (size_t i = 0; i < nextRequest->mOutputStreams.size(); i++) {

res = nextRequest->mOutputStreams.editItemAt(i)->

getBuffer(&outputBuffers.editItemAt(i)); // wait for a buffer; internally dequeues one buffer and fills in the camera3_stream_buffer_t

if (res != OK) {

// Can't get output buffer from gralloc queue - this could be due to

// abandoned queue or other consumer misbehavior, so not a fatal

// error

ALOGE("RequestThread: Can't get output buffer, skipping request:"

" %s (%d)", strerror(-res), res);

Mutex::Autolock l(mRequestLock);

if (mListener != NULL) {

mListener->notifyError(

ICameraDeviceCallbacks::ERROR_CAMERA_REQUEST,

nextRequest->mResultExtras);

}

cleanUpFailedRequest(request, nextRequest, outputBuffers);

return true;

}

request.num_output_buffers++; // normally one buffer per output stream, so this ends up as the total number of output buffers

}

In the camera3_capture_request sent down to HAL3 you can see const camera3_stream_buffer_t *output_buffers. The code below shows that the output_buffers of this Request has one entry for each stream in the request's mOutputStreams:

outputBuffers.insertAt(camera3_stream_buffer_t(), 0,

nextRequest->mOutputStreams.size()); // StreamingProcessor, CallbackProcessor

Each OutputStream is given one buffer handle. Note the following code:

nextRequest->mOutputStreams.editItemAt(i)->

getBuffer(&outputBuffers.editItemAt(i))

nextRequest->mOutputStreams.editItemAt(i) returns a Camera3OutputStream object, and getBuffer is passed the slot in outputBuffers that belongs to that Camera3OutputStream for this request. The camera3_stream_buffer itself has to be obtained from the Camera3OutputStream object.

status_t Camera3Stream::getBuffer(camera3_stream_buffer *buffer) {

ATRACE_CALL();

Mutex::Autolock l(mLock);

status_t res = OK;

// This function should be only called when the stream is configured already.

if (mState != STATE_CONFIGURED) {

ALOGE("%s: Stream %d: Can't get buffers if stream is not in CONFIGURED state %d",

__FUNCTION__, mId, mState);

return INVALID_OPERATION;

}

// Wait for new buffer returned back if we are running into the limit.

if (getHandoutOutputBufferCountLocked() == camera3_stream::max_buffers) { // too many buffers dequeued: wait for one to be returned

ALOGV("%s: Already dequeued max output buffers (%d), wait for next returned one.",

__FUNCTION__, camera3_stream::max_buffers);

res = mOutputBufferReturnedSignal.waitRelative(mLock, kWaitForBufferDuration);

if (res != OK) {

if (res == TIMED_OUT) {

ALOGE("%s: wait for output buffer return timed out after %lldms", __FUNCTION__,

kWaitForBufferDuration / 1000000LL);

}

return res;

}

}

res = getBufferLocked(buffer);

if (res == OK) {

fireBufferListenersLocked(*buffer, /*acquired*/true, /*output*/true);

}

return res;

}

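The code above first checks whether the number of dequeued buffers has reached the maximum the HAL declared for this stream (max_buffers). If so, it has to wait until a buffer has been returned and queued back; only then may another buffer be dequeued from the buffer queue.

It then calls getBufferLocked which, as described in section 2.3 (d), takes an available buffer from the buffer queue and fills in the camera3_stream_buffer.

The result is that the Request sent down contains the output streams of all modules, and each stream comes with one camera3_stream_buffer for the underlying HAL3.0 to process. Under Camera3Device, such a buffer may carry frame image data, parameter control commands, or other 3A information. These different kinds of information are usually managed by different modules, that is, handled by different streams.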
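The hand-out limit in getBuffer can be sketched with standard C++ primitives. `HandoutLimiter` is a hypothetical helper, not an AOSP class: it uses std::condition_variable instead of the Android Condition type, and it waits in a predicate loop, whereas the excerpt above waits once and then proceeds.

```cpp
#include <cassert>
#include <chrono>
#include <condition_variable>
#include <mutex>

// Hypothetical helper mirroring the hand-out limit of getBuffer and the
// signal sent from returnBuffer.
class HandoutLimiter {
public:
    explicit HandoutLimiter(int maxBuffers) : mMax(maxBuffers) {}

    // Blocks for up to `timeout` while the limit is reached. Returns false
    // on timeout, true once one more buffer is counted as handed out.
    bool acquire(std::chrono::milliseconds timeout) {
        std::unique_lock<std::mutex> lock(mMutex);
        if (!mReturned.wait_for(lock, timeout,
                                [this] { return mHandedOut < mMax; })) {
            return false;
        }
        mHandedOut++;
        return true;
    }

    // Called when a buffer comes back; wakes one waiting caller.
    void release() {
        {
            std::lock_guard<std::mutex> lock(mMutex);
            mHandedOut--;
        }
        mReturned.notify_one();
    }

    int handedOut() {
        std::lock_guard<std::mutex> lock(mMutex);
        return mHandedOut;
    }

private:
    std::mutex mMutex;
    std::condition_variable mReturned;
    const int mMax;
    int mHandedOut = 0;
};
```

In this sketch `acquire` plays the role of getBuffer and `release` the role of returnBuffer signalling mOutputBufferReturnedSignal; a request thread that outruns the HAL simply blocks until a result hands a buffer back or the timeout expires.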

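4 The queue operation in the buffer data flow

The dequeued buffer information has gone down to the HAL3 layer with the Request. In the Camera3Device architecture, a callback interface carries data from HAL3 back to the Framework layer where Camera lives. Camera3Device privately inherits the callback interface structure camera3_callback_ops, which provides notify and process_capture_result. The former reports events such as shutter and error messages; the latter mainly carries the data flow. The callback interface is registered through device->initialize(camera3_device, this).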

void Camera3Device::sProcessCaptureResult(const camera3_callback_ops *cb,

const camera3_capture_result *result) {

Camera3Device *d =

const_cast<Camera3Device*>(static_cast<const Camera3Device*>(cb));

d->processCaptureResult(result);

}
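The static function above is a trampoline from a C callback table back into a C++ object. The following sketch reproduces the pattern with invented names (`callback_ops`, `Device`, `sProcessResult`); the real camera3_callback_ops has different members and signatures.

```cpp
#include <cassert>

// Hypothetical C-style callback table, loosely modelled on the idea of
// camera3_callback_ops.
struct callback_ops {
    void (*process_result)(const callback_ops* self, int frameNumber);
};

class Device : private callback_ops {
public:
    Device() { process_result = &Device::sProcessResult; }

    // The pointer that would be handed to the HAL at initialize() time.
    const callback_ops* asCallbackOps() const { return this; }

    int lastFrame() const { return mLastFrame; }

private:
    // Static trampoline: recover the Device object from the ops pointer.
    // The downcast is valid only because the pointer really came from a
    // Device, which is the contract of asCallbackOps().
    static void sProcessResult(const callback_ops* cb, int frameNumber) {
        Device* d = const_cast<Device*>(static_cast<const Device*>(cb));
        d->mLastFrame = frameNumber;
    }

    int mLastFrame = -1;
};
```

Private inheritance keeps the function pointers out of the public interface while still letting the class hand out a pointer to its own callback table.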

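All information about the returned buffers is contained in camera3_capture_result. The processing in this function is fairly complex; if you only want to locate the entry point for queueing buffers, go straight to returnOutputBuffers: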

void Camera3Device::returnOutputBuffers(

const camera3_stream_buffer_t *outputBuffers, size_t numBuffers,

nsecs_t timestamp) {

for (size_t i = 0; i < numBuffers; i++) // look at the stream each buffer belongs to

{

Camera3Stream *stream = Camera3Stream::cast(outputBuffers[i].stream); // the camera3_stream this buffer belongs to

status_t res = stream->returnBuffer(outputBuffers[i], timestamp); // Camera3OutputStream: each stream handles its own return

// Note: stream may be deallocated at this point, if this buffer was

// the last reference to it.

if (res != OK) {

ALOGE("Can't return buffer to its stream: %s (%d)",

strerror(-res), res);

}

}

}

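Because every buffer sent down with a Request carries the information about the stream it belongs to, when the buffer data comes back to the Framework layer we can again route it to Camera3OutputStream to handle the returned buffer.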

status_t Camera3Stream::returnBuffer(const camera3_stream_buffer &buffer,

nsecs_t timestamp) {

ATRACE_CALL();

Mutex::Autolock l(mLock);

/**

* TODO: Check that the state is valid first.

*

* <HAL3.2 IN_CONFIG and IN_RECONFIG in addition to CONFIGURED.

* >= HAL3.2 CONFIGURED only

*

* Do this for getBuffer as well.

*/

status_t res = returnBufferLocked(buffer, timestamp); // mostly ends in queueBuffer

if (res == OK) {

fireBufferListenersLocked(buffer, /*acquired*/false, /*output*/true);

mOutputBufferReturnedSignal.signal();

}

return res;

}

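Look at returnBufferLocked here. As described earlier, this function was also called once all buffers had been registered. This time, however, the buffers it handles are no longer in CAMERA3_BUFFER_STATUS_ERROR but in CAMERA3_BUFFER_STATUS_OK, so it performs a queueBuffer.

5 The buffer data is actually processed by the consumer

During queueBuffer, as explained in the previous post, "A brief look at the producer/consumer framework between Surface and CpuConsumer in Android 5.1", the real consumer now has to start working. In preview mode that is of course handled by the SurfaceFlinger machinery. In Camera2Client and Camera3Device you can also find CpuConsumer at work, for example: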

void CallbackProcessor::onFrameAvailable(const BufferItem& /*item*/) {

Mutex::Autolock l(mInputMutex);

if (!mCallbackAvailable) {

mCallbackAvailable = true;

mCallbackAvailableSignal.signal(); // wake the data callback thread

}

}

Here you can process the buffer data that is in the queued state; in this case the callback uploads the frame back to the app.

bool CallbackProcessor::threadLoop() {

status_t res;

{

Mutex::Autolock l(mInputMutex);

while (!mCallbackAvailable) {

res = mCallbackAvailableSignal.waitRelative(mInputMutex,

kWaitDuration);

if (res == TIMED_OUT) return true;

}

mCallbackAvailable = false;

}

do {

sp<Camera2Client> client = mClient.promote();

if (client == 0) {

res = discardNewCallback();

} else {

res = processNewCallback(client); // callback: process the new frame

}

} while (res == OK);

return true;

}
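The pairing of onFrameAvailable and threadLoop is a flag guarded by a mutex plus a condition signal. A minimal sketch with standard C++ types follows; `FrameSignal` is a made-up class, and it leaves out the actual frame processing.

```cpp
#include <cassert>
#include <chrono>
#include <condition_variable>
#include <mutex>

// Hypothetical reduction of the mCallbackAvailable flag and its signal.
class FrameSignal {
public:
    // Producer side: mark a frame as available and wake the worker.
    // Repeated calls before the worker runs collapse into one wake-up.
    void onFrameAvailable() {
        std::lock_guard<std::mutex> lock(mMutex);
        if (!mAvailable) {
            mAvailable = true;
            mCond.notify_one();
        }
    }

    // Worker side: wait for up to `timeout`. Clears the flag and returns
    // true if a frame was announced, returns false on timeout.
    bool waitForFrame(std::chrono::milliseconds timeout) {
        std::unique_lock<std::mutex> lock(mMutex);
        if (!mCond.wait_for(lock, timeout, [this] { return mAvailable; })) {
            return false;
        }
        mAvailable = false;
        return true;
    }

private:
    std::mutex mMutex;
    std::condition_variable mCond;
    bool mAvailable = false;
};
```

Because several announcements collapse into a single flag, the worker cannot assume one wake-up means one frame. That is presumably why threadLoop above keeps calling processNewCallback in a do/while loop until it stops returning OK.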

l.mRemoteCallback->dataCallback(CAMERA_MSG_PREVIEW_FRAME,

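callbackHeap->mBuffers[heapIdx], NULL); // after converting the data to what the API requires, call back with the preview frame

6 Summary

That basically completes the walkthrough of the video stream for preview. The framework is complex, but on closer inspection it all comes down to queue and dequeue operations on buffers; the actual HAL3 implementation is the most complex part. A later post will briefly cover the take picture flow, and the handling of data callbacks will be analyzed further as well.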

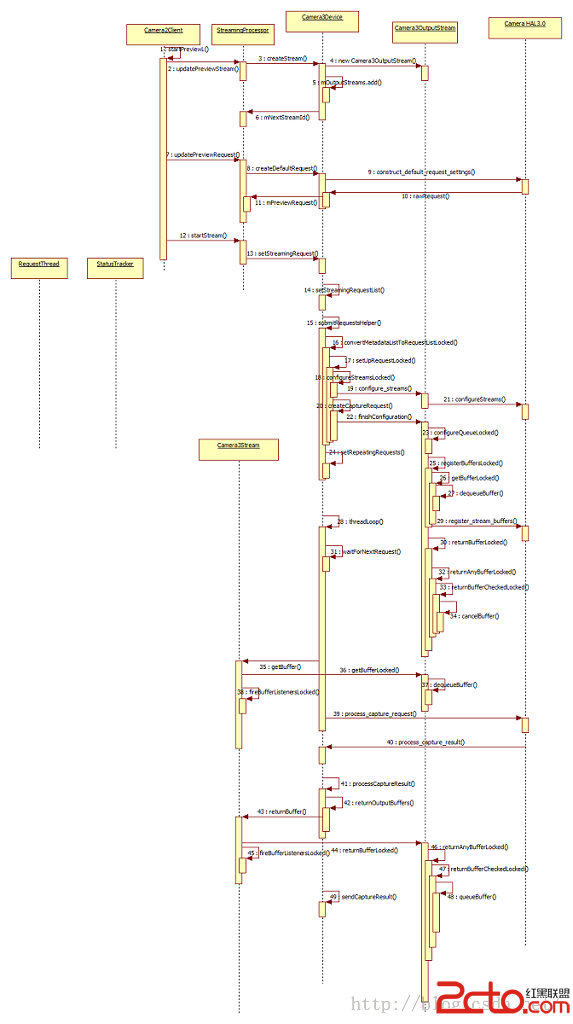

The figure below is a sequence diagram of Request- and result-based processing across the whole Camera3 architecture: