6. Parsing UVC video streams in the uvc driver

6.1 Key structures

6.1.1 UVC video stream

[cpp]

struct uvc_streaming {
    struct list_head list; // list node for the UVC video stream list
    struct uvc_device *dev; // UVC device
    struct video_device *vdev; // V4L2 video device
    struct uvc_video_chain *chain; // UVC video chain
    atomic_t active;

    struct usb_interface *intf; // USB interface
    int intfnum; // USB interface number
    __u16 maxpsize; // maximum packet size

    struct uvc_streaming_header header; // UVC video stream header
    enum v4l2_buf_type type; // V4L2 buffer type (capture or output)

    unsigned int nformats; // number of UVC formats
    struct uvc_format *format; // array of UVC formats

    struct uvc_streaming_control ctrl; // UVC streaming control
    struct uvc_format *cur_format; // current UVC format
    struct uvc_frame *cur_frame; // current UVC frame

    struct mutex mutex;

    unsigned int frozen : 1;
    struct uvc_video_queue queue; // UVC video queue
    void (*decode) (struct urb *urb, struct uvc_streaming *video, struct uvc_buffer *buf); // decode callback

    struct {
        __u8 header[256];
        unsigned int header_size;
        int skip_payload;
        __u32 payload_size;
        __u32 max_payload_size;
    } bulk;

    struct urb *urb[UVC_URBS]; // URB array
    char *urb_buffer[UVC_URBS]; // URB buffers
    dma_addr_t urb_dma[UVC_URBS]; // DMA addresses of the URB buffers
    unsigned int urb_size;

    __u32 sequence;
    __u8 last_fid;
};

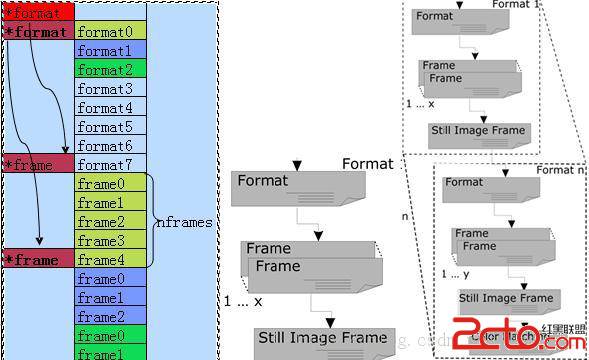

6.1.2 UVC format

[cpp]

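struct uvc_format { // UVC format
    __u8 type; // format type
    __u8 index; // format index
    __u8 bpp; // bits per pixel
    __u8 colorspace; // color space
    __u32 fcc; // FourCC code identifying the pixel format
    __u32 flags; // flags

    char name[32]; // format name

    unsigned int nframes; // number of UVC frames belonging to this format
    struct uvc_frame *frame; // array of UVC frames
};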
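To make the `fcc` field more concrete, here is a minimal user-space sketch of how a FourCC value is packed and unpacked. It assumes `fcc` uses the same packing as the V4L2 `v4l2_fourcc()` macro (first character in the lowest byte). The helper names `make_fourcc` and `fourcc_to_str` are hypothetical and are not part of the driver.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Pack four characters into a 32-bit code, first character in the low byte.
 * This mirrors the packing used by the V4L2 v4l2_fourcc() macro. */
static uint32_t make_fourcc(char a, char b, char c, char d)
{
    return (uint32_t)(uint8_t)a |
           ((uint32_t)(uint8_t)b << 8) |
           ((uint32_t)(uint8_t)c << 16) |
           ((uint32_t)(uint8_t)d << 24);
}

/* Unpack a FourCC code into a NUL-terminated string (hypothetical helper). */
static void fourcc_to_str(uint32_t fcc, char out[5])
{
    assert(out != NULL);
    out[0] = (char)(fcc & 0xff);
    out[1] = (char)((fcc >> 8) & 0xff);
    out[2] = (char)((fcc >> 16) & 0xff);
    out[3] = (char)((fcc >> 24) & 0xff);
    out[4] = '\0';
}
```

For example, `make_fourcc('Y', 'U', 'Y', 'V')` yields `0x56595559`, and `fourcc_to_str()` turns that value back into the readable string "YUYV".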

6.1.3 UVC frame

[cpp]

struct uvc_frame { // UVC frame
    __u8 bFrameIndex; // frame index
    __u8 bmCapabilities; // frame capabilities
    __u16 wWidth; // width
    __u16 wHeight; // height
    __u32 dwMinBitRate; // minimum bit rate
    __u32 dwMaxBitRate; // maximum bit rate
    __u32 dwMaxVideoFrameBufferSize; // maximum video frame buffer size
    __u8 bFrameIntervalType; // frame interval type
    __u32 dwDefaultFrameInterval; // default frame interval
    __u32 *dwFrameInterval; // array of frame intervals
};
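The frame interval fields are easier to read once converted to frames per second. The sketch below assumes the UVC convention that intervals are expressed in 100 ns units, and that `bFrameIntervalType == 0` means a continuous range stored as three values (minimum, maximum, step), while a non-zero value gives the number of discrete intervals. The function names are hypothetical helpers, not driver code.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define UVC_INTERVAL_UNITS_PER_SEC 10000000u /* 100 ns units in one second */

/* Number of values stored behind dwFrameInterval: N discrete values,
 * or 3 (min, max, step) when the interval type is 0 (continuous). */
static unsigned int uvc_interval_count(uint8_t frame_interval_type)
{
    return frame_interval_type ? frame_interval_type : 3;
}

/* Convert one interval (100 ns units) to frames per second; 0 maps to 0. */
static double uvc_interval_to_fps(uint32_t interval)
{
    return interval ? (double)UVC_INTERVAL_UNITS_PER_SEC / interval : 0.0;
}

/* Highest frame rate a frame offers, i.e. the rate of its shortest interval. */
static double uvc_max_fps(uint8_t frame_interval_type, const uint32_t *intervals)
{
    uint32_t shortest;
    unsigned int i;

    assert(intervals != NULL);
    if (frame_interval_type == 0)
        return uvc_interval_to_fps(intervals[0]); /* continuous: [0] is the minimum */

    shortest = intervals[0];
    for (i = 1; i < frame_interval_type; ++i)
        if (intervals[i] < shortest)
            shortest = intervals[i];
    return uvc_interval_to_fps(shortest);
}
```

An interval of 333333 therefore corresponds to roughly 30 frames per second.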

6.2 The uvc_parse_streaming function

[cpp]

static int uvc_parse_streaming(struct uvc_device *dev, struct usb_interface *intf)
{
    struct uvc_streaming *streaming = NULL; // UVC video stream
    struct uvc_format *format; // UVC format
    struct uvc_frame *frame; // UVC frame
    struct usb_host_interface *alts = &intf->altsetting[0]; // first usb_host_interface of the USB interface (alternate setting 0)
    unsigned char *_buffer, *buffer = alts->extra; // extra (class-specific) descriptors
    int _buflen, buflen = alts->extralen; // length of the extra descriptors
    unsigned int nformats = 0, nframes = 0, nintervals = 0;
    unsigned int size, i, n, p;
    __u32 *interval;
    __u16 psize;
    int ret = -EINVAL;

    if (intf->cur_altsetting->desc.bInterfaceSubClass != UVC_SC_VIDEOSTREAMING) { // check that the interface subclass is VideoStreaming
        uvc_trace(UVC_TRACE_DESCR, "device %d interface %d isn't a video streaming interface\n", dev->udev->devnum, intf->altsetting[0].desc.bInterfaceNumber);
        return -EINVAL;
    }

    if (usb_driver_claim_interface(&uvc_driver.driver, intf, dev)) { // bind the uvc USB driver to this USB interface
        uvc_trace(UVC_TRACE_DESCR, "device %d interface %d is already claimed\n", dev->udev->devnum, intf->altsetting[0].desc.bInterfaceNumber);
        return -EINVAL;
    }

    streaming = kzalloc(sizeof *streaming, GFP_KERNEL); // allocate the UVC video stream structure
    if (streaming == NULL) {
        usb_driver_release_interface(&uvc_driver.driver, intf);
        return -EINVAL;
    }

    mutex_init(&streaming->mutex);
    streaming->dev = dev; // attach the stream to the UVC device
    streaming->intf = usb_get_intf(intf); // attach the stream to the USB interface and take a reference on it
    streaming->intfnum = intf->cur_altsetting->desc.bInterfaceNumber; // record the interface number

    /* The Pico iMage webcam has its class-specific interface descriptors after the endpoint descriptors. */
    if (buflen == 0) { // special case for the Pico iMage webcam
        for (i = 0; i < alts->desc.bNumEndpoints; ++i) {
            struct usb_host_endpoint *ep = &alts->endpoint[i];

            if (ep->extralen == 0)
                continue;

            if (ep->extralen > 2 && ep->extra[1] == USB_DT_CS_INTERFACE) {
                uvc_trace(UVC_TRACE_DESCR, "trying extra data from endpoint %u.\n", i);
                buffer = alts->endpoint[i].extra;
                buflen = alts->endpoint[i].extralen;
                break;
            }
        }
    }

    /* Skip the standard interface descriptors. */
    while (buflen > 2 && buffer[1] != USB_DT_CS_INTERFACE) {
        buflen -= buffer[0];
        buffer += buffer[0];
    }

    if (buflen <= 2) {
        uvc_trace(UVC_TRACE_DESCR, "no class-specific streaming interface descriptors found.\n");
        goto error;
    }

    /* Parse the header descriptor. */ // class-specific VS interface input/output header descriptor
    switch (buffer[2]) { // bDescriptorSubtype
    case UVC_VS_OUTPUT_HEADER: // output video stream
        streaming->type = V4L2_BUF_TYPE_VIDEO_OUTPUT; // V4L2 video output buffer type
        size = 9;
        break;

    case UVC_VS_INPUT_HEADER: // input video stream
        streaming->type = V4L2_BUF_TYPE_VIDEO_CAPTURE; // V4L2 video capture buffer type
        size = 13;
        break;

    default:
        uvc_trace(UVC_TRACE_DESCR, "device %d videostreaming interface %d HEADER descriptor not found.\n", dev->udev->devnum, alts->desc.bInterfaceNumber);
        goto error;
    }

    p = buflen >= 4 ? buffer[3] : 0; // bNumFormats: number of UVC formats
    n = buflen >= size ? buffer[size-1] : 0; // bControlSize: size of each bmaControls entry

    if (buflen < size + p*n) { // check that the descriptor is long enough
        uvc_trace(UVC_TRACE_DESCR, "device %d videostreaming interface %d HEADER descriptor is invalid.\n", dev->udev->devnum, alts->desc.bInterfaceNumber);
        goto error;
    }

    // initialize the UVC video stream header
    streaming->header.bNumFormats = p; // number of UVC formats
    streaming->header.bEndpointAddress = buffer[6]; // endpoint address
    if (buffer[2] == UVC_VS_INPUT_HEADER) { // input video stream
        streaming->header.bmInfo = buffer[7]; // capability bitmap
        streaming->header.bTerminalLink = buffer[8]; // ID of the output terminal this stream is connected to
        streaming->header.bStillCaptureMethod = buffer[9]; // still image capture method (Method 1, Method 2, or Method 3)
        streaming->header.bTriggerSupport = buffer[10]; // hardware trigger support
        streaming->header.bTriggerUsage = buffer[11]; // trigger usage
    } else {
        streaming->header.bTerminalLink = buffer[7]; // ID of the input terminal this stream is connected to
    }
    streaming->header.bControlSize = n; // size of each bmaControls entry

    streaming->header.bmaControls = kmemdup(&buffer[size], p * n, GFP_KERNEL); // copy the bmaControls(x) bitmaps (size = number of formats * control size)
    if (streaming->header.bmaControls == NULL) {
        ret = -ENOMEM;
        goto error;
    }

    buflen -= buffer[0];
    buffer += buffer[0]; // advance to the next descriptor

    _buffer = buffer;
    _buflen = buflen; // the counting pass starts from the same descriptor
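The header handling above can be tried outside the kernel. The following is a rough user-space sketch of the same steps: skip descriptors until a class-specific interface descriptor is found, pick the fixed header size from the subtype, then validate the length against `bNumFormats * bControlSize`. The struct `vs_header_info`, the function `parse_vs_header`, and the shortened constant names are hypothetical. The guard against a zero or oversized `bLength` is an addition that the code shown above does not contain.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define DT_CS_INTERFACE  0x24 /* value of USB_DT_CS_INTERFACE */
#define VS_INPUT_HEADER  0x01 /* value of UVC_VS_INPUT_HEADER */
#define VS_OUTPUT_HEADER 0x02 /* value of UVC_VS_OUTPUT_HEADER */

/* Hypothetical result structure, loosely modeled on uvc_streaming_header. */
struct vs_header_info {
    int is_input;
    uint8_t num_formats;
    uint8_t endpoint_address;
    uint8_t terminal_link;
    uint8_t control_size;
    const uint8_t *controls; /* points into the caller's buffer */
};

/* Returns 0 on success, -1 if no valid VS header descriptor is found. */
static int parse_vs_header(const uint8_t *buf, size_t len, struct vs_header_info *out)
{
    size_t size, p, n;

    assert(buf != NULL && out != NULL);

    /* Skip everything that is not a class-specific interface descriptor. */
    while (len > 2 && buf[1] != DT_CS_INTERFACE) {
        if (buf[0] == 0 || buf[0] > len) /* extra guard, not in the original */
            return -1;
        len -= buf[0];
        buf += buf[0];
    }
    if (len <= 2)
        return -1;

    switch (buf[2]) { /* bDescriptorSubtype */
    case VS_OUTPUT_HEADER: out->is_input = 0; size = 9;  break;
    case VS_INPUT_HEADER:  out->is_input = 1; size = 13; break;
    default: return -1;
    }

    p = len >= 4 ? buf[3] : 0;           /* bNumFormats */
    n = len >= size ? buf[size - 1] : 0; /* bControlSize */
    if (len < size + p * n)
        return -1;

    out->num_formats = (uint8_t)p;
    out->endpoint_address = buf[6];
    out->terminal_link = out->is_input ? buf[8] : buf[7];
    out->control_size = (uint8_t)n;
    out->controls = buf + size;
    return 0;
}
```

Unlike the driver, which copies the control bitmaps with `kmemdup`, this sketch only keeps a pointer into the caller's buffer, so the buffer must outlive the result.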

Once the VS header has been parsed, the remaining VS descriptors are parsed.

First pass over the descriptors: count the UVC frames, UVC formats, and frame intervals, then allocate memory for them.

[cpp]

    /* Count the format and frame descriptors. */
    while (_buflen > 2 && _buffer[1] == USB_DT_CS_INTERFACE) {
        switch (_buffer[2]) {
        case UVC_VS_FORMAT_UNCOMPRESSED:
        case UVC_VS_FORMAT_MJPEG:
        case UVC_VS_FORMAT_FRAME_BASED:
            nformats++;
            break;

        case UVC_VS_FORMAT_DV:
            /* DV format has no frame descriptor. We will create a dummy frame descriptor with a dummy frame interval. */
            nformats++;
            nframes++;
            nintervals++;
            break;

        case UVC_VS_FORMAT_MPEG2TS:
        case UVC_VS_FORMAT_STREAM_BASED:
            uvc_trace(UVC_TRACE_DESCR, "device %d videostreaming interface %d FORMAT %u is not supported.\n", dev->udev->devnum, alts->desc.bInterfaceNumber, _buffer[2]);
            break;

        case UVC_VS_FRAME_UNCOMPRESSED:
        case UVC_VS_FRAME_MJPEG:
            nframes++;
            if (_buflen > 25)
                nintervals += _buffer[25] ? _buffer[25] : 3;
            break;

        case UVC_VS_FRAME_FRAME_BASED:
            nframes++;
            if (_buflen > 21)
                nintervals += _buffer[21] ? _buffer[21] : 3;
            break;
        } // tally the number of UVC frames, UVC formats, and frame intervals

        _buflen -= _buffer[0];
        _buffer += _buffer[0]; // advance to the next descriptor
    }

    if (nformats == 0) {
        uvc_trace(UVC_TRACE_DESCR, "device %d videostreaming interface %d has no supported formats defined.\n", dev->udev->devnum, alts->desc.bInterfaceNumber);
        goto error;
    }

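    // number of formats * format size + number of frames * frame size + number of intervals * interval size
    size = nformats * sizeof *format + nframes * sizeof *frame + nintervals * sizeof *interval;
    format = kzalloc(size, GFP_KERNEL); // one allocation holds the formats, the frames, and the intervals
    if (format == NULL) {
        ret = -ENOMEM;
        goto error;
    }

    frame = (struct uvc_frame *)&format[nformats]; // the frames are stored right after the format array
    interval = (__u32 *)&frame[nframes]; // the intervals are stored right after the frame array

    streaming->format = format; // attach the format array to the video stream
    streaming->nformats = nformats; // number of UVC formats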
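The single-allocation layout used here (formats first, then frames, then intervals) can be illustrated in user space. This is a minimal sketch with `calloc` standing in for `kzalloc`. The types `fmt`, `frm`, and `layout` and the function `alloc_layout` are hypothetical stand-ins for the driver's structures.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <stdlib.h>

/* Hypothetical, trimmed-down stand-ins for uvc_frame and uvc_format. */
struct frm {
    uint32_t *intervals;
    uint16_t width, height;
};

struct fmt {
    struct frm *frame;
    unsigned int nframes;
};

struct layout {
    struct fmt *format;  /* start of the block; pass this to free() */
    struct frm *frame;   /* first frame, directly after the formats */
    uint32_t *interval;  /* first interval, directly after the frames */
};

/* Allocate one zeroed block laid out as [formats][frames][intervals].
 * Returns 0 on success, -1 on allocation failure. */
static int alloc_layout(size_t nformats, size_t nframes, size_t nintervals,
                        struct layout *out)
{
    size_t size = nformats * sizeof(struct fmt) +
                  nframes * sizeof(struct frm) +
                  nintervals * sizeof(uint32_t);
    struct fmt *format;

    assert(out != NULL);
    format = calloc(1, size);
    if (format == NULL)
        return -1;

    out->format = format;
    out->frame = (struct frm *)&format[nformats];      /* frames follow formats */
    out->interval = (uint32_t *)&out->frame[nframes];  /* intervals follow frames */
    return 0;
}
```

Because all three arrays live in one block, releasing `format` releases everything. The ordering also places the types with the stricter alignment first, which keeps the later arrays correctly aligned.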

Second pass over the descriptors

[cpp]

    /* Parse the format descriptors. */
    while (buflen > 2 && buffer[1] == USB_DT_CS_INTERFACE) {
        switch (buffer[2]) { // bDescriptorSubtype
        case UVC_VS_FORMAT_UNCOMPRESSED:
        case UVC_VS_FORMAT_MJPEG:
        case UVC_VS_FORMAT_DV:
        case UVC_VS_FORMAT_FRAME_BASED:
            format->frame = frame; // point this format's frame array at the next free frame slot
            ret = uvc_parse_format(dev, streaming, format, &interval, buffer, buflen); // 7. parse the UVC format descriptor
            if (ret < 0)
                goto error;

            frame += format->nframes; // advance to the frame slots of the next format
            format++; // advance to the next format

            buflen -= ret;
            buffer += ret; // advance to the next format descriptor
            continue;