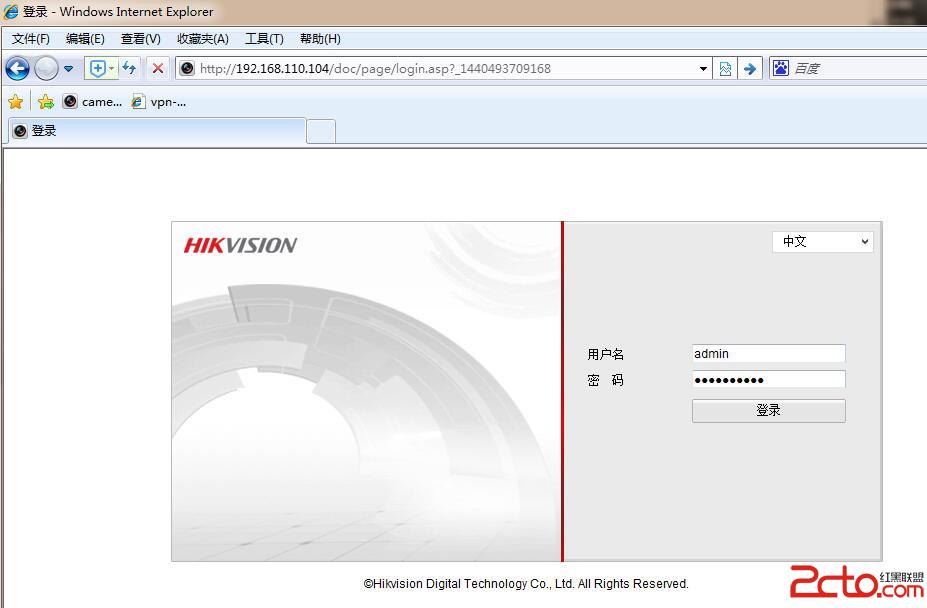

Next, make sure the camera can be reached from a browser. The default credentials are usually admin / a123456789 (on older cameras the password is 12345).

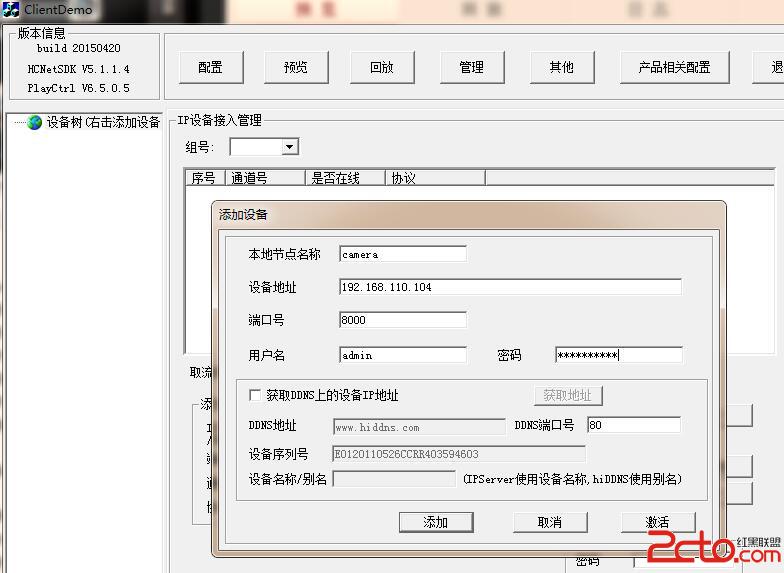

Then confirm that the camera can also be accessed with the ClientDemo.exe tool that ships with the SDK.

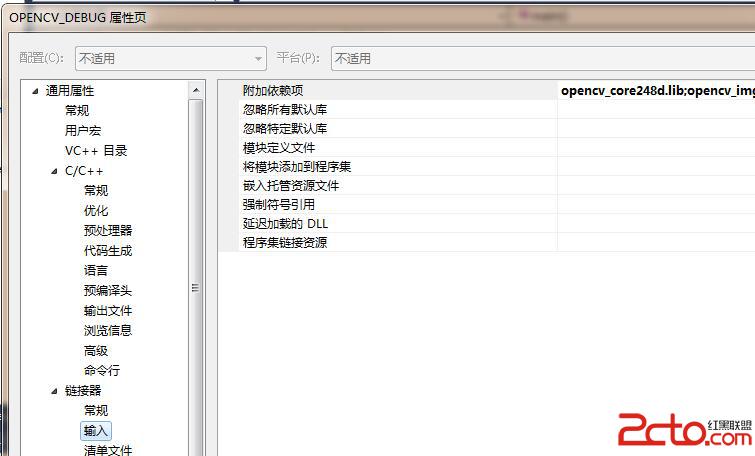

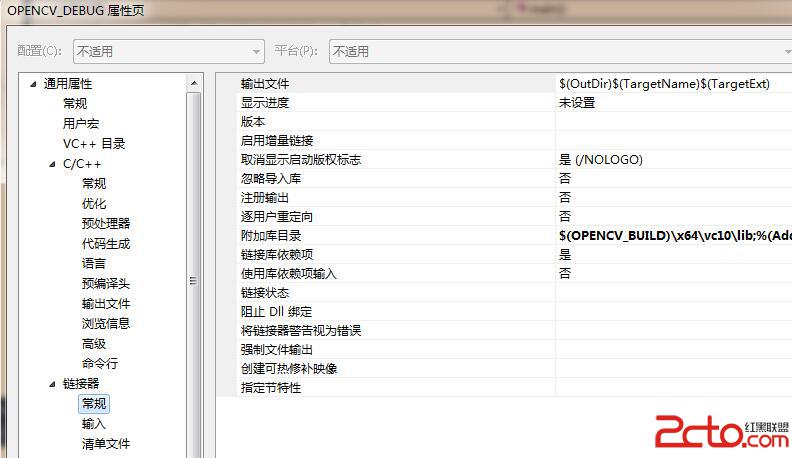

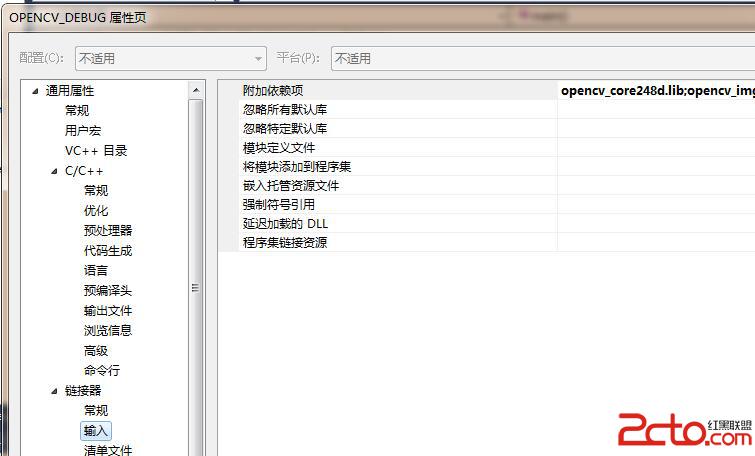

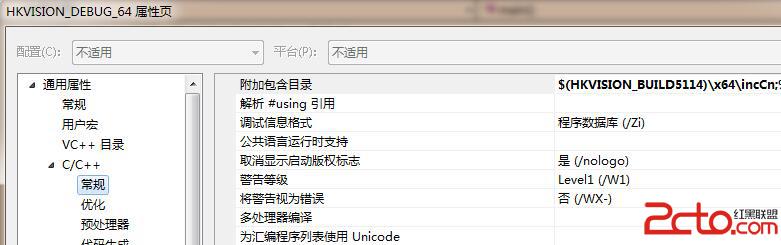

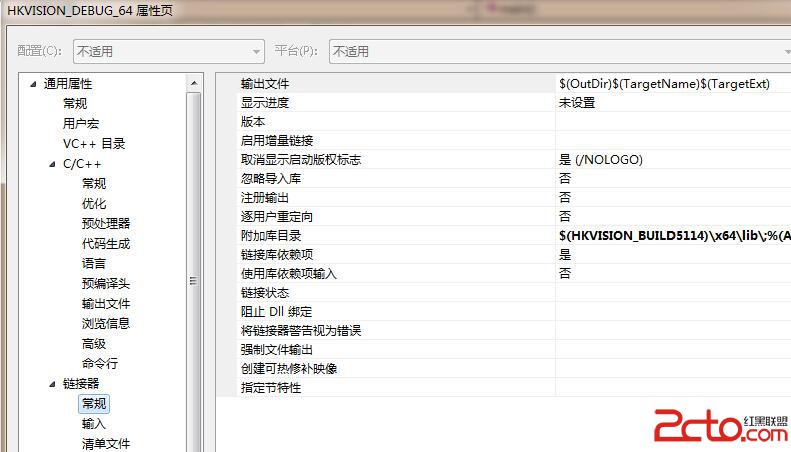

Configure the SDK development environment

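// NOTE: the header names were stripped when this listing was extracted.
// The includes below are inferred from the calls the code makes, not copied from the original.
#include <cstdio>               // printf
#include <cstring>              // memcpy
#include <ctime>                // time
#include <iostream>
#include <list>                 // std::list
#include <process.h>            // _beginthreadex
#include <Windows.h>
#include <opencv2/opencv.hpp>
#include "HCNetSDK.h"
/*#include "PlayM4.h"*/
#include "plaympeg4.h"          // PlayM4_* functions (assumed header name)
#include "global.h"
#include "readCamera.h"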

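#define USECOLOR 1   // 1: build 3-channel color frames, 0: grayscale (Y plane only)

using namespace cv;
using namespace std;

//--------------------------------------------
int iPicNum = 0;   // Set channel NO.
LONG nPort = -1;   // PlayM4 port, assigned in fRealDataCallBack
HWND hWnd = NULL;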

// Unpack a planar YV12 frame into an interleaved 3-channel image (Y, U, V per pixel).
// YV12 layout: width*height Y bytes, then the quarter-size V plane, then the U plane.
void yv12toYUV(char *outYuv, char *inYv12, int width, int height, int widthStep)
{
    int col, row;
    unsigned int Y, U, V;
    int tmp;
    int idx;

    //printf("widthStep=%d.\n",widthStep);
    for (row = 0; row < height; row++)
    {
        idx = row * widthStep;   // byte offset of this row in the output image
        for (col = 0; col < width; col++)
        {
            // Index into the 2x2-subsampled chroma planes
            tmp = (row/2)*(width/2)+(col/2);

            Y = (unsigned int) inYv12[row*width+col];
            U = (unsigned int) inYv12[width*height+width*height/4+tmp];
            V = (unsigned int) inYv12[width*height+tmp];

            outYuv[idx+col*3]   = Y;
            outYuv[idx+col*3+1] = U;
            outYuv[idx+col*3+2] = V;
        }
    }
    //printf("col=%d,row=%d.\n",col,row);
}
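The index arithmetic in yv12toYUV is easier to check in isolation. The following is a minimal sketch, not part of the original program: it assumes even frame dimensions and no row padding, uses plain byte buffers instead of IplImage, and the names Yv12Offsets, yv12Offsets and yv12ToInterleaved are hypothetical helpers introduced only for illustration.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Byte offsets of the three samples that describe pixel (row, col) in a
// YV12 buffer: full-resolution Y plane, then the V plane, then the U plane.
struct Yv12Offsets {
    std::size_t y;
    std::size_t v;
    std::size_t u;
};

// Assumption: width and height are even and rows are not padded.
inline Yv12Offsets yv12Offsets(int width, int height, int row, int col)
{
    assert(width % 2 == 0 && height % 2 == 0);
    const std::size_t ySize = static_cast<std::size_t>(width) * height;
    // Each chroma sample covers a 2x2 block of pixels.
    const std::size_t chroma =
        static_cast<std::size_t>(row / 2) * (width / 2) + (col / 2);
    Yv12Offsets o;
    o.y = static_cast<std::size_t>(row) * width + col;
    o.v = ySize + chroma;              // V plane follows the Y plane
    o.u = ySize + ySize / 4 + chroma;  // U plane follows the V plane
    return o;
}

// Unpack planar YV12 into interleaved Y,U,V triples (3 bytes per pixel),
// the same channel order that yv12toYUV writes.
inline std::vector<std::uint8_t> yv12ToInterleaved(const std::uint8_t* src,
                                                   int width, int height)
{
    std::vector<std::uint8_t> out(static_cast<std::size_t>(width) * height * 3);
    for (int row = 0; row < height; ++row) {
        for (int col = 0; col < width; ++col) {
            const Yv12Offsets o = yv12Offsets(width, height, row, col);
            const std::size_t dst =
                (static_cast<std::size_t>(row) * width + col) * 3;
            out[dst]     = src[o.y];
            out[dst + 1] = src[o.u];
            out[dst + 2] = src[o.v];
        }
    }
    return out;
}
```

For a 4x2 frame the Y plane occupies bytes 0 to 7, V occupies bytes 8 and 9, and U occupies bytes 10 and 11, so a whole frame takes width*height*3/2 bytes.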

// Decode callback: video arrives as YUV data (YV12), audio as PCM data
void CALLBACK DecCBFun(long nPort, char *pBuf, long nSize, FRAME_INFO *pFrameInfo, long nReserved1, long nReserved2)
{
    long lFrameType = pFrameInfo->nType;

    if (lFrameType == T_YV12)
    {
#if USECOLOR
        //int start = clock();
        // The images are static: allocated once with the size of the first frame, then reused
        static IplImage* pImgYCrCb = cvCreateImage(cvSize(pFrameInfo->nWidth, pFrameInfo->nHeight), 8, 3);   // interleaved 3-channel YUV buffer
        yv12toYUV(pImgYCrCb->imageData, pBuf, pFrameInfo->nWidth, pFrameInfo->nHeight, pImgYCrCb->widthStep);   // planar YV12 -> interleaved
        static IplImage* pImg = cvCreateImage(cvSize(pFrameInfo->nWidth, pFrameInfo->nHeight), 8, 3);
        cvCvtColor(pImgYCrCb, pImg, CV_YCrCb2RGB);   // convert to a 3-channel color image
        //int end = clock();
#else
        static IplImage* pImg = cvCreateImage(cvSize(pFrameInfo->nWidth, pFrameInfo->nHeight), 8, 1);
        memcpy(pImg->imageData, pBuf, pFrameInfo->nWidth*pFrameInfo->nHeight);   // grayscale: copy the Y plane only
#endif
        //printf("%d\n",end-start);

        Mat frametemp(pImg);   // wraps the static buffer without copying the pixels
        //cvShowImage("IPCamera",pImg);
        //cvWaitKey(1);

        EnterCriticalSection(&g_cs_frameList);
        // Deep copy: the static buffer is overwritten by the next decoded frame
        g_frameList.push_back(frametemp.clone());
        LeaveCriticalSection(&g_cs_frameList);

        // The static images are reused across calls, so they are not released here.

        // At this point pBuf holds the YV12 video data; it could be saved with fwrite(pBuf,nSize,1,Videofile);
        //fwrite(pBuf,nSize,1,fp);
    }
    /***************
    else if (lFrameType == T_AUDIO16)
    {
        // Audio data is in pBuf; it could be saved with fwrite(pBuf,nSize,1,Audiofile);
    }
    else
    {
    }
    *******************/
}

// Real-time stream callback
void CALLBACK fRealDataCallBack(LONG lRealHandle, DWORD dwDataType, BYTE *pBuffer, DWORD dwBufSize, void *pUser)
{
    DWORD dRet;

    switch (dwDataType)
    {
    case NET_DVR_SYSHEAD:   // system header
        if (!PlayM4_GetPort(&nPort))   // get an unused port from the player library
        {
            break;
        }
        if (dwBufSize > 0)
        {
            if (!PlayM4_OpenStream(nPort, pBuffer, dwBufSize, 1024*1024))
            {
                dRet = PlayM4_GetLastError(nPort);
                break;
            }
            // Set the decode callback: decode only, no display
            if (!PlayM4_SetDecCallBack(nPort, DecCBFun))
            {
                dRet = PlayM4_GetLastError(nPort);
                break;
            }
            // Set the decode callback: decode and display
            //if (!PlayM4_SetDecCallBackEx(nPort,DecCBFun,NULL,NULL))
            //{
            //    dRet=PlayM4_GetLastError(nPort);
            //    break;
            //}

            // Start video decoding
            if (!PlayM4_Play(nPort, hWnd))
            {
                dRet = PlayM4_GetLastError(nPort);
                break;
            }
            // Start audio decoding; requires a composite (video + audio) stream
            //if (!PlayM4_PlaySound(nPort))
            //{
            //    dRet=PlayM4_GetLastError(nPort);
            //    break;
            //}
        }
        break;

    case NET_DVR_STREAMDATA:   // stream data
        if (dwBufSize > 0 && nPort != -1)
        {
            BOOL inData = PlayM4_InputData(nPort, pBuffer, dwBufSize);
            // Retry until the player accepts the data
            while (!inData)
            {
                Sleep(10);
                inData = PlayM4_InputData(nPort, pBuffer, dwBufSize);
                OutputDebugString(L"PlayM4_InputData failed \n");
            }
        }
        break;
    }
}

void CALLBACK g_ExceptionCallBack(DWORD dwType, LONG lUserID, LONG lHandle, void *pUser)
{
    char tempbuf[256] = {0};

    switch (dwType)
    {
    case EXCEPTION_RECONNECT:   // reconnecting during preview
        // time_t may be 64 bits wide, so cast it explicitly instead of printing with %d
        printf("----------reconnect--------%lld\n", (long long)time(NULL));
        break;
    default:
        break;
    }
}

unsigned readCamera(void *param)
{
    //---------------------------------------
    // Initialize the SDK
    NET_DVR_Init();
    // Set the connection timeout and the reconnect interval
    NET_DVR_SetConnectTime(2000, 1);
    NET_DVR_SetReconnect(10000, true);

    //---------------------------------------
    // Get the console window handle
    //HMODULE hKernel32 = GetModuleHandle((LPCWSTR)"kernel32");
    //GetConsoleWindow = (PROCGETCONSOLEWINDOW)GetProcAddress(hKernel32,"GetConsoleWindow");

    //---------------------------------------
    // Log in to the device
    LONG lUserID;
    NET_DVR_DEVICEINFO_V30 struDeviceInfo;
    lUserID = NET_DVR_Login_V30("192.168.2.64", 8000, "admin", "a123456789", &struDeviceInfo);
    if (lUserID < 0)
    {
        printf("Login error, %d\n", NET_DVR_GetLastError());
        NET_DVR_Cleanup();
        return -1;
    }

    //---------------------------------------
    // Set the exception callback
    NET_DVR_SetExceptionCallBack_V30(0, NULL, g_ExceptionCallBack, NULL);
    //cvNamedWindow("IPCamera");

    //---------------------------------------
    // Start the live preview and register the stream callback
    NET_DVR_CLIENTINFO ClientInfo;
    ClientInfo.lChannel = 1;          // device channel number
    ClientInfo.hPlayWnd = NULL;       // no window: the device SDK only fetches the stream and does not decode it
    ClientInfo.lLinkMode = 0;         // Main Stream
    ClientInfo.sMultiCastIP = NULL;

    LONG lRealPlayHandle;
    lRealPlayHandle = NET_DVR_RealPlay_V30(lUserID, &ClientInfo, fRealDataCallBack, NULL, TRUE);
    if (lRealPlayHandle < 0)
    {
        printf("NET_DVR_RealPlay_V30 failed! Error number: %d\n", NET_DVR_GetLastError());
        // Release the login and the SDK before giving up
        NET_DVR_Logout(lUserID);
        NET_DVR_Cleanup();
        return 0;
    }

    //cvWaitKey(0);
    // Block this thread forever while the callbacks deliver frames.
    // (The original wrote Sleep(-1), which is the same value as INFINITE.)
    // As written, the cleanup code below is therefore never reached.
    Sleep(INFINITE);
    //fclose(fp);

    //---------------------------------------
    // Stop the preview
    if (!NET_DVR_StopRealPlay(lRealPlayHandle))
    {
        printf("NET_DVR_StopRealPlay error! Error number: %d\n", NET_DVR_GetLastError());
        return 0;
    }
    // Log out
    NET_DVR_Logout(lUserID);
    NET_DVR_Cleanup();
    return 0;
}

The decoded frames end up in g_frameList, through the g_frameList.push_back call in DecCBFun. That call is wrapped in a lock that protects reads and writes of the frame list. You have to provide this part yourself, that is, define the following variables:

CRITICAL_SECTION g_cs_frameList;
std::list<Mat> g_frameList;
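The locking pattern around the frame list can also be sketched in portable C++11. This is only an illustration under stated assumptions, not the code the program uses: std::mutex stands in for CRITICAL_SECTION, a byte vector (the hypothetical Frame typedef) stands in for cv::Mat so the sketch compiles without OpenCV, and the FrameList class with its push and takeLatest methods is introduced here for the example.

```cpp
#include <cstddef>
#include <list>
#include <mutex>
#include <vector>

// Stand-in for cv::Mat so that the sketch has no OpenCV dependency.
typedef std::vector<unsigned char> Frame;

// A frame list whose every access is guarded by one mutex.
class FrameList {
public:
    // Producer side: called from the decode callback thread.
    void push(const Frame& f)
    {
        std::lock_guard<std::mutex> lock(mutex_);
        frames_.push_back(f);   // stores a copy of the frame
    }

    // Consumer side: copies the newest frame into `out` and drops
    // everything that was queued. Returns false if the list was empty.
    bool takeLatest(Frame& out)
    {
        std::lock_guard<std::mutex> lock(mutex_);
        if (frames_.empty()) {
            return false;
        }
        out = frames_.back();
        frames_.clear();        // discard the stale frames
        return true;
    }

    std::size_t size() const
    {
        std::lock_guard<std::mutex> lock(mutex_);
        return frames_.size();
    }

private:
    mutable std::mutex mutex_;
    std::list<Frame> frames_;
};
```

Keeping the lock inside the class means a caller cannot forget to take it, and the lock_guard releases it on every return path.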

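The calling code in the main function first creates a thread that runs the camera-reading function above; its callbacks store the decoded frames in g_frameList. The main thread then reads from that list and stores the frame in a Mat: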

int main()
{
    HANDLE hThread;
    unsigned threadID;
    Mat frame1;

    InitializeCriticalSection(&g_cs_frameList);
    // Start the thread that logs in to the camera and fills g_frameList
    hThread = (HANDLE)_beginthreadex( NULL, 0, &readCamera, NULL, 0, &threadID );

    ...

    EnterCriticalSection(&g_cs_frameList);
    if (g_frameList.size())
    {
        list<Mat>::iterator it;
        it = g_frameList.end();
        it--;
        Mat dbgframe = (*(it));   // newest frame in the list
        //imshow("frame from camera",dbgframe);
        //dbgframe.copyTo(frame1);
        //dbgframe.release();

        // Oldest frame; frame[i] is not declared in this excerpt
        (*g_frameList.begin()).copyTo(frame[i]);
        frame1 = dbgframe;
        g_frameList.pop_front();
    }
    g_frameList.clear();   // drop the old frames
    LeaveCriticalSection(&g_cs_frameList);

    ...

    return 0;
}