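The implementation of the SiftDetector class is shown below. It calls VLFeat through the VlFeatInvoke wrapper and uses the VlSiftFilt and VlSiftKeypoint structures: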

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Text;
using System.Drawing;
using System.Runtime.InteropServices;
using Emgu.CV;
using Emgu.CV.Structure;

namespace ImageProcessLearn
{
    /// <summary>
    /// SIFT detector
    /// </summary>
    public class SiftDetector : IDisposable
    {
        // Member variables
        private IntPtr ptrSiftFilt;   // native VlSiftFilt*, owned by this object

        // Properties
        /// <summary>
        /// Pointer to the native SiftFilt
        /// </summary>
        public IntPtr PtrSiftFilt
        {
            get { return ptrSiftFilt; }
        }

        /// <summary>
        /// Gets a managed copy of the detector's SiftFilt
        /// </summary>
        public VlSiftFilt SiftFilt
        {
            get { return (VlSiftFilt)Marshal.PtrToStructure(ptrSiftFilt, typeof(VlSiftFilt)); }
        }

        /// <summary>
        /// Constructor
        /// </summary>
        /// <param name="width">Image width</param>
        /// <param name="height">Image height</param>
        /// <param name="noctaves">Number of octaves</param>
        /// <param name="nlevels">Number of levels per octave</param>
        /// <param name="o_min">Index of the first (lowest) octave</param>
        public SiftDetector(int width, int height, int noctaves, int nlevels, int o_min)
        {
            ptrSiftFilt = VlFeatInvoke.vl_sift_new(width, height, noctaves, nlevels, o_min);
        }

        public SiftDetector(int width, int height)
            : this(width, height, 4, 2, 0)
        { }

        public SiftDetector(Size size, int noctaves, int nlevels, int o_min)
            : this(size.Width, size.Height, noctaves, nlevels, o_min)
        { }

        public SiftDetector(Size size)
            : this(size.Width, size.Height, 4, 2, 0)
        { }

        /// <summary>
        /// Runs SIFT detection and returns the result
        /// </summary>
        /// <param name="im">Single-channel float image data; values do not need to be normalized to [0,1]</param>
        /// <param name="resultType">Kind of SIFT result to produce</param>
        /// <returns>The SIFT result as a list of SIFT features, or null if detection failed.</returns>
        unsafe public List<SiftFeature> Process(IntPtr im, SiftDetectorResultType resultType)
        {
            // Local variables
            List<SiftFeature> features = null;        // result: list of SIFT features
            VlSiftFilt siftFilt;                      // managed snapshot of the filter state
            VlSiftKeypoint* pKeyPoints;               // pointer into the native keypoint array
            VlSiftKeypoint keyPoint;                  // current keypoint
            SiftKeyPointOrientation[] orientations;   // orientations (and descriptors) of the keypoint
            double[] angles = new double[4];          // orientations (angles); VLFeat returns at most 4
            int angleCount;                           // number of orientations of the current keypoint
            double angle;                             // current orientation
            float[] descriptors;                      // descriptor for one orientation
            IntPtr ptrDescriptors = Marshal.AllocHGlobal(128 * sizeof(float)); // native descriptor buffer

            try
            {
                // Visit the octaves one after another
                if (VlFeatInvoke.vl_sift_process_first_octave(ptrSiftFilt, im) != VlFeatInvoke.VL_ERR_EOF)
                {
                    features = new List<SiftFeature>(100);
                    while (true)
                    {
                        // Detect the keypoints of the current octave
                        VlFeatInvoke.vl_sift_detect(ptrSiftFilt);

                        // Walk over every keypoint
                        siftFilt = (VlSiftFilt)Marshal.PtrToStructure(ptrSiftFilt, typeof(VlSiftFilt));
                        pKeyPoints = (VlSiftKeypoint*)siftFilt.keys.ToPointer();
                        for (int i = 0; i < siftFilt.nkeys; i++)
                        {
                            keyPoint = *pKeyPoints;
                            pKeyPoints++;
                            orientations = null;
                            if (resultType == SiftDetectorResultType.Normal || resultType == SiftDetectorResultType.Extended)
                            {
                                // Compute the orientations of this keypoint and visit each one
                                angleCount = VlFeatInvoke.vl_sift_calc_keypoint_orientations(ptrSiftFilt, angles, ref keyPoint);
                                orientations = new SiftKeyPointOrientation[angleCount];
                                for (int j = 0; j < angleCount; j++)
                                {
                                    angle = angles[j];
                                    descriptors = null;
                                    if (resultType == SiftDetectorResultType.Extended)
                                    {
                                        // Compute the 128-element descriptor for this orientation
                                        VlFeatInvoke.vl_sift_calc_keypoint_descriptor(ptrSiftFilt, ptrDescriptors, ref keyPoint, angle);
                                        descriptors = new float[128];
                                        Marshal.Copy(ptrDescriptors, descriptors, 0, 128);
                                    }
                                    orientations[j] = new SiftKeyPointOrientation(angle, descriptors); // store orientation and descriptor
                                }
                            }
                            features.Add(new SiftFeature(keyPoint, orientations)); // add the feature to the list
                        }

                        // Next octave
                        if (VlFeatInvoke.vl_sift_process_next_octave(ptrSiftFilt) == VlFeatInvoke.VL_ERR_EOF)
                            break;
                    }
                }
            }
            finally
            {
                // Release the native buffer even if an exception is thrown
                Marshal.FreeHGlobal(ptrDescriptors);
            }

            return features;
        }

        /// <summary>
        /// Runs basic SIFT detection and returns the list of keypoints
        /// </summary>
        /// <param name="im">Single-channel float image data; values do not need to be normalized to [0,1]</param>
        /// <returns>The list of keypoints, or null if detection failed.</returns>
        public List<SiftFeature> Process(IntPtr im)
        {
            return Process(im, SiftDetectorResultType.Basic);
        }

        /// <summary>
        /// Runs SIFT detection and returns the result
        /// </summary>
        /// <param name="image">Image; its size must match the size given to the constructor</param>
        /// <param name="resultType">Kind of SIFT result to produce</param>
        /// <returns>The SIFT result as a list of SIFT features, or null if detection failed.</returns>
        public List<SiftFeature> Process(Image<Gray, Single> image, SiftDetectorResultType resultType)
        {
            if (image.Width != SiftFilt.width || image.Height != SiftFilt.height)
                throw new ArgumentException("The image size does not match the size specified in the constructor.", "image");
            return Process(image.MIplImage.imageData, resultType);
        }

        /// <summary>
        /// Runs basic SIFT detection and returns the result
        /// </summary>
        /// <param name="image">Image; its size must match the size given to the constructor</param>
        /// <returns>The SIFT result as a list of SIFT features, or null if detection failed.</returns>
        public List<SiftFeature> Process(Image<Gray, Single> image)
        {
            return Process(image, SiftDetectorResultType.Basic);
        }

        /// <summary>
        /// Releases the native SIFT filter
        /// </summary>
        public void Dispose()
        {
            if (ptrSiftFilt != IntPtr.Zero)
            {
                VlFeatInvoke.vl_sift_delete(ptrSiftFilt);
                ptrSiftFilt = IntPtr.Zero;   // a second Dispose() must not delete the filter again
            }
        }
    }

    /// <summary>
    /// SIFT feature
    /// </summary>
    public struct SiftFeature
    {
        public VlSiftKeypoint keypoint;                         // keypoint
        public SiftKeyPointOrientation[] keypointOrientations;  // orientations of the keypoint and their descriptors

        public SiftFeature(VlSiftKeypoint keypoint)
            : this(keypoint, null)
        {
        }

        public SiftFeature(VlSiftKeypoint keypoint, SiftKeyPointOrientation[] keypointOrientations)
        {
            this.keypoint = keypoint;
            this.keypointOrientations = keypointOrientations;
        }
    }

    /// <summary>
    /// Orientation and descriptor of a SIFT keypoint
    /// </summary>
    public struct SiftKeyPointOrientation
    {
        public double angle;         // orientation
        public float[] descriptors;  // descriptor

        public SiftKeyPointOrientation(double angle)
            : this(angle, null)
        {
        }

        public SiftKeyPointOrientation(double angle, float[] descriptors)
        {
            this.angle = angle;
            this.descriptors = descriptors;
        }
    }

    /// <summary>
    /// Kind of SIFT detection result
    /// </summary>
    public enum SiftDetectorResultType
    {
        Basic,    // basic: keypoints only
        Normal,   // normal: keypoints and orientations
        Extended  // extended: keypoints, orientations and descriptors
    }
}
```
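The constructor passes noctaves, nlevels and o_min straight to vl_sift_new. To make these three numbers concrete, here is a small plain-C# sketch of the scale-space bookkeeping as we understand it from the VLFeat documentation and source. Octave o works on the input scaled by 2^-o, so o_min = -1 means an upsampled input. Level s of octave o has a nominal scale of σ0·2^(o+s/S), with σ0 = 1.6·2^(1/S). SiftScaleSpace and its methods are hypothetical names, not part of VLFeat or EmguCV. The default-octave formula (used when noctaves < 0) is our reading of vl_sift_new, so check it against your VLFeat version.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical helper (not a VLFeat/EmguCV API): mirrors the scale-space
// bookkeeping we believe vl_sift_new uses, so the constructor arguments can be
// inspected without calling native code.
public static class SiftScaleSpace
{
    // Octave count VLFeat is assumed to pick when noctaves < 0:
    // max(floor(log2(min(width, height))) - o_min - 3, 1).
    public static int DefaultOctaveCount(int width, int height, int oMin)
    {
        int minSide = Math.Min(width, height);
        int log2 = 0;                                // floor(log2(minSide)), computed with integers
        while ((minSide >> (log2 + 1)) > 0) log2++;
        return Math.Max(log2 - oMin - 3, 1);
    }

    // Image size processed in octave o: halved per positive o, doubled per negative o.
    public static (int Width, int Height) OctaveSize(int width, int height, int o)
    {
        return o >= 0
            ? (width >> o, height >> o)
            : (width << -o, height << -o);
    }

    // Nominal Gaussian scale of level s in octave o: sigma0 * 2^(o + s / S),
    // assuming VLFeat's default sigma0 = 1.6 * 2^(1 / S).
    public static double LevelSigma(int o, int s, int nlevels)
    {
        double sigma0 = 1.6 * Math.Pow(2.0, 1.0 / nlevels);
        return sigma0 * Math.Pow(2.0, o + (double)s / nlevels);
    }

    // Lists every octave index from o_min to o_min + noctaves - 1 with its image size.
    public static List<(int Octave, int Width, int Height)> Octaves(int width, int height, int noctaves, int oMin)
    {
        if (noctaves < 0) noctaves = DefaultOctaveCount(width, height, oMin);
        var result = new List<(int Octave, int Width, int Height)>();
        for (int o = oMin; o < oMin + noctaves; o++)
        {
            var (w, h) = OctaveSize(width, height, o);
            result.Add((o, w, h));
        }
        return result;
    }
}
```

Take the constructor defaults used above (4 octaves, 2 levels, o_min = 0). With these, a 583x301 image is processed at 583x301, 291x150, 145x75 and 72x37. Passing o_min = -1 would start from a 1166x602 upsampled image instead, which finds more small-scale keypoints at a higher cost.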

MSER regions

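MSER (Maximally Stable Extremal Regions) detection is implemented by the OpenCV function cvExtractMSER and by the EmguCV method Image<TColor,TDepth>.ExtractMSER. OpenCV's documentation does not yet cover cvExtractMSER, so if you need documentation, refer to the EmguCV documentation for now.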

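Note that the MSER result for each region is the sequence of all points in that region. For example, suppose 3 regions are detected and one of them is the rectangle from (0,0) to (2,1). That region's point sequence is (0,0),(1,0),(2,0),(2,1),(1,1),(0,1).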
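Because each region is returned as the full set of its pixels rather than as an outline, the number of points equals the region's area in pixels. A bounding box can be computed in one pass over the points. The sketch below assumes the points have already been copied into (X, Y) tuples, for example from each System.Drawing.Point of a Seq<Point>. MserRegionStats is a hypothetical helper, not an EmguCV API.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical helper: summarizes one MSER region from the complete list of
// pixel coordinates returned for it (every pixel, not just the border).
public static class MserRegionStats
{
    // Returns the pixel count (= area) and the bounding box of the region.
    public static (int PixelCount, int MinX, int MinY, int Width, int Height)
        Summarize(IEnumerable<(int X, int Y)> points)
    {
        int count = 0;
        int minX = int.MaxValue, minY = int.MaxValue;
        int maxX = int.MinValue, maxY = int.MinValue;
        foreach (var (x, y) in points)
        {
            count++;
            minX = Math.Min(minX, x); maxX = Math.Max(maxX, x);
            minY = Math.Min(minY, y); maxY = Math.Max(maxY, y);
        }
        if (count == 0) throw new ArgumentException("Region has no points.", nameof(points));
        // Width/height are inclusive: a region spanning x = 0..2 is 3 pixels wide.
        return (count, minX, minY, maxX - minX + 1, maxY - minY + 1);
    }
}
```

For the rectangle example above, the result is 6 pixels with a 3x2 bounding box at (0,0). A filled rectangle therefore has a pixel count equal to width times height, while a ring-shaped or irregular region has fewer points than its bounding box.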

Sample code for MSER (region) feature detection:

```csharp
// MSER (region) feature detection: a method of the demo form.
// Needs: using System; using System.Diagnostics; using System.Drawing;
//        using System.Text; using Emgu.CV; using Emgu.CV.Structure;
private string MserFeatureDetect()
{
    // Read the parameters
    MCvMSERParams mserParam = new MCvMSERParams();
    mserParam.delta = int.Parse(txtMserDelta.Text);
    mserParam.maxArea = int.Parse(txtMserMaxArea.Text);
    mserParam.minArea = int.Parse(txtMserMinArea.Text);
    mserParam.maxVariation = float.Parse(txtMserMaxVariation.Text);
    mserParam.minDiversity = float.Parse(txtMserMinDiversity.Text);
    mserParam.maxEvolution = int.Parse(txtMserMaxEvolution.Text);
    mserParam.areaThreshold = double.Parse(txtMserAreaThreshold.Text);
    mserParam.minMargin = double.Parse(txtMserMinMargin.Text);
    mserParam.edgeBlurSize = int.Parse(txtMserEdgeBlurSize.Text);
    bool showDetail = cbMserShowDetail.Checked;

    // Detect
    Stopwatch sw = new Stopwatch();
    sw.Start();
    MemStorage storage = new MemStorage();
    Seq<Point>[] regions = imageSource.ExtractMSER(null, ref mserParam, storage);
    sw.Stop();

    // Display
    Image<Bgr, Byte> imageResult = imageSourceGrayscale.Convert<Bgr, Byte>();
    StringBuilder sbResult = new StringBuilder();
    int idx = 0;
    foreach (Seq<Point> region in regions)
    {
        imageResult.DrawPolyline(region.ToArray(), true, new Bgr(255d, 0d, 0d), 2);
        if (showDetail)
        {
            // Each region holds all of its pixels, so these are points, not vertices
            sbResult.AppendFormat("Region {0}, {1} points (", idx, region.Total);
            foreach (Point pt in region)
                sbResult.AppendFormat("{0},", pt);
            sbResult.Append(")\r\n");
        }
        idx++;
    }
    // ToBitmap() makes an independent copy; the Bitmap property may share the
    // image's pixel buffer, which would be invalid once imageResult is disposed.
    pbResult.Image = imageResult.ToBitmap();

    // Release resources
    imageResult.Dispose();
    storage.Dispose();

    // Return a summary
    return string.Format("·MSER regions, time {0:F05} ms, parameters (delta:{1},maxArea:{2},minArea:{3},maxVariation:{4},minDiversity:{5},maxEvolution:{6},areaThreshold:{7},minMargin:{8},edgeBlurSize:{9}), {10} regions detected\r\n{11}",
        sw.Elapsed.TotalMilliseconds, mserParam.delta, mserParam.maxArea, mserParam.minArea, mserParam.maxVariation, mserParam.minDiversity,
        mserParam.maxEvolution, mserParam.areaThreshold, mserParam.minMargin, mserParam.edgeBlurSize, regions.Length, showDetail ? sbResult.ToString() : "");
}
```

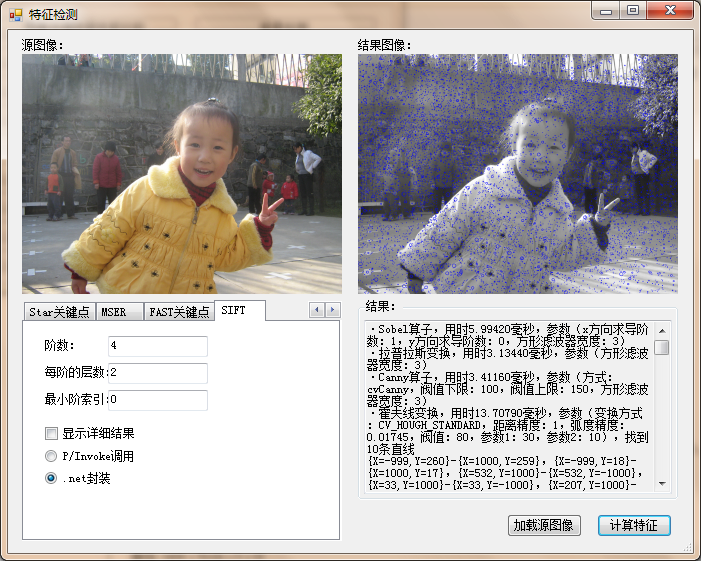
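The sample above passes all of a region's points to DrawPolyline. Those points fill the whole region (see the point-sequence note earlier), so the closed polyline generally runs through the interior instead of tracing only the border. One alternative, which the original code does not use, is to draw the convex hull of the points. Below is a plain-C# sketch of Andrew's monotone-chain algorithm. ConvexHull.Compute is a hypothetical helper. For non-convex regions the hull over-approximates the true outline.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Sketch (not from the original article): Andrew's monotone-chain convex hull,
// usable to outline an MSER region from its full pixel list.
public static class ConvexHull
{
    // Cross product of (a - o) and (b - o); its sign says which side of the
    // line o->a the point b lies on (0 means collinear).
    private static long Cross((int X, int Y) o, (int X, int Y) a, (int X, int Y) b) =>
        (long)(a.X - o.X) * (b.Y - o.Y) - (long)(a.Y - o.Y) * (b.X - o.X);

    // Returns the hull vertices in order, without repeating the first vertex.
    // Collinear points on hull edges are dropped.
    public static List<(int X, int Y)> Compute(IEnumerable<(int X, int Y)> points)
    {
        var pts = points.Distinct().OrderBy(p => p.X).ThenBy(p => p.Y).ToList();
        if (pts.Count < 3) return pts;

        var hull = new List<(int X, int Y)>();
        // Lower hull: sweep left to right.
        foreach (var p in pts)
        {
            while (hull.Count >= 2 && Cross(hull[hull.Count - 2], hull[hull.Count - 1], p) <= 0)
                hull.RemoveAt(hull.Count - 1);
            hull.Add(p);
        }
        // Upper hull: sweep right to left without popping into the lower hull.
        int lowerCount = hull.Count + 1;
        for (int i = pts.Count - 2; i >= 0; i--)
        {
            var p = pts[i];
            while (hull.Count >= lowerCount && Cross(hull[hull.Count - 2], hull[hull.Count - 1], p) <= 0)
                hull.RemoveAt(hull.Count - 1);
            hull.Add(p);
        }
        hull.RemoveAt(hull.Count - 1); // the last point repeats the first one
        return hull;
    }
}
```

For the rectangle example, the six region points reduce to the four corners (0,0), (2,0), (2,1), (0,1). To draw the hull, convert it back to a Point[] and pass it to DrawPolyline with isClosed set to true, as the sample does with the raw points.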

Performance comparison of the feature detection methods

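We have now covered quite a few feature detection methods, so how do they perform? Parameter settings strongly affect both processing time and results, so we processed the same image with mostly default parameters. The results on my machine are shown in the table below: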

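| Feature | Time (ms) | Features detected |
|---|---|---|
| Sobel operator | 5.99420 | n/a |
| Laplacian operator | 3.13440 | n/a |
| Canny operator | 3.41160 | n/a |
| Hough line transform | 13.70790 | 10 |
| Hough circle transform | 78.07720 | 0 |
| Harris corners | 9.41750 | n/a |
| Shi-Tomasi corners | 16.98390 | 18 |
| Sub-pixel corners | 3.63360 | 18 |
| SURF keypoints | 266.27000 | 151 |
| Star keypoints | 14.82800 | 56 |
| FAST corners | 31.29670 | 159 |
| SIFT keypoints | 287.52310 | 54 |
| MSER regions | 40.62970 | 2 |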

(Image size: 583x301; CPU: AMD Athlon II X2 240; RAM: 4 GB DDR3; graphics card: GeForce 9500GT; OS: Windows 7)
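Each timing above appears to come from one measured run, as in MserFeatureDetect, which reads the Stopwatch once around a single call. Figures like these can vary from run to run. A common way to make such comparisons steadier is to discard a warm-up run, time several runs, and report the median. Benchmark.MedianMilliseconds is a hypothetical helper sketching that idea, not code from the original article.

```csharp
using System;
using System.Diagnostics;

// Sketch (not from the original article): runs an action several times and
// returns the median elapsed time, which is less sensitive to one-off spikes
// (JIT compilation, cold caches, background load) than a single reading.
public static class Benchmark
{
    public static double MedianMilliseconds(Action action, int runs = 5, int warmup = 1)
    {
        if (runs <= 0) throw new ArgumentOutOfRangeException(nameof(runs));

        for (int i = 0; i < warmup; i++) action();   // warm-up runs are not timed

        var samples = new double[runs];
        var sw = new Stopwatch();
        for (int i = 0; i < runs; i++)
        {
            sw.Restart();
            action();
            sw.Stop();
            samples[i] = sw.Elapsed.TotalMilliseconds;
        }

        Array.Sort(samples);
        return runs % 2 == 1
            ? samples[runs / 2]
            : (samples[runs / 2 - 1] + samples[runs / 2]) / 2.0;
    }
}
```

To use it, wrap only the detection call (for example the ExtractMSER call) in the lambda. This keeps UI updates and drawing out of the measurement, as the original code does by stopping the Stopwatch before drawing.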

In the next article we will look at how to track the feature points (corners) covered here.