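Three ways for a Python scraper to write its data to a CSV file: the first two use pandas, and the third writes the file directly with codecs.open / with open. Straight to the test code.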

import pandas as pd
import numpy as np

# First define some test data and a header row
company, salary, address, experience, education, number_people = '北京知帆科技有限公司', '10.0k-18.0k', '北京-海澱區', '3-4年經驗', '本科', '招3人'
data_list = (company, salary, address, experience, education, number_people)
# A tuple or a list both work; the difference is that a list is mutable and a tuple is not
# data_list = [company, salary, address, experience, education, number_people]
head = ('company', 'salary', 'address', 'experience', 'education', 'number_people')

""" Method 1: pass a tuple or list """
# data = np.array([
#     [1, 2, 3, 4, 5, 6],
#     [1, 2, 3, 4, 5, 6]])  # one value per column in head
# data can be a list of rows or a 2-D NumPy array
df = pd.DataFrame(columns=head, data=[data_list])  # a list containing a single row
# df = pd.DataFrame(columns=head, data=data)
df.to_csv('aa11.csv', mode='w', index=False, sep=',')

""" 方法二 傳入字典 """

# dic1 = {'company': ['北京知帆科技有限公司'], 'salary': ['10.0k-18.0k'], 'address': ['北京-海澱區'],

# 'experience': ['3-4年經驗'], 'education': ['本科'], 'number_people': ['招3人']}

# 字典打包

dic = dict(zip(['company', 'salary', 'address', 'experience', 'education', 'number_people'],

[[company], [salary], [address], [experience], [education], [number_people]]))

df = pd.DataFrame(columns=head, data=dic)

# df = pd.DataFrame(columns=head, data=dic1)

df.to_csv('aa22.csv', mode='w', header=True, index=False, sep=',')
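Method 2 maps each column name to a list of values. For comparison, the standard library's csv.DictWriter writes the same kind of name-keyed data one row dict at a time, without pandas. This is a minimal sketch and not the author's method. The io.StringIO buffer is an assumption that stands in for a real file so the example is self-contained.

```python
import csv
import io

head = ('company', 'salary', 'address', 'experience', 'education', 'number_people')
# One dict per row, keyed by column name (same values as the test data above)
rows = [
    {'company': '北京知帆科技有限公司', 'salary': '10.0k-18.0k', 'address': '北京-海澱區',
     'experience': '3-4年經驗', 'education': '本科', 'number_people': '招3人'},
]

buf = io.StringIO(newline='')  # in-memory stand-in for open('aa22.csv', 'w', newline='')
writer = csv.DictWriter(buf, fieldnames=head)
writer.writeheader()    # header row taken from fieldnames
writer.writerows(rows)  # one line per dict; keys missing from a dict are written as ''
text = buf.getvalue()
print(text)
```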

""" 方法三 文件讀寫 """

import codecs

import csv

# 文件讀盡量用codecs.open方法,一般不會出現編碼的問題。

f = codecs.open('aa33.csv', 'w', encoding='utf-8')

writer = csv.writer(f)

writer.writerow(head) # 寫入表頭 也就是文件標題

data_list = ['北京知帆科技有限公司', '10.0k-18.0k', '北京-海澱區', '3-4年經驗', '本科', '招3人']

writer.writerow(data_list)

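# If codecs.open feels unfamiliar, just use with open (the file is closed automatically)
data_list = ['北京知帆科技有限公司', '10.0k-18.0k', '北京-海澱區', '3-4年經驗', '本科', '招3人']
# newline='' lets the csv module control line endings (avoids blank lines between rows on Windows)
with open('aa44.csv', 'w', encoding='utf-8', newline='') as file:
    writer = csv.writer(file)
    writer.writerow(head)  # write the header row, i.e. the column titles
    writer.writerow(data_list)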
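A quick way to check that a file written this way is well-formed is to read it back with csv.reader and compare. The sketch below writes to a temporary file. tempfile is used only to keep the example self-contained, and the comma inside the third value is made up to show that the writer quotes such fields automatically.

```python
import csv
import os
import tempfile

head = ['company', 'salary', 'address']
data_list = ['北京知帆科技有限公司', '10.0k-18.0k', '北京-海澱區, 中關村']  # made-up value containing a comma

path = os.path.join(tempfile.mkdtemp(), 'roundtrip.csv')
# newline='' on both write and read, as the csv module documentation recommends
with open(path, 'w', encoding='utf-8', newline='') as f:
    writer = csv.writer(f)
    writer.writerow(head)
    writer.writerow(data_list)

with open(path, encoding='utf-8', newline='') as f:
    rows = list(csv.reader(f))

print(rows)
```

If the CSV is meant to be opened in Excel, encoding='utf-8-sig' writes a byte-order mark so that Excel detects UTF-8 and shows the Chinese text correctly. pandas' to_csv accepts the same encoding argument.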

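Below is code that combines a scraper with writing to CSV.

For the scraper + pandas version, see the code in another article ("Scraper + pandas writing to CSV"). The scraper below writes with the csv module.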

import csv

import requests
from bs4 import BeautifulSoup  # the 'lxml' parser used below requires the lxml package


def get_city_aqi(pinyin):
    """Scrape the 8 air-quality readings shown on one city's page."""
    url = 'http://www.pm25.in/' + pinyin
    r = requests.get(url, timeout=60)
    soup = BeautifulSoup(r.text, 'lxml')
    div_list = soup.find_all('div', {'class': 'span1'})
    city_aqi = []
    for i in range(8):
        div_content = div_list[i]
        caption = div_content.find('div', {'class': 'caption'}).text.strip()
        value = div_content.find('div', {'class': 'value'}).text.strip()
        # city_aqi.append((caption, value))
        city_aqi.append(value)
    return city_aqi


def get_all_cities():
    """Return (city_name, city_pinyin) pairs from the city list on the home page."""
    url = 'http://www.pm25.in/'
    city_list = []
    r = requests.get(url, timeout=60)
    soup = BeautifulSoup(r.text, 'lxml')
    city_div = soup.find_all('div', {'class': 'bottom'})[1]
    city_link_list = city_div.find_all('a')
    for city_link in city_link_list:
        city_name = city_link.text
        city_pinyin = city_link['href'][1:]  # drop the leading '/' from the href
        city_list.append((city_name, city_pinyin))
    return city_list


def main():
    city_list = get_all_cities()
    header = ['City', 'AQI', 'PM2.5/h', 'PM10/h', 'CO/h', 'NO2/h', 'O3/h', 'O3/8h', 'SO2/h']
    with open('./china_city_aqi.csv', 'w', encoding='utf-8', newline='') as f:
        writer = csv.writer(f)
        writer.writerow(header)
        for i, city in enumerate(city_list):
            print(f'Processing {i + 1} of {len(city_list)}')
            city_name = city[0]
            city_pinyin = city[1]
            city_aqi = get_city_aqi(city_pinyin)
            row = [city_name] + city_aqi
            print(row)
            writer.writerow(row)


if __name__ == '__main__':
    main()
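Because the scraper depends on a live site, it can help to test the parsing and CSV-writing logic offline. The sketch below swaps BeautifulSoup for the standard library's html.parser and runs on a made-up HTML snippet. The snippet, the LinkCollector class and the fake_get_city_aqi stub are hypothetical stand-ins, not pm25.in's real markup. The flow mirrors the original: collect (name, pinyin) pairs from the links, strip the leading '/' from each href, then write a [city_name] + values row for each city under the header.

```python
import csv
import io
from html.parser import HTMLParser


class LinkCollector(HTMLParser):
    """Hypothetical helper: collect (link text, href) pairs from <a> tags."""

    def __init__(self):
        super().__init__()
        self.links = []
        self._href = None

    def handle_starttag(self, tag, attrs):
        if tag == 'a':
            self._href = dict(attrs).get('href')

    def handle_data(self, data):
        if self._href is not None and data.strip():
            self.links.append((data.strip(), self._href))
            self._href = None

    def handle_endtag(self, tag):
        if tag == 'a':
            self._href = None


# Made-up markup standing in for the city list on the real page
html = '<div class="bottom"><a href="/beijing">北京</a><a href="/shanghai">上海</a></div>'
parser = LinkCollector()
parser.feed(html)
parser.close()
# Same idea as get_all_cities(): drop the leading '/' to get the pinyin slug
city_list = [(name, href[1:]) for name, href in parser.links]


def fake_get_city_aqi(pinyin):
    """Hypothetical stub returning 8 placeholder values instead of scraping."""
    return [f'{pinyin}-{i}' for i in range(8)]


header = ['City', 'AQI', 'PM2.5/h', 'PM10/h', 'CO/h', 'NO2/h', 'O3/h', 'O3/8h', 'SO2/h']
buf = io.StringIO(newline='')
writer = csv.writer(buf)
writer.writerow(header)
for city_name, city_pinyin in city_list:
    writer.writerow([city_name] + fake_get_city_aqi(city_pinyin))

rows = list(csv.reader(io.StringIO(buf.getvalue(), newline='')))
print(rows[1])
```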

import numpy as np
import matplotlib.pyplot as plt
import pandas as pd
from datetime import datetime
from sklearn.model_selection import train_test_split

data = pd.read_csv('./dataset/data.csv')
data

43824 rows × 13 columns

data.info()

<class 'pandas.core.frame.DataFrame'>

RangeIndex: 43824 entries, 0 to 43823

Data columns (total 13 columns):

No 43824 non-null int64

year 43824 non-null int64

month 43824 non-null int64

day 43824 non-null int64

hour 43824 non-null int64

pm2.5 41757 non-null float64

DEWP 43824 non-null int64

TEMP 43824 non-null float64

PRES 43824 non-null float64

cbwd 43824 non-null object

Iws 43824 non-null float64

Is 43824 non-null int64

Ir 43824 non-null int64

dtypes: float64(4), int64(8), object(1)

memory usage: 4.3+ MB

data['pm2.5'].isna().sum()

2067

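pm2.5 has missing values: drop the ones at the start and fill the later gaps, so that the series is complete.

data = data.iloc[24:, :].ffill()  # forward fill: each NaN takes the previous valid value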

data

43800 rows × 13 columns
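The forward fill used above replaces each missing value with the most recent non-missing value before it. A gap at the very start has nothing to copy from, which is why the leading rows are dropped first. Here is a pure-Python sketch of the same idea on a toy series, where None marks a missing reading and the numbers are made up:

```python
def drop_leading_and_ffill(values):
    """Drop leading None values, then forward-fill the rest (toy illustration)."""
    start = 0
    while start < len(values) and values[start] is None:
        start += 1
    filled = []
    last = None
    for v in values[start:]:
        if v is None:
            v = last  # reuse the previous valid observation
        filled.append(v)
        last = v
    return filled


series = [None, None, 10.0, 12.0, None, None, 15.0]
print(drop_leading_and_ffill(series))  # [10.0, 12.0, 12.0, 12.0, 15.0]
```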

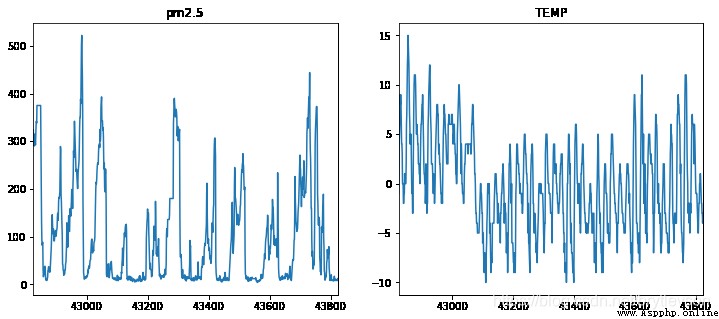

plt.figure(figsize=(12, 5))
# Plot the last 1000 pm2.5 observations
plt.subplot(1, 2, 1)
data['pm2.5'].iloc[-1000:].plot()
plt.title('pm2.5')
# Plot the last 1000 temperature observations
plt.subplot(1, 2, 2)
plt.title('TEMP')
data['TEMP'].iloc[-1000:].plot()
plt.show()

Process the time columns: combine year, month, day and hour into a single datetime and use it as the index.

data['time'] = data.apply(lambda x: datetime(x['year'], x['month'], x['day'], x['hour']), axis=1)

data.drop(['year', 'month', 'day', 'hour', 'No'], axis=1, inplace=True)

data.set_index('time', inplace=True)

data

43800 rows × 8 columns
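The sliding windows built later assume exactly one row per hour with no gaps. Below is a small sketch of that check using the standard datetime module on a few hypothetical (year, month, day, hour) rows. On the real DataFrame, the same test could be run against the new DatetimeIndex.

```python
from datetime import datetime, timedelta

rows = [(2010, 1, 1, 22), (2010, 1, 1, 23), (2010, 1, 2, 0)]  # hypothetical sample rows
times = [datetime(y, m, d, h) for y, m, d, h in rows]  # same combination as the apply() above
steps = {b - a for a, b in zip(times, times[1:])}
print(steps == {timedelta(hours=1)})  # True: consecutive rows are exactly one hour apart
```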

# One-hot encode the wind direction column cbwd (as floats, so the array built later stays numeric)
data = data.join(pd.get_dummies(data['cbwd'], dtype=float))
del data['cbwd']

data

43800 rows × 11 columns
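pd.get_dummies creates one column for each wind direction that appears in the data it is given. If a model is later fed a short slice in which some direction never occurs, the column set changes. The pure-Python sketch below encodes against a fixed category list to show the idea. The list is an assumption based on the four wind-direction codes usually found in this dataset, so check it against data['cbwd'].unique() before relying on it.

```python
CATEGORIES = ['NE', 'NW', 'SE', 'cv']  # assumed wind-direction codes, sorted as get_dummies orders them


def one_hot(values, categories=CATEGORIES):
    """Encode each value as a 0/1 vector over a fixed category list."""
    index = {c: i for i, c in enumerate(categories)}
    encoded = []
    for v in values:
        vec = [0.0] * len(categories)
        vec[index[v]] = 1.0
        encoded.append(vec)
    return encoded


# Only two of the four directions occur in this short slice, yet every row still has 4 columns
print(one_hot(['NW', 'cv', 'NW']))
# [[0.0, 1.0, 0.0, 0.0], [0.0, 0.0, 0.0, 1.0], [0.0, 1.0, 0.0, 0.0]]
```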

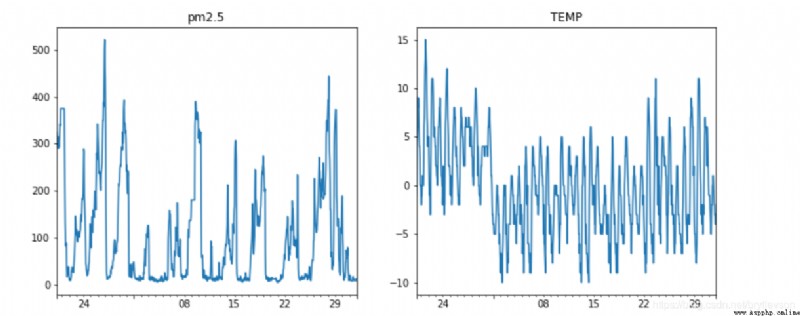

plt.figure(figsize=(12, 5))
# Plot the last 1000 pm2.5 observations (the x-axis now shows the datetime index)
plt.subplot(1, 2, 1)
data['pm2.5'].iloc[-1000:].plot()
plt.title('pm2.5')
# Plot the last 1000 temperature observations
plt.subplot(1, 2, 2)
plt.title('TEMP')
data['TEMP'].iloc[-1000:].plot()
plt.show()

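# Extract training/test samples from the series
seq_length = 5 * 24  # for each sample, use the preceding 5 days (120 hours) as input
delay = 24  # predict the value 24 hours later, i.e. on the 6th day
# First cut out every 6-day window (seq_length + delay = 144 hours)
_data_list = []
for i in range(len(data) - seq_length - delay):
    _data_list.append(data.iloc[i: i + seq_length + delay])
print(_data_list[0].shape)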

(144, 11)
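Each sample spans seq_length + delay = 144 consecutive hours. The first 120 rows become the input, and the last row supplies the target, 24 hours after the final input hour. The number of windows is len(data) - seq_length - delay, which is 43656 for 43800 rows. The toy pure-Python version below uses small sizes so the indexing is easy to follow:

```python
def make_windows(series, seq_length, delay):
    """Return (inputs, target) pairs following the loop above:
    inputs = series[i : i+seq_length], target = last element of the window."""
    samples = []
    for i in range(len(series) - seq_length - delay):
        window = series[i:i + seq_length + delay]
        samples.append((window[:seq_length], window[-1]))
    return samples


hours = list(range(10))  # toy series: value == hour index
samples = make_windows(hours, seq_length=3, delay=2)
print(len(samples))          # 10 - 3 - 2 = 5
print(samples[0])            # ([0, 1, 2], 4)
print(43800 - 5 * 24 - 24)   # 43656 windows for the real data
```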

# Convert the list of DataFrames into one 3-D array: (samples, 144 hours, 11 columns)

_data = np.array([df.values for df in _data_list])

_data.shape

(43656, 144, 11)

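# Split into training and test sets
x = _data[:, :seq_length, 1:]  # inputs: first 120 hours of each window, all columns except pm2.5 (column 0)
y = _data[:, -1, 0]  # target: pm2.5 at the last hour of the window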

X_train, X_test, y_train, y_test = train_test_split(x, y, test_size=0.2, random_state=1000)

print(X_train.shape)

print(y_train.shape)

(34924, 120, 10)

(34924,)
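The printed sizes follow from how train_test_split rounds. With test_size=0.2, the test count is rounded up and the rest goes to training. train_test_split also shuffles by default, so windows that overlap in time can end up in both sets. A chronological split (train on the earliest 80%, test on the rest) is a common alternative for time series. It is sketched here as a swap-in, not as the author's approach.

```python
import math

n_samples = 43656
n_test = math.ceil(0.2 * n_samples)  # the test share is rounded up
n_train = n_samples - n_test
print(n_train, n_test)  # 34924 8732


def chronological_split(samples, test_fraction=0.2):
    """Keep time order: earlier windows for training, later ones for testing."""
    n_test = math.ceil(test_fraction * len(samples))
    return samples[:len(samples) - n_test], samples[len(samples) - n_test:]


train, test = chronological_split(list(range(10)))
print(train, test)  # [0, 1, 2, 3, 4, 5, 6, 7] [8, 9]
```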

# Standardize the data using training-set statistics only

mean = X_train.mean(axis=0)

std = X_train.std(axis=0)

X_train = (X_train - mean)/std

X_test = (X_test - mean)/std
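The statistics are computed on the training set only and then reused for the test set (and later for the prediction input), so nothing from the test data leaks into the scaling. On a (samples, 120, 10) array, mean(axis=0) gives a separate mean for each time step and feature. Here is a pure-Python sketch of the same z-score idea for a single feature, with made-up values:

```python
import statistics


def fit_scaler(train_values):
    """Return mean and population std (like NumPy's default ddof=0)."""
    return statistics.fmean(train_values), statistics.pstdev(train_values)


def transform(values, mean, std):
    return [(v - mean) / std for v in values]


train = [2.0, 4.0, 4.0, 4.0, 5.0, 5.0, 7.0, 9.0]
test = [6.0, 1.0]
mean, std = fit_scaler(train)          # statistics from the training data only
print(mean, std)                       # 5.0 2.0
print(transform(test, mean, std))      # [0.5, -2.0]
```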

from tensorflow import keras

batch_size = 128
# Stacked LSTM (the data follows trends over time, which suits an LSTM)
model = keras.Sequential()
# model.add(keras.layers.LSTM(32, input_shape=X_train.shape[1:], return_sequences=True))
model.add(keras.layers.LSTM(32, return_sequences=True))  # four stacked LSTM layers, 32 units each
model.add(keras.layers.LSTM(32, return_sequences=True))  # return_sequences=True feeds the whole sequence to the next LSTM
model.add(keras.layers.LSTM(32, return_sequences=True))
model.add(keras.layers.LSTM(32, return_sequences=False))  # the last LSTM returns only the final time step
model.add(keras.layers.Dense(1))  # single regression output: pm2.5

# Optimization: lower the learning rate during training
# i.e. if val_loss has not improved for 3 consecutive epochs, set lr = lr * 0.5, but never below min_lr
lr_reduce = keras.callbacks.ReduceLROnPlateau(monitor='val_loss', patience=3, factor=0.5,
                                              min_lr=0.00001)
model.compile(optimizer='adam', loss='mse', metrics=['mae'])  # mae: mean absolute error
history = model.fit(X_train, y_train, batch_size=batch_size,
                    epochs=150,
                    callbacks=[lr_reduce],
                    validation_data=(X_test, y_test))

# model.save('./pm2.5.h5')

print(history.history.keys())
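Each of the four recurrent layers is an LSTM with 32 units. As a sanity check against model.summary(), the usual parameter count for a Keras LSTM with biases is 4 × (units × (input_dim + units) + units): four gates, each with input weights, recurrent weights and a bias. Below is a worked sketch for this stack, assuming 10 input features as in X_train:

```python
def lstm_params(units, input_dim):
    # 4 gates x (input kernel + recurrent kernel + bias)
    return 4 * (units * (input_dim + units) + units)


def dense_params(units, input_dim):
    return units * input_dim + units  # weights + bias


n_features = 10  # X_train.shape[-1]
layers = [lstm_params(32, n_features)] + [lstm_params(32, 32)] * 3 + [dense_params(1, 32)]
print(layers)       # [5504, 8320, 8320, 8320, 33]
print(sum(layers))  # 30497
```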

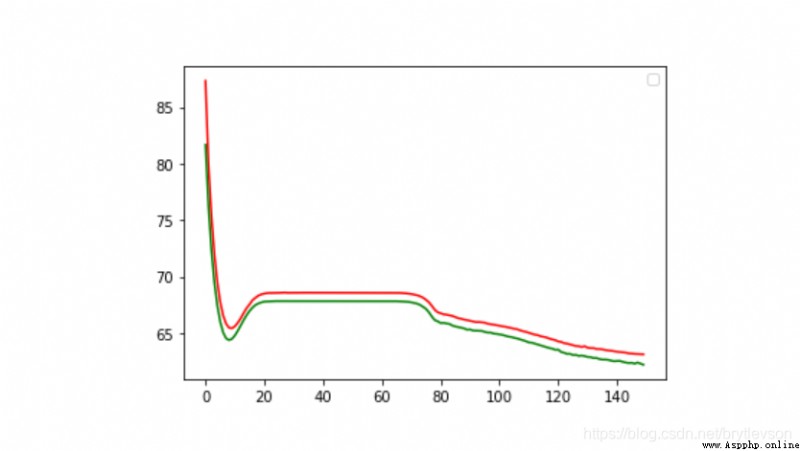

plt.plot(history.epoch, history.history['mae'], c='r', label='Training MAE')
plt.plot(history.epoch, history.history['val_mae'], c='g', label='Validation MAE')
plt.legend()  # the labels above give the legend something to show
plt.show()

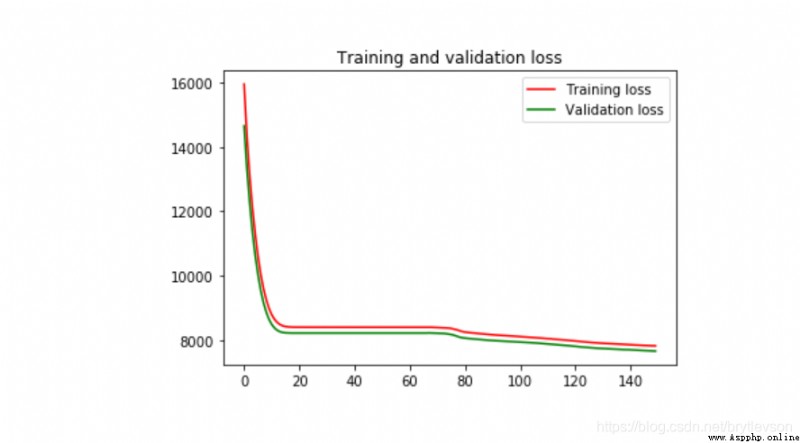

plt.plot(history.epoch, history.history['loss'], c='r', label='Training loss')

plt.plot(history.epoch, history.history['val_loss'], c='g', label='Validation loss')

plt.title('Training and validation loss')

plt.legend()

plt.show()

Epoch 1/150

273/273 [==============================] - 61s 206ms/step - loss: 16742.4334 - mae: 90.9238 - val_loss: 14646.6338 - val_mae: 81.6788

Epoch 2/150

273/273 [==============================] - 57s 209ms/step - loss: 14607.2083 - mae: 81.2521 - val_loss: 13331.6240 - val_mae: 76.2547

Epoch 3/150

273/273 [==============================] - 58s 212ms/step - loss: 13062.3841 - mae: 75.8968 - val_loss: 12259.8438 - val_mae: 72.3604

Epoch 4/150

273/273 [==============================] - 60s 218ms/step - loss: 12766.5135 - mae: 73.8100 - val_loss: 11373.2275 - val_mae: 69.4936

Epoch 5/150

273/273 [==============================] - 63s 230ms/step - loss: 11479.0624 - mae: 70.2214 - val_loss: 10639.9043 - val_mae: 67.4292

Epoch 6/150

273/273 [==============================] - 62s 228ms/step - loss: 10776.3757 - mae: 68.3468 - val_loss: 10039.1123 - val_mae: 65.9866

Epoch 7/150

273/273 [==============================] - 62s 227ms/step - loss: 10341.7646 - mae: 67.2075 - val_loss: 9554.6572 - val_mae: 65.0422

Epoch 8/150

273/273 [==============================] - 61s 224ms/step - loss: 9624.0328 - mae: 65.5107 - val_loss: 9170.4385 - val_mae: 64.5219

Epoch 9/150

273/273 [==============================] - 60s 221ms/step - loss: 9403.6663 - mae: 65.6162 - val_loss: 8875.7959 - val_mae: 64.3700

Epoch 10/150

273/273 [==============================] - 61s 222ms/step - loss: 9010.0623 - mae: 65.4931 - val_loss: 8653.1328 - val_mae: 64.4761

Epoch 11/150

273/273 [==============================] - 61s 224ms/step - loss: 8827.4738 - mae: 65.5057 - val_loss: 8494.2217 - val_mae: 64.7843

Epoch 12/150

273/273 [==============================] - 60s 220ms/step - loss: 8426.1633 - mae: 65.4281 - val_loss: 8383.6738 - val_mae: 65.2093

Epoch 13/150

273/273 [==============================] - 60s 220ms/step - loss: 8401.8991 - mae: 65.6818 - val_loss: 8311.4551 - val_mae: 65.6845

Epoch 14/150

273/273 [==============================] - 60s 220ms/step - loss: 8488.3962 - mae: 66.8363 - val_loss: 8268.0596 - val_mae: 66.1579

Epoch 15/150

273/273 [==============================] - 60s 220ms/step - loss: 8513.0347 - mae: 67.4366 - val_loss: 8243.9941 - val_mae: 66.5921

Epoch 16/150

273/273 [==============================] - 60s 222ms/step - loss: 8277.4878 - mae: 67.0897 - val_loss: 8232.0371 - val_mae: 66.9601

Epoch 17/150

273/273 [==============================] - 60s 220ms/step - loss: 8663.8273 - mae: 68.2389 - val_loss: 8226.9561 - val_mae: 67.2551

Epoch 18/150

273/273 [==============================] - 61s 222ms/step - loss: 8288.9277 - mae: 67.6184 - val_loss: 8225.4375 - val_mae: 67.4816

Epoch 19/150

273/273 [==============================] - 61s 224ms/step - loss: 8567.5850 - mae: 68.9895 - val_loss: 8225.4082 - val_mae: 67.6185

Epoch 20/150

273/273 [==============================] - 61s 222ms/step - loss: 8487.0704 - mae: 68.8503 - val_loss: 8225.7344 - val_mae: 67.7098

Epoch 21/150

273/273 [==============================] - 61s 223ms/step - loss: 8613.7959 - mae: 69.0857 - val_loss: 8226.1621 - val_mae: 67.7786

Epoch 22/150

273/273 [==============================] - 60s 220ms/step - loss: 8356.8230 - mae: 68.3529 - val_loss: 8226.2686 - val_mae: 67.7927

Epoch 23/150

273/273 [==============================] - 60s 219ms/step - loss: 8468.6951 - mae: 68.4023 - val_loss: 8226.3145 - val_mae: 67.7986

Epoch 24/150

273/273 [==============================] - 60s 221ms/step - loss: 8291.6117 - mae: 68.0808 - val_loss: 8226.4033 - val_mae: 67.8098

Epoch 25/150

273/273 [==============================] - 61s 223ms/step - loss: 8332.3899 - mae: 68.5825 - val_loss: 8226.5439 - val_mae: 67.8266

Epoch 26/150

273/273 [==============================] - 61s 222ms/step - loss: 8465.6008 - mae: 68.7638 - val_loss: 8226.5449 - val_mae: 67.8265

Epoch 27/150

273/273 [==============================] - 61s 225ms/step - loss: 8370.8415 - mae: 68.7813 - val_loss: 8226.5410 - val_mae: 67.8264

Epoch 28/150

273/273 [==============================] - 61s 225ms/step - loss: 8463.0080 - mae: 68.5800 - val_loss: 8226.5957 - val_mae: 67.8325

Epoch 29/150

273/273 [==============================] - 63s 231ms/step - loss: 8455.1988 - mae: 68.3467 - val_loss: 8226.5693 - val_mae: 67.8294

Epoch 30/150

273/273 [==============================] - 61s 222ms/step - loss: 8236.1620 - mae: 67.9002 - val_loss: 8226.5703 - val_mae: 67.8297

Epoch 31/150

273/273 [==============================] - 60s 219ms/step - loss: 8181.2296 - mae: 67.7665 - val_loss: 8226.5859 - val_mae: 67.8314

Epoch 32/150

273/273 [==============================] - 60s 219ms/step - loss: 8321.2633 - mae: 68.2151 - val_loss: 8226.5898 - val_mae: 67.8316

Epoch 33/150

273/273 [==============================] - 61s 222ms/step - loss: 8462.1091 - mae: 68.9432 - val_loss: 8226.6045 - val_mae: 67.8336

Epoch 34/150

273/273 [==============================] - 61s 224ms/step - loss: 8383.3842 - mae: 68.7199 - val_loss: 8226.5908 - val_mae: 67.8318

Epoch 35/150

273/273 [==============================] - 61s 224ms/step - loss: 8547.4360 - mae: 68.6443 - val_loss: 8226.5996 - val_mae: 67.8329

Epoch 36/150

273/273 [==============================] - 62s 227ms/step - loss: 8406.0428 - mae: 68.6697 - val_loss: 8226.5967 - val_mae: 67.8328

Epoch 37/150

273/273 [==============================] - 61s 225ms/step - loss: 8370.8651 - mae: 68.4359 - val_loss: 8226.5947 - val_mae: 67.8324

Epoch 38/150

273/273 [==============================] - 62s 226ms/step - loss: 8351.3386 - mae: 68.5521 - val_loss: 8226.5957 - val_mae: 67.8324

Epoch 39/150

273/273 [==============================] - 61s 222ms/step - loss: 8484.4870 - mae: 68.4776 - val_loss: 8226.5947 - val_mae: 67.8323

Epoch 40/150

273/273 [==============================] - 61s 222ms/step - loss: 8532.6639 - mae: 68.9514 - val_loss: 8226.5986 - val_mae: 67.8328

Epoch 41/150

273/273 [==============================] - 61s 225ms/step - loss: 8536.1996 - mae: 68.9368 - val_loss: 8226.5947 - val_mae: 67.8323

Epoch 42/150

273/273 [==============================] - 61s 222ms/step - loss: 8424.5842 - mae: 68.4884 - val_loss: 8226.5967 - val_mae: 67.8325

Epoch 43/150

273/273 [==============================] - 60s 221ms/step - loss: 8510.1979 - mae: 68.9535 - val_loss: 8226.5967 - val_mae: 67.8326

Epoch 44/150

273/273 [==============================] - 60s 220ms/step - loss: 8414.9673 - mae: 68.4775 - val_loss: 8226.5967 - val_mae: 67.8326

Epoch 45/150

273/273 [==============================] - 60s 221ms/step - loss: 8424.7148 - mae: 68.5667 - val_loss: 8226.5986 - val_mae: 67.8328

Epoch 46/150

273/273 [==============================] - 63s 231ms/step - loss: 8584.4803 - mae: 69.1215 - val_loss: 8226.5977 - val_mae: 67.8326

Epoch 47/150

273/273 [==============================] - 61s 224ms/step - loss: 8676.9013 - mae: 69.2567 - val_loss: 8226.5977 - val_mae: 67.8328

Epoch 48/150

273/273 [==============================] - 61s 224ms/step - loss: 8390.5315 - mae: 68.5066 - val_loss: 8226.5947 - val_mae: 67.8324

Epoch 49/150

273/273 [==============================] - 61s 224ms/step - loss: 8349.9124 - mae: 68.4460 - val_loss: 8226.5986 - val_mae: 67.8327

Epoch 50/150

273/273 [==============================] - 60s 221ms/step - loss: 8205.4177 - mae: 67.7604 - val_loss: 8226.5967 - val_mae: 67.8325

Epoch 51/150

273/273 [==============================] - 61s 223ms/step - loss: 8392.1621 - mae: 68.7458 - val_loss: 8226.5957 - val_mae: 67.8326

Epoch 52/150

273/273 [==============================] - 61s 222ms/step - loss: 8675.7586 - mae: 69.4464 - val_loss: 8226.5967 - val_mae: 67.8326

Epoch 53/150

273/273 [==============================] - 62s 226ms/step - loss: 8376.2547 - mae: 68.5158 - val_loss: 8226.5986 - val_mae: 67.8327

Epoch 54/150

273/273 [==============================] - 62s 227ms/step - loss: 8335.3379 - mae: 68.3225 - val_loss: 8226.5986 - val_mae: 67.8327

Epoch 55/150

273/273 [==============================] - 62s 226ms/step - loss: 8481.7657 - mae: 68.7639 - val_loss: 8226.5986 - val_mae: 67.8328

Epoch 56/150

273/273 [==============================] - 63s 230ms/step - loss: 8512.7698 - mae: 68.5890 - val_loss: 8226.5957 - val_mae: 67.8325

Epoch 57/150

273/273 [==============================] - 63s 230ms/step - loss: 8455.3750 - mae: 68.6572 - val_loss: 8226.5977 - val_mae: 67.8328

Epoch 58/150

273/273 [==============================] - 61s 223ms/step - loss: 8470.7624 - mae: 68.9075 - val_loss: 8226.5986 - val_mae: 67.8327

Epoch 59/150

273/273 [==============================] - 60s 221ms/step - loss: 8479.7735 - mae: 68.9081 - val_loss: 8226.5947 - val_mae: 67.8323

Epoch 60/150

273/273 [==============================] - 61s 224ms/step - loss: 8234.5817 - mae: 68.7015 - val_loss: 8226.6016 - val_mae: 67.8330

Epoch 61/150

273/273 [==============================] - 60s 222ms/step - loss: 8461.2091 - mae: 68.7669 - val_loss: 8226.5986 - val_mae: 67.8327

Epoch 62/150

273/273 [==============================] - 60s 222ms/step - loss: 8458.8530 - mae: 68.7402 - val_loss: 8226.5977 - val_mae: 67.8327

Epoch 63/150

273/273 [==============================] - 62s 226ms/step - loss: 8587.8940 - mae: 68.6250 - val_loss: 8226.6006 - val_mae: 67.8331

Epoch 64/150

273/273 [==============================] - 62s 226ms/step - loss: 8602.9138 - mae: 68.8073 - val_loss: 8226.5957 - val_mae: 67.8328

Epoch 65/150

273/273 [==============================] - 61s 224ms/step - loss: 8389.7711 - mae: 68.1815 - val_loss: 8226.5078 - val_mae: 67.8320

Epoch 66/150

273/273 [==============================] - 62s 225ms/step - loss: 8585.8489 - mae: 69.0446 - val_loss: 8225.7852 - val_mae: 67.8145

Epoch 67/150

273/273 [==============================] - 62s 228ms/step - loss: 8323.5853 - mae: 68.4999 - val_loss: 8225.4238 - val_mae: 67.8042

Epoch 68/150

273/273 [==============================] - 62s 228ms/step - loss: 8545.9198 - mae: 68.8307 - val_loss: 8228.8105 - val_mae: 67.8080

Epoch 69/150

273/273 [==============================] - 62s 226ms/step - loss: 8262.9887 - mae: 67.9555 - val_loss: 8223.8291 - val_mae: 67.7737

Epoch 70/150

273/273 [==============================] - 61s 224ms/step - loss: 8656.3001 - mae: 69.2458 - val_loss: 8222.8906 - val_mae: 67.7547

Epoch 71/150

273/273 [==============================] - 61s 224ms/step - loss: 8336.8825 - mae: 68.4149 - val_loss: 8220.6260 - val_mae: 67.7138

Epoch 72/150

273/273 [==============================] - 61s 224ms/step - loss: 8477.9109 - mae: 68.8455 - val_loss: 8218.2393 - val_mae: 67.6565

Epoch 73/150

273/273 [==============================] - 62s 226ms/step - loss: 8585.9266 - mae: 68.7750 - val_loss: 8214.5684 - val_mae: 67.5979

Epoch 74/150

273/273 [==============================] - 61s 224ms/step - loss: 8216.9939 - mae: 67.7797 - val_loss: 8208.9502 - val_mae: 67.4845

Epoch 75/150

273/273 [==============================] - 61s 224ms/step - loss: 8393.6422 - mae: 68.2826 - val_loss: 8196.3428 - val_mae: 67.3194

Epoch 76/150

273/273 [==============================] - 61s 225ms/step - loss: 8130.0958 - mae: 67.4432 - val_loss: 8181.2900 - val_mae: 67.1320

Epoch 77/150

273/273 [==============================] - 82s 300ms/step - loss: 8471.2442 - mae: 67.8909 - val_loss: 8160.0679 - val_mae: 66.8343

Epoch 78/150

273/273 [==============================] - 61s 222ms/step - loss: 8297.1601 - mae: 67.2958 - val_loss: 8129.6997 - val_mae: 66.4568

Epoch 79/150

273/273 [==============================] - 60s 221ms/step - loss: 8331.4552 - mae: 67.1481 - val_loss: 8101.7114 - val_mae: 66.1418

Epoch 80/150

273/273 [==============================] - 61s 222ms/step - loss: 8084.1070 - mae: 66.4432 - val_loss: 8080.2290 - val_mae: 66.0347

Epoch 81/150

273/273 [==============================] - 61s 223ms/step - loss: 8396.0239 - mae: 67.0763 - val_loss: 8069.1743 - val_mae: 65.8770

Epoch 82/150

273/273 [==============================] - 60s 219ms/step - loss: 8185.0534 - mae: 66.4298 - val_loss: 8060.0220 - val_mae: 65.9004

Epoch 83/150

273/273 [==============================] - 60s 220ms/step - loss: 8303.1067 - mae: 66.9463 - val_loss: 8052.3354 - val_mae: 65.8416

Epoch 84/150

273/273 [==============================] - 61s 223ms/step - loss: 8047.7834 - mae: 65.8863 - val_loss: 8045.4146 - val_mae: 65.8027

Epoch 85/150

273/273 [==============================] - 61s 222ms/step - loss: 8329.9191 - mae: 66.8130 - val_loss: 8035.4189 - val_mae: 65.6562

Epoch 86/150

273/273 [==============================] - 60s 220ms/step - loss: 8186.9528 - mae: 66.5324 - val_loss: 8027.5225 - val_mae: 65.5805

Epoch 87/150

273/273 [==============================] - 60s 221ms/step - loss: 8057.1022 - mae: 65.9535 - val_loss: 8019.4668 - val_mae: 65.5211

Epoch 88/150

273/273 [==============================] - 61s 223ms/step - loss: 8341.6970 - mae: 66.4929 - val_loss: 8012.3262 - val_mae: 65.4549

Epoch 89/150

273/273 [==============================] - 61s 225ms/step - loss: 8027.3912 - mae: 65.7953 - val_loss: 8005.6152 - val_mae: 65.4042

Epoch 90/150

273/273 [==============================] - 61s 225ms/step - loss: 8064.1161 - mae: 66.0203 - val_loss: 7999.6040 - val_mae: 65.2895

Epoch 91/150

273/273 [==============================] - 62s 226ms/step - loss: 8208.3036 - mae: 66.3922 - val_loss: 7995.8965 - val_mae: 65.3147

Epoch 92/150

273/273 [==============================] - 60s 221ms/step - loss: 8032.6061 - mae: 65.7072 - val_loss: 7989.3223 - val_mae: 65.2171

Epoch 93/150

273/273 [==============================] - 61s 222ms/step - loss: 7989.7291 - mae: 65.4608 - val_loss: 7983.4604 - val_mae: 65.2041

Epoch 94/150

273/273 [==============================] - 61s 224ms/step - loss: 8133.9053 - mae: 66.1046 - val_loss: 7980.9595 - val_mae: 65.1901

Epoch 95/150

273/273 [==============================] - 62s 226ms/step - loss: 7963.0258 - mae: 65.6962 - val_loss: 7975.8901 - val_mae: 65.1806

Epoch 96/150

273/273 [==============================] - 62s 226ms/step - loss: 8335.9137 - mae: 66.2708 - val_loss: 7970.1880 - val_mae: 65.0943

Epoch 97/150

273/273 [==============================] - 79s 290ms/step - loss: 8136.6836 - mae: 66.1052 - val_loss: 7964.5376 - val_mae: 65.0385

Epoch 98/150

273/273 [==============================] - 61s 224ms/step - loss: 8103.1769 - mae: 65.9585 - val_loss: 7962.0000 - val_mae: 65.0376

Epoch 99/150

273/273 [==============================] - 60s 218ms/step - loss: 8051.2684 - mae: 65.7560 - val_loss: 7956.1284 - val_mae: 64.9462

Epoch 100/150

273/273 [==============================] - 61s 222ms/step - loss: 8102.4803 - mae: 65.4313 - val_loss: 7951.5518 - val_mae: 64.9178

Epoch 101/150

273/273 [==============================] - 61s 222ms/step - loss: 8274.3461 - mae: 65.8671 - val_loss: 7946.9150 - val_mae: 64.8726

Epoch 102/150

273/273 [==============================] - 61s 223ms/step - loss: 8052.9716 - mae: 65.5578 - val_loss: 7943.2925 - val_mae: 64.8318

Epoch 103/150

273/273 [==============================] - 61s 224ms/step - loss: 8165.8697 - mae: 65.6790 - val_loss: 7936.5962 - val_mae: 64.7525

Epoch 104/150

273/273 [==============================] - 61s 223ms/step - loss: 8129.4666 - mae: 65.5902 - val_loss: 7931.2388 - val_mae: 64.6802

Epoch 105/150

273/273 [==============================] - 61s 222ms/step - loss: 8077.7160 - mae: 65.1185 - val_loss: 7925.7744 - val_mae: 64.6451

Epoch 106/150

273/273 [==============================] - 66s 241ms/step - loss: 8382.6036 - mae: 66.0239 - val_loss: 7919.8882 - val_mae: 64.5722

Epoch 107/150

273/273 [==============================] - 70s 256ms/step - loss: 8135.8677 - mae: 65.2656 - val_loss: 7916.8335 - val_mae: 64.5181

Epoch 108/150

273/273 [==============================] - 71s 258ms/step - loss: 8049.9404 - mae: 65.0456 - val_loss: 7908.3511 - val_mae: 64.4399

Epoch 109/150

273/273 [==============================] - 72s 264ms/step - loss: 7939.6620 - mae: 65.1306 - val_loss: 7901.5796 - val_mae: 64.3617

Epoch 110/150

273/273 [==============================] - 70s 255ms/step - loss: 8059.7353 - mae: 65.2351 - val_loss: 7894.9629 - val_mae: 64.2769

Epoch 111/150

273/273 [==============================] - 71s 259ms/step - loss: 8078.8482 - mae: 64.9532 - val_loss: 7889.0737 - val_mae: 64.1602

Epoch 112/150

273/273 [==============================] - 71s 259ms/step - loss: 8203.2952 - mae: 65.1534 - val_loss: 7881.8872 - val_mae: 64.1428

Epoch 113/150

273/273 [==============================] - 71s 260ms/step - loss: 8021.4052 - mae: 64.7163 - val_loss: 7875.6646 - val_mae: 64.0615

Epoch 114/150

273/273 [==============================] - 71s 258ms/step - loss: 8201.4341 - mae: 65.2901 - val_loss: 7867.0938 - val_mae: 63.9763

Epoch 115/150

273/273 [==============================] - 72s 263ms/step - loss: 8111.1570 - mae: 64.7002 - val_loss: 7860.6860 - val_mae: 63.8970

Epoch 116/150

273/273 [==============================] - 69s 253ms/step - loss: 7792.6684 - mae: 64.3929 - val_loss: 7853.4888 - val_mae: 63.8189

Epoch 117/150

273/273 [==============================] - 68s 250ms/step - loss: 8090.4443 - mae: 64.7919 - val_loss: 7846.6753 - val_mae: 63.7234

Epoch 118/150

273/273 [==============================] - 68s 250ms/step - loss: 8018.4777 - mae: 64.5421 - val_loss: 7838.5083 - val_mae: 63.6637

Epoch 119/150

273/273 [==============================] - 70s 255ms/step - loss: 8078.1974 - mae: 64.7491 - val_loss: 7832.1821 - val_mae: 63.6111

Epoch 120/150

273/273 [==============================] - 69s 252ms/step - loss: 7839.0215 - mae: 63.8854 - val_loss: 7820.8467 - val_mae: 63.5104

Epoch 121/150

273/273 [==============================] - 68s 249ms/step - loss: 7987.1598 - mae: 64.1657 - val_loss: 7815.5684 - val_mae: 63.5297

Epoch 122/150

273/273 [==============================] - 68s 248ms/step - loss: 8036.7563 - mae: 64.6019 - val_loss: 7803.7461 - val_mae: 63.3131

Epoch 123/150

273/273 [==============================] - 68s 250ms/step - loss: 7949.9265 - mae: 63.8457 - val_loss: 7795.9517 - val_mae: 63.2526

Epoch 124/150

273/273 [==============================] - 71s 261ms/step - loss: 8143.0449 - mae: 64.3155 - val_loss: 7789.0024 - val_mae: 63.1432

Epoch 125/150

273/273 [==============================] - 68s 250ms/step - loss: 8140.8686 - mae: 64.2501 - val_loss: 7782.2739 - val_mae: 63.1526

Epoch 126/150

273/273 [==============================] - 69s 253ms/step - loss: 8049.5511 - mae: 64.2005 - val_loss: 7775.1011 - val_mae: 63.0455

Epoch 127/150

273/273 [==============================] - 69s 251ms/step - loss: 7764.5967 - mae: 63.6608 - val_loss: 7768.4590 - val_mae: 63.0593

Epoch 128/150

273/273 [==============================] - 67s 245ms/step - loss: 7836.4137 - mae: 63.7229 - val_loss: 7761.5576 - val_mae: 62.9700

Epoch 129/150

273/273 [==============================] - 67s 246ms/step - loss: 7844.0585 - mae: 63.6464 - val_loss: 7756.0679 - val_mae: 63.0015

Epoch 130/150

273/273 [==============================] - 66s 244ms/step - loss: 7920.9684 - mae: 64.0747 - val_loss: 7753.7666 - val_mae: 62.9503

Epoch 131/150

273/273 [==============================] - 68s 248ms/step - loss: 7833.7799 - mae: 63.6380 - val_loss: 7747.2690 - val_mae: 62.8746

Epoch 132/150

273/273 [==============================] - 68s 248ms/step - loss: 7904.2761 - mae: 63.7532 - val_loss: 7742.7090 - val_mae: 62.8583

Epoch 133/150

273/273 [==============================] - 69s 254ms/step - loss: 8047.6140 - mae: 63.9913 - val_loss: 7737.4053 - val_mae: 62.7785

Epoch 134/150

273/273 [==============================] - 67s 246ms/step - loss: 7943.3913 - mae: 63.6909 - val_loss: 7732.6128 - val_mae: 62.7872

Epoch 135/150

273/273 [==============================] - 68s 248ms/step - loss: 7937.4888 - mae: 63.6617 - val_loss: 7728.6724 - val_mae: 62.6928

Epoch 136/150

273/273 [==============================] - 62s 228ms/step - loss: 7955.2694 - mae: 63.7793 - val_loss: 7724.0063 - val_mae: 62.6431

Epoch 137/150

273/273 [==============================] - 62s 226ms/step - loss: 8021.3685 - mae: 63.9636 - val_loss: 7719.7700 - val_mae: 62.6444

Epoch 138/150

273/273 [==============================] - 61s 225ms/step - loss: 7850.8130 - mae: 63.3130 - val_loss: 7714.8394 - val_mae: 62.6192

Epoch 139/150

273/273 [==============================] - 61s 225ms/step - loss: 7899.5321 - mae: 63.5370 - val_loss: 7710.7456 - val_mae: 62.5520

Epoch 140/150

273/273 [==============================] - 61s 222ms/step - loss: 8138.2508 - mae: 64.0794 - val_loss: 7711.3955 - val_mae: 62.5012

Epoch 141/150

273/273 [==============================] - 60s 222ms/step - loss: 7783.9759 - mae: 63.3851 - val_loss: 7705.9009 - val_mae: 62.5233

Epoch 142/150

273/273 [==============================] - 61s 223ms/step - loss: 7844.7223 - mae: 63.2917 - val_loss: 7701.9321 - val_mae: 62.5271

Epoch 143/150

273/273 [==============================] - 60s 220ms/step - loss: 7773.0852 - mae: 62.9951 - val_loss: 7697.3213 - val_mae: 62.4414

Epoch 144/150

273/273 [==============================] - 61s 222ms/step - loss: 7961.3421 - mae: 63.7030 - val_loss: 7691.9458 - val_mae: 62.3815

Epoch 145/150

273/273 [==============================] - 60s 222ms/step - loss: 7652.2020 - mae: 62.5647 - val_loss: 7687.1699 - val_mae: 62.3395

Epoch 146/150

273/273 [==============================] - 61s 224ms/step - loss: 7828.0561 - mae: 63.0869 - val_loss: 7683.5947 - val_mae: 62.3607

Epoch 147/150

273/273 [==============================] - 60s 220ms/step - loss: 7741.2522 - mae: 62.8325 - val_loss: 7679.0864 - val_mae: 62.2841

Epoch 148/150

273/273 [==============================] - 60s 222ms/step - loss: 7799.2858 - mae: 62.9819 - val_loss: 7680.0063 - val_mae: 62.3857

Epoch 149/150

273/273 [==============================] - 60s 222ms/step - loss: 7844.7404 - mae: 63.3468 - val_loss: 7672.7368 - val_mae: 62.2881

Epoch 150/150

273/273 [==============================] - 61s 224ms/step - loss: 7807.6913 - mae: 62.7041 - val_loss: 7667.2842 - val_mae: 62.1901

No handles with labels found to put in legend.

dict_keys(['loss', 'mae', 'val_loss', 'val_mae', 'lr'])
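The 'lr' entry appears in history because the ReduceLROnPlateau callback logs the current learning rate each epoch. Below is a simplified pure-Python model of its rule. It assumes the settings used here (patience=3, factor=0.5, min_lr=1e-5), starts from Adam's default learning rate of 0.001, and ignores the real callback's min_delta and cooldown options. Whenever val_loss fails to improve on its best value for 3 epochs in a row, the learning rate is halved, but never below min_lr. The val_loss sequence is made up for illustration.

```python
def simulate_plateau(val_losses, lr=1e-3, patience=3, factor=0.5, min_lr=1e-5):
    """Simplified ReduceLROnPlateau: no min_delta, no cooldown (assumption)."""
    best = float('inf')
    wait = 0
    lrs = []
    for loss in val_losses:
        if loss < best:
            best, wait = loss, 0  # improvement resets the counter
        else:
            wait += 1
            if wait >= patience:
                lr = max(lr * factor, min_lr)  # halve, but respect the floor
                wait = 0
        lrs.append(lr)
    return lrs


losses = [10.0, 9.0, 9.5, 9.4, 9.2, 8.0, 8.1, 8.2, 8.3]  # hypothetical val_loss values
print(simulate_plateau(losses))
print(simulate_plateau([1.0] * 7, lr=2e-5))  # stops at min_lr once the floor is reached
```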

# Prediction 1
predict = model.predict(X_test[:5])
print('Predicted:', predict)
print('Actual:', y_test[:5])

Predicted: [[ 98.9861  ]
 [ 55.642155]
 [102.504036]
 [ 58.44735 ]
 [101.403114]]
Actual: [ 86.  89. 151.  41. 121.]
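To express these five predictions in the same units as the mae metric reported during training, their mean absolute error can be computed directly from the printed values:

```python
predicted = [98.9861, 55.642155, 102.504036, 58.44735, 101.403114]
actual = [86.0, 89.0, 151.0, 41.0, 121.0]

errors = [abs(p - a) for p, a in zip(predicted, actual)]
mae = sum(errors) / len(errors)
print(round(mae, 2))  # 26.38
```

Five samples are far too few to judge the model. The final val_mae in the training log (about 62.19) is the more representative figure.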

# Prediction 2: use the last five days of data to predict the value one day later
data_test = pd.read_csv('./dataset/data.csv')
# One-hot encode cbwd on the full file so every wind direction gets a column,
# even if some direction does not occur in the last 120 rows
data_test = data_test.join(pd.get_dummies(data_test['cbwd'], dtype=float))
del data_test['cbwd']
# Last 120 hours, feature columns only (DEWP onwards plus the wind-direction dummies),
# in the same column order as the training inputs x
data_test = data_test.iloc[-120:, 6:]
# print(data_test)

# Normalize with the training-set mean and std
data_test = (data_test.to_numpy(dtype=float) - mean) / std
print(data_test.shape)

# Add a batch dimension: (120, 10) -> (1, 120, 10)
# data_test_expand = np.expand_dims(data_test, 0)
data_test_expand = data_test.reshape((1,) + data_test.shape)
print(data_test_expand.shape)

# load_model = keras.models.load_model('./pm2.5.h5')
# predict1 = load_model.predict(data_test_expand)
predict1 = model.predict(data_test_expand)
print('Beijing PM2.5 prediction (24 hours after the last input hour):', predict1)

(120, 10)
(1, 120, 10)
Beijing PM2.5 prediction (24 hours after the last input hour): [[58.903873]]
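To see which hour this single prediction refers to, one can count hours from the start of the file. This sketch assumes, without checking, that data.csv begins at 2010-01-01 00:00 and has exactly one row per hour. Under that assumption, the last of its 43824 rows is the final input hour. The target lies delay = 24 hours later, because during training y was the last row of each 144-hour window.

```python
from datetime import datetime, timedelta

start = datetime(2010, 1, 1, 0)  # assumed first timestamp in data.csv
n_rows = 43824                   # from data.info() above
last_input = start + timedelta(hours=n_rows - 1)
target = last_input + timedelta(hours=24)  # delay = 24
print(last_input, target)  # 2014-12-31 23:00:00 2015-01-01 23:00:00
```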

Comparison with the real data
