智能問答系統(對話系統)application is very common,such as customer service,前台機器人,It may be used in many scenarios such as explaining robotsFAQ問答系統.所謂的FAQ就是 frequently asked questions,That is to say, in a certain situation,Algorithms can answer some of the more common questions.

Integrate the Winter Olympics knowledge data provided by the data party,The input side accepts input in natural language(For example, ask in Chinese“China wins gold medals at Sochi Winter Olympics”),The output terminal outputs the corresponding answer to the question.

There are two packets:標注數據1和標注數據2.標注數據1The problem of data for the single layer,標注數據2The data in is an overlay problem.

問句:Which Olympic Games has the most gold medals?

分詞:哪 一屆 奧運會 的 金牌 總數 最 多 ?

# 1問句類型:Which多選一

# 1領域類型:Competition比賽

# 1語義類型:Calculation計算

# 2問句類型:NA

# 2領域類型:NA

# 2語義類型:NA

問句:Is the first Chinese Olympic man worthy of respect??

分詞:中國 奧運 第一 人 值得 尊敬 嗎 ?

# 1問句類型:Who

# 1領域類型:Competition

# 1語義類型:Factoid

# 2問句類型:Whether

# 2領域類型:Competition

# 2語義類型:Opinion

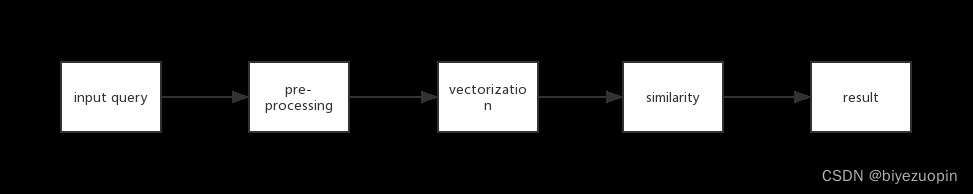

For a complete dialogue systemFAQ的構建,The first step is to preprocess the input question.The main thing that needs to be done in preprocessing is to delete useless text,去除停用詞,Divide the question into Chinese words.

The second step is to vectorize the processed corpus.Common vectorization methods include word frequency vectorization、word2vec、tf-idf 等方法.向量化之後,Each question corresponds to a high-dimensional vector,When a question is entered,Preprocess the problem first、向量化,And then the data set of data,Output the answer to the question with the highest similarity,This is the general framework of the retrieval dialogue system.

查看測試集,Found some questions unanswered,So the first step in preprocessing is to remove unanswered questions.The second step of preprocessing is to delete text that does not belong to Chinese(包括各種標點符號).The third step of preprocessing is to segment the revised text into words,break the whole sentence into words.

使用CountVectorizerGenerate word frequency matrix for each corpus,So as to complete the spatial vectorization of the corpus.

Compare the similarity between the corpus in the database and the vectorized vector of the input question.Here we use the cosine similarity comparison algorithm.After the comparison is complete,Return the answer for the corpus with the highest score.

The following is the specific implementation of the entire processing process:

Cleaning up non-Chinese useless data,The following categories of data need to be removed from the training and test sets:

html = re.compile('<.*?>')

http = re.compile(r'http[s]?://(?:[a-zA-Z]|[0-9]|[[email protected]&+]|[!*\(\),]|(?:%[0-9a-fA-F][0-9a-f

A-F]))+')

src = re.compile(r'\b(?!src|href)\w+=[\'\"].*?[\'\"](?=[\s\>])')

space = re.compile(r'[\\][n]')

ids = re.compile('[(]["微信"]?id:(.*?)[)]')

wopen = re.compile('window.open[(](.*?)[)]')

english = re.compile('[a-zA-Z]')

others= re.compile(u'[^\u4e00-\u9fa5\u0041-\u005A\u0061-\u007A\u0030-\u0039\u3002\uFF1F\uFF01\uFF0C\u3001\uFF1B\uFF1A\u300C\u300D\u300E\u300F\u2018\u2019\u201C\u201D\uFF08\uFF09\u3014\u3015\u3010\u3011\u2014\u2026\u2013\uFF0E\u300A\u300B\u3008\u3009\!\@\#\$\%\^\&\*\(\)\-\=\[\]\{\}\\\|\;\'\:\"\,\.\/\<\>\?\/\*\+\_"\u0020]+')

需要將html鏈接、數據來源、用戶名、English characters and other single non-Chinese characters clear,Use the above regular expression to describe the categories that need to be deleted,配合subcommand to remove it from training set and dataset.Due to the better quality of the data,This step does very little processing of the source data.

There are two ways to divide the data:Keep stop words and remove stop words.The library used for data partitioning isjieba分詞,具體的操作如下:

Question.txt和Answer.txtSeparate questions and answers,After preprocessing, output toQuestionSeg.txt和AnswerSeg.txt.

inputQ = open('Question.txt', 'r', encoding='gbk')

outputQ = open('QuestionSeg.txt', 'w', encoding='gbk')

inputA = open('Answer.txt', 'r', encoding='gbk')

outputA = open('AnswerSeg.txt', 'w', encoding='gbk')

Let's first look at the division of reserved stop words:

def segmentation(sentence):

sentence_seg = jieba.cut(sentence.strip())

out_string = ''

for word in sentence_seg:

out_string += word

out_string += " "

return out_string

for line in inputQ:

line_seg = segmentation(line)

outputQ.write(line_seg + '\n')

outputQ.close()

inputQ.close()

for line in inputA:

line_seg = segmentation(line)

outputA.write(line_seg + '\n')

outputA.close()

inputA.close()

Next, let's look at the division method of not retaining stop words,The test results show that retaining stopwords is more effective for partitioning,查閱資料

顯示原因為CountVectorizerThe current version optimizes stop words better.

def stopword_list():

stopwords = [line.strip() for line in open('stopword.txt', encoding='utf-8').readlines()]

return stopwords

def seg_with_stop(sentence):

sentence_seg = jieba.cut(sentence.strip())

stopwords = stopword_list()

out_string = ''

for word in sentence_seg:

if word not in stopwords:

if word != '\t':

out_string += word

out_string += " "

return out_string

for line in inputQ:

line_seg = seg_with_stop(line)

outputQ.write(line_seg + '\n')

outputQ.close()

inputQ.close()

for line in inputA:

line_seg = seg_with_stop(line)

outputA.write(line_seg + '\n')

outputA.close()

inputA.close()

經過以上兩步,We have successfully segmented the text into separate Chinese words,Next, we need to count the frequency and distribution of each word..

stopwords = [line.strip() for line in open('stopword.txt',encoding='utf-8').readlines()]

First you need to get the stop word list.Here we use Baidu stop word list、哈工大停用詞表、Comprehensive results of multiple word lists such as Chinese stop word lists.

CountVectorizer是通過fit_transform函數將文本中的詞語轉換為詞頻矩陣,矩陣元素a[i][j] 表示j詞在第i個文本下的詞頻.即各個詞語出現的次數,通過get_feature_names()可看到所有文本的關鍵字,通過toarray()可看到詞頻矩陣的結果.

count_vec = CountVectorizer()

First perform word frequency statistics on the text content.需要說明的是,Due to the poor first edition code optimization,No saving of vectorized data is done,After each input question, the entire corpus needs to be vectorized and recalculated,Long query time.

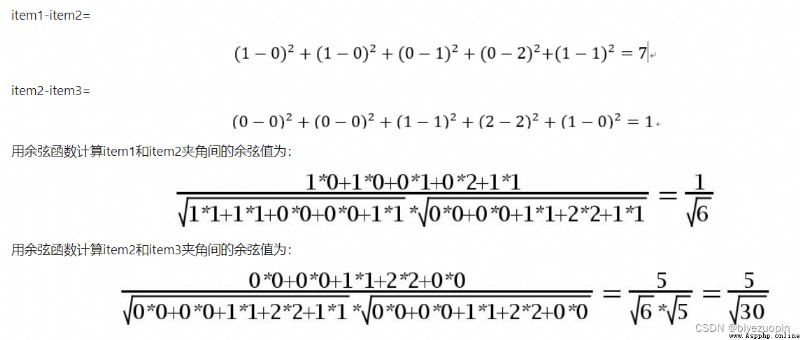

余弦相似度量:Calculate the similarity between individuals.

相似度越小,距離越大.相似度越大,距離越小.

假設有3個物品,item1,item2和item3,represented by a vector as:

item1[1,1,0,0,1],

item2[0,0,1,2,1],

item3[0,0,1,2,0],

in five-dimensional space3個點.Calculated using the Euclidean distance formulaitem1、itme2之間的距離,以及item2和item3之間的距離,分別是:

由此可得出item1和item2相似度小,The distance between the two is large(距離為7),item2和itme3相似度大,The distance between the two is small(距離為1).

余弦相似度算法:The cosine value of the angle between two vectors in a vector space is used as a measure of the difference between two individuals,Cosine is close to1,The angle tends to0,表明兩個向量越相似,The cosine is close to0,The angle tends to90度,Indicates that the less similar the two vectors are.

Based on cosine similarity algorithm,We compare the vector of the input question with the vector of the corpus in the database one by one,The output has the highest similarity(最接近1)corpus of answers.

Cosine similarity calculation function:

def count_cos_similarity(vec_1, vec_2):

if len(vec_1) != len(vec_2):

return 0

s = sum(vec_1[i] * vec_2[i] for i in range(len(vec_2)))

den1 = math.sqrt(sum([pow(number, 2) for number in vec_1]))

den2 = math.sqrt(sum([pow(number, 2) for number in vec_2]))

return s / (den1 * den2)

Calculate the cosine similarity of two sentences

def cos_sim(sentence1, sentence2):

sentences = [sentence1, sentence2]

vec_1 = count_vec.fit_transform(sentences).toarray()[0] #input problem vectorization

vec_2 = count_vec.fit_transform(sentences).toarray()[1] #Corpus to quantify

return count_cos_similarity(vec_1, vec_2)

def get_answer(sentence1):

sentence1 = segmentation(sentence1)

score = []

for idx, sentence2 in enumerate(open('QuestionSeg.txt', 'r')):

# print('idx: {}, sentence2: {}'.format(idx, sentence2))

# print('idx: {}, cos_sim: {}'.format(idx, cos_sim(sentence1, sentence2)))

score.append(cos_sim(sentence1, sentence2))

if len(set(score)) == 1:

print('Couldn't find the answer you were looking for at the moment.')

else:

index = score.index(max(score))

file = open('Answer.txt', 'r').readlines()

print(file[index])

while True:

sentence1 = input('Please enter the question you need to ask(輸入q退出):\n')

if sentence1 == 'q':

break

else:

get_answer(sentence1)

To improve the performance of the question answering system,We did not split the dataset in this experiment,Instead, use all the problems to train the model.

We test against tricky questions from several angles,結果如下:

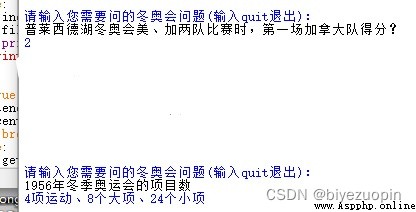

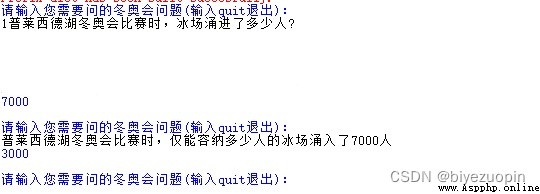

Testing for high similarity problems:

Screenshot of original question and answer and test result:

During the Lake Placid Winter Olympics,僅能容納3000people's ice rink,How many people came in at once?

答案:7000

由此可見,The retrieval system can still make a clear distinction between questions with high similarity.

Questions for some missing questions:

Screenshot of original question and answer and test result:

Lake Placid Winter Olympics Beauty、When adding two teams,The first U.S. teaml:how many defeats?

答案:2

What is the number of events in the Winter Olympics in Cortina d'Ampezzo??

答案:4項運動、8個大項、24個小項

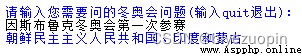

What did the 2019 Innsbruck Winter Olympics take part in for the first time??

答案:朝鮮民主主義人民共和國、India and Mongolia