Hello everyone, I'm Brother Spicy~

In earlier posts we covered a lot of basics. Today I'll share something more substantial. If your foundation is still shaky, go carefully so this doesn't dampen your enthusiasm~


Development tool: PyCharm

Development environment: Python 3.7, Windows 10

Toolkit used: requests

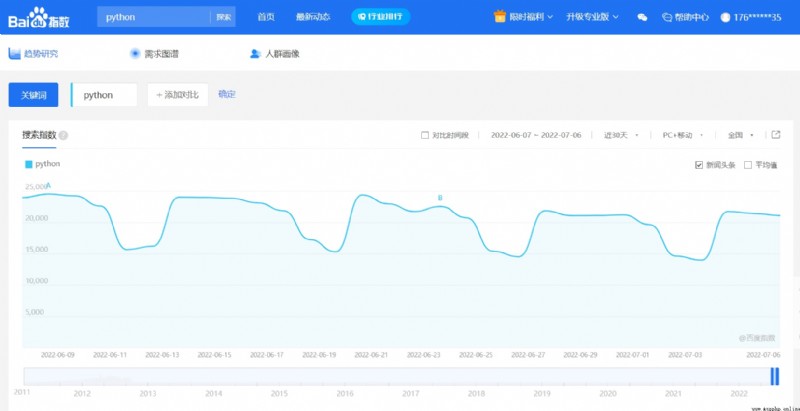

The goal is to fetch, with code, the index data drawn as the curve on this page.

Hovering over the curve shows only a single point; we need the data for every point.

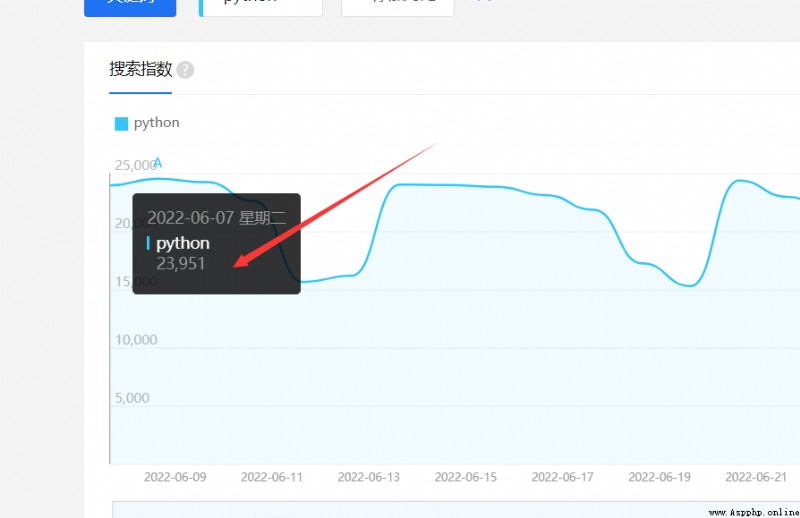

Data on a page is either static or dynamic, so first work out which kind we are dealing with. Right-click the page, choose View Page Source, and search the source for a value shown on the chart to see whether it exists in the static HTML.

The value cannot be found in the page source, so the data we want is loaded dynamically.
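The same check can be scripted. Below is a minimal sketch using only the standard library: `fetch_page_source` downloads the raw HTML (what View Page Source shows, with no JavaScript executed) and `is_static` looks for a value you read off the chart. Both are hypothetical helpers, not part of any library, and checking a comma-free spelling is an assumption about how numbers might be formatted on the page.

```python
import urllib.request


def fetch_page_source(url: str) -> str:
    """Download the raw HTML of a page, as "View Page Source" shows it.

    No JavaScript runs here, so anything loaded later via XHR is absent.
    """
    req = urllib.request.Request(url, headers={"User-Agent": "Mozilla/5.0"})
    with urllib.request.urlopen(req, timeout=10) as resp:
        charset = resp.headers.get_content_charset() or "utf-8"
        return resp.read().decode(charset, errors="replace")


def is_static(html: str, sample_value: str) -> bool:
    """Return True if a value seen on the rendered page also appears in the raw HTML.

    Assumption: the chart may display "12,345" while the source stores "12345",
    so both spellings are checked.
    """
    candidates = {sample_value, sample_value.replace(",", "")}
    return any(c in html for c in candidates)
```

If `is_static(fetch_page_source(page_url), some_value)` returns `False` for values you can clearly see on the chart (where `page_url` and `some_value` are whatever you are testing), the data is most likely loaded dynamically.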

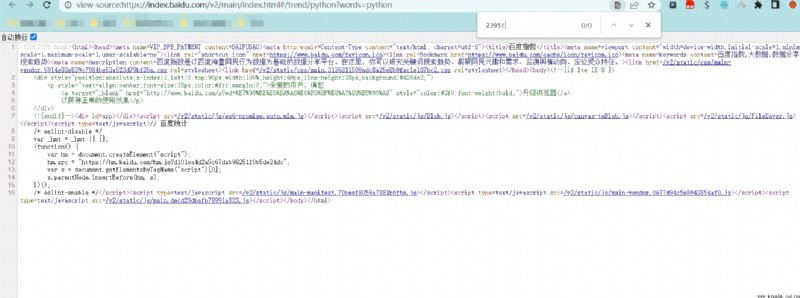

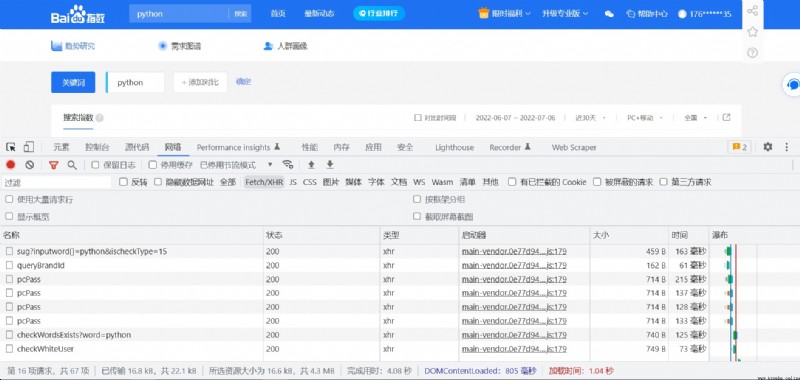

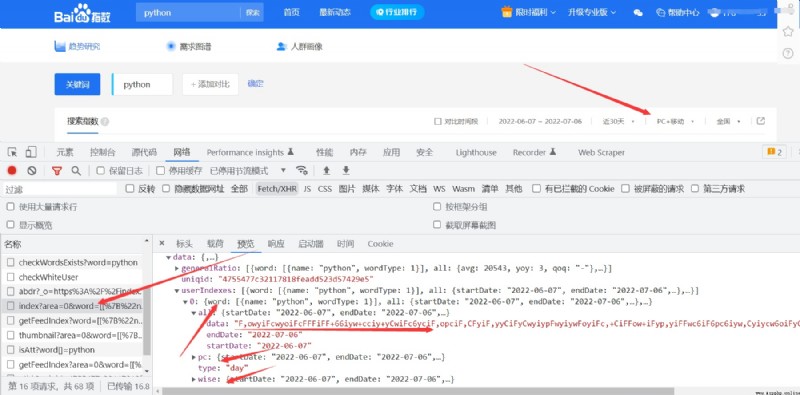

To get dynamic data we need to capture network traffic. Right-click the page and choose Inspect to open the developer tools, switch to the Network tab, and select the XHR filter, which shows only asynchronous (dynamic) requests. Refresh the page; the list now shows the dynamically loaded requests.

Next, find the request that carries our data. If you are not sure which one it is, open the candidates one by one and check their responses. We can tell fairly quickly that the data we want is in the current request.

However, this data is unusual: it does not look like the coordinate values we expect. We can conclude that the server returns JSON whose contents are encrypted, so we need to find where the data is decrypted. A web page is made of HTML, CSS, and JS, and data processing can only happen in the JS code, so the decryption must be there. Tracking down that location in the JS code is what is called JS reverse engineering.
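To see where the encrypted string sits, it helps to pull the relevant fields out of the captured JSON. The sketch below assumes the response shape that the script later in this article reads (`data.uniqid` and `data.userIndexes[0].all.data`); the sample payload is made up for illustration and is not real Baidu Index output. `typing.Tuple` is used so it also runs on Python 3.7.

```python
import json
from typing import Tuple


def extract_encrypted(body: str) -> Tuple[str, str]:
    """Return (uniqid, encrypted_series) from the captured JSON text.

    Assumed shape: {"data": {"uniqid": ..., "userIndexes": [{"all": {"data": ...}}]}}
    """
    data = json.loads(body)["data"]
    uniqid = data["uniqid"]
    encrypted = data["userIndexes"][0]["all"]["data"]
    return uniqid, encrypted


# Hypothetical sample: the encrypted string looks like noise, not numbers.
sample = '{"data": {"uniqid": "abc123", "userIndexes": [{"all": {"data": "qwerq"}}]}}'
print(extract_encrypted(sample))
```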

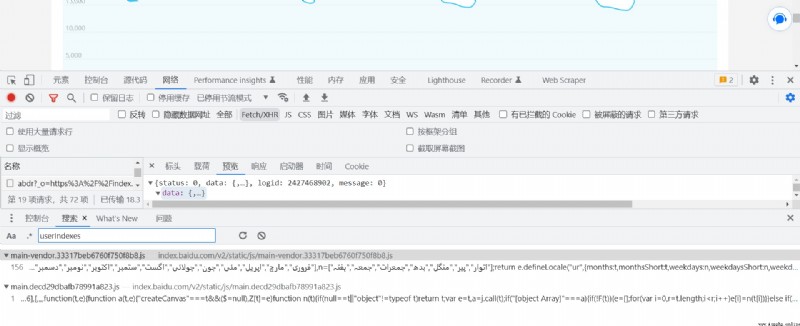

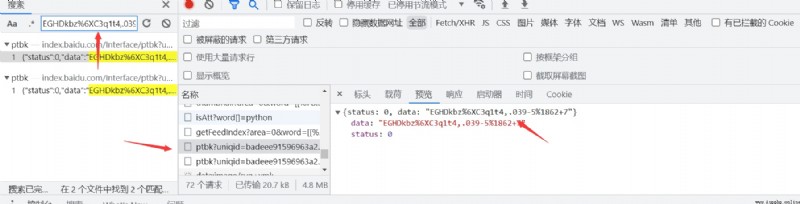

Use a global search (Ctrl+Shift+F in Chrome DevTools) to locate where our data is handled. There are two ways to do it. The server sends JSON, and front-end JS has to convert that JSON text into an object, typically with `JSON.parse`, so we can search for that keyword. Alternatively we can search for `userIndexes`, because the front-end code must reference that field name when it reads the data.
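If you save the JS files locally (for example from the Sources panel), the same search can be reproduced offline. `find_keyword_hits` is a hypothetical helper, not a DevTools feature. Because production JS is usually minified onto one very long line, it reports character offsets and a short surrounding snippet rather than whole lines.

```python
from typing import List, Sequence, Tuple


def find_keyword_hits(js_source: str,
                      keywords: Sequence[str] = ("JSON.parse", "userIndexes"),
                      context: int = 40) -> List[Tuple[str, int, str]]:
    """Return (keyword, offset, snippet) for every occurrence, sorted by offset."""
    hits = []
    for kw in keywords:
        pos = js_source.find(kw)
        while pos != -1:
            lo = max(0, pos - context)
            hi = pos + len(kw) + context
            hits.append((kw, pos, js_source[lo:hi]))
            pos = js_source.find(kw, pos + 1)  # continue after this match
    return sorted(hits, key=lambda h: h[1])


js = 'var r=JSON.parse(t);var u=r.data.userIndexes;'
for hit in find_keyword_hits(js):
    print(hit)
```

Each snippet gives enough context to find the same spot in DevTools and set a breakpoint there.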

The search hits two JS files; you can check each one if you are interested. The data we want is handled in the second file. Set a breakpoint there to see how the data is processed and decrypted. There is a function named `decrypt`, which we can guess is the decryption function.

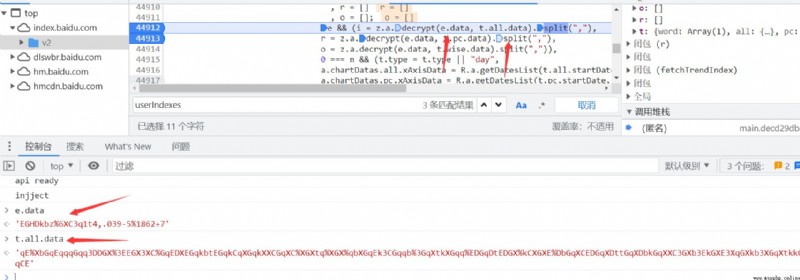

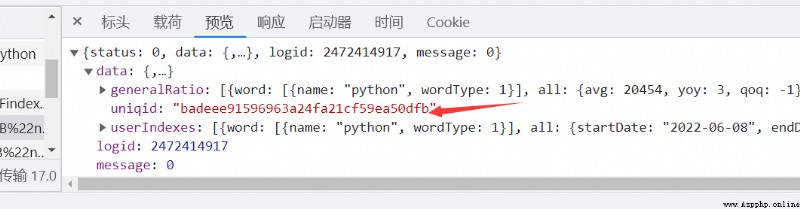

After setting the breakpoint, refresh the page again. The decryption function receives two arguments: the second is the encrypted data we captured from the server, while the purpose of the first is not yet clear.

Searching for the value of the first argument shows that it is returned by a different interface, so we must send a separate request to that interface to get it.

How does that interface relate to the data we requested earlier? Its request URL is built from the `uniqid` returned together with the encrypted data.
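In code, that dependency is simple string plumbing. The sketch below builds the key-interface URL from `uniqid` (the URL is the one used in this article's script) and reads the key from a response body. It assumes the key is in the top-level `data` field, as the script's `get_ptbk` does; the sample body in the usage line is invented.

```python
import json
from urllib.parse import urlencode

# Key interface URL as used by get_ptbk in this article's script.
PTBK_URL = "http://index.baidu.com/Interface/ptbk"


def build_ptbk_url(uniqid: str) -> str:
    """Build the key-interface URL; urlencode escapes any unusual characters."""
    return PTBK_URL + "?" + urlencode({"uniqid": uniqid})


def parse_ptbk(body: str) -> str:
    """Extract the key (the first argument of decrypt) from the JSON response."""
    return json.loads(body)["data"]


print(build_ptbk_url("abc123"))
print(parse_ptbk('{"data": "qwer12,3"}'))
```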

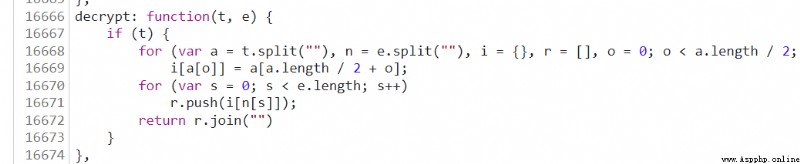

With both arguments understood, we can analyze the JS code itself.

What it does is actually simple. The first argument (the key) is split into two halves, and each character in the first half maps to the character at the same position in the second half. Every character of the encrypted data is replaced through this mapping, which yields the coordinate data of the final curve as a comma-separated string. All that remains is to port the JS code to Python:

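```python
def decrypt(t, e):
    """Port of the page's JS decrypt(t, e).

    t: key string returned by the second interface (ptbk)
    e: encrypted data string returned by the first interface
    """
    half = len(t) // 2
    # Each character in the first half of the key maps to the
    # character at the same position in the second half.
    mapping = dict(zip(t[:half], t[half:]))
    # Replace every encrypted character through the mapping
    # (raises KeyError if a character is missing from the key).
    return ''.join(mapping[ch] for ch in e)
```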
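To check the port, here is a toy run with a made-up key (real keys are longer). It uses an equivalent formulation built on the standard `str.maketrans`/`str.translate`, which constructs the same first-half-to-second-half character table. One difference: `translate` leaves unknown characters unchanged instead of failing.

```python
def decrypt_translate(t: str, e: str) -> str:
    """Same mapping as decrypt(t, e): first half of t -> second half of t."""
    half = len(t) // 2
    table = str.maketrans(t[:half], t[half:2 * half])
    return e.translate(table)


# Toy key (invented): q->1, w->2, e->',', r->3
key = "qwer12,3"
cipher = "qwerq"
plain = decrypt_translate(key, cipher)
print(plain)             # 12,31
print(plain.split(","))  # ['12', '31']
```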

This article is for technical sharing only. Do not use it for any other purpose!

```python
import json
import sys

import requests

# Fill in the values captured from your own logged-in browser session.
headers = {
    'Cipher-Text': 'your Cipher-Text value',
    'Cookie': 'your cookie',
    'Host': 'index.baidu.com',
    'Referer': 'https://index.baidu.com/v2/main/index.html',
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/102.0.5005.63 Safari/537.36',
    # 'X-Requested-With': 'XMLHttpRequest',
}


def decrypt(t, e):
    """Map each character of the encrypted string e through the key t."""
    half = len(t) // 2
    mapping = dict(zip(t[:half], t[half:]))
    return ''.join(mapping[ch] for ch in e)


def get_ptbk(uniqid):
    """Request the decryption key (ptbk) that belongs to a uniqid."""
    url = 'http://index.baidu.com/Interface/ptbk?uniqid={}'
    resp = requests.get(url.format(uniqid), headers=headers)
    if resp.status_code != 200:
        print('Failed to fetch the key for uniqid')
        sys.exit(1)
    return resp.json().get('data')


def get_index_data(keyword, days=30):
    """Fetch and decrypt the overall index of `keyword` for the last `days` days."""
    # The API expects the word list as JSON, e.g. [[{"name":"python","wordType":1}]]
    word = json.dumps([[{'name': keyword, 'wordType': 1}]],
                      separators=(',', ':'), ensure_ascii=False)
    url = 'https://index.baidu.com/api/SearchApi/index'
    params = {'area': 0, 'word': word, 'days': days}
    resp = requests.get(url, params=params, headers=headers)
    data = resp.json().get('data')

    user_indexes = data.get('userIndexes')[0]
    uniqid = data.get('uniqid')
    ptbk = get_ptbk(uniqid)

    all_data = user_indexes.get('all').get('data')
    result = decrypt(ptbk, all_data).split(',')
    print(result)
    return result


if __name__ == '__main__':
    get_index_data('python')
```
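The decrypted list holds strings, and some entries may be empty. A possible next step, sketched below, converts them to integers and attaches dates. `to_daily_series` is a hypothetical helper, and it assumes one value per day starting from a start date you supply; that granularity is an assumption (longer ranges may be aggregated differently), so check it against the chart before relying on it.

```python
from datetime import date, timedelta
from typing import List, Optional, Tuple


def to_daily_series(values: List[str], start: date) -> List[Tuple[date, Optional[int]]]:
    """Pair each decrypted value with a date, assuming one value per day.

    Empty strings become None rather than a guessed number.
    """
    series = []
    for offset, raw in enumerate(values):
        day = start + timedelta(days=offset)
        series.append((day, int(raw) if raw else None))
    return series


print(to_daily_series(["12", "", "31"], date(2021, 8, 1)))
```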