Over the past two years, the requirements and difficulty of graduation projects and graduation defenses have kept rising. Traditional project topics lack innovation and highlights, so they often fail to meet defense requirements, and many junior students have told me that the systems they built did not satisfy their teachers.

To help everyone get through the graduation project (BiShe) smoothly and with as little effort as possible, I, your senior, am sharing high-quality graduation projects. Today's project is:

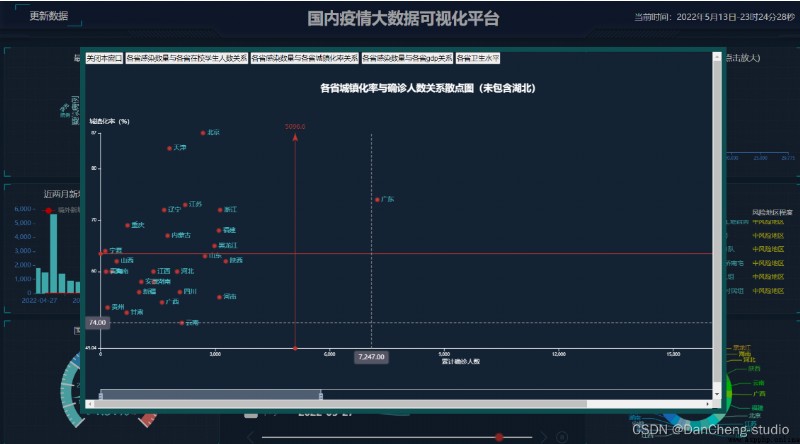

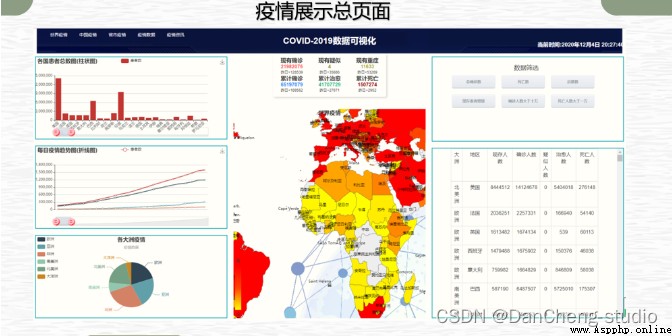

A Python-based big data epidemic analysis and visualization system

Here I give this topic an overall rating (each criterion is scored out of 5).

🧿 Topic selection guidance and project sharing:

https://gitee.com/dancheng-senior/project-sharing-1/blob/master/%E6%AF%95%E8%AE%BE%E6%8C%87%E5%AF%BC/README.md

The global COVID-19 crisis has affected our lives: travel, communication, education, and the economy have all changed dramatically. Visual analysis and display of global epidemic big data gives all sectors of society access to epidemic data and real-time, interactive awareness of the epidemic situation, and provides an important basis for epidemic analysis and for prevention and control decisions.

The outbreak in China had a great impact on our daily lives. When the epidemic was at its worst, people turned pale at the very mention of it, and there was a great need to understand the situation. Moreover, since a second outbreak in China is still possible, people remain eager to follow how the epidemic develops.

With this in mind, I crawled and visualized the epidemic data to track the epidemic; the result is the project presented here.

PS: Space is limited, so only some of the key code is shown.

The epidemic data crawler sends requests to a website and extracts the required data from the responses. It works in three steps:

1. Send a request and get the response
2. Parse the content
3. Save the data
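The three steps above can be sketched as three small functions. This is a minimal, hypothetical skeleton rather than the project's own code: it uses the standard library's `urllib` instead of `requests`, an in-memory SQLite database instead of MySQL, and assumes a made-up JSON payload of the form `{"items": [{"name": ..., "confirm": ...}]}`. The names `fetch`, `parse`, `save`, and `crawl` are illustrative only.

```python
import json
import sqlite3
from typing import Callable, List, Tuple
from urllib.request import Request, urlopen


def fetch(url: str) -> str:
    """Step 1: send a request and return the response body as text."""
    req = Request(url, headers={"User-Agent": "Mozilla/5.0"})  # minimal anti-crawler header
    with urlopen(req, timeout=10) as resp:
        return resp.read().decode("utf-8")


def parse(body: str) -> List[Tuple[str, int]]:
    """Step 2: extract (name, confirm) pairs from the hypothetical payload."""
    payload = json.loads(body)
    return [(item["name"], int(item["confirm"])) for item in payload["items"]]


def save(rows: List[Tuple[str, int]], conn: sqlite3.Connection) -> None:
    """Step 3: store the rows (SQLite stands in for MySQL in this sketch)."""
    conn.execute("CREATE TABLE IF NOT EXISTS demo (name TEXT, confirm INTEGER)")
    conn.executemany("INSERT INTO demo (name, confirm) VALUES (?, ?)", rows)
    conn.commit()


def crawl(url: str, conn: sqlite3.Connection,
          fetcher: Callable[[str], str] = fetch) -> int:
    """Run request -> parse -> save and return the number of rows saved."""
    rows = parse(fetcher(url))
    save(rows, conn)
    return len(rows)
```

Making the fetcher a parameter lets the parse and save steps be exercised offline, e.g. `crawl("unused", sqlite3.connect(":memory:"), fetcher=lambda _: '{"items": [{"name": "A", "confirm": 3}]}')` returns `1`.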

```python
import json
import time
import traceback  # Exception tracing (used by the database-saving code, not shown)

import pymysql  # MySQL driver (used by the database-saving code, not shown)
import requests


def get_tencent_data():
    """
    :return: historical daily data and detailed data for the current day
    """
    url = ''      # URL not shown
    url_his = ''  # URL not shown
    # The most basic anti-crawler measure: send a browser User-Agent
    headers = {
        'user-agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 '
                      '(KHTML, like Gecko) Chrome/79.0.3945.88 Safari/537.36',
    }
    r = requests.get(url, headers=headers)  # headers must be passed by keyword
    res = json.loads(r.text)  # JSON string -> dict
    data_all = json.loads(res['data'])  # 'data' is itself a JSON-encoded string

    # Also fetch the historical data
    r_his = requests.get(url_his, headers=headers)
    res_his = json.loads(r_his.text)
    data_his = json.loads(res_his['data'])

    history = {}  # Historical data, keyed by date
    for i in data_his["chinaDayList"]:
        ds = "2020." + i["date"]
        tup = time.strptime(ds, "%Y.%m.%d")
        # Reformat the date; otherwise inserting into the DATETIME column raises an error
        ds = time.strftime("%Y-%m-%d", tup)
        history[ds] = {"confirm": i["confirm"], "suspect": i["suspect"],
                       "heal": i["heal"], "dead": i["dead"]}

    for i in data_his["chinaDayAddList"]:
        ds = "2020." + i["date"]
        tup = time.strptime(ds, "%Y.%m.%d")
        ds = time.strftime("%Y-%m-%d", tup)
        # setdefault avoids a KeyError if a date appears only in chinaDayAddList
        history.setdefault(ds, {}).update({
            "confirm_add": i["confirm"], "suspect_add": i["suspect"],
            "heal_add": i["heal"], "dead_add": i["dead"]})

    details = []  # Detailed data for the current day
    update_time = data_all["lastUpdateTime"]
    data_country = data_all["areaTree"]  # List of 25 countries
    data_province = data_country[0]["children"]  # China's provinces
    for pro_infos in data_province:
        province = pro_infos["name"]  # Province name
        for city_infos in pro_infos["children"]:
            city = city_infos["name"]
            confirm = city_infos["total"]["confirm"]
            confirm_add = city_infos["today"]["confirm"]
            heal = city_infos["total"]["heal"]
            dead = city_infos["total"]["dead"]
            details.append([update_time, province, city, confirm, confirm_add, heal, dead])
    return history, details
```
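Two details of the parsing above are easy to miss: the feed's `data` field is itself a JSON-encoded string, so it is decoded twice, and the `MM.DD` dates are rewritten as `YYYY-MM-DD` before they go into the `DATETIME` column. The offline sketch below reproduces just that logic on a small hand-made payload. The field names follow `get_tencent_data()`; the numbers are invented sample values, and `to_iso_date` and `parse_history` are hypothetical helper names.

```python
import json
from datetime import datetime

FIELDS = ("confirm", "suspect", "heal", "dead")


def to_iso_date(mmdd: str, year: int = 2020) -> str:
    """'01.20' -> '2020-01-20' (the feed's dates carry no year)."""
    return datetime.strptime(f"{year}.{mmdd}", "%Y.%m.%d").strftime("%Y-%m-%d")


def parse_history(body: str) -> dict:
    """Hypothetical helper: merge daily totals and daily increments by date."""
    res = json.loads(body)              # outer JSON object
    data_his = json.loads(res["data"])  # inner JSON string -> dict
    history = {}
    for day in data_his["chinaDayList"]:     # cumulative totals
        history[to_iso_date(day["date"])] = {k: day[k] for k in FIELDS}
    for day in data_his["chinaDayAddList"]:  # daily increments, stored as *_add
        history.setdefault(to_iso_date(day["date"]), {}).update(
            {f"{k}_add": day[k] for k in FIELDS})
    return history


# Hand-made sample payload (invented numbers), double-encoded like the feed
sample = json.dumps({"data": json.dumps({
    "chinaDayList": [{"date": "01.20", "confirm": 10, "suspect": 4, "heal": 1, "dead": 0}],
    "chinaDayAddList": [{"date": "01.20", "confirm": 3, "suspect": 2, "heal": 1, "dead": 0}],
})})
print(parse_history(sample))
```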

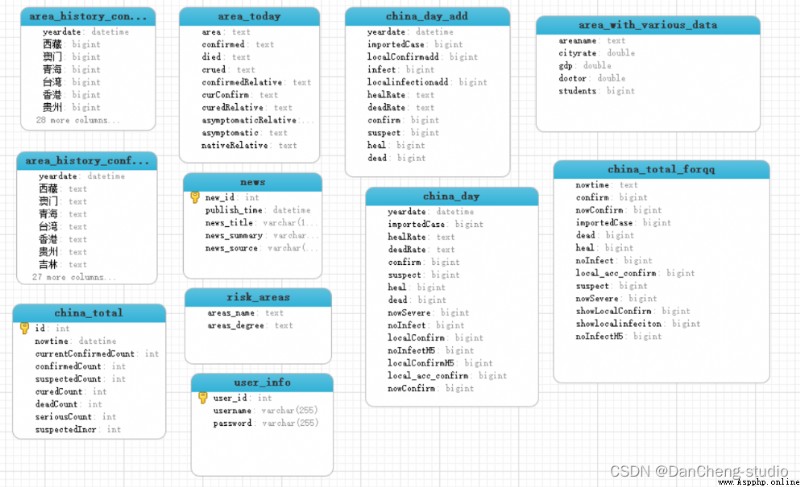

Data table structure

The `history` table stores the daily national totals:

```sql
CREATE TABLE history (
  ds datetime NOT NULL COMMENT 'Date',
  confirm int(11) DEFAULT NULL COMMENT 'Cumulative confirmed cases',
  confirm_add int(11) DEFAULT NULL COMMENT 'New confirmed cases that day',
  suspect int(11) DEFAULT NULL COMMENT 'Remaining suspected cases',
  suspect_add int(11) DEFAULT NULL COMMENT 'New suspected cases that day',
  heal int(11) DEFAULT NULL COMMENT 'Cumulative recoveries',
  heal_add int(11) DEFAULT NULL COMMENT 'New recoveries that day',
  dead int(11) DEFAULT NULL COMMENT 'Cumulative deaths',
  dead_add int(11) DEFAULT NULL COMMENT 'New deaths that day',
  PRIMARY KEY (ds) USING BTREE
) ENGINE=InnoDB DEFAULT CHARSET=utf8mb4;
```
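The code that writes into `history` is not shown here. One possible approach, sketched under assumptions, is to flatten the `history` dict returned by `get_tencent_data()` into tuples in column order and pass them to a DB-API cursor's `executemany()`; pymysql uses `%s` placeholders. `REPLACE INTO` is one way to let the crawler re-run without primary-key conflicts on `ds` (MySQL deletes the old row and inserts the new one). `HISTORY_COLUMNS`, `SAVE_HISTORY_SQL`, `history_rows`, and `save_history` are hypothetical names.

```python
from typing import Dict, List, Tuple

# Hypothetical: column order must match the placeholders in SAVE_HISTORY_SQL
HISTORY_COLUMNS = ("confirm", "confirm_add", "suspect", "suspect_add",
                   "heal", "heal_add", "dead", "dead_add")

# REPLACE INTO overwrites an existing row with the same primary key (ds)
SAVE_HISTORY_SQL = (
    "REPLACE INTO history (ds, confirm, confirm_add, suspect, suspect_add, "
    "heal, heal_add, dead, dead_add) "
    "VALUES (%s, %s, %s, %s, %s, %s, %s, %s, %s)"
)


def history_rows(history: Dict[str, dict]) -> List[Tuple]:
    """Flatten {ds: {column: value}} into tuples; missing columns become None (NULL)."""
    return [(ds, *(counts.get(col) for col in HISTORY_COLUMNS))
            for ds, counts in sorted(history.items())]


def save_history(cursor, history: Dict[str, dict]) -> int:
    """Write all rows with one executemany() call; the caller is responsible for commit()."""
    rows = history_rows(history)
    cursor.executemany(SAVE_HISTORY_SQL, rows)
    return len(rows)
```

With pymysql this would be called as `save_history(conn.cursor(), history)` followed by `conn.commit()`.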

The `details` table stores the detailed (per-city) data for each day:

```sql
CREATE TABLE details (
  id int(11) NOT NULL AUTO_INCREMENT,
  update_time datetime DEFAULT NULL COMMENT 'Time the data was last updated',
  province varchar(50) DEFAULT NULL COMMENT 'Province',
  city varchar(50) DEFAULT NULL COMMENT 'City',
  confirm int(11) DEFAULT NULL COMMENT 'Cumulative confirmed cases',
  confirm_add int(11) DEFAULT NULL COMMENT 'New confirmed cases',
  heal int(11) DEFAULT NULL COMMENT 'Cumulative recoveries',
  dead int(11) DEFAULT NULL COMMENT 'Cumulative deaths',
  PRIMARY KEY (id)
) ENGINE=InnoDB DEFAULT CHARSET=utf8mb4;
```

Overall database diagram:

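Charting with ECharts: each function below queries the database and returns the data for one panel of the large-screen dashboard, named after the `div` id it fills (for example, `get_c1_data()` feeds `div id=c1`). The `query()` helper that executes the SQL is not shown.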

```python
def get_c1_data():
    """
    :return: data for the large-screen panel div id=c1
    """
    # The data is updated many times, so take only the latest batch (by timestamp)
    sql = "select sum(confirm)," \
          "(select suspect from history order by ds desc limit 1)," \
          "sum(heal)," \
          "sum(dead) " \
          "from details " \
          "where update_time=(select update_time from details order by update_time desc limit 1) "
    res = query(sql)  # query() (not shown) executes the SQL and returns all rows
    # Convert every value in the single result row to a string
    return tuple(str(i) for i in res[0])
```
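Because `details` can hold several crawled batches, each query filters on `update_time = (select update_time ... order by update_time desc limit 1)` so that only the most recent batch is summed; without it, every city would be counted once per batch. The sketch below checks that idea against an in-memory SQLite database, used here as a stand-in for MySQL, with a simplified `details` table and invented rows.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Simplified details table (SQLite stand-in for the MySQL schema)
conn.execute("CREATE TABLE details (update_time TEXT, province TEXT, city TEXT, confirm INTEGER)")
# Two crawler runs: an older batch and a newer one (invented numbers)
conn.executemany(
    "INSERT INTO details VALUES (?, ?, ?, ?)",
    [("2020-02-01 10:00:00", "P1", "A", 5), ("2020-02-01 10:00:00", "P1", "B", 2),
     ("2020-02-02 10:00:00", "P1", "A", 8), ("2020-02-02 10:00:00", "P1", "B", 3)],
)

LATEST_TOTAL_SQL = (
    "select sum(confirm) from details "
    "where update_time=(select update_time from details order by update_time desc limit 1)"
)

latest_total = conn.execute(LATEST_TOTAL_SQL).fetchone()[0]  # latest batch only
naive_total = conn.execute("select sum(confirm) from details").fetchone()[0]  # double counts
print(latest_total, naive_total)  # 11 18
```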

Implementation of the China epidemic map

```python
def get_c2_data():
    """
    :return: confirmed cases per province (for the China map)
    """
    # The data is updated many times, so take only the latest batch (by timestamp)
    sql = "select province,sum(confirm) from details " \
          "where update_time=(select update_time from details " \
          "order by update_time desc limit 1) " \
          "group by province"
    res = query(sql)
    return res
```
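ECharts map series take their data as a list of `{name, value}` objects, so the `(province, sum(confirm))` rows from `get_c2_data()` need a small reshaping step before being sent to the page as JSON. A sketch, assuming the rows come from pymysql, which typically returns MySQL `SUM()` over an integer column as `decimal.Decimal` (something `json.dumps` cannot serialize). `to_map_data` is a hypothetical helper, and the English province names in the sample are for readability only; for the China map, the names must match the region names in the map data the page loads.

```python
import json
from decimal import Decimal
from typing import Iterable, List, Tuple


def to_map_data(rows: Iterable[Tuple[str, Decimal]]) -> List[dict]:
    """Hypothetical helper: (province, total) rows -> [{"name": ..., "value": ...}]."""
    return [{"name": province, "value": int(total)} for province, total in rows]


rows = (("Hubei", Decimal("100")), ("Guangdong", Decimal("20")))  # invented sample rows
print(json.dumps(to_map_data(rows)))
# [{"name": "Hubei", "value": 100}, {"name": "Guangdong", "value": 20}]
```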

National cumulative trend

```python
def get_l1_data():
    """
    :return: cumulative historical data for each day
    """
    # order by ds so the trend line is drawn in date order
    sql = "select ds,confirm,suspect,heal,dead from history order by ds"
    res = query(sql)
    return res


def get_l2_data():
    """
    :return: new confirmed and new suspected cases for each day
    """
    sql = "select ds,confirm_add,suspect_add from history order by ds"
    res = query(sql)
    return res
```
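An ECharts line chart expects one list for the x-axis and one list per series, whereas `get_l1_data()` and `get_l2_data()` return one tuple per day. A minimal reshaping sketch, assuming `ds` arrives as a `datetime` (as pymysql returns `DATETIME` columns) and that the chart labels days as `MM-DD`; `to_line_series` is a hypothetical helper and the rows are invented.

```python
from datetime import datetime
from typing import Dict, List, Sequence, Tuple


def to_line_series(rows: Sequence[Tuple], names: Sequence[str]) -> Dict[str, List]:
    """Hypothetical helper: split (ds, v1, v2, ...) rows into {"day": [...], name: [...]}."""
    result: Dict[str, List] = {"day": [], **{name: [] for name in names}}
    for ds, *values in rows:
        result["day"].append(ds.strftime("%m-%d"))  # x-axis label
        for name, value in zip(names, values):
            result[name].append(value)              # one list per series
    return result


rows = [(datetime(2020, 1, 20), 10, 4, 1, 0), (datetime(2020, 1, 21), 15, 6, 2, 1)]
series = to_line_series(rows, ["confirm", "suspect", "heal", "dead"])
print(series["day"], series["confirm"])  # ['01-20', '01-21'] [10, 15]
```

The same helper covers the daily-new chart by passing `["confirm_add", "suspect_add"]` as the series names.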

```python
def get_r1_data():
    """
    :return: the top 5 cities by confirmed cases outside Hubei
    """
    # Beijing, Shanghai, Tianjin and Chongqing are municipalities whose "cities"
    # are districts, so each is summed and ranked as a single city.
    # The names must match the stored province names exactly; the feed stores
    # them in Chinese: 湖北 Hubei, 北京 Beijing, 上海 Shanghai, 天津 Tianjin, 重庆 Chongqing.
    sql = 'SELECT city,confirm FROM ' \
          '(select city,confirm from details ' \
          'where update_time=(select update_time from details order by update_time desc limit 1) ' \
          'and province not in ("湖北","北京","上海","天津","重庆") ' \
          'union all ' \
          'select province as city,sum(confirm) as confirm from details ' \
          'where update_time=(select update_time from details order by update_time desc limit 1) ' \
          'and province in ("北京","上海","天津","重庆") group by province) as a ' \
          'ORDER BY confirm DESC LIMIT 5'
    res = query(sql)
    return res
```
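The `union all` in `get_r1_data()` is hard to read at a glance. Its intent: outside Hubei, rank ordinary cities individually, but treat each of the four municipalities (Beijing, Shanghai, Tianjin, Chongqing) as one "city" by summing its districts, then keep the top 5. The sketch below restates that logic in plain Python purely as an illustration on invented rows; `top_cities` is a hypothetical helper, and English province names are used for readability.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

EXCLUDED = {"Hubei"}
MUNICIPALITIES = {"Beijing", "Shanghai", "Tianjin", "Chongqing"}


def top_cities(rows: Iterable[Tuple[str, str, int]], n: int = 5) -> List[Tuple[str, int]]:
    """Hypothetical helper; rows are (province, city, confirm) from the latest batch."""
    ranked: List[Tuple[str, int]] = []
    municipal_totals: Dict[str, int] = defaultdict(int)
    for province, city, confirm in rows:
        if province in MUNICIPALITIES:
            municipal_totals[province] += confirm  # sum districts per municipality
        elif province not in EXCLUDED:
            ranked.append((city, confirm))        # ordinary city, ranked as-is
    ranked.extend(municipal_totals.items())
    return sorted(ranked, key=lambda item: item[1], reverse=True)[:n]
```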

Epidemic hot search

```python
def get_r2_data():
    """
    :return: the 20 most recent hot searches
    """
    sql = 'select content from hotsearch order by id desc limit 20'
    # Format: (('Police officer dies after 16 days on the epidemic front line 1037364',),
    #          ('Sichuan will send two more medical teams 1537382',), ...)
    res = query(sql)
    return res
```
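As the format comment in `get_r2_data()` shows, each `content` value is a headline with its search-heat number appended at the end. If a panel needs the two parts separately (for example, to size words in a word cloud), a regular expression can split them. A sketch; `split_hot_search` is a hypothetical helper, and it assumes the heat is always the trailing run of digits, so a headline that itself ends in digits would be split incorrectly.

```python
import re
from typing import Optional, Tuple

# Lazy title, optional whitespace, then the trailing run of digits (the heat value)
_HOT_SEARCH = re.compile(r"^(.*?)\s*(\d+)$")


def split_hot_search(content: str) -> Optional[Tuple[str, int]]:
    """Hypothetical helper: 'headline 1537382' -> ('headline', 1537382)."""
    match = _HOT_SEARCH.match(content.strip())
    if match is None:
        return None  # no trailing number: not in the expected format
    return match.group(1), int(match.group(2))
```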
