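For an introduction to DeepStack and how to install and run it, see the first article:

ImageAI continued: DeepStack (1), fast and simple face detection, face matching, and face comparison with Python

The previous article on face recognition used the VISION-FACE feature. Object detection, covered here, requires VISION-DETECTION.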

#linux

docker run -e VISION-DETECTION=True -e MODE=High -v localstorage:/datastore -p 8080:5000 deepquestai/deepstack

#windows

deepstack --VISION-DETECTION True --PORT 8080

import requests
import matplotlib.pyplot as plt
import cv2

def useUrl():
    host = "http://192.168.0.101:8080"
    # Load the image to analyze
    with open("1.jpg", "rb") as f:
        image_data = f.read()
    img = cv2.imread("1.jpg")
    # Call http://192.168.0.101:8080/v1/vision/detection to detect objects in the image
    response = requests.post(host + "/v1/vision/detection",
                             files={"image": image_data}).json()

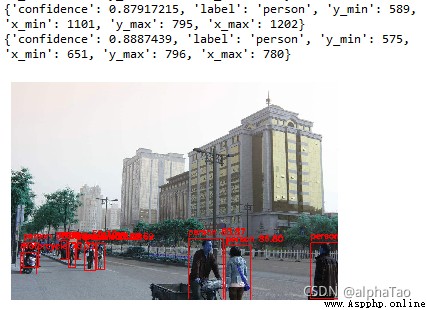

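    # Draw a bounding box and label on the image for each detection
    font = cv2.FONT_HERSHEY_SIMPLEX
    for obj in response["predictions"]:
        print(obj)
        conf = obj["confidence"] * 100
        label = obj["label"]
        y_max = int(obj["y_max"])
        y_min = int(obj["y_min"])
        x_max = int(obj["x_max"])
        x_min = int(obj["x_min"])
        pt1 = (x_min, y_min)
        pt2 = (x_max, y_max)
        # (255, 0, 0) is blue in OpenCV's BGR channel order
        cv2.rectangle(img, pt1, pt2, (255, 0, 0), 2)
        cv2.putText(img, '{} {:.2f}'.format(label, conf), (x_min, y_min - 15), font, 1, (255, 0, 0), 4)
    # OpenCV loads images as BGR; convert to RGB so matplotlib shows the true colors
    plt.imshow(cv2.cvtColor(img, cv2.COLOR_BGR2RGB))
    plt.axis('off')
    plt.show()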
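The loop above draws every entry in `response["predictions"]`, including low-confidence ones. The following is a minimal sketch of filtering them first. It assumes the JSON payload also carries a boolean `success` field alongside `predictions`; the helper name `filter_predictions`, the 0.6 threshold, and the sample payload are hypothetical and only for illustration.

```python
def filter_predictions(payload, min_confidence=0.6):
    """Return the predictions whose confidence is at least min_confidence."""
    # Assumption: a failed request is reported with "success": False.
    if not payload.get("success", False):
        raise ValueError("detection request failed: {}".format(payload.get("error")))
    return [p for p in payload.get("predictions", [])
            if p["confidence"] >= min_confidence]


# Hypothetical payload shaped like the response parsed above.
sample = {
    "success": True,
    "predictions": [
        {"label": "dog", "confidence": 0.92,
         "x_min": 105, "y_min": 496, "x_max": 350, "y_max": 819},
        {"label": "person", "confidence": 0.53,
         "x_min": 195, "y_min": 220, "x_max": 464, "y_max": 816},
    ],
}

kept = filter_predictions(sample)
print([p["label"] for p in kept])
```

Filtering on the client keeps the request unchanged, so the same response can be inspected at several thresholds without calling the server again.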

from deepstack_sdk import ServerConfig, Detection

def pythonsdk():
    config = ServerConfig("http://192.168.0.101:8080")
    detection = Detection(config)
    # Detect objects in the image
    response = detection.detectObject("2.jpg", output="2_output.jpg")
    for obj in response:
        print("Name: {}, Confidence: {}, x_min: {}, y_min: {}, x_max: {}, y_max: {}".format(obj.label, obj.confidence, obj.x_min, obj.y_min, obj.x_max, obj.y_max))

Name: person, Confidence: 0.5288314, x_min: 195, y_min: 220, x_max: 464, y_max: 816

Name: horse, Confidence: 0.5871692, x_min: 135, y_min: 220, x_max: 475, y_max: 821

Name: dog, Confidence: 0.9199933, x_min: 105, y_min: 496, x_max: 350, y_max: 819
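In the output above, the person and horse boxes cover almost the same region and both have confidence below 0.6, which may mean one region was labeled twice. A rough way to check is intersection over union (IoU). This is a minimal sketch using the coordinates printed above; the helper name `iou` is hypothetical and not part of the DeepStack SDK.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x_min, y_min, x_max, y_max)."""
    # Width and height of the overlap, clamped at zero when the boxes are disjoint.
    ix = max(0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union else 0.0


# Boxes copied from the printed detections above.
person = (195, 220, 464, 816)
horse = (135, 220, 475, 821)
dog = (105, 496, 350, 819)

print("person/horse IoU: {:.2f}".format(iou(person, horse)))
print("person/dog IoU: {:.2f}".format(iou(person, dog)))
```

A high IoU between two differently labeled boxes is only a hint that they describe the same object; which label to keep is a separate decision, for example the one with the higher confidence.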

Object types currently supported for detection:

person, bicycle, car, motorcycle, airplane,

bus, train, truck, boat, traffic light, fire hydrant, stop_sign,

parking meter, bench, bird, cat, dog, horse, sheep, cow, elephant,

bear, zebra, giraffe, backpack, umbrella, handbag, tie, suitcase,

frisbee, skis, snowboard, sports ball, kite, baseball bat, baseball glove,

skateboard, surfboard, tennis racket, bottle, wine glass, cup, fork,

knife, spoon, bowl, banana, apple, sandwich, orange, broccoli, carrot,

hot dog, pizza, donut, cake, chair, couch, potted plant, bed, dining table,

toilet, tv, laptop, mouse, remote, keyboard, cell phone, microwave,

oven, toaster, sink, refrigerator, book, clock, vase, scissors, teddy bear,

hair dryer, toothbrush.
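Since every detection carries one of the labels listed above, a common next step is to count detections per label, optionally keeping only the labels of interest. This is a minimal sketch; the helper name `count_labels` and the sample predictions are hypothetical, with the dictionaries shaped like the entries of `response["predictions"]`.

```python
from collections import Counter


def count_labels(predictions, wanted=None):
    """Count detections per label, optionally restricted to the labels in wanted."""
    counts = Counter(p["label"] for p in predictions)
    if wanted is not None:
        counts = Counter({k: v for k, v in counts.items() if k in wanted})
    return counts


# Hypothetical predictions, reduced to the fields this sketch needs.
predictions = [
    {"label": "person", "confidence": 0.53},
    {"label": "dog", "confidence": 0.92},
    {"label": "dog", "confidence": 0.71},
    {"label": "horse", "confidence": 0.59},
]

all_counts = count_labels(predictions)
pets = count_labels(predictions, wanted={"dog", "cat"})
print(dict(all_counts))
print(dict(pets))
```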

# Complete listing combining both approaches
import requests
import matplotlib.pyplot as plt
import cv2
from deepstack_sdk import ServerConfig, Detection

def useUrl():
    host = "http://192.168.0.101:8080"
    # Load the image to analyze
    with open("1.jpg", "rb") as f:
        image_data = f.read()
    img = cv2.imread("1.jpg")
    # Call the detection endpoint to detect objects in the image
    response = requests.post(host + "/v1/vision/detection",
                             files={"image": image_data}).json()
    # Draw a bounding box and label on the image for each detection
    font = cv2.FONT_HERSHEY_SIMPLEX
    for obj in response["predictions"]:
        print(obj)
        conf = obj["confidence"] * 100
        label = obj["label"]
        y_max = int(obj["y_max"])
        y_min = int(obj["y_min"])
        x_max = int(obj["x_max"])
        x_min = int(obj["x_min"])
        pt1 = (x_min, y_min)
        pt2 = (x_max, y_max)
        # (255, 0, 0) is blue in OpenCV's BGR channel order
        cv2.rectangle(img, pt1, pt2, (255, 0, 0), 2)
        cv2.putText(img, '{} {:.2f}'.format(label, conf), (x_min, y_min - 15), font, 1, (255, 0, 0), 4)
    # OpenCV loads images as BGR; convert to RGB so matplotlib shows the true colors
    plt.imshow(cv2.cvtColor(img, cv2.COLOR_BGR2RGB))
    plt.axis('off')
    plt.show()

def pythonsdk():
    config = ServerConfig("http://192.168.0.101:8080")
    detection = Detection(config)
    # Detect objects in the image
    response = detection.detectObject("2.jpg", output="2_output.jpg")
    for obj in response:
        print("Name: {}, Confidence: {}, x_min: {}, y_min: {}, x_max: {}, y_max: {}".format(obj.label, obj.confidence, obj.x_min, obj.y_min, obj.x_max, obj.y_max))

useUrl()
pythonsdk()