With the advent of the era of big data , The amount of network information has also become more and more , Based on the limitations of traditional search engines , Web crawlers emerge as the times require ,

See more and more people learning to crawl , In particular, many friends from non-technical backgrounds are also getting started , The fact proved that , The data age is changing our way of learning and thinking .

On the one hand, there are all kinds of data , Let's have “ resources ” To explore the internal operation rules of a field , These skills that can draw conclusions through a process approach , It may even be more reliable than experience .

On the other hand , The increase in the amount of data , People need to distinguish more and more things , The human brain, which is not good at computing, has lost its natural advantage at this time , We need tools to collect information , Then use the computer to help analyze and make decisions .

So knowledge and skills to some extent , Can change how we get information 、 The way of knowing the world , And these transferable methods and skills , It may be another recessive force of personal development .

Here I would like to give two examples , About skills changing thinking :

@ Southern end ( Our product manager )

Nanmo is one of the most unconventional people I have ever seen , I was a serious product manager , But I am a data analyst all day .

Unknown people , I'm sure I will “ except ” My hat is buttoned up .

What makes him special is that , For new needs , Will crawl a large number of data for analysis and evidence collection , Do all kinds of comparative analysis .

Such operation , The pace of natural living is slightly slower , But for the market 、 user 、 Understanding of competitive products , It is more profound than ordinary products .

In his place , You can always find a lot of useful data . In his words : These crawling back resources , It is the key to higher dimensions and deeper cognition .

@ Wangxiaozao ( A friend )

This friend is one I have met , Zero basic entry crawler is the fastest person , Not one of them. .

It took him a day to get started , Just delve into a case , By referring to the implementation process of others , And search for all kinds of targeted Python knowledge , And then independently crawled tens of thousands of rows of data .

By doing this , In two months , Most websites' anti - crawling has not been difficult for him , And through distributed technology , Realize multi thread crawling .

Through the learning of reptiles , He has mastered Python, Be able to write some scripts to deal with repetitive work , Work automatically .

Although this friend is not a technical , But cross-border learning makes him used to the comprehensive application of various technologies in one field .

In every industry , The more powerful the boss is , The more difficult it is for you to clearly define what he does , This is a profound interpretation of cross-border capabilities .

When it comes to learning “ Data acquisition ”, Online articles about crawlers 、 course 、 Don't have too many videos , But really learn quickly , Not many people can crawl data independently .

According to the feedback and roast of most people , There are mainly the following problems :

1. Information Asymmetry

mention Python Reptiles , Many people think that we should put Python Learn to be proficient , Then try to use programming skills to achieve crawler . So many people read the grammar on both sides , Not too much feeling , You can't program independently , I can't climb the data .

Some people think that HTML、CSS、Javascript Front three swordsmen +HTTP The set meal must go through first , In order to be able to learn in the process of reptiles . This one comes down , At least a few months , Many give up directly .

So the occurrence of these tragedies , It all comes down to one reason , The lead time is too long , These are caused by asymmetric information .

2. The programming gap

A person who has no programming experience , To acquire programming skills , It has to go through some pain and change of thinking . After all , The way people think , Computer system with computer , There is still a big difference .

Human thinking is logical and has strong adaptability , The computer relies on a complete set of rules , And commands based on these rules . What we need to do is , Use these rules to express your ideas , Let the computer realize .

So zero basic learning Python, You will also encounter these problems , Many grammars are incomprehensible , Understand that you can't apply , These are common .

And that is , What is the level of programming to learn , When to start practicing , It is also a place that beginners cannot control . So many people have data types 、 function 、 I learned a lot of sentences , I haven't really written a usable program yet .

3. Problem solving

Reptile is a kind of cross technology , Including the network 、 Programming 、 Knowledge points of multiple dimensions such as the front end , A lot of times there are problems , There is no way to solve this problem without experience , Even many people can not clearly describe the specific problems .

And for programming and crawlers , Different compilation environments 、 Web pages are very different , It is often difficult to find accurate solutions to problems , This is particularly distressing .

So when there are all kinds of mistakes and no progress , You will find that everything is a mountain and a river , But after solving the problem, there must be a bright future 、 Confidence is bursting .

Is the reptile not suitable for zero basic learning , The entry cycle will be long and painful ? It's not .

Find the right path , Grasp the basic knowledge pertinently , Have a clear output target , Plus reasonable practical training , Getting started can also be like learning Office equally , When water flows, a channel is formed .

These can be enough for you to climb the mainstream web pages , But it doesn't mean that you need to master it from the beginning , Learning is a gradual process , But there are some experiences to share . I would like to recommend one to you 《 Python Reptile development and project practice 》.

This book starts with the basic principles of reptiles , By introducing Pthyon Programming languages and Web The front-end basics lead the reader to the beginning , Then the principle of dynamic crawler and Scrapy The crawler frame , Finally, the design of distributed crawler under large-scale data and PySpider Crawler frames, etc .

The main features :

l from the shallower to the deeper , from Python and Web The front-end foundation starts to talk about , Gradually deepen the difficulty , Step by step .

l Detailed content , From static website to dynamic website , From stand-alone crawler to distributed crawler , It includes basic knowledge points , It also explains the key problems and difficulty analysis , It is convenient for readers to complete advanced .

l Practical , This book has 9 A reptile project , Driven by the actual combat project of the system , Explain the knowledge and skills required in crawler development in a shallow and deep way .

Detailed analysis of difficulties , Yes js Analysis of encryption 、 A breakthrough in anti - reptile measures 、 Design of de duplication scheme 、 The development of distributed crawler is explained in detail .

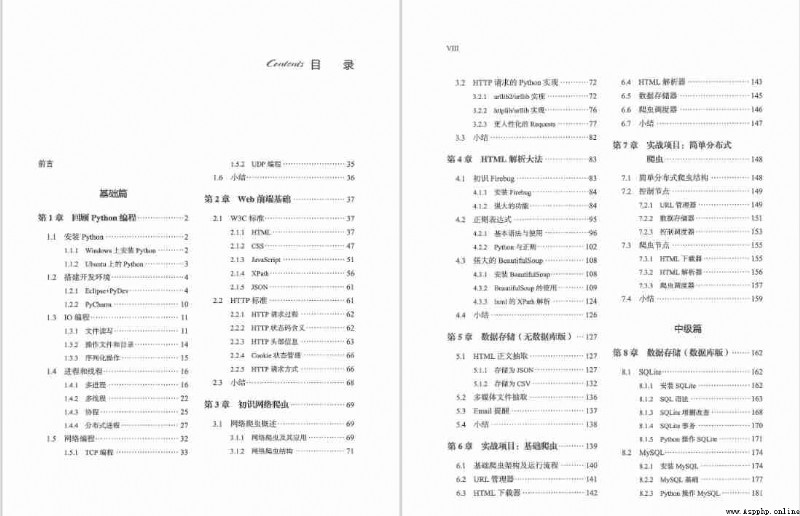

Next, let's take a look at the contents of this book :

Friends, if you need a full set of 《 Python Reptile development and project practice 》PDF, Scan the QR code below for free ( In case of code scanning problem , Comment area message collection )~

1.1 install Python 2

1.1.1 Windows Installation on Python 2

1.1.2 Ubuntu Upper Python 3

1.2 Build development environment 4

1.2.1 Eclipse+PyDev 4

1.2.2 PyCharm 10

1.3 IO Programming 11

1.3.1 File read and write 11

1.3.2 Operating files and directories 14

1.3.3 Serialization operation 15

1.4 Processes and threads 16

1.4.1 Multi process 16

1.4.2 Multithreading 22

1.4.3 coroutines 25

1.4.4 Distributed processes 27

1.5 Network programming 32

1.5.1 TCP Programming 33

1.5.2 UDP Programming 35

1.6 Summary 36

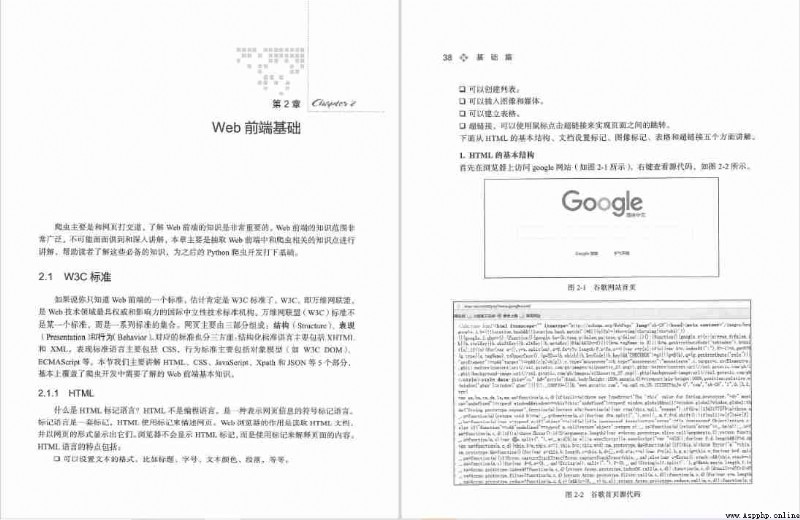

2.1 W3C standard 37

2.1.1 HTML 37

2.1.2 CSS 47

2.1.3 JavaScript 51

2.1.4 XPath 56

2.1.5 JSON 61

2.2 HTTP standard 61

2.2.1 HTTP Request process 62

2.2.2 HTTP Status code meaning 62

2.2.3 HTTP Header information 63

2.2.4 Cookie State management 66

2.2.5 HTTP Request mode 66

2.3 Summary 68

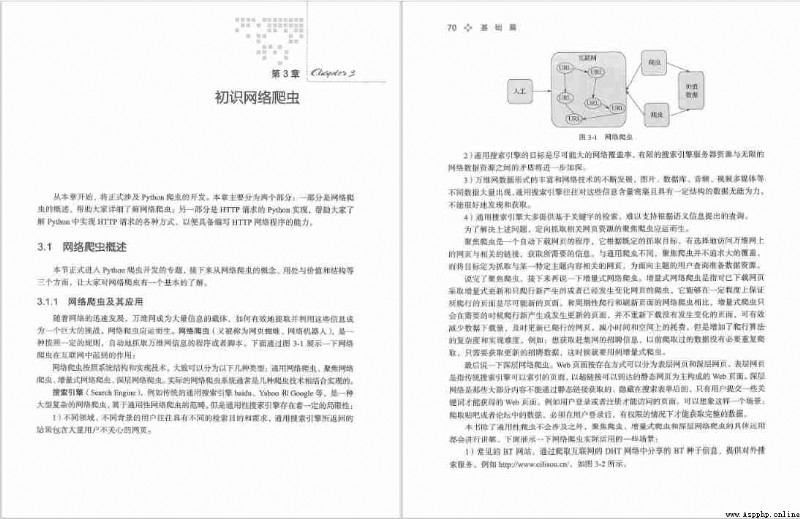

3.1 Overview of web crawler 69

3.1.1 Web crawler and its application 69

3.1.2 Web crawler structure 71

3.2 HTTP Requested Python Realization 72

3.2.1 urllib2/urllib Realization 72

3.2.2 httplib/urllib Realization 76

3.2.3 More human Requests 77

3.3 Summary 82

4.1 First time to know Firebug 83

4.1.1 install Firebug 84

4.1.2 Powerful features 84

4.2 Regular expressions 95

4.2.1 Basic grammar and usage 96

4.2.2 Python And regular 102

4.3 Powerful BeautifulSoup 108

4.3.1 install BeautifulSoup 108

4.3.2 BeautifulSoup Use 109

4.3.3 lxml Of XPath analysis 124

4.4 Summary 126

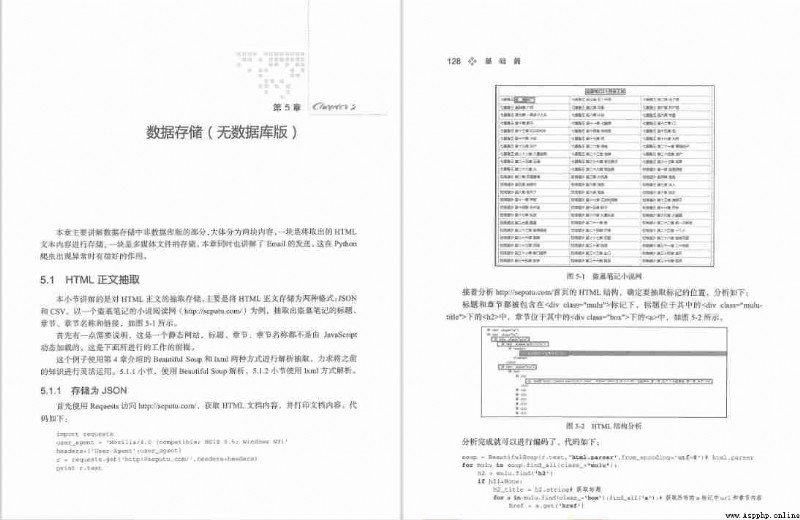

5.1 HTML Text extraction 127

5.1.1 Stored as JSON 127

5.1.2 Stored as CSV 132

5.2 Multimedia file extraction 136

5.3 Email remind 137

5.4 Summary 138

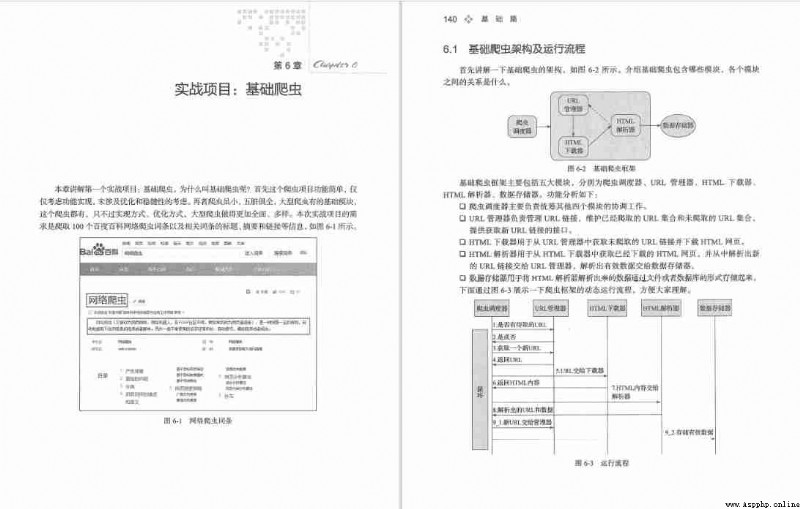

6.1 Basic crawler architecture and operation process 140

6.2 URL Manager 141

6.3 HTML Downloader 142

6.4 HTML Parser 143

6.5 Data storage 145

6.6 Crawler scheduler 146

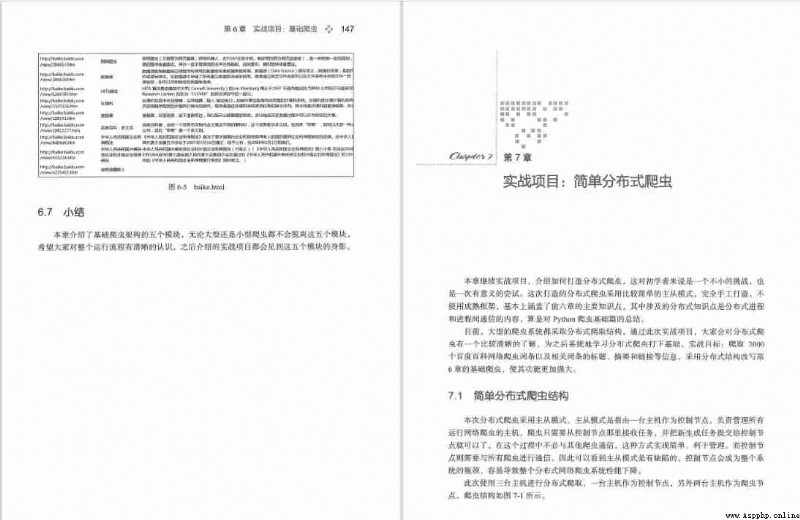

6.7 Summary 147

7.1 Simple distributed crawler structure 148

7.2 The control node 149

7.2.1 URL Manager 149

7.2.2 Data storage 151

7.2.3 Control scheduler 153

7.3 Crawler node 155

7.3.1 HTML Downloader 155

7.3.2 HTML Parser 156

7.3.3 Crawler scheduler 157

7.4 Summary 159

8.1 SQLite 162

8.1.1 install SQLite 162

8.1.2 SQL grammar 163

8.1.3 SQLite Additions and deletions 168

8.1.4 SQLite Business 170

8.1.5 Python operation SQLite 171

8.2 MySQL 174

8.2.1 install MySQL 174

8.2.2 MySQL Basics 177

8.2.3 Python operation MySQL 181

8.3 More suitable for reptiles MongoDB 183

8.3.1 install MongoDB 184

8.3.2 MongoDB Basics 187

8.3.3 Python operation MongoDB 194

8.4 Summary 196

9.1 Ajax And dynamic HTML 197

9.2 Dynamic reptile 1: Crawling through movie reviews 198

9.3 PhantomJS 207

9.3.1 install PhantomJS 207

9.3.2 Quick start 208

9.3.3 Screen capture 211

9.3.4 network monitoring 213

9.3.5 Page Automation 214

9.3.6 Common modules and methods 215

9.4 Selenium 218

9.4.1 install Selenium 219

9.4.2 Quick start 220

9.4.3 Element selection 221

9.4.4 Page operation 222

9.4.5 wait for 225

9.5 Dynamic reptile 2: Where to climb the net 227

9.6 Summary 230

10.1 Website login POST analysis 231

10.1.1 Hide form analysis 231

10.1.2 Encrypted data analysis 234

10.2 Verification code problem 246

10.2.1 IP agent 246

10.2.2 Cookie Sign in 249

10.2.3 Traditional verification code identification 250

10.2.4 Manual coding 251

10.2.5 Slide verification code 252

10.3 www]m]wap 252

10.4 Summary 254

11.1 PC Client packet capture analysis 255

11.1.1 HTTP Analyzer brief introduction 255

11.1.2 Small shrimp music PC End API Practical analysis 257

11.2 App Caught analysis 259

11.2.1 Wireshark brief introduction 259

11.2.2 I listen to books App End API Practical analysis 266

11.3 API Reptiles : Crawling mp3 Resource information 268

11.4 Summary 272

12.1 Scrapy Crawler architecture 273

12.2 install Scrapy 275

12.3 establish cnblogs project 276

12.4 Create a crawler module 277

12.5 Selectors 278

12.5.1 Selector Usage of 278

12.5.2 HTML Parsing implementation 280

12.6 Command line tools 282

12.7 Definition Item 284

12.8 Page turning function 286

12.9 structure Item Pipeline 287

12.9.1 customized Item Pipeline 287

12.9.2 Activate Item Pipeline 288

12.10 Built in data storage 288

12.11 Built in image and file download method 289

12.12 Start the crawler 294

12.13 Strengthen reptiles 297

12.13.1 Debugging method 297

12.13.2 abnormal 299

12.13.3 Control operation state 300

12.14 Summary 301

13.1 Look again Spider 302

13.2 Item Loader 308

13.2.1 Item And Item Loader 308

13.2.2 Input and output processors 309

13.2.3 Item Loader Context 310

13.2.4 Reuse and extend Item Loader 311

13.2.5 Built in processor 312

13.3 Look again Item Pipeline 314

13.4 Request and response 315

13.4.1 Request object 315

13.4.2 Response object 318

13.5 Downloader middleware 320

13.5.1 Activate the downloader middleware 320

13.5.2 Write downloader middleware 321

13.6 Spider middleware 324

13.6.1 Activate Spider middleware 324

13.6.2 To write Spider middleware 325

13.7 Expand 327

13.7.1 Configure extensions 327

13.7.2 Custom extensions 328

13.7.3 Built in extensions 332

13.8 Break through anti reptiles 332

13.8.1 UserAgent pool 333

13.8.2 Ban Cookies 333

13.8.3 Set download delay and automatic speed limit 333

13.8.4 agent IP pool 334

13.8.5 Tor agent 334

13.8.6 Distributed downloader :Crawlera 337

13.8.7 Google cache 338

13.9 Summary 339

14.1 Create a crawler 340

14.2 Definition Item 342

14.3 Create a crawler module 343

14.3.1 Log in to Zhihu 343

14.3.2 Parsing function 345

14.4 Pipeline 351

14.5 Optimization measures 352

14.6 Deploy crawler 353

14.6.1 Scrapyd 354

14.6.2 Scrapyd-client 356

14.7 Summary 357

15.1 De duplication scheme 360

15.2 BloomFilter Algorithm 361

15.2.1 BloomFilter principle 361

15.2.2 Python Realization BloomFilter 363

15.3 Scrapy and BloomFilter 364

15.4 Summary 366

16.1 Redis Basics 367

16.1.1 Redis brief introduction 367

16.1.2 Redis Installation and configuration 368

16.1.3 Redis Data type and operation 372

16.2 Python and Redis 375

16.2.1 Python operation Redis 375

16.2.2 Scrapy Integrate Redis 384

16.3 MongoDB colony 385

16.4 Summary 390

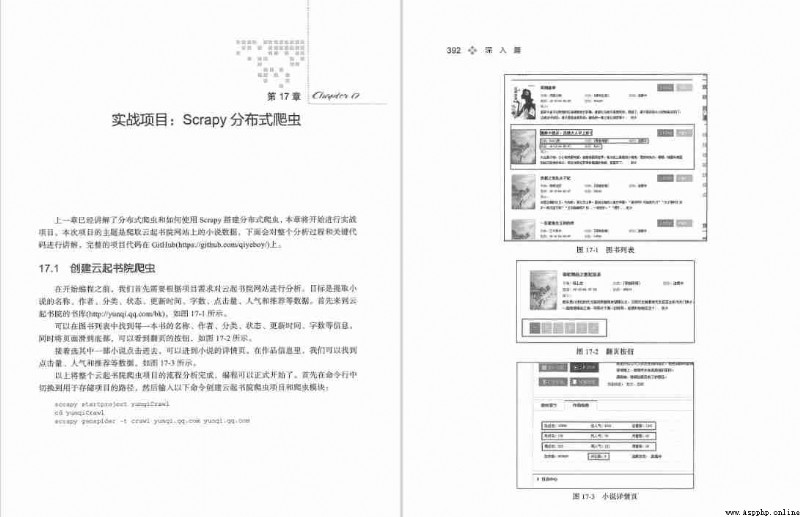

17.1 Create Yunqi academy crawler 391

17.2 Definition Item 393

17.3 Write the crawler module 394

17.4 Pipeline 395

17.5 Dealing with anti reptile mechanisms 397

17.6 De optimize 400

17.7 Summary 401

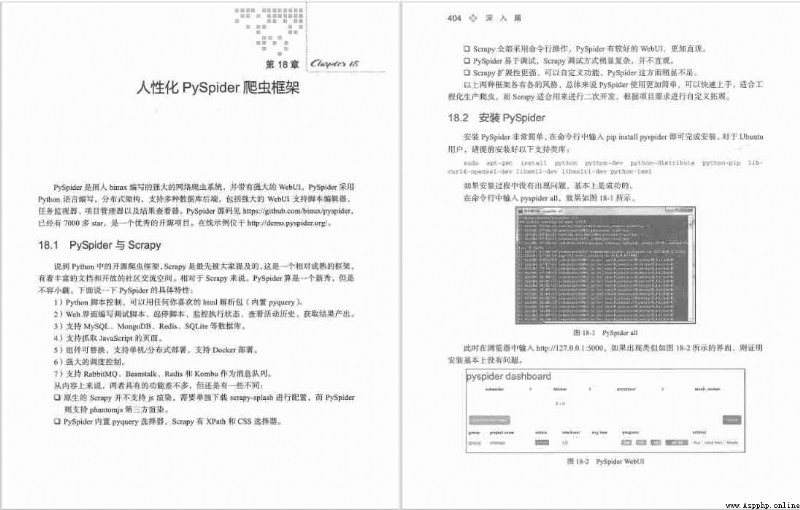

18.1 PySpider And Scrapy 403

18.2 install PySpider 404

18.3 Create a bean crawler 405

18.4 Selectors 409

18.4.1 PyQuery Usage of 409

18.4.2 Parsing data 411

18.5 Ajax and HTTP request 415

18.5.1 Ajax Crawling 415

18.5.2 HTTP Request to implement 417

18.6 PySpider and PhantomJS 417

18.6.1 Use PhantomJS 418

18.6.2 function JavaScript 420

18.7 data storage 420

18.8 PySpider Crawler architecture 422

18.9 Summary 423

For reasons of length , This is not going to unfold one by one , Friends, if you need a full set of 《 Python Reptile development and project practice 》PDF, give the thumbs-up + Just comment on the crawler , I'll always reply !