Contents

1. Analyzing the web page and capturing requests

2. Writing the code

I wrote this character lookup tool in Python and have turned it into a Nonebot2 plugin. You can get the plugin package in my group.

1. Analyzing the web page and capturing requests

This is a dynamic web page, so crawling its HTML does not give us the complete data. Selenium is not an option either if we want to turn this into a bot plugin. Instead, we can inspect the requests the page sends and find the API address that returns the data.

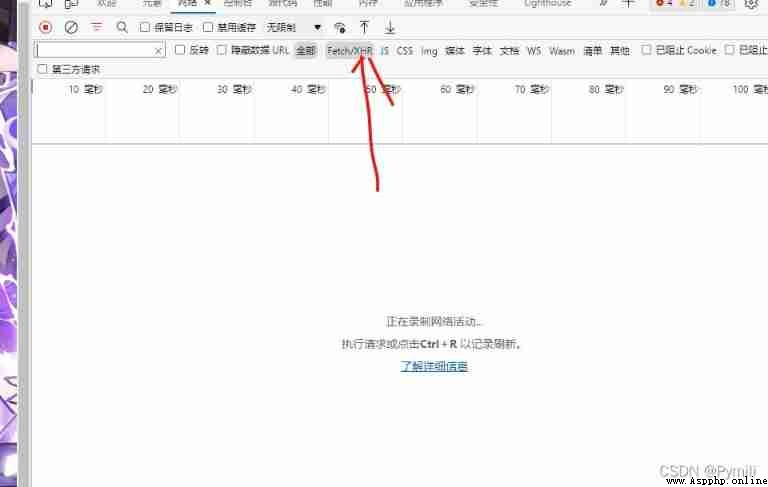

Select the XHR filter to look for the API requests.

As the figure above shows, clicking another character triggers a new XHR request.

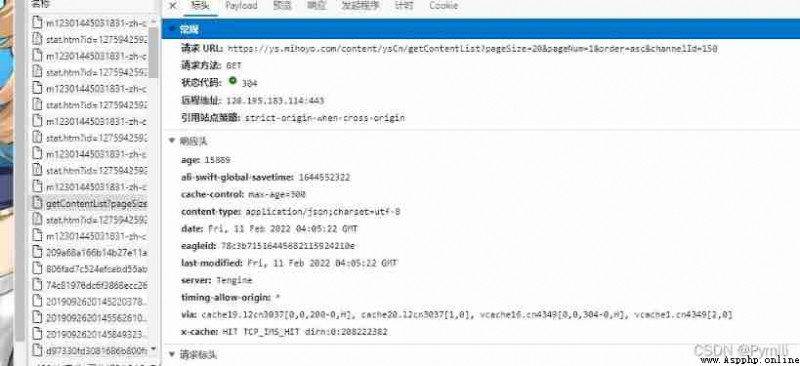

So with a few more clicks we can locate the API request.

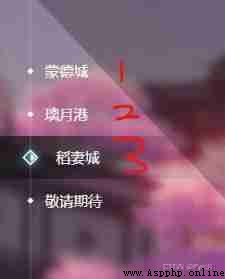

That's the data we need: the response is JSON, so we can request it directly. On the page I found three different request addresses, one for each of the three cities, so we need to fetch three XHR requests.
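The code later in this post reads the response as `data` → `list`, with one entry per character, each holding a `title` and an `ext` list of fields. The sketch below walks a hand-made payload of that shape. The sample values are placeholders I made up, not real API output, and the real `ext` lists hold many more fields; `list_titles` and `first_ext_url` are hypothetical helper names.

```python
# Hypothetical payload shaped like what the crawler reads from getContentList.
# All values are placeholders, not real API output.
sample = {
    "data": {
        "list": [
            {"title": "Character A",
             "ext": [{"value": [{"url": "https://example.com/a-icon.png"}]}]},
            {"title": "Character B",
             "ext": [{"value": [{"url": "https://example.com/b-icon.png"}]}]},
        ]
    }
}


def list_titles(payload):
    """Return the character titles found under data -> list."""
    return [item["title"] for item in payload["data"]["list"]]


def first_ext_url(item):
    """Return the URL stored in the first ext field (the icon, in the crawler)."""
    return item["ext"][0]["value"][0]["url"]


print(list_titles(sample))
for item in sample["data"]["list"]:
    print(item["title"], first_ext_url(item))
```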

2. Writing the code

Basic data: the three links

```python
import requests
from bs4 import BeautifulSoup as be

# One API address per region; only channelId differs
md_url = "https://ys.mihoyo.com/content/ysCn/getContentList?pageSize=20&pageNum=1&order=asc&channelId=150"  # Mondstadt
ly_url = "https://ys.mihoyo.com/content/ysCn/getContentList?pageSize=20&pageNum=1&order=asc&channelId=151"  # Liyue
dq_url = "https://ys.mihoyo.com/content/ysCn/getContentList?pageSize=20&pageNum=1&order=asc&channelId=324"  # Inazuma
```
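Since the three links differ only in `channelId`, they can also be built from one base address. This is a minimal sketch; `REGIONS` and `region_url` are hypothetical names, and I have not checked whether the API accepts `pageSize` or `pageNum` values other than the ones in the original links.

```python
from urllib.parse import urlencode

API = "https://ys.mihoyo.com/content/ysCn/getContentList"

# channelId per region, taken from the three links above
REGIONS = {"Mondstadt": 150, "Liyue": 151, "Inazuma": 324}


def region_url(channel_id, page_size=20, page_num=1):
    """Build a getContentList URL with the same query parameters as the original links."""
    query = urlencode({
        "pageSize": page_size,
        "pageNum": page_num,
        "order": "asc",
        "channelId": channel_id,
    })
    return f"{API}?{query}"


urls = {region: region_url(cid) for region, cid in REGIONS.items()}
print(urls["Mondstadt"])
```

With `requests`, the same query can be passed as `requests.get(API, params={...})` instead of building the string by hand.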

Next, write a function that fetches the JSON data from each API:

```python
def get_json(url):
    """Return the "data" part of the API response, or None if the request failed."""
    req = requests.get(url=url, timeout=10)
    if req.status_code == 200:
        return req.json()["data"]
    return None
```

Then clean the data: throw away the fields we don't use and keep only the useful ones.

```python
def clean_data(raw):
    """Extract the useful fields of each character entry into a flat dict."""
    result = []
    for item in raw["list"]:
        ext = item["ext"]  # list of fields, accessed by fixed position
        result.append({
            "Character icon": ext[0]["value"][0]["url"],
            "Desktop artwork": ext[1]["value"][0]["url"],
            "Mobile artwork": ext[15]["value"][0]["url"],
            "Character name": item["title"],
            "Element icon": ext[3]["value"][0]["url"],
            "Role language": ext[4]["value"],
            "Voice actor 1": ext[5]["value"],
            "Voice actor 2": ext[6]["value"],
            # The introduction is HTML; keep the text of its first <p> (needs lxml installed)
            "Introduction": be(ext[7]["value"], "lxml").p.text.strip(),
            "Lines": ext[8]["value"][0]["url"],
            # ext[9] to ext[14] each hold one audio clip: {clip name: clip URL}
            "Audio": {
                ext[i]["value"][0]["name"]: ext[i]["value"][0]["url"]
                for i in range(9, 15)
            },
        })
    return result
```

Later we will write a function that looks up a character, so first we store the data keyed by character name: data[character name] = {character data}.

```python
def data():
    """Fetch all three regions and index the cleaned records by character name."""
    characters = {}
    for url in [md_url, ly_url, dq_url]:
        raw = get_json(url)
        if raw is None:  # request failed; skip this region instead of crashing
            continue
        for info in clean_data(raw):
            characters[info["Character name"]] = info
    return characters
```

Now the data() function gives us the organized data.
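clean_data reads every field by fixed position (ext[0] to ext[15]). If a single character's entry has fewer fields, an IndexError aborts the whole region. One way to soften this is a small accessor that returns None for missing fields; `ext_value` is a hypothetical helper, sketched under the assumption that each field looks like the ones clean_data already reads.

```python
def ext_value(ext, index, field=None):
    """Return ext[index]["value"], or ext[index]["value"][0][field] when field is given.

    Returns None instead of raising when the position or key is missing.
    """
    try:
        value = ext[index]["value"]
        return value[0][field] if field else value
    except (IndexError, KeyError, TypeError):
        return None


# Hypothetical ext list with only two fields
ext = [{"value": [{"url": "https://example.com/icon.png"}]}, {"value": "plain text"}]
print(ext_value(ext, 0, "url"))
print(ext_value(ext, 15, "url"))
```

Inside clean_data it would be used as, for example, `"Character icon": ext_value(ext, 0, "url")`, so a missing field shows up as None in the output rather than stopping the crawl.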

Our last step is to find the function

def lookup(name):

json = data()[name]

print(" Find the character :",name)

for key,value in json.items():

if key == " Audio ":

for keys,values in json[key].items():

print(f"{keys}{values}")

else:

print(f"{key}:{value}")

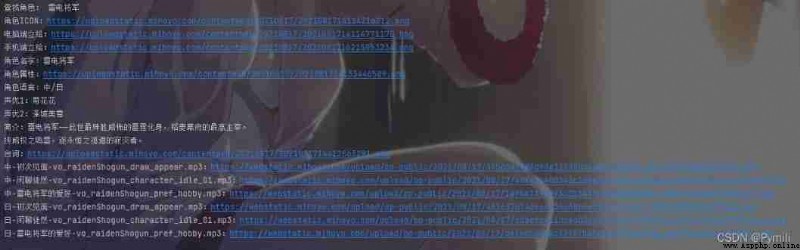

# Usage method lookup(' Character name ')Let's see the effect :

That completes the crawler. Here is the complete code:

```python
import requests
from bs4 import BeautifulSoup as be

# One API address per region; only channelId differs
md_url = "https://ys.mihoyo.com/content/ysCn/getContentList?pageSize=20&pageNum=1&order=asc&channelId=150"  # Mondstadt
ly_url = "https://ys.mihoyo.com/content/ysCn/getContentList?pageSize=20&pageNum=1&order=asc&channelId=151"  # Liyue
dq_url = "https://ys.mihoyo.com/content/ysCn/getContentList?pageSize=20&pageNum=1&order=asc&channelId=324"  # Inazuma


def get_json(url):
    """Return the "data" part of the API response, or None if the request failed."""
    req = requests.get(url=url, timeout=10)
    if req.status_code == 200:
        return req.json()["data"]
    return None


def clean_data(raw):
    """Extract the useful fields of each character entry into a flat dict."""
    result = []
    for item in raw["list"]:
        ext = item["ext"]  # list of fields, accessed by fixed position
        result.append({
            "Character icon": ext[0]["value"][0]["url"],
            "Desktop artwork": ext[1]["value"][0]["url"],
            "Mobile artwork": ext[15]["value"][0]["url"],
            "Character name": item["title"],
            "Element icon": ext[3]["value"][0]["url"],
            "Role language": ext[4]["value"],
            "Voice actor 1": ext[5]["value"],
            "Voice actor 2": ext[6]["value"],
            # The introduction is HTML; keep the text of its first <p> (needs lxml installed)
            "Introduction": be(ext[7]["value"], "lxml").p.text.strip(),
            "Lines": ext[8]["value"][0]["url"],
            # ext[9] to ext[14] each hold one audio clip: {clip name: clip URL}
            "Audio": {
                ext[i]["value"][0]["name"]: ext[i]["value"][0]["url"]
                for i in range(9, 15)
            },
        })
    return result


def data():
    """Fetch all three regions and index the cleaned records by character name."""
    characters = {}
    for url in [md_url, ly_url, dq_url]:
        raw = get_json(url)
        if raw is None:  # request failed; skip this region instead of crashing
            continue
        for info in clean_data(raw):
            characters[info["Character name"]] = info
    return characters


def lookup(name):
    """Print every stored field of one character."""
    characters = data()
    if name not in characters:
        print("Character not found:", name)
        return
    print("Found character:", name)
    for key, value in characters[name].items():
        if isinstance(value, dict):  # the nested "Audio" dict: one line per clip
            for clip, url in value.items():
                print(f"{clip}: {url}")
        else:
            print(f"{key}: {value}")
```

This function has been made into my bot plugin. You can join group 706128290 to discuss it and download the plugin package.

I'm PYmili. See you next time!