If we have a `.tflite` file, how do we use it in Python? There are two options: the `tensorflow` library or the `tflite_runtime` library.

The `tensorflow` library contains a `lite` sub-module (`tf.lite`) that is designed specifically for TFLite models.

Example code:

```python
import tensorflow as tf
import cv2
import numpy as np


def preprocess(image):
    """Input image preprocessing: BGR image -> model input tensor."""
    image = cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)  # 3-channel BGR -> single-channel grayscale
    image = cv2.resize(image, (64, 64))              # resize to 64x64
    tensor = np.expand_dims(image, axis=[0, -1])     # (64, 64) -> (1, 64, 64, 1): add batch and channel axes
    tensor = tensor.astype('float32')
    return tensor


# API docs: https://www.tensorflow.org/api_docs/python/tf/lite/Interpreter#args_1
emotion_model_tflite = tf.lite.Interpreter("output.tflite")  # load the TFLite model
emotion_model_tflite.allocate_tensors()  # allocate memory for all tensors; required before set_tensor()/invoke()

tflite_input_details = emotion_model_tflite.get_input_details()    # details of the input node(s)
tflite_output_details = emotion_model_tflite.get_output_details()  # details of the output node(s)

# Load the image and turn it into an input tensor, exactly as for Keras or TensorFlow inference
img = cv2.imread("1fae49da5f2472cf260e3d0aa08d7e32.jpeg")
if img is None:
    raise FileNotFoundError("could not read the input image")
input_tensor = preprocess(img)

# Fill in the input tensor
emotion_model_tflite.set_tensor(tflite_input_details[0]['index'], input_tensor)

# Run inference
emotion_model_tflite.invoke()

# Get the inference result
custom = emotion_model_tflite.get_tensor(tflite_output_details[0]['index'])
print(custom)
```
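The preprocessing above assumes the model takes a `float32` tensor of shape `(1, 64, 64, 1)`. If you are unsure what a given `.tflite` file expects, each dictionary returned by `get_input_details()` has `'shape'`, `'dtype'` and `'quantization'` entries. The sketch below is a hypothetical helper, `match_input`, which is not part of the TFLite API. It checks the shape. For an integer-quantized input it applies TFLite's affine quantization `real = scale * (q - zero_point)`, inverted as `q = round(x / scale) + zero_point`. It assumes the detail dict carries those three keys.

```python
import numpy as np


def match_input(tensor, detail):
    """Hypothetical helper: adapt a float tensor to one entry of get_input_details().

    Assumes `detail` has the 'shape', 'dtype' and 'quantization' keys that
    Interpreter.get_input_details() returns for each input.
    """
    expected_shape = tuple(detail['shape'])
    if tuple(tensor.shape) != expected_shape:
        raise ValueError(f"expected input shape {expected_shape}, got {tuple(tensor.shape)}")

    dtype = np.dtype(detail['dtype'])
    if np.issubdtype(dtype, np.integer):
        # Integer-quantized input: real_value = scale * (q - zero_point),
        # so q = x / scale + zero_point, rounded and clipped to the dtype's range.
        scale, zero_point = detail['quantization']
        q = np.round(tensor / scale) + zero_point
        info = np.iinfo(dtype)
        return np.clip(q, info.min, info.max).astype(dtype)
    # Float input: only the dtype needs to match.
    return tensor.astype(dtype)
```

In the script above it would be used as `input_tensor = match_input(preprocess(img), tflite_input_details[0])`. Failing early with a clear shape message is easier to debug than the error `set_tensor()` raises on a mismatch.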

As the name suggests, `tflite_runtime` is a runtime library for TFLite models. The full `tensorflow` package is very large, so if all we need is inference with a TFLite model, this lighter library is a good choice.
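Both libraries expose an `Interpreter` with the same interface, so a script can prefer the lightweight `tflite_runtime` and fall back to the full `tensorflow` package when it is not installed. Below is a minimal sketch, assuming at least one of the two packages is available. `get_interpreter_class` is a hypothetical helper name.

```python
def get_interpreter_class():
    """Return an Interpreter class, preferring tflite_runtime over tensorflow.

    Hypothetical helper: raises ImportError if neither package is installed.
    """
    try:
        from tflite_runtime.interpreter import Interpreter  # lightweight runtime only
        return Interpreter
    except ImportError:
        pass
    try:
        import tensorflow as tf  # full TensorFlow, much larger install
        return tf.lite.Interpreter
    except ImportError:
        raise ImportError("install either tflite_runtime or tensorflow") from None
```

Usage: `Interpreter = get_interpreter_class()` followed by `model = Interpreter("output.tflite")`. The rest of the inference code stays the same either way.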

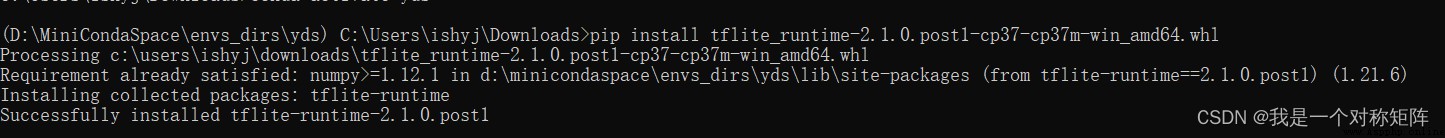

First, go to the TensorFlow Lite Interpreter installation page and download the `.whl` file that matches your platform and Python version. Then install it with pip: `pip install <path to the downloaded .whl file>`

Here is the code. It is the same as the `tensorflow` version except for the two lines marked "change":

```python
import tflite_runtime.interpreter as tflite  # change 1: import from tflite_runtime instead of tensorflow
import cv2
import numpy as np


def preprocess(image):
    image = cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)  # BGR -> grayscale
    image = cv2.resize(image, (64, 64))              # resize to 64x64
    tensor = np.expand_dims(image, axis=[0, -1])     # (64, 64) -> (1, 64, 64, 1)
    tensor = tensor.astype('float32')
    return tensor


emotion_model_tflite = tflite.Interpreter("output.tflite")  # change 2: tflite.Interpreter instead of tf.lite.Interpreter
emotion_model_tflite.allocate_tensors()

tflite_input_details = emotion_model_tflite.get_input_details()
tflite_output_details = emotion_model_tflite.get_output_details()

img = cv2.imread("1fae49da5f2472cf260e3d0aa08d7e32.jpeg")
if img is None:
    raise FileNotFoundError("could not read the input image")
input_tensor = preprocess(img)

emotion_model_tflite.set_tensor(tflite_input_details[0]['index'], input_tensor)
emotion_model_tflite.invoke()

custom = emotion_model_tflite.get_tensor(tflite_output_details[0]['index'])
print(custom)
```
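The printed `custom` is the raw output array, presumably of shape `(1, number_of_classes)` with one score per emotion class. Turning it into a label requires the class order the model was trained with, which is not given here. `EMOTION_LABELS` below is a hypothetical placeholder. This is a minimal sketch. Pass `from_logits=True` only if the model's last layer does not already apply a softmax.

```python
import numpy as np

# Hypothetical class order: replace with the labels your model was actually trained on.
EMOTION_LABELS = ["angry", "disgust", "fear", "happy", "sad", "surprise", "neutral"]


def decode_prediction(output, labels, from_logits=False):
    """Map a (1, num_classes) output array to (best_label, score)."""
    scores = np.asarray(output, dtype=np.float64).reshape(-1)
    if scores.size != len(labels):
        raise ValueError(f"{scores.size} scores but {len(labels)} labels")
    if from_logits:
        exp = np.exp(scores - scores.max())  # numerically stable softmax
        scores = exp / exp.sum()
    best = int(np.argmax(scores))
    return labels[best], float(scores[best])
```

With the script above, `label, score = decode_prediction(custom, EMOTION_LABELS)` gives the top class and its score.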