Last time, when covering __getitem__, I only gave a rough, plain and simple account of what an iterable object is. Here is a better, more rigorous explanation that I found:

In Python, an iterable object (Iterable) is not a specific data type. It is a container object that stores elements, and the elements in the container can be accessed through the __iter__() method or the __getitem__() method.

The __iter__ method makes an object usable in a for ... in obj loop, while the __getitem__() method lets elements of an instance be accessed as instance[index]. The Old Ape believes that the purpose of these two methods is to give Python a general external interface for accessing the internal data of an iterable object.

An iterable object cannot iterate on its own. In Python, iteration is carried out by for ... in obj, and every iterable object can be used directly in such a loop. The statement actually does two things: first it calls __iter__() to get an iterator, then it calls __next__() on that iterator repeatedly until StopIteration is raised.
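The two steps described above can be spelled out by hand. The following is a minimal sketch of roughly what a for loop does; the helper name manual_for is made up for this illustration and is not part of Python.

```python
def manual_for(obj):
    """Collect the items that `for item in obj` would visit (illustrative helper)."""
    collected = []
    iterator = iter(obj)  # step 1: ask the iterable for an iterator
    while True:
        try:
            item = next(iterator)  # step 2: calls iterator.__next__()
        except StopIteration:  # the iterator signals that it is exhausted
            break
        collected.append(item)
    return collected


result = manual_for("abc")
print(result)
```

The loop ends only because the iterator raises StopIteration, which is why a hand-written __next__ has to raise it as well.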

Common iterable objects include:

a) Collection data types, such as list, tuple, dict, set, str, etc.;

b) Generators, including generators themselves and generator functions that use yield, which the next section covers.

How can you tell whether an object is iterable? The specific methods are as follows:

Use the iterable function from numpy:

    from numpy import iterable

    print(iterable(obj))  # obj is the instance to check

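Use the Iterable class from the collections.abc module (importing it directly from collections no longer works since Python 3.10):

    from collections.abc import Iterable

    isinstance(obj, Iterable)  # obj is the instance to check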
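The two kinds of check do not always agree. As a hedged illustration: the isinstance test only recognizes classes that define __iter__, while calling iter() also accepts objects that only provide __getitem__. The class OnlyGetItem and the helper is_iterable below are hypothetical names used just for this sketch.

```python
from collections.abc import Iterable


class OnlyGetItem:
    """Hypothetical class that exposes only __getitem__, not __iter__."""

    def __getitem__(self, index):
        if index >= 3:
            raise IndexError(index)  # tells Python the sequence has ended
        return index * 10


def is_iterable(obj):
    """Return True if iter(obj) succeeds (illustrative helper)."""
    try:
        iter(obj)
    except TypeError:
        return False
    return True


obj = OnlyGetItem()
print(isinstance(obj, Iterable))  # False: the class has no __iter__
print(is_iterable(obj))           # True: iter() falls back to __getitem__
print(list(obj))                  # [0, 10, 20]
```

If a yes/no answer is all that is needed, trying iter(obj) is the more permissive test.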

The content above comes from Old Ape Python on CSDN. It really is the work of a master: concise, clear, and above all easy to understand. Respect!

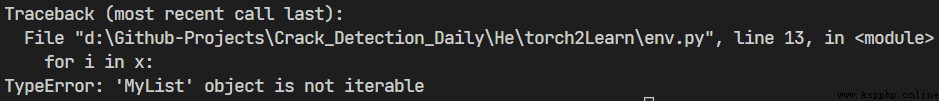

Define an arbitrary class that does not define the __iter__ method:

    class MyList:
        def __init__(self, length: int):
            self.list = list(range(length))
            self.length = length

        def __repr__(self) -> str:
            return f"MyList({self.length}):{self.list}"


    x = MyList(10)
    for i in x:  # raises TypeError: 'MyList' object is not iterable
        print(i)

Result:

The run fails with a TypeError, which shows that MyList instances are not iterable.
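The quoted definition also names __getitem__ as a way to reach the elements. A minimal sketch, assuming the same MyList fields as above and adding only a __getitem__ method, suggests that this alone is enough for a for loop to work:

```python
class MyList:
    def __init__(self, length: int):
        self.list = list(range(length))
        self.length = length

    def __getitem__(self, index: int) -> int:
        # Indexing past the end raises IndexError, which ends the for loop.
        return self.list[index]


x = MyList(3)
print(x[1])

items = [i for i in x]  # Python calls x[0], x[1], ... until IndexError
print(items)
```

Here Python falls back to calling __getitem__ with 0, 1, 2, ... because no __iter__ is defined.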

After defining the __iter__ method:

    from numpy import iterable


    class MyList:
        """Behaves like range(n): yields 0, 1, ..., n - 1."""

        def __init__(self, length: int):
            self.cursor = -1
            self.length = length

        def __iter__(self):
            # The object acts as its own iterator.
            return self

        def __next__(self):
            if self.cursor + 1 < self.length:
                self.cursor += 1
                return self.cursor
            # Signal exhaustion; calling exit() here would kill the whole program.
            raise StopIteration

        def __repr__(self) -> str:
            return f"MyList({self.length})"


    x = MyList(10)
    print(iterable(x))
    for i in x:
        print(i)

Output:

    True
    0
    1
    2
    3
    4
    5
    6
    7
    8
    9

Stepping through the iteration with next() makes this easier to see:

    class MyList:
        def __init__(self, length: int):
            self.cursor = -1
            self.length = length

        def __iter__(self):
            # The object acts as its own iterator.
            return self

        def __next__(self):
            if self.cursor + 1 < self.length:
                self.cursor += 1
                return self.cursor
            # Signal exhaustion; calling exit() here would kill the whole program.
            raise StopIteration

        def __repr__(self) -> str:
            return f"MyList({self.length})"


    x = MyList(10)
    print(iter(x))  # __iter__ returns x itself, so this prints its repr
    print(next(x))
    print(next(x))
    print(next(x))
    print(next(x))
    for i in x:  # continues from where the next() calls stopped
        print(i)

The output is:

    MyList(10)
    0
    1
    2
    3
    4
    5
    6
    7
    8
    9
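One consequence of returning self from __iter__ is that the object is used up after a single pass, because the cursor never resets. As a hedged alternative (not the approach used above), __iter__ can be written as a generator function with yield, so each loop gets a fresh iterator. The class name MyRange is made up for this sketch.

```python
class MyRange:
    """Hypothetical variant whose __iter__ is a generator function."""

    def __init__(self, length: int):
        self.length = length

    def __iter__(self):
        # Each call builds a new generator with its own local cursor.
        cursor = 0
        while cursor < self.length:
            yield cursor
            cursor += 1


x = MyRange(3)
first = list(x)
second = list(x)  # a second pass starts again from 0
print(first, second)
```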

Why does iteration show up so often in PyTorch?

Probably because training deep learning models often requires very large datasets, sometimes measured in terabytes (1 TB = 1024 GB). As everyone knows, most personal computers have no more than 32 GB of memory, so loading 1 TB of data into memory in one go is clearly unrealistic.

In this case, loading the required dataset into memory batch by batch is clearly a better and more realistic solution, and it fits the concept and role of iterable objects very well. I would even assert that it is precisely because Python supports iteration natively that no other language can shake its position in deep learning.
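The batch-by-batch idea can be sketched with plain Python iterators. This is only an illustration of the principle, not how PyTorch implements it; the function name iter_batches is hypothetical, and range(10) stands in for a dataset that would be too large to hold in memory.

```python
from itertools import islice


def iter_batches(samples, batch_size):
    """Yield lists of up to batch_size items, pulling them lazily from samples."""
    iterator = iter(samples)
    while True:
        batch = list(islice(iterator, batch_size))  # take the next few items only
        if not batch:  # nothing left to read
            return
        yield batch


batches = list(iter_batches(range(10), 4))
print(batches)  # [[0, 1, 2, 3], [4, 5, 6, 7], [8, 9]]
```

Only one batch exists in memory at a time while looping over iter_batches; list() is used here just to show every batch at once.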

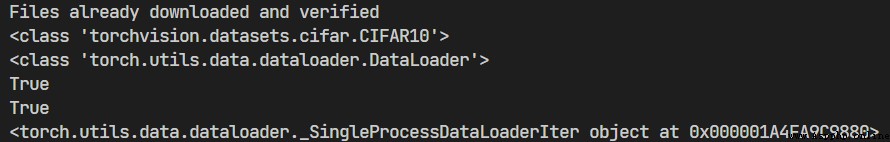

An example of loading data with DataLoader:

    from torch.utils.data import DataLoader
    from torchvision.transforms import ToTensor
    from torchvision.datasets import CIFAR10
    from numpy import iterable

    # Use the CIFAR10 benchmark dataset
    test_set = CIFAR10(root='./torch2Learn/dataset/cifar10_data',
                       train=False, download=True, transform=ToTensor())

    # Load the dataset in batches of 64 samples each
    test_loader = DataLoader(dataset=test_set, batch_size=64,
                             shuffle=True, drop_last=False)

    print(type(test_set))
    print(type(test_loader))
    print(iterable(test_set))
    print(iterable(test_loader))
    print(iter(test_loader))

    for step, item in enumerate(test_loader):
        # Each item is one batch: the images and their class labels
        imgs_arr, kinds_arr = item