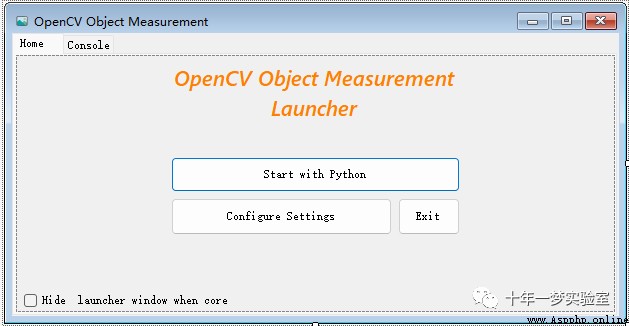

C# Interface

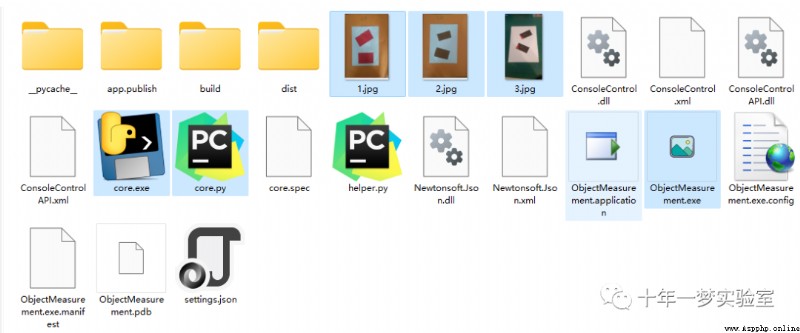

Executable program


1. Calling the Python script from C#

The output of the Python process is shown inside the form with the ConsoleControl control.
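The start button handler below runs `core.exe` when it exists and otherwise falls back to running `./core.py` with `python`. The same decision is sketched in Python here for illustration only. `build_launch_command` is a hypothetical helper, not part of the project, and it omits the leading `@` that the C# code puts before `python`.

```python
import os


def build_launch_command(core_exe="core.exe", core_py="./core.py"):
    """Return the cmd.exe argument list for the measurement core, or None.

    Prefer the packaged executable, fall back to the Python script,
    and return None when neither file exists (nothing to start).
    """
    if os.path.exists(core_exe):
        return ["cmd", "/c", core_exe]
    if os.path.exists(core_py):
        return ["cmd", "/c", "python", core_py]
    return None


print(build_launch_command())
```

On Windows the returned list could be handed to `subprocess.run`. Checking the executable first means a packaged build keeps working on machines without a Python installation.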

// Requires: using System.IO; (File.Exists)
private readonly string corePath = "./core.py";    // Python script
private readonly string corePathExe = "core.exe";  // executable generated from the Python script

private void startBtn_Click(object sender, EventArgs e)
{
    if (File.Exists(corePathExe))        // prefer the executable if it exists
    {
        // ConsoleControl starts the process: "core.exe"
        appConsole.StartProcess("cmd", $"/c {corePathExe}");
    }
    else if (File.Exists(corePath))      // otherwise fall back to the Python script
    {
        // Start the Python script: "./core.py"
        appConsole.StartProcess("cmd", $"/c @python {corePath}");
    }
    else
    {
        return;                          // neither file exists: nothing to start
    }

    /*
    // Alternative without ConsoleControl (the script gets its own console window):
    ProcessStartInfo startInfo = new ProcessStartInfo();
    startInfo.FileName = "python";
    startInfo.Arguments = corePath;
    startInfo.UseShellExecute = true;
    Process.Start(startInfo);
    */

    menuCtrl.SelectedIndex = 1;  // switch the TabControl to its second page
    if (closeCheck.Checked) this.WindowState = FormWindowState.Minimized;  // minimize the form if the box is checked
}

2. Python script: object size detection

2.1 core.py
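core.py derives its geometry from settings.json: the camera resolution comes from a `"1920x1080"`-style string, and the rectified A4 sheet is `210 * generalScale` by `297 * generalScale` pixels, so one pixel of the warped sheet is `1 / generalScale` mm. A minimal sketch of that derivation, using the sample values from the settings.json in section 3; `derive_geometry` and `A4_MM` are hypothetical names.

```python
import json

A4_MM = (210, 297)  # A4 sheet size in millimetres (width, height)


def derive_geometry(settings):
    """Derive the capture resolution and the warped-sheet size from settings values."""
    scale = int(settings["generalScale"])  # pixels per millimetre after warping
    width, height = (int(v) for v in settings["resolution"].split("x"))
    sheet_px = (A4_MM[0] * scale, A4_MM[1] * scale)  # (wP, hP) in core.py
    return {"scale": scale, "capture": (width, height), "sheet_px": sheet_px}


settings = json.loads('{"generalScale": 3, "resolution": "1920x1080"}')
print(derive_geometry(settings))  # {'scale': 3, 'capture': (1920, 1080), 'sheet_px': (630, 891)}
```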

import json

import cv2

import helper

with open('./settings.json') as img_f:  # read the configuration
    settings = json.load(img_f)  # load the configuration parameters

webcam = settings['useWebcam']  # whether to use a webcam
path = settings['imgFilePath']  # image path
dashed_gap = int(settings['dashGapScale'])  # spacing between the dots of the dotted outline
scale = int(settings['generalScale'])  # scale: pixels per millimetre in the warped image
wP = 210 * scale  # A4 width in pixels (210 * 3 = 630)
hP = 297 * scale  # A4 height in pixels (297 * 3 = 891)
windowName = settings['windowName']  # window title

cap = None
if webcam:
    cap = cv2.VideoCapture(int(settings['webcamIndex']))  # open the camera
    # 10 = CAP_PROP_BRIGHTNESS, image brightness (cameras only); https://blog.csdn.net/qq_43797817/article/details/108096827
    cap.set(cv2.CAP_PROP_BRIGHTNESS, 160)
    resArray = settings['resolution'].split('x')  # e.g. "1920x1080" -> ["1920", "1080"]
    cap.set(cv2.CAP_PROP_FRAME_WIDTH, int(resArray[0]))  # 3: frame width of the video stream
    cap.set(cv2.CAP_PROP_FRAME_HEIGHT, int(resArray[1]))  # 4: frame height of the video stream

print('Settings loaded.')
print('Image process loop started.')

while True:
    if webcam:  # use a webcam
        success, img = cap.read()  # grab a frame
        if not success:
            break
    else:
        img = cv2.imread(path)  # read the image file
        if img is None:  # missing or unreadable file
            break
    imgLast = img  # shown as-is if no sheet is found

    imgContours, conts = helper.getBorders(img, minArea=50000, filter=4)  # quadrilaterals, largest first
    if len(conts) != 0:  # found the sheet
        biggest = conts[0][2]  # fitted quadrilateral of the largest contour
        imgWarp = helper.warpImg(img, biggest, wP, hP)  # perspective-map the sheet to wP x hP pixels
        # Search the rectified sheet for quadrilaterals with an area above 2000 px
        imgContours2, conts2 = helper.getBorders(imgWarp, minArea=2000, filter=4, cThr=[50, 50], draw=False)
        if len(conts2) != 0:  # found objects on the sheet
            for obj in conts2:  # sorted by area, largest first
                nPoints = helper.reorder(obj[2])  # top-left, top-right, bottom-left, bottom-right
                # distance in px / scale = mm, / 10 = cm
                nW = round(helper.findDis(nPoints[0][0], nPoints[1][0]) / scale / 10, 1)  # real width
                nH = round(helper.findDis(nPoints[0][0], nPoints[2][0]) / scale / 10, 1)  # real height
                # Dotted outline: image, start, end, colour, thickness, style, gap
                for i in range(4):
                    helper.dashLine(imgContours2, obj[2][i][0], obj[2][(i + 1) % 4][0],
                                    (0, 140, 255), 2, 'dotted', dashed_gap)
                x, y, w, h = obj[3]  # upright bounding rectangle
                cv2.putText(imgContours2, '{}cm'.format(nW), (x + 30, y - 10),
                            cv2.FONT_HERSHEY_SIMPLEX, 0.5, (0, 0, 0), 1)  # width label
                cv2.putText(imgContours2, '{}cm'.format(nH), (x - 70, y + h // 2),
                            cv2.FONT_HERSHEY_SIMPLEX, 0.5, (0, 0, 0), 1)  # height label
            imgLast = imgContours2  # final image, with the dimension labels

    cv2.imshow(windowName, imgLast)  # show
    k = cv2.waitKey(1 if webcam else 0)  # webcam: poll for a key; still image: wait for one
    if k == 27:  # Esc
        break
    if cv2.getWindowProperty(windowName, cv2.WND_PROP_VISIBLE) <= 0:  # window closed with its X button
        break

if cap is not None:
    cap.release()
cv2.destroyAllWindows()

2.2 helper.py

import cv2
import numpy as np

# Image processing: find contours and their fitted polygons
def getBorders(img, cThr=[100, 100], showCanny=False, minArea=1000, filter=0, draw=False):
    imgGray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)  # grayscale
    imgBlur = cv2.GaussianBlur(imgGray, (5, 5), 1)  # Gaussian blur
    imgCanny = cv2.Canny(imgBlur, cThr[0], cThr[1])  # Canny edge detection; cThr = (low, high) thresholds
    kernel = np.ones((5, 5))  # 5x5 structuring element
    imgDial = cv2.dilate(imgCanny, kernel, iterations=3)  # dilation closes gaps in the edges
    imgThre = cv2.erode(imgDial, kernel, iterations=2)  # erosion thins them again
    if showCanny:
        cv2.imshow('Canny', imgThre)  # show the edge map (off by default)
    # OpenCV 4.x returns (contours, hierarchy); OpenCV 3.x returns three values
    contours, hierarchy = cv2.findContours(imgThre, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE)
    finalContours = []
    for i in contours:
        area = cv2.contourArea(i)  # contour area; https://www.jianshu.com/p/6bde79df3f9d
        if area > minArea:  # keep only contours above the area threshold
            peri = cv2.arcLength(i, True)  # perimeter of the closed contour
            approx = cv2.approxPolyDP(i, 0.02 * peri, True)  # polygon approximation
            # Upright bounding rectangle (minAreaRect would give the rotated one); https://www.cnblogs.com/bjxqmy/p/12347355.html
            bbox = cv2.boundingRect(approx)
            # filter > 0: keep only polygons with exactly `filter` vertices; 0: no filtering
            if filter <= 0 or len(approx) == filter:
                # [vertex count, area, approximate polygon, bounding box, contour]
                finalContours.append([len(approx), area, approx, bbox, i])
    finalContours = sorted(finalContours, key=lambda x: x[1], reverse=True)  # sort by area, largest first
    if draw:
        for con in finalContours:
            cv2.drawContours(img, [con[4]], -1, (0, 0, 255), 3)  # draw the contour in red
    return img, finalContours  # input image (with contours if draw=True), contour list
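The filtering and sorting at the end of getBorders decide what core.py sees as `conts[0]`: the largest polygon with the requested number of vertices. A pure-Python sketch of just that step, where each candidate is reduced to a hypothetical `(vertex_count, area)` pair standing in for the `[len(approx), area, approx, bbox, contour]` records; `select_polygons` is an illustrative name.

```python
def select_polygons(candidates, min_area=1000, sides=0):
    """Keep candidates above min_area (with `sides` vertices if sides > 0), largest area first."""
    kept = [c for c in candidates
            if c[1] > min_area and (sides <= 0 or c[0] == sides)]
    return sorted(kept, key=lambda c: c[1], reverse=True)


cands = [(4, 60000), (5, 90000), (4, 120000), (4, 800)]
print(select_polygons(cands, min_area=50000, sides=4))  # [(4, 120000), (4, 60000)]
```

With `sides=4`, the pentagon is dropped even though it is larger than one of the quadrilaterals, which is why the A4 sheet must be fitted as a clean four-corner polygon to be picked up.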

# Reorder the 4 corners of a fitted quadrilateral as
# top-left, top-right, bottom-left, bottom-right
def reorder(myPoints):
    myPointsNew = np.zeros_like(myPoints)  # same shape as the input, e.g. (4, 1, 2)
    myPoints = myPoints.reshape((4, 2))  # 4 points, (x, y) each
    add = myPoints.sum(1)  # x + y for each point
    myPointsNew[0] = myPoints[np.argmin(add)]  # top-left: smallest x + y; https://blog.csdn.net/qq_37591637/article/details/103385174
    myPointsNew[3] = myPoints[np.argmax(add)]  # bottom-right: largest x + y
    diff = np.diff(myPoints, axis=1)  # y - x for each point (out[i] = a[i+1] - a[i] along axis 1)
    myPointsNew[1] = myPoints[np.argmin(diff)]  # top-right: smallest y - x
    myPointsNew[2] = myPoints[np.argmax(diff)]  # bottom-left: largest y - x
    return myPointsNew
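A worked example of the sum/difference trick used by reorder, on a hypothetical set of shuffled corners from a slightly tilted sheet. `reorder_corners` is an illustrative re-implementation with a flat `(4, 2)` result rather than the `(4, 1, 2)` shape helper.reorder keeps. The trick assumes moderate tilt; near 45° rotation two corners can tie on `x + y` or `y - x`.

```python
import numpy as np


def reorder_corners(pts):
    """Order 4 (x, y) corners as top-left, top-right, bottom-left, bottom-right.

    x + y is smallest at the top-left and largest at the bottom-right;
    y - x is smallest at the top-right and largest at the bottom-left.
    """
    pts = np.asarray(pts).reshape(4, 2)
    s = pts.sum(axis=1)             # x + y
    d = np.diff(pts, axis=1)[:, 0]  # y - x
    return pts[[np.argmin(s), np.argmin(d), np.argmax(d), np.argmax(s)]]


quad = [[400, 310], [95, 40], [60, 300], [420, 20]]  # shuffled corners
print(reorder_corners(quad).tolist())  # [[95, 40], [420, 20], [60, 300], [400, 310]]
```

The same top-left, top-right, bottom-left, bottom-right order is what warpImg's destination corners `[[0,0],[w,0],[0,h],[w,h]]` expect.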

# Return the corner at position `index` of the reordered corners
# (0: top-left, 1: top-right, 2: bottom-left, 3: bottom-right)
def getorder(myPoints, index):
    return reorder(myPoints)[index]

# Perspective-warp the quadrilateral `points` to a w x h rectangle,
# then crop `pad` pixels from each side to remove the sheet's edge
def warpImg(img, points, w, h, pad=20):
    points = reorder(points)  # top-left, top-right, bottom-left, bottom-right
    pts1 = np.float32(points)  # source corners
    pts2 = np.float32([[0, 0], [w, 0], [0, h], [w, h]])  # destination corners, same order
    matrix = cv2.getPerspectiveTransform(pts1, pts2)  # 3x3 perspective (projective) transform matrix
    imgWarp = cv2.warpPerspective(img, matrix, (w, h))  # apply the mapping
    imgWarp = imgWarp[pad:imgWarp.shape[0] - pad, pad:imgWarp.shape[1] - pad]  # crop the padding border
    return imgWarp
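warpImg relies on `cv2.getPerspectiveTransform`, which finds the 3x3 matrix mapping the four sheet corners onto the corners of a `w x h` rectangle. A minimal NumPy sketch of the underlying linear system, fixing the bottom-right entry to 1 so eight unknowns remain; the corner coordinates are hypothetical sample values and `perspective_matrix` / `map_point` are illustrative names, not OpenCV functions.

```python
import numpy as np


def perspective_matrix(src, dst):
    """Solve for H (with h33 = 1) mapping 4 src points onto 4 dst points.

    For each pair (x, y) -> (u, v):
        u = (h11 x + h12 y + h13) / (h31 x + h32 y + 1)
        v = (h21 x + h22 y + h23) / (h31 x + h32 y + 1)
    Multiplying out gives two linear equations in the 8 unknowns.
    """
    A, b = [], []
    for (x, y), (u, v) in zip(src, dst):
        A.append([x, y, 1, 0, 0, 0, -u * x, -u * y])
        b.append(u)
        A.append([0, 0, 0, x, y, 1, -v * x, -v * y])
        b.append(v)
    h = np.linalg.solve(np.array(A, dtype=float), np.array(b, dtype=float))
    return np.append(h, 1.0).reshape(3, 3)


def map_point(H, x, y):
    """Apply H to (x, y) in homogeneous coordinates."""
    u, v, w = H @ np.array([x, y, 1.0])
    return u / w, v / w


src = [(95, 40), (420, 20), (60, 300), (400, 310)]  # TL, TR, BL, BR (sample values)
dst = [(0, 0), (630, 0), (0, 891), (630, 891)]      # A4 at scale 3
H = perspective_matrix(src, dst)
print(map_point(H, 420, 20))
```

By construction the top-right corner (420, 20) lands on (630, 0) up to floating-point error, and every other pixel of the sheet follows the same projective mapping.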

# Euclidean distance between two points
def findDis(pts1, pts2):
    return ((pts2[0] - pts1[0]) ** 2 + (pts2[1] - pts1[1]) ** 2) ** 0.5
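On the warped sheet one millimetre is `scale` pixels, so a findDis result converts to centimetres as `distance / scale / 10`. The sketch below compares dividing the finished distance by `scale` with floor-dividing each coordinate by `scale` before measuring; the point coordinates are hypothetical and both function names are illustrative.

```python
import math


def pixels_to_cm(p1, p2, scale):
    """Distance on the warped sheet (scale px per mm) in cm, rounded to 0.1."""
    dist_px = math.hypot(p2[0] - p1[0], p2[1] - p1[1])  # same as findDis
    return round(dist_px / scale / 10, 1)               # px -> mm -> cm


def pixels_to_cm_floor(p1, p2, scale):
    """Same conversion, but floor-dividing each coordinate by scale first."""
    q1 = (p1[0] // scale, p1[1] // scale)
    q2 = (p2[0] // scale, p2[1] // scale)
    return round(math.hypot(q2[0] - q1[0], q2[1] - q1[1]) / 10, 1)


print(pixels_to_cm((30, 60), (330, 460), scale=3))        # 500 px / 3 = 166.7 mm -> 16.7
print(pixels_to_cm_floor((30, 60), (330, 460), scale=3))  # truncated coordinates -> 16.6
```

Truncating each coordinate can shift every point by up to one millimetre before the distance is taken, which is enough to change the rounded result here.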

# Whether num is divisible by num2
def checkDivide(num, num2):
    return num % num2 == 0


# Return the element of `points` at position `_index`
def getPoint(points, _index):
    return list(points)[_index]

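# Draw a dotted or dashed straight line from pt1 to pt2
def dashLine(img, pt1, pt2, color, thickness=1, style='dotted', gap=20):
    dist = ((pt1[0] - pt2[0]) ** 2 + (pt1[1] - pt2[1]) ** 2) ** .5  # line length
    pts = []
    for i in np.arange(0, dist, gap):  # one sample point every `gap` pixels
        r = i / dist
        x = int((pt1[0] * (1 - r) + pt2[0] * r) + .5)  # linear interpolation, rounded
        y = int((pt1[1] * (1 - r) + pt2[1] * r) + .5)
        pts.append((x, y))
    if not pts:  # pt1 == pt2: nothing to draw
        return
    if style == 'dotted':
        for p in pts:
            cv2.circle(img, p, thickness, color, -1)  # filled dot
    else:
        # Dashed: connect every other pair of consecutive sample points
        e = pts[0]
        for i, p in enumerate(pts):
            s, e = e, p
            if i % 2 == 1:
                cv2.line(img, s, e, color, thickness)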
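dashLine samples a point every `gap` pixels along the segment; the dotted style draws a dot at each sample, and the dashed style joins every other pair of neighbouring samples. A pure-Python sketch of just the geometry, with no drawing; `dash_points` and `dash_segments` are illustrative names.

```python
def dash_points(pt1, pt2, gap):
    """Sample points every `gap` px from pt1 towards pt2, rounded to ints."""
    dist = ((pt2[0] - pt1[0]) ** 2 + (pt2[1] - pt1[1]) ** 2) ** 0.5
    pts = []
    i = 0.0
    while i < dist:  # like np.arange(0, dist, gap); empty when dist == 0
        r = i / dist
        pts.append((int(pt1[0] * (1 - r) + pt2[0] * r + 0.5),
                    int(pt1[1] * (1 - r) + pt2[1] * r + 0.5)))
        i += gap
    return pts


def dash_segments(pts):
    """Pair consecutive samples and keep every other pair (the dashes)."""
    return [(pts[k - 1], pts[k]) for k in range(1, len(pts), 2)]


pts = dash_points((0, 0), (100, 0), gap=20)
print(pts)                 # [(0, 0), (20, 0), (40, 0), (60, 0), (80, 0)]
print(dash_segments(pts))  # [((0, 0), (20, 0)), ((40, 0), (60, 0))]
```

Because the end point itself is never sampled, the last dot can stop up to `gap` pixels short of `pt2`; on a closed outline the next edge's first dot covers that corner.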

# Draw polygon -

def drawpoly(img,pts,color,thickness=1,style='dotted',):

s=pts[0]

e=pts[0]

pts.append(pts.pop(0))

i=0

for p in pts:

if p==e:continue

s=e # The starting point

if p==p[len(p)-1]:e=pts[0]

e=p # to update end spot

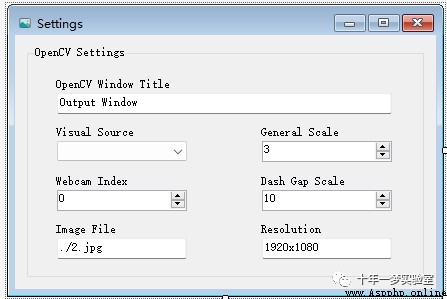

dashLine(img,s,e,color,thickness,style)3、 ... and 、json Parameters C# Read and write operations

settings.json file

{
  "useWebcam": false,
  "webcamIndex": 0,
  "imgFilePath": "./3.jpg",
  "generalScale": 3,
  "dashGapScale": 10,
  "resolution": "1920x1080",
  "windowName": "Output Window"
}

JSON configuration class

using System.IO;
using Newtonsoft.Json;

namespace ObjectMeasurement
{
    public class CoreSettings
    {
        // DTO whose property names match the keys in settings.json
        private class CoreProperties
        {
            public bool useWebcam { get; set; }       // use a webcam
            public int webcamIndex { get; set; }      // camera index
            public string imgFilePath { get; set; }   // image path
            public int generalScale { get; set; }     // scale: pixels per millimetre
            public int dashGapScale { get; set; }     // spacing of the dotted outline
            public string resolution { get; set; }    // camera resolution, e.g. "1920x1080"
            public string windowName { get; set; }    // window title
        }

        // Public settings, read-only from outside the class
        public bool useWebcam { get; private set; }
        public int webcamIndex { get; private set; }
        public string imgFilePath { get; private set; }
        public int generalScale { get; private set; }
        public int dashGapScale { get; private set; }
        public string resolution { get; private set; }
        public string windowName { get; private set; }

        private string jsonPath { get; set; }  // path of the JSON settings file

        // Constructor: remember the file path, then load the settings from it
        public CoreSettings(string _jsonPath)
        {
            jsonPath = _jsonPath;
            LoadJson();
        }

        // Set all parameters at once (after loading, or from the settings form)
        public void Configure(bool _useWebcam, int _webcamIndex,
                              string _imgFilePath, int _generalScale,
                              int _dashGapScale, string _resolution, string _windowName)
        {
            useWebcam = _useWebcam;
            webcamIndex = _webcamIndex;
            imgFilePath = _imgFilePath;
            generalScale = _generalScale;
            dashGapScale = _dashGapScale;
            resolution = _resolution;
            windowName = _windowName;
        }

        // Read the JSON file, deserialize it and apply the values
        public void LoadJson()
        {
            string json = File.ReadAllText(jsonPath);
            CoreProperties loaded = JsonConvert.DeserializeObject<CoreProperties>(json);
            Configure(loaded.useWebcam, loaded.webcamIndex,
                      loaded.imgFilePath, loaded.generalScale, loaded.dashGapScale,
                      loaded.resolution, loaded.windowName);
        }

        // Serialize the current settings and write them back to the JSON file
        public void Save()
        {
            CoreProperties properties = new CoreProperties
            {
                useWebcam = this.useWebcam,
                webcamIndex = this.webcamIndex,
                imgFilePath = this.imgFilePath,
                generalScale = this.generalScale,
                dashGapScale = this.dashGapScale,
                resolution = this.resolution,
                windowName = this.windowName
            };
            // Property names are written as declared, so the keys match settings.json
            File.WriteAllText(jsonPath, JsonConvert.SerializeObject(properties));
        }
    }
}

JSON parameter loading and saving

// When the settings window closes, read the values from the controls and save them
private void SettingsForm_FormClosing(object sender, FormClosingEventArgs e)
{
    bool _useWebcam = settings_visualSource.SelectedItem?.ToString() == "Web Camera";
    int _webcamIndex = Convert.ToInt32(settings_WebcamIndex.Value);
    string _imgFilePath = settings_ImageFile.Text;
    int _generalScale = Convert.ToInt32(settings_GeneralScale.Value);
    int _dashGapScale = Convert.ToInt32(settings_DashGapScale.Value);
    string _resolution = settings_Resolution.Text;
    string _windowName = settings_WindowTitle.Text;
    MainForm.coreSettings.Configure(_useWebcam, _webcamIndex, _imgFilePath, _generalScale, _dashGapScale, _resolution, _windowName);
    MainForm.coreSettings.Save();
}

// When the settings window opens, reload the JSON file and fill in the controls
private void SettingsForm_Load(object sender, EventArgs e)
{
    CoreSettings core = MainForm.coreSettings;
    core.LoadJson();
    settings_visualSource.SelectedIndex = core.useWebcam ? 0 : 1;  // index 0 is "Web Camera"
    settings_WebcamIndex.Value = core.webcamIndex;
    settings_ImageFile.Text = core.imgFilePath;
    settings_GeneralScale.Value = core.generalScale;
    settings_DashGapScale.Value = core.dashGapScale;
    settings_Resolution.Text = core.resolution;
    settings_WindowTitle.Text = core.windowName;
}
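CoreSettings works because its private DTO uses exactly the JSON keys as property names, so Json.NET maps them in both directions without attributes. For comparison, here is the same round trip sketched in Python, as core.py's side might do it; the `CoreProperties` dataclass and the `from_json` / `to_json` names are hypothetical. One difference to keep in mind: Json.NET ignores unknown keys by default, while this sketch raises `TypeError` on them.

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class CoreProperties:
    """Field names must match the keys in settings.json."""
    useWebcam: bool
    webcamIndex: int
    imgFilePath: str
    generalScale: int
    dashGapScale: int
    resolution: str
    windowName: str


def from_json(text):
    """Deserialize settings text (what LoadJson does after File.ReadAllText)."""
    return CoreProperties(**json.loads(text))


def to_json(props):
    """Serialize compactly, in field order (what Save writes with File.WriteAllText)."""
    return json.dumps(asdict(props), separators=(",", ":"))


sample = ('{"useWebcam":false,"webcamIndex":0,"imgFilePath":"./3.jpg","generalScale":3,'
          '"dashGapScale":10,"resolution":"1920x1080","windowName":"Output Window"}')
props = from_json(sample)
print(props.generalScale, to_json(props) == sample)  # 3 True
```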