We want to get Shanghai's weather data for the next 15 days and draw it as a line chart. The crawler uses XPath and re to handle data acquisition, and pylab to draw the line chart.

Tip: crawlers must not be used for illegal activities. Set a sleep time when crawling and do not over-crawl; causing server downtime can make you legally liable!

The goal is to get the daily high and low temperatures for Shanghai over 15 days and draw them as a line chart.

The page is rendered on the server, so the temperature data sits directly in the HTML of the page. You can locate and extract it with XPath or re.
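The script in this post uses XPath only, so here is a minimal sketch of the re route for comparison. The `SAMPLE_HTML` snippet is made up for illustration: it only mirrors the `h1`, `p[2]/span` and `p[2]/i` structure that the XPath expressions in this post rely on, and the real page may differ. `ROW` and `extract_rows` are hypothetical names.

```python
import re

# Made-up sample that mirrors the li structure targeted by the XPath
# expressions in this post (h1 for the date, second p for the temperatures).
SAMPLE_HTML = """
<ul>
  <li><h1>19日（今天）</h1><p>晴</p><p><span>25</span>/<i>18℃</i></p></li>
  <li><h1>20日（明天）</h1><p>多云</p><p><span>9</span>/<i>-2℃</i></p></li>
</ul>
"""

# One match per day: day of month, high temperature, low temperature.
ROW = re.compile(
    r"<h1>(\d+)日.*?</h1>"      # leading digits of the date
    r".*?<span>(-?\d+)</span>"  # high temperature
    r".*?<i>(-?\d+)℃</i>",      # low temperature, unit excluded
    re.S,                       # let .*? run across line breaks
)


def extract_rows(html):
    """Return a list of (day, high, low) tuples found in html."""
    return [(day, int(high), int(low)) for day, high, low in ROW.findall(html)]


rows = extract_rows(SAMPLE_HTML)
print(rows)
```

Regular expressions are brittle against markup changes, so XPath is usually the easier choice when the page structure is this regular.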

However, the data for the first 7 days and the data for days 8-15 are on two different pages, so the data has to be crawled in two passes.
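The two page addresses differ only in the path segment (`weather` versus `weather15d`). A minimal sketch that builds both from the city code is shown below; `forecast_urls` is a hypothetical helper, and the idea that other city codes follow the same pattern is an assumption, since this post only uses the Shanghai code.

```python
BASE = "http://www.weather.com.cn"
SHANGHAI_CODE = "101020100"  # taken from the URLs used in this post


def forecast_urls(city_code):
    """Return the two forecast page URLs for a city code.

    Assumption: every city uses the same URL pattern as Shanghai.
    """
    return [
        f"{BASE}/weather/{city_code}.shtml",     # first 7 days
        f"{BASE}/weather15d/{city_code}.shtml",  # days 8-15
    ]


for url in forecast_urls(SHANGHAI_CODE):
    print(url)
```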

import requests
from lxml import etree
from pylab import *  # provides plt and mpl, used for plotting below
import time  # needed for time.sleep

# URL of the 7-day forecast page
base_url = "http://www.weather.com.cn/weather/101020100.shtml"

# Fetch the page with requests
resp = requests.get(url=base_url)

# Parse the HTML so it can be queried with XPath
html = etree.HTML(resp.text)

# Select the weather li elements; each li holds one day's data (date, weather, temperature, city and so on)
lis = html.xpath('//*[@id="7d"]/ul/li')

# Create lists for the dates, low temperatures and high temperatures; the crawled data is appended to them and later passed to plot to draw the line chart
days = []
lows = []
highs = []

# Loop over the 7-day li elements to get the dates and the high and low temperatures
for li in lis:
    print("Crawling the 7-day forecast ...")
    # High temperature
    high = li.xpath("./p[2]/span/text()")[0]
    # Low temperature; keep only the first two characters
    low = li.xpath("./p[2]/i/text()")[0][0:2]
    # Date; keep only the first two characters
    day = li.xpath("./h1/text()")[0][0:2]
    # Append the date and the temperatures to the lists
    days.append(day)
    lows.append(int(low))
    highs.append(int(high))

# Sleep for 1 second before the next request
time.sleep(1)

# URL of the 8-15 day forecast page
base_url = "http://www.weather.com.cn/weather15d/101020100.shtml"

# Fetch the 8-15 day page with requests
resp = requests.get(url=base_url)

# Set the encoding
resp.encoding = 'utf-8'

# Parse the HTML so it can be queried with XPath
html = etree.HTML(resp.text)

# Select the li elements that hold the weather for days 8-15
lis = html.xpath('//*[@id="15d"]/ul/li')

# Loop over the 8-15 day li elements to get the dates and the high and low temperatures
for li in lis:
    print("Crawling the 8-15 day forecast ...")
    # High temperature; keep only the first two characters
    high = li.xpath("./span[@class='tem']/em/text()")[0][:2]
    # Low temperature; skip the first character and keep the next two
    low = li.xpath("./span[@class='tem']/text()")[0][1:3]
    # Date; keep the fourth and fifth characters
    day = li.xpath("./span[@class='time']/text()")[0][3:5]
    # Append the date and the temperatures to the lists
    days.append(day)
    lows.append(int(low))
    highs.append(int(high))

# Sleep for 1 second
time.sleep(1)

# The dates and the high and low temperatures for all 15 days have now been crawled

# Print the 15 days of data

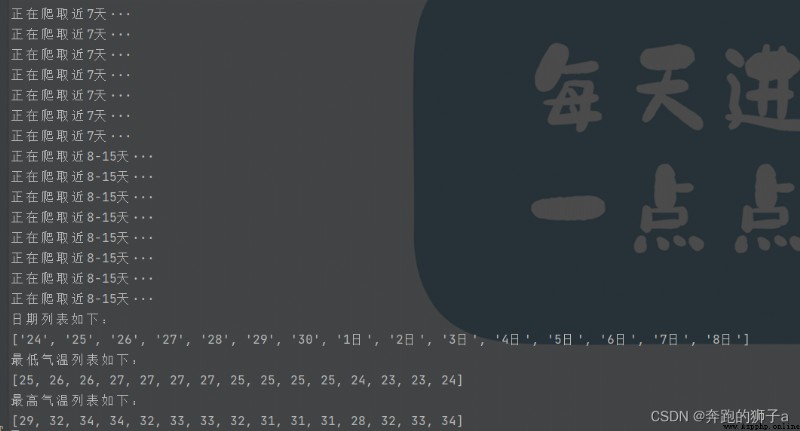

print("Dates:")
print(days)
print("Low temperatures:")
print(lows)
print("High temperatures:")
print(highs)

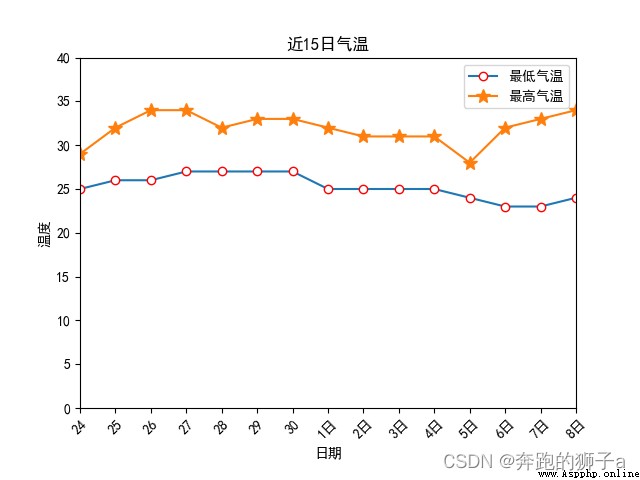

# The code below draws a line chart of the high and low temperatures

# Set the font (SimHei is a Chinese font, needed if the labels contain Chinese text)
mpl.rcParams['font.sans-serif'] = ['SimHei']

# x positions, one per day
x = range(len(days))

# Limit the range of the y axis
plt.ylim(0, 40)

# Plot the low and high temperatures, setting the marker style and the legend label for each
plt.plot(x, lows, marker='o', mec='r', mfc='w', label='Low temperature')
plt.plot(x, highs, marker='*', ms=10, label='High temperature')

# Show the legend
plt.legend()
plt.xticks(x, days, rotation=45)
plt.margins(0)
plt.subplots_adjust(bottom=0.15)

# X axis label
plt.xlabel("Date")

# Y axis label
plt.ylabel("Temperature")

# Title
plt.title("Temperature over the next 15 days")

# Display the chart
plt.show()

The output of the program is as follows

The output line chart is as follows
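One caveat about the script: the slices such as `[0:2]` and `[1:3]` assume every temperature is exactly two characters long, so a single-digit value like `9℃` would make `int()` fail, and indexing with `[0]` fails if an XPath query returns an empty list. A minimal sketch of a more tolerant approach follows; `parse_temp` and `first_or_none` are hypothetical helpers, not part of the original script.

```python
import re

_TEMP = re.compile(r"-?\d+")  # optional minus sign followed by digits


def parse_temp(text):
    """Return the first integer found in text, or None if there is none."""
    match = _TEMP.search(text or "")
    return int(match.group()) if match else None


def first_or_none(nodes):
    """Return the first item of an XPath result list, or None if it is empty."""
    return nodes[0] if nodes else None


for raw in ["18℃", "9℃", "-3℃", "/18℃", ""]:
    print(repr(raw), parse_temp(raw))
```

In the loops, something like `parse_temp(first_or_none(li.xpath("./p[2]/i/text()")))` would then return `None` instead of raising, and the caller can decide whether to skip that day.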

The basic steps of a crawler:

1. Check whether the site has anti-crawling measures and set up the usual countermeasures; checks on User-Agent and Referer are the most common anti-crawling methods.

2. Use XPath or re to locate the data, then extract it.

3. Use file operations to write the results to a text file.

4. Remember to set a sleep time with time.
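Steps 1, 3 and 4 do not appear in the script in this post, so here is a minimal sketch of them using only the standard library. The header values, the CSV layout and the helper names (`build_request`, `save_rows`, `polite_pause`) are assumptions for illustration; the request object is only built here, not sent.

```python
import csv
import time
import urllib.request

# Assumed header values; use whatever your browser actually sends.
HEADERS = {
    "User-Agent": "Mozilla/5.0 (compatible; weather-demo/0.1)",
    "Referer": "http://www.weather.com.cn/",
}


def build_request(url):
    """Step 1: attach User-Agent and Referer headers to a request."""
    return urllib.request.Request(url, headers=HEADERS)


def save_rows(path, days, lows, highs):
    """Step 3: write one line per day to a CSV text file."""
    with open(path, "w", newline="", encoding="utf-8") as f:
        writer = csv.writer(f)
        writer.writerow(["day", "low", "high"])
        writer.writerows(zip(days, lows, highs))


def polite_pause(seconds=1.0):
    """Step 4: wait between requests so the server is not overloaded."""
    time.sleep(seconds)
```

With requests, the same headers can be passed as `requests.get(url, headers=HEADERS)`.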