Contents

Masked-Face Detection and Mask-Wearing Recognition (with Python and Android Source Code)

1. Mask-wearing recognition methods

(1) Mask-wearing recognition based on multi-class object detection

(2) Mask-wearing recognition based on face detection + classification

2. Masked-face dataset

3. Masked-face detection

4. Training the mask-wearing recognition model

(1) Prepare the data

(2) Train the mask-wearing classification model (PyTorch)

(3) Visualize the training process

(4) Mask-wearing recognition results

5. Android deployment of the mask-wearing recognition model

(1) Convert the PyTorch model to an ONNX model

(2) Convert the ONNX model to a TNN model

(3) Deploy mask-wearing recognition on Android

6. Project source code download

With the epidemic recurring, wearing a mask remains one of the most effective ways to prevent and control the novel coronavirus, so detecting and recognizing mask wearing has real practical value. Epidemic prevention is everyone's responsibility, so as a programmer I'd like to share the masked-face detection and mask-wearing recognition method I developed. The face-mask recognition model in this project is quite accurate: it reaches up to 99% with ResNet50, and even the lightweight MobileNet-v2 reaches about 98.18%.

This project walks you through training a mask-wearing classification model, converting it to ONNX and TNN models, and deploying it on Android as a mask-wearing recognition demo app. The data and source code are ready, so let's get started.

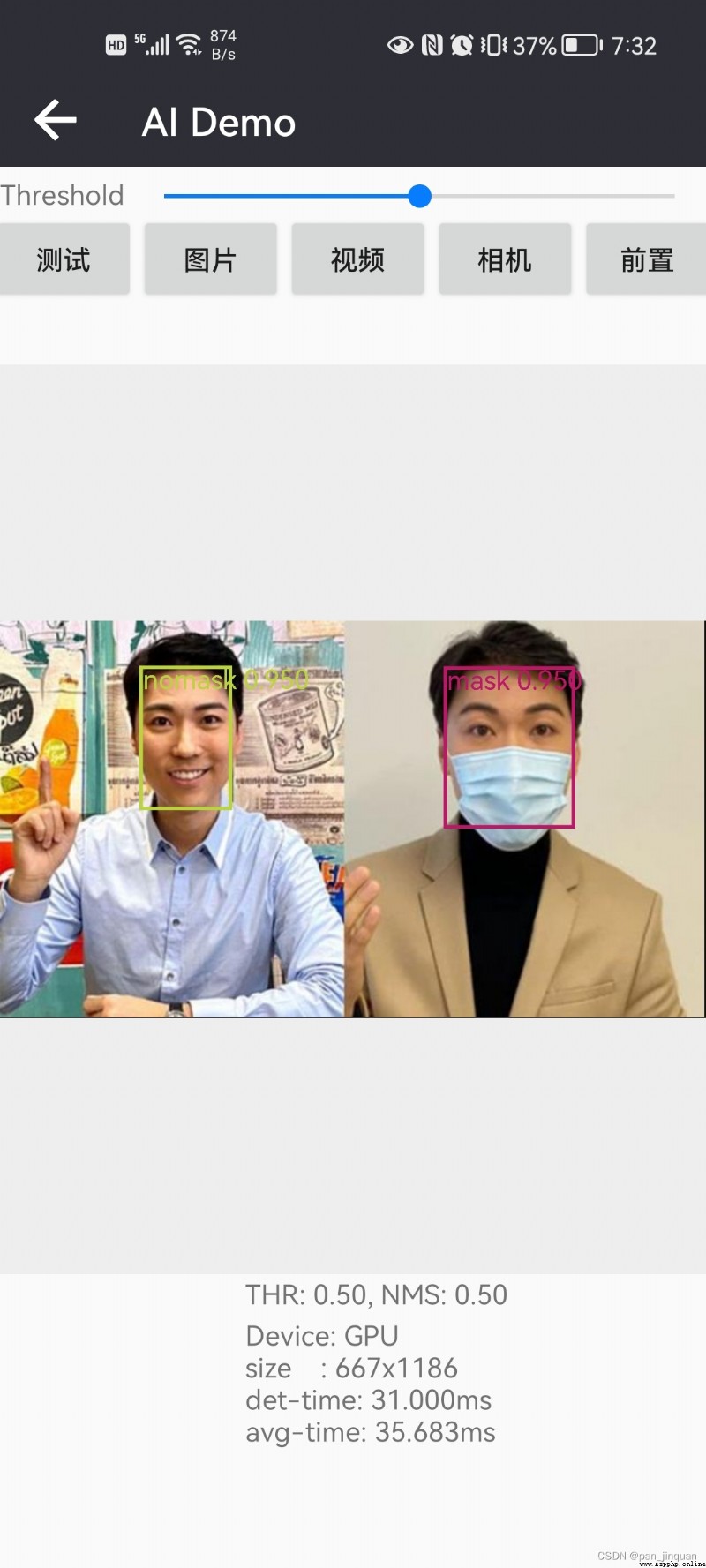

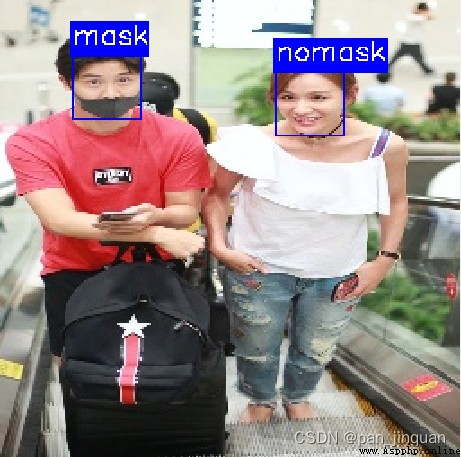

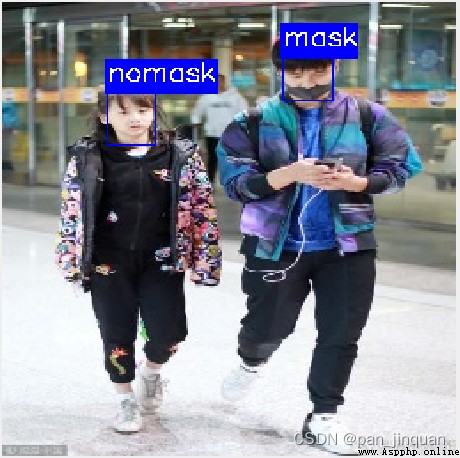

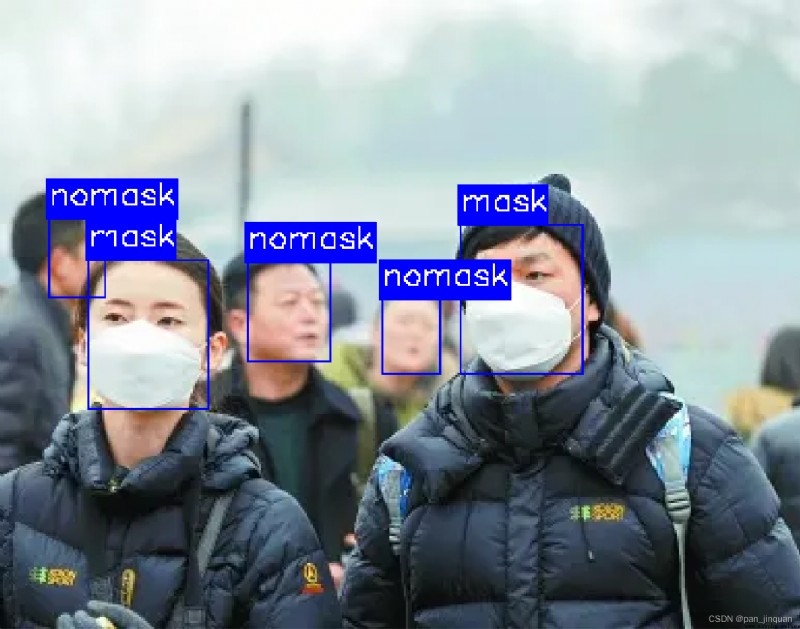

Demo results (image test and video test):

- Android demo app for masked-face detection and mask-wearing recognition, free to try: https://download.csdn.net/download/guyuealian/85771596

- Full project for masked-face detection and mask-wearing recognition (data, training code, and Android source code): Masked-Face Detection and Mask-Wearing Recognition (with Python and Android Source Code)

The project provides the following:

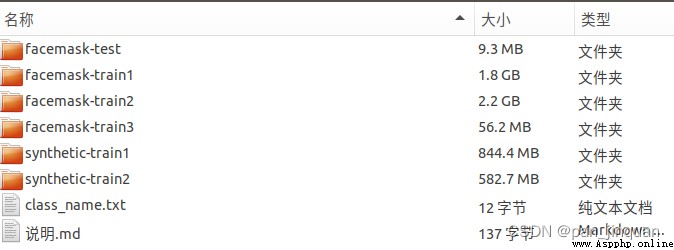

- Five training datasets (facemask-train1, facemask-train2, facemask-train3, synthetic-train1, synthetic-train2) plus the facemask-test set, about 50,000+ images in total

- Code for generating masked-face images: python create_facemask.py

- Face detection that works on masked faces

- Mask-wearing recognition with two classes: mask (wearing a mask) and nomask (not wearing a mask)

- Python demo source code for mask-wearing recognition, which detects and recognizes in real time on an ordinary computer's CPU/GPU

- Android demo source code for mask-wearing recognition, which detects and recognizes in real time on an ordinary phone's CPU/GPU, in about 30 ms

The multi-class object detection approach does everything in one step: not wearing a mask (nomask) and wearing a mask (mask) are trained as two object detection classes.

- Advantages: direct end-to-end training, a simple task, and fast execution

- Disadvantages: every face box must be manually labeled as mask or nomask, which takes a lot of time; with insufficient training data, false detections are likely

The face detection + classification approach first uses a general face detection model to detect faces, then crops each face region and trains a mask-wearing classifier to classify the face as wearing a mask or not.

- Advantages: no face box annotation is needed, and you can synthesize masked-face data yourself, so labor cost is low; accuracy is high, and the classification model can be lightweight

- Disadvantages: two models must be deployed (the face detection model and the mask classification model), and the more faces there are, the slower it runs

Considering the cost of data annotation, this project adopts the second approach: face detection + mask-wearing classification.
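To make the second approach concrete, here is a minimal Python sketch of the detect, crop, classify flow. It is an illustration under assumptions, not the project's code: `recognize_masks`, `crop`, the `(x1, y1, x2, y2)` box format, and the two callables `detect_faces` and `classify_face` are hypothetical stand-ins for a real face detector and mask classifier.

```python
from typing import Callable, List, Sequence, Tuple

Box = Tuple[int, int, int, int]   # assumed box format: (x1, y1, x2, y2) in pixels
Image = List[List[int]]           # toy image: a list of pixel rows


def crop(image: Image, box: Box) -> Image:
    """Cut the face region out of the image, clamped to the image bounds."""
    x1, y1, x2, y2 = box
    height, width = len(image), len(image[0])
    x1, y1 = max(0, x1), max(0, y1)
    x2, y2 = min(width, x2), min(height, y2)
    return [row[x1:x2] for row in image[y1:y2]]


def recognize_masks(
    image: Image,
    detect_faces: Callable[[Image], Sequence[Box]],   # hypothetical face detector
    classify_face: Callable[[Image], str],            # hypothetical mask classifier
) -> List[Tuple[Box, str]]:
    """Stage 1: detect faces. Stage 2: classify each cropped face."""
    results = []
    for box in detect_faces(image):
        face = crop(image, box)
        if not face or not face[0]:
            continue  # skip boxes that fall entirely outside the image
        results.append((box, classify_face(face)))
    return results
```

Because the classifier runs once per detected face, the cost grows with the number of faces, which is the drawback of this approach noted above.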

Most face data available online shows faces without masks, so it cannot be used directly for mask-wearing recognition. One way around this is to synthesize masked-face data yourself. Below are the masked-face datasets and synthetic datasets I collected and organized, about 50,000+ images in total:

Original image and generated masked face

For details on the masked-face data and how it is generated, see my blog post "Masked-Face Dataset and Masked-Face Generation Method".

Face detection usually means detecting faces with no occlusion or only slight occlusion. When a face is covered by a mask, detection quality inevitably drops, yet labeling a large masked-face dataset is time-consuming and laborious. My approach is:

First train the face detector on the WiderFace dataset, then fine-tune it on the facemask-train1 dataset. After this training, detection of masked faces improves considerably.

Of course, even open-source face detectors such as FaceBox or MTCNN do reasonably well on masked faces. However, the detected face box is often incomplete or partly missing, which affects the subsequent mask-wearing recognition.

For the face detection method, see my other blog post:

Fast and Good: Pedestrian Detection, Face Detection and Face Keypoint Detection (C++/Android Source Code): https://panjinquan.blog.csdn.net/article/details/125348189

This project shows how to train a mask-wearing classification model, convert it to ONNX and TNN models, and deploy it on Android as a mask-wearing recognition demo app.

The basic structure of the project is as follows:

    .
    ├── classifier                 # Model training utilities
    ├── configs                    # Training configuration files
    ├── data                       # Training data
    ├── libs
    │   ├── convert                # Tools for converting the model to ONNX
    │   ├── facemask               # Masked-face data generation tool
    │   ├── light_detector         # Face detection
    │   ├── create_facemask.py     # Masked-face data generation demo
    │   ├── detector.py            # Face detection demo
    │   └── README.md
    ├── demo.py                    # Mask-wearing recognition demo
    ├── README.md                  # Project documentation
    ├── requirements.txt           # Project dependencies
    └── train.py                   # Training script

There are five training datasets (facemask-train1, facemask-train2, facemask-train3, synthetic-train1, synthetic-train2) plus the facemask-test set, about 50,000+ images in total.

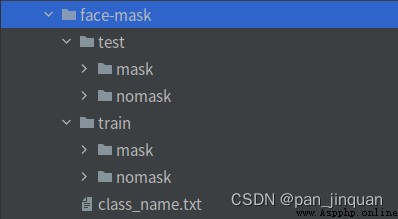

You can also use your own dataset. The data is organized as follows: the mask directory stores images of faces wearing masks, and the nomask directory stores images of faces without masks.
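As an illustration of this layout, the sketch below walks a dataset root and builds (image path, label) pairs from the mask and nomask subdirectories. It is a hypothetical helper, not part of the project: the function name `list_samples`, the accepted file extensions, and the alphabetical label order are assumptions (the project itself reads class labels from class_name.txt).

```python
import os
from typing import Dict, List, Tuple

# Assumed set of image extensions; adjust to match your own data.
IMAGE_EXTENSIONS = (".jpg", ".jpeg", ".png", ".bmp")


def list_samples(data_root: str) -> Tuple[List[Tuple[str, int]], Dict[str, int]]:
    """Collect (image_path, label) pairs from one subdirectory per class."""
    class_names = sorted(
        name for name in os.listdir(data_root)
        if os.path.isdir(os.path.join(data_root, name))
    )
    # Labels follow alphabetical directory order, e.g. mask -> 0, nomask -> 1.
    class_to_index = {name: index for index, name in enumerate(class_names)}
    samples = []
    for name in class_names:
        class_dir = os.path.join(data_root, name)
        for file_name in sorted(os.listdir(class_dir)):
            if file_name.lower().endswith(IMAGE_EXTENSIONS):
                samples.append((os.path.join(class_dir, file_name), class_to_index[name]))
    return samples, class_to_index
```

Counting the samples per label from the returned list is a quick way to check whether the two classes are roughly balanced before training.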

I implemented the training and testing of the mask / no-mask classifier on top of my "PyTorch base training library Pytorch-Base-Trainer (supports model pruning and distributed training)". The training code is simple to use: put images of the same class in the same directory, fill in the corresponding data paths, and start training.

The training framework is PyTorch, and the training code mainly supports:

- Backbones currently supported: googlenet, resnet[18,34,50], mobilenet_v2, and others; other backbones can be added as custom models

- Training parameters are set in the configuration file (configs/config.yaml)

The training parameters are described as follows :

# Set up the training dataset , Support multiple training datasets

train_data:

- 'dataset/face_mask/facemask-train1/crops'

- 'dataset/face_mask/facemask-train2/crops'

- 'dataset/face_mask/facemask-train3/crops'

- 'dataset/face_mask/synthetic-train1/crops'

- 'dataset/face_mask/synthetic-train1/crops'

# Set up test data set

test_data: 'dataset/face_mask/facemask-test/crops'

class_name: 'dataset/face_mask/class_name.txt' # Category label

train_transform: "train" # Data enhancement methods used in training

test_transform: "val" # Test the data enhancement methods used

work_dir: "work_space/" # The directory where the output model is saved

net_type: "mobilenet_v2" # Backbone network , Support :resnet18,mobilenet_v2,googlenet

resample: True # Sample equalization

width_mult: 1.0

input_size: [ 128,128 ]

rgb_mean: [ 0.5, 0.5, 0.5 ] # for normalize inputs to [-1, 1],Sequence of means for each channel.

rgb_std: [ 0.5, 0.5, 0.5 ] # for normalize,Sequence of standard deviations for each channel.

batch_size: 64

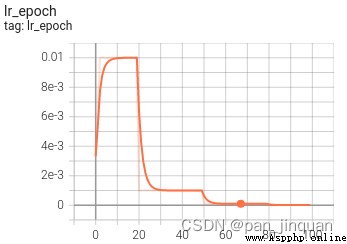

lr: 0.01 # Initial learning rate

optim_type: "SGD" # Choose the optimizer ,SGD,Adam

loss_type: "LabelSmoothing" # Choose the loss function : Support CrossEntropyLoss,LabelSmoothing

momentum: 0.9 # SGD momentum

num_epochs: 100 # Number of training cycles

num_warn_up: 3 # warn-up frequency

num_workers: 8 # Number of loading data worker processes

weight_decay: 0.0005 # weight_decay, Default 5e-4

scheduler: "multi-step" # Learning rate adjustment strategies

milestones: [ 20,50,80 ] # How to reduce the learning rate

gpu_id: [ 0 ] # GPU ID

log_freq: 50 # LOG Print frequency

progress: True # Whether to display the progress bar

pretrained: False # Whether to use pretrained Model

finetune: False # Whether to carry out finetuneStart training :

python train.py -c configs/config.yaml

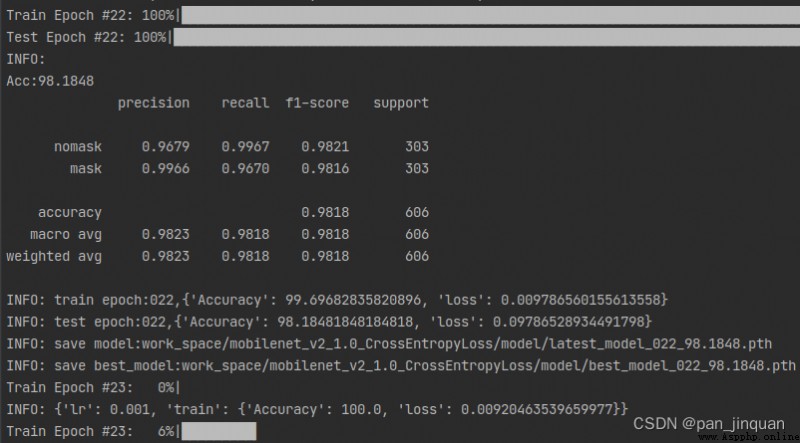

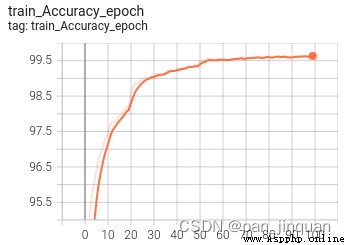

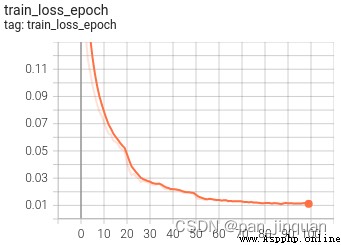

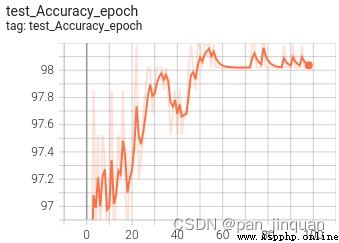

After training, accuracy is above 99% on the training set and about 98% on the test set.
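The `multi-step` scheduler with `milestones: [ 20,50,80 ]` in the configuration lowers the learning rate each time training reaches a milestone epoch. A minimal sketch of that schedule follows; the decay factor `gamma=0.1` is an assumption (the configuration does not state it), warm-up is ignored, and `multi_step_lr` is a hypothetical name rather than a function from the training library.

```python
from bisect import bisect_right
from typing import Sequence


def multi_step_lr(
    epoch: int,
    base_lr: float = 0.01,                      # "lr" in the configuration
    milestones: Sequence[int] = (20, 50, 80),   # "milestones" in the configuration
    gamma: float = 0.1,                         # assumed decay factor
) -> float:
    """Learning rate at a given epoch: multiply by gamma once per milestone passed."""
    passed = bisect_right(sorted(milestones), epoch)
    return base_lr * gamma ** passed


if __name__ == "__main__":
    for epoch in (0, 19, 20, 50, 80, 99):
        print(epoch, multi_step_lr(epoch))
```

Under these assumptions the rate stays at 0.01 for epochs 0 to 19 and is divided by 10 at epochs 20, 50, and 80.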

Training is visualized with TensorBoard. Usage:

    # Basic usage
    tensorboard --logdir=path/to/log/
    # For example
    tensorboard --logdir=work_space/mobilenet_v2_1.0_CrossEntropyLoss/log

Visualization

After training the PyTorch model, you can convert it to an ONNX model to make later deployment easier:

    python libs/convert/convert_torch_to_onnx.py

The conversion script is as follows:

    """
    This code is used to convert the pytorch model into an onnx format model.
    """
    import sys
    import os

    sys.path.insert(0, os.getcwd())
    import torch.onnx
    import onnx
    from classifier.models.build_models import get_models
    from basetrainer.utils import torch_tools


    def build_net(model_file, net_type, input_size, num_classes, width_mult=1.0):
        """
        :param model_file: Model file
        :param net_type: Model name
        :param input_size: Model input size
        :param num_classes: Number of classes
        :param width_mult:
        :return:
        """
        model = get_models(net_type, input_size, num_classes, width_mult=width_mult, is_train=False, pretrained=False)
        state_dict = torch_tools.load_state_dict(model_file)
        model.load_state_dict(state_dict)
        return model


    def convert2onnx(model_file, net_type, input_size, num_classes, width_mult=1.0, device="cpu", onnx_type="default"):
        model = build_net(model_file, net_type, input_size, num_classes, width_mult=width_mult)
        model = model.to(device)
        model.eval()
        # Save the ONNX file next to the .pth file, with the same base name
        model_name = os.path.basename(model_file)[:-len(".pth")] + ".onnx"
        onnx_path = os.path.join(os.path.dirname(model_file), model_name)
        # dummy_input = torch.randn(1, 3, 240, 320).to("cuda")
        dummy_input = torch.randn(1, 3, input_size[1], input_size[0]).to(device)
        # torch.onnx.export(model, dummy_input, onnx_path, verbose=False,
        #                   input_names=['input'],output_names=['scores', 'boxes'])
        do_constant_folding = True
        if onnx_type == "default":
            torch.onnx.export(model, dummy_input, onnx_path, verbose=False, export_params=True,
                              do_constant_folding=do_constant_folding,
                              input_names=['input'],
                              output_names=['output'])
        elif onnx_type == "det":
            torch.onnx.export(model,
                              dummy_input,
                              onnx_path,
                              do_constant_folding=do_constant_folding,
                              export_params=True,
                              verbose=False,
                              input_names=['input'],
                              output_names=['scores', 'boxes', 'ldmks'])
        elif onnx_type == "kp":
            torch.onnx.export(model,
                              dummy_input,
                              onnx_path,
                              do_constant_folding=do_constant_folding,
                              export_params=True,
                              verbose=False,
                              input_names=['input'],
                              output_names=['output'])
        # Reload the exported file and validate it
        onnx_model = onnx.load(onnx_path)
        onnx.checker.check_model(onnx_model)
        print(onnx_path)


    if __name__ == "__main__":
        net_type = "mobilenet_v2"
        width_mult = 1.0
        input_size = [128, 128]
        num_classes = 2
        model_file = "work_space/mobilenet_v2_1.0_CrossEntropyLoss/model/best_model_022_98.1848.pth"
        convert2onnx(model_file, net_type, input_size, num_classes, width_mult=width_mult)
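After export, the classifier's `output` tensor should hold one score per class for each input face. The sketch below shows one way to turn such scores into a label and a confidence, assuming the scores are raw logits and the class order is `mask`, `nomask`. Both are assumptions (the real order comes from class_name.txt), and `softmax` and `decode_prediction` are hypothetical helper names.

```python
import math
from typing import List, Sequence, Tuple


def softmax(scores: Sequence[float]) -> List[float]:
    """Convert raw scores to probabilities (shifted by the max for stability)."""
    peak = max(scores)
    exps = [math.exp(score - peak) for score in scores]
    total = sum(exps)
    return [value / total for value in exps]


def decode_prediction(
    scores: Sequence[float],
    class_names: Sequence[str] = ("mask", "nomask"),  # assumed class order
) -> Tuple[str, float]:
    """Return the most likely class name and its probability."""
    probabilities = softmax(scores)
    best = max(range(len(probabilities)), key=probabilities.__getitem__)
    return class_names[best], probabilities[best]


if __name__ == "__main__":
    # Example scores for one face; the values are made up for illustration.
    print(decode_prediction([2.0, 0.0]))
```

If the exported model already applies softmax internally, the extra softmax here would not change which class wins, only the reported confidence.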

CNN models can currently be deployed in many ways, for example with TNN, MNN, NCNN, or TensorRT. I use TNN for the Android deployment.

To convert the ONNX model to a TNN model, see the official TNN documentation:

TNN/onnx2tnn.md at master · Tencent/TNN · GitHub

The project implements an Android demo for mask-wearing recognition. It uses TNN as the deployment framework and supports multithreaded CPU and GPU-accelerated inference, so it runs in real time on ordinary phones. In the Android source code, the core algorithms are implemented in C++ and called from the upper layer through a JNI interface.

To deploy your own trained classification model in the Android demo, convert the trained PyTorch model to ONNX, convert that to a TNN model, and then replace the demo's TNN model with yours.

    package com.cv.tnn.model;

    import android.graphics.Bitmap;

    public class Detector {

        static {
            System.loadLibrary("tnn_wrapper");
        }

        /***
         * Initialize the face detection and mask-wearing recognition models
         * @param face_model: Face detection model (without file suffix)
         * @param class_model: Mask-wearing recognition model (without file suffix)
         * @param root: Root directory of the model files, placed under the assets folder
         * @param model_type: Model type
         * @param num_thread: Number of threads to use
         * @param useGPU: Whether to use the GPU
         */
        public static native void init(String face_model, String class_model, String root, int model_type, int num_thread, boolean useGPU);

        /***
         * Detect faces and recognize mask wearing
         * @param bitmap: Image (bitmap) in ARGB_8888 format
         * @param score_thresh: Confidence threshold
         * @param iou_thresh: IOU threshold
         * @return
         */
        public static native FrameInfo[] detect(Bitmap bitmap, float score_thresh, float iou_thresh);
    }
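The `score_thresh` and `iou_thresh` arguments of `detect` are typical of detectors that filter boxes by confidence and then apply non-maximum suppression (NMS). Whether the demo's C++ code does exactly this is an assumption; the sketch below, written in Python for consistency with the training code, only illustrates what the two thresholds usually mean. The names `iou` and `nms` and the default values are hypothetical.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # assumed box format: (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(
    boxes: Sequence[Box],
    scores: Sequence[float],
    score_thresh: float = 0.5,   # assumed default, for illustration only
    iou_thresh: float = 0.3,     # assumed default, for illustration only
) -> List[int]:
    """Greedy NMS: return indices of the boxes that are kept."""
    # Drop low-confidence boxes, then visit the rest from highest score down.
    order = sorted(
        (i for i, score in enumerate(scores) if score >= score_thresh),
        key=lambda i: scores[i],
        reverse=True,
    )
    keep: List[int] = []
    for i in order:
        # Keep a box only if it does not overlap too much with any kept box.
        if all(iou(boxes[i], boxes[j]) <= iou_thresh for j in keep):
            keep.append(i)
    return keep
```

A higher `score_thresh` removes more uncertain detections, while a lower `iou_thresh` suppresses overlapping boxes more aggressively.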

The full project source code contains:

- Five training datasets (facemask-train1, facemask-train2, facemask-train3, synthetic-train1, synthetic-train2) plus the facemask-test set, about 50,000+ images in total

- Code for generating masked-face images: python create_facemask.py

- PyTorch training and test code for the mask-wearing classification model; the test demo detects and recognizes in real time on an ordinary computer's CPU/GPU

- Android demo source code for mask-wearing recognition, supporting CPU and GPU; it detects and recognizes in real time on ordinary phones, in about 30 ms