Preface

Scene one : Data does not need to be written frequently mysql

Scene two : Data is incremental , Need to automate and write frequently mysql

summary

PrefacePython Read data is automatically written MySQL database , This requirement is very common in the work , Mainly involves python Operating the database , Read / write updates, etc , The database may be mongodb、 es, Their treatment ideas are similar , You only need to change the syntax of operating the database . This article will give you a systematic share of how to write tens of millions of data into mysql, It is divided into two scenarios , Two ways .

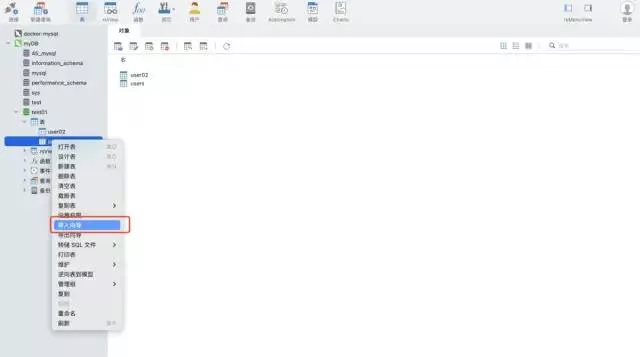

Scene one : Data does not need to be written frequently mysqlUse navicat The Import Wizard function of the tool . Support multiple file formats , You can automatically create a table according to the file fields , You can also insert data into an existing table , It's very fast and convenient .

Test data :csv Format , about 1200 Line ten thousand

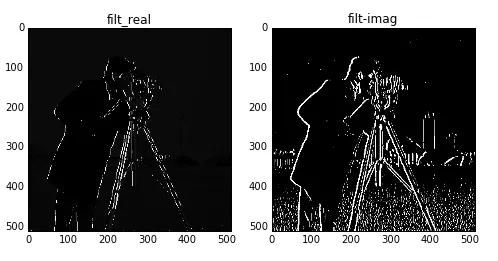

import pandas as pddata = pd.read_csv('./tianchi_mobile_recommend_train_user.csv')data.shapePrint the results :

Mode one :python pymysql library

install pymysql command :

pip install pymysqlCode implementation :

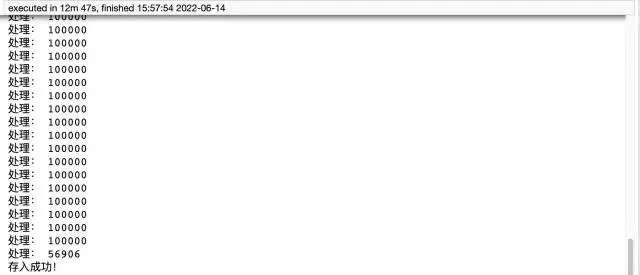

import pymysql# Database connection information conn = pymysql.connect( host='127.0.0.1', user='root', passwd='wangyuqing', db='test01', port = 3306, charset="utf8")# Block processing big_size = 100000# Block traversal writes to mysqlwith pd.read_csv('./tianchi_mobile_recommend_train_user.csv',chunksize=big_size) as reader: for df in reader: datas = [] print(' Handle :',len(df))# print(df) for i ,j in df.iterrows(): data = (j['user_id'],j['item_id'],j['behavior_type'], j['item_category'],j['time']) datas.append(data) _values = ",".join(['%s', ] * 5) sql = """insert into users(user_id,item_id,behavior_type ,item_category,time) values(%s)""" % _values cursor = conn.cursor() cursor.executemany(sql,datas) conn.commit() # Close the service conn.close()cursor.close()print(' Deposit successful !')

Mode two :pandas sqlalchemy:pandas Need to introduce sqlalchemy To support sql, stay sqlalchemy With the support of , It can realize the query of all common database types 、 Update and other operations .

Code implementation :

from sqlalchemy import create_engineengine = create_engine('mysql+pymysql://root:[email protected]:3306/test01')data = pd.read_csv('./tianchi_mobile_recommend_train_user.csv')data.to_sql('user02',engine,chunksize=100000,index=None)print(' Deposit successful !') summary pymysql The method takes 12 branch 47 second , It takes a long time , A lot of code , and pandas It takes only five lines of code to implement this requirement , It only took 4 About minutes . Finally add , Method 1 needs to create a table in advance , Mode 2 does not require . So I recommend you to use the second method , It is convenient and efficient . If you still feel that your speed is slow , Consider joining multiple processes 、 Multithreading .

The most complete three store data in MySQL Database method :

Direct deposit , utilize navicat Import Wizard function of

Python pymysql

Pandas sqlalchemy

This is about Python Read tens of millions of data and write it automatically MySQL That's all for the database article , More about Python Read the data content, please search the previous articles of the software development network or continue to browse the relevant articles below. I hope you can support the software development network in the future !