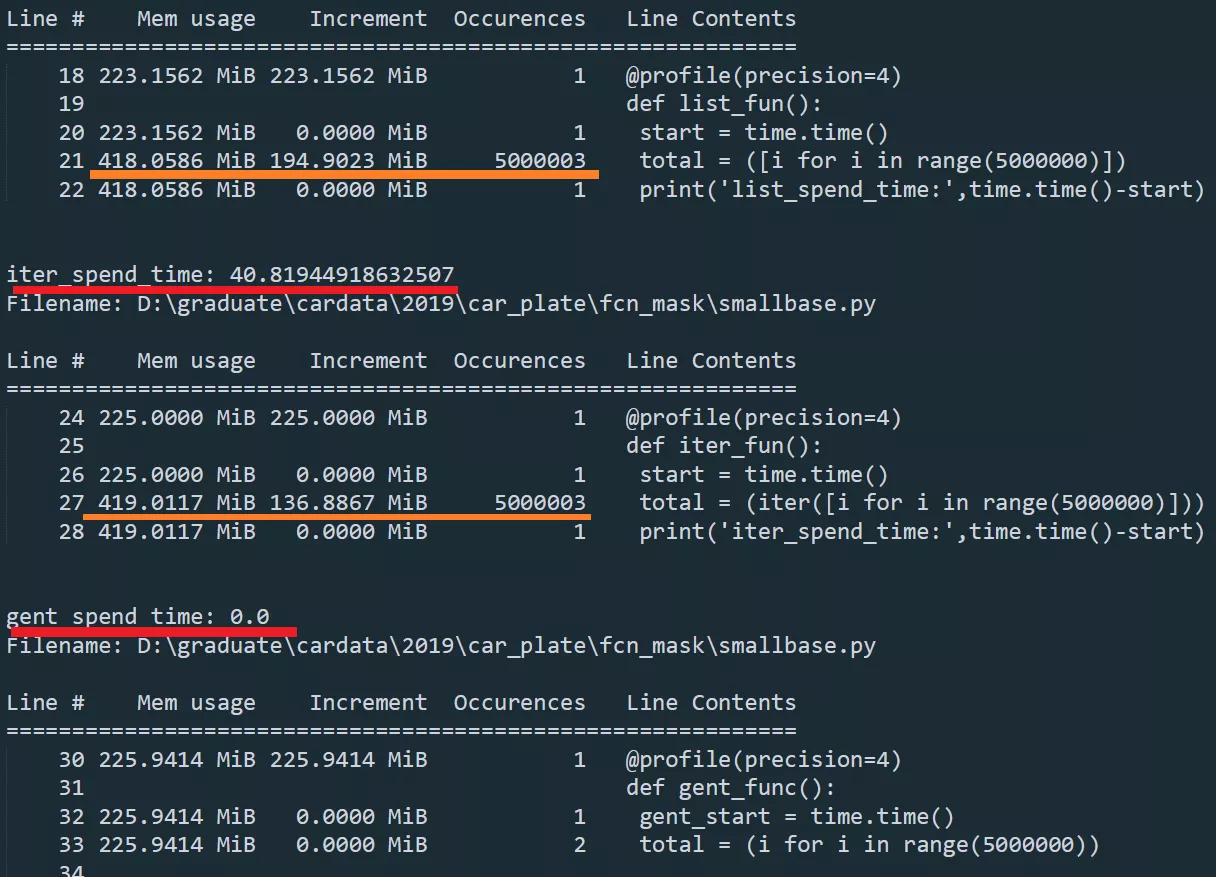

When no computation is performed: memory used by a generator versus a list

```python
import time
from memory_profiler import profile

@profile(precision=4)
def list_fun():
    start = time.time()
    # The list comprehension builds all 5,000,000 integers immediately.
    total = [i for i in range(5000000)]
    print('iter_spend_time:', time.time() - start)

@profile(precision=4)
def gent_func():
    gent_start = time.time()
    # The generator expression only creates a generator object; no values yet.
    total = (i for i in range(5000000))
    print('gent_spend_time:', time.time() - gent_start)

list_fun()
gent_func()
```

Meaning of the output columns: the first column is the line number of the profiled code. The second column (Mem usage) is the memory used by the Python interpreter after that line has executed. The third column (Increment) is the difference in memory between the current line and the previous one. The last column (Line Contents) prints the profiled source line.

Analysis: without any computation, the list already takes up memory, because all of its elements are created up front, whereas the generator takes up essentially no space, because it has not produced any values yet.
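The same effect can be checked without memory_profiler. Below is a minimal standard-library sketch, assuming N = 1,000,000 instead of 5,000,000 to keep it quick. Note that `sys.getsizeof` measures only the container object itself, so the list figure excludes the int objects it points to. The `tracked_range` helper is hypothetical and exists only to show that a generator does no work until a value is requested.

```python
import sys

N = 1_000_000  # the article uses 5,000,000; smaller here to keep it quick

# The list comprehension materializes all N integers immediately.
list_data = [i for i in range(N)]
# The generator expression creates only a small generator object.
gen_data = (i for i in range(N))

# sys.getsizeof reports the container object only: for the list that is its
# array of N pointers (the int objects are extra); for the generator it is
# a small size that does not grow with N.
list_bytes = sys.getsizeof(list_data)
gen_bytes = sys.getsizeof(gen_data)
print(f"list: {list_bytes:,} bytes, generator: {gen_bytes:,} bytes")

produced = []  # records every value the helper below has yielded

def tracked_range(n):
    """Hypothetical helper: like range(n), but logs each value it yields."""
    for i in range(n):
        produced.append(i)
        yield i

gen = tracked_range(N)
print(len(produced))  # prints 0: creating the generator runs none of its body
next(gen)
next(gen)
print(len(produced))  # prints 2: exactly one value computed per request
```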

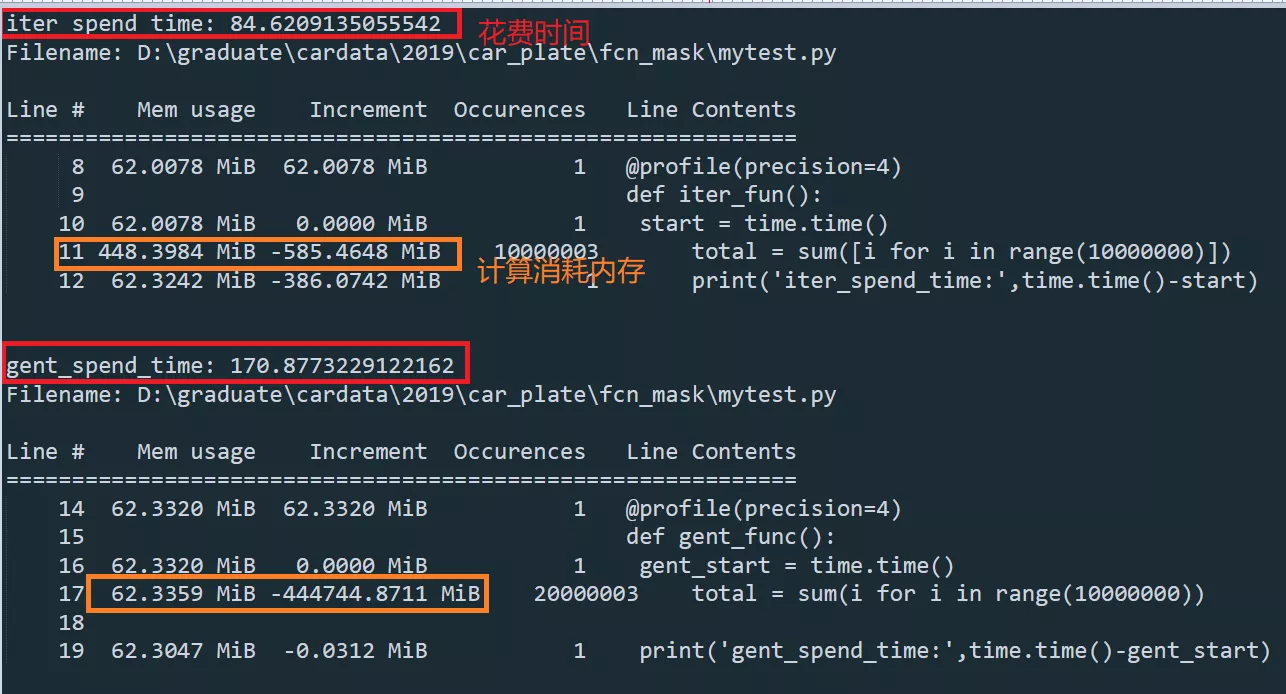

When computation is required: time and memory consumption of a list versus a generator

Using the built-in `sum` function to add up 10,000,000 values, the list consumes a large amount of memory, while the generator takes about twice as long as the list.

```python
import time
from memory_profiler import profile

@profile(precision=4)
def iter_fun():
    start = time.time()
    # sum() over a list: the full list is built first, then summed.
    total = sum([i for i in range(10000000)])
    print('iter_spend_time:', time.time() - start)

@profile(precision=4)
def gent_func():
    gent_start = time.time()
    # sum() over a generator: values are produced and consumed one at a time.
    total = sum(i for i in range(10000000))
    print('gent_spend_time:', time.time() - gent_start)

iter_fun()
gent_func()
```

Comparative analysis: when the data has to be iterated over, the generator approach takes longer, but its memory usage stays low, because a generator only holds the value computed in the current iteration. My own interpretation of the cause is that a generator computes each value on the fly and hands it straight to the consumer, whereas the list approach first stores all the data in memory. That costs memory, but reading values that already exist is faster than producing them one by one during iteration. Hence the generator takes more time but uses less memory.
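The memory side of this comparison can also be reproduced with the standard-library `tracemalloc` module instead of memory_profiler (a swapped-in tool, not the one used above). This sketch assumes N = 1,000,000 rather than 10,000,000 and reports the peak memory traced while each `sum` runs; the `peak_bytes` helper is hypothetical.

```python
import tracemalloc

N = 1_000_000  # smaller than the article's 10,000,000 to keep the run short

def peak_bytes(func):
    """Hypothetical helper: run func() under tracemalloc, return (result, peak bytes)."""
    tracemalloc.start()
    try:
        result = func()
        _, peak = tracemalloc.get_traced_memory()
    finally:
        tracemalloc.stop()
    return result, peak

# sum over a list: the whole list exists before sum() starts.
list_total, list_peak = peak_bytes(lambda: sum([i for i in range(N)]))
# sum over a generator: only the running total and the current item are alive.
gen_total, gen_peak = peak_bytes(lambda: sum(i for i in range(N)))

print(f"list peak:      {list_peak:,} bytes")
print(f"generator peak: {gen_peak:,} bytes")
```

Both calls return the same total; only the peak memory differs, which is the trade-off described in the analysis.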

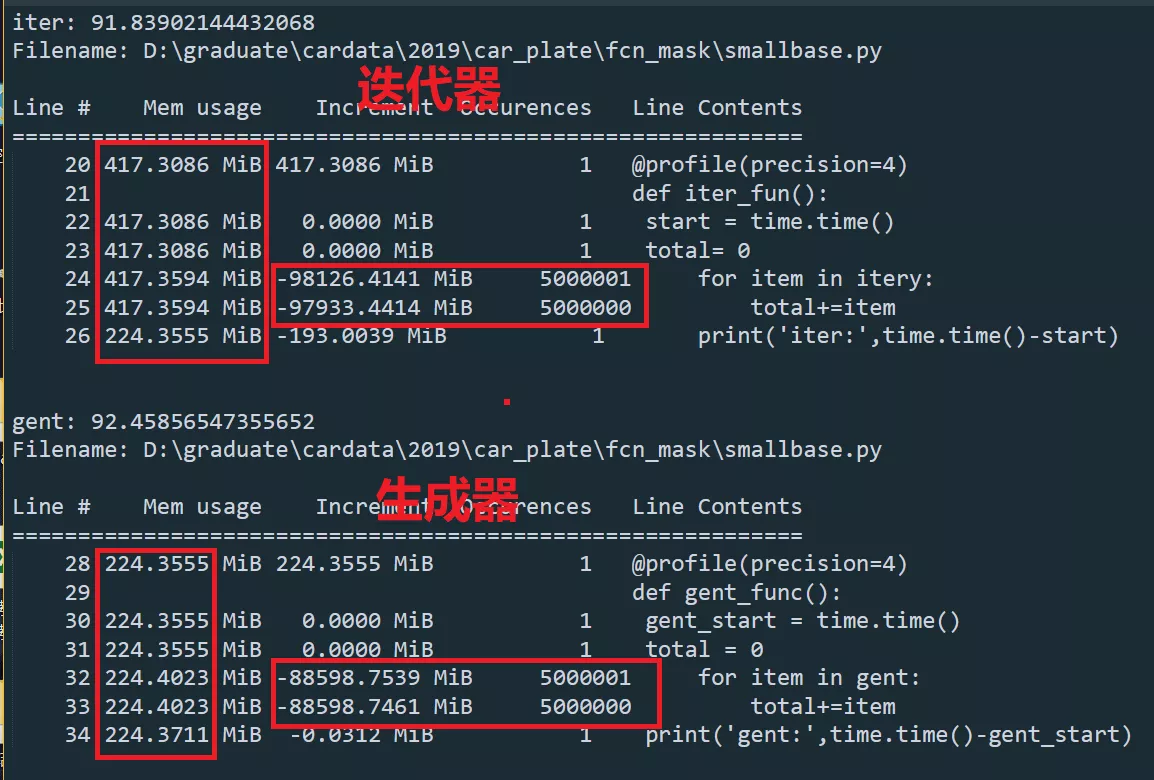

Summing 5,000,000 values in a `for` loop: iterator versus generator

Summary: the two take almost the same time, but the iterator takes up much more memory.

```python
import time
from memory_profiler import profile

# Created at module level: the list behind itery is fully built here,
# before either profiled function runs; gent holds no values yet.
itery = iter([i for i in range(5000000)])
gent = (i for i in range(5000000))

@profile(precision=4)
def iter_fun():
    start = time.time()
    total = 0
    for item in itery:
        total += item
    print('iter:', time.time() - start)

@profile(precision=4)
def gent_func():
    gent_start = time.time()
    total = 0
    for item in gent:
        total += item
    print('gent:', time.time() - gent_start)

iter_fun()
gent_func()
```
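Why the iterator uses so much memory: `iter()` over a list does not stream anything; the iterator keeps a reference to the fully built list. The sketch below, assuming N = 1,000,000 and using the standard-library `tracemalloc` module rather than memory_profiler, compares how much memory is still allocated right after each object is created. It also shows that both objects are single-use, which matters for the module-level `itery` and `gent` in the code above: calling `iter_fun()` or `gent_func()` a second time would loop over nothing.

```python
import tracemalloc

N = 1_000_000  # the article uses 5,000,000

tracemalloc.start()
# iter() over a list just wraps it: the iterator references the fully built
# list, so all N int objects stay allocated while the iterator exists.
itery = iter([i for i in range(N)])
iter_current, _ = tracemalloc.get_traced_memory()
tracemalloc.stop()

tracemalloc.start()
# The generator expression allocates only its own small state up front.
gent = (i for i in range(N))
gen_current, _ = tracemalloc.get_traced_memory()
tracemalloc.stop()

print(f"iterator over list: {iter_current:,} bytes allocated")
print(f"generator:          {gen_current:,} bytes allocated")

# Both objects are single-use: a second pass finds them exhausted.
print(sum(itery))  # prints 499999500000
print(sum(itery))  # prints 0
print(sum(gent))   # prints 499999500000
print(sum(gent))   # prints 0
```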

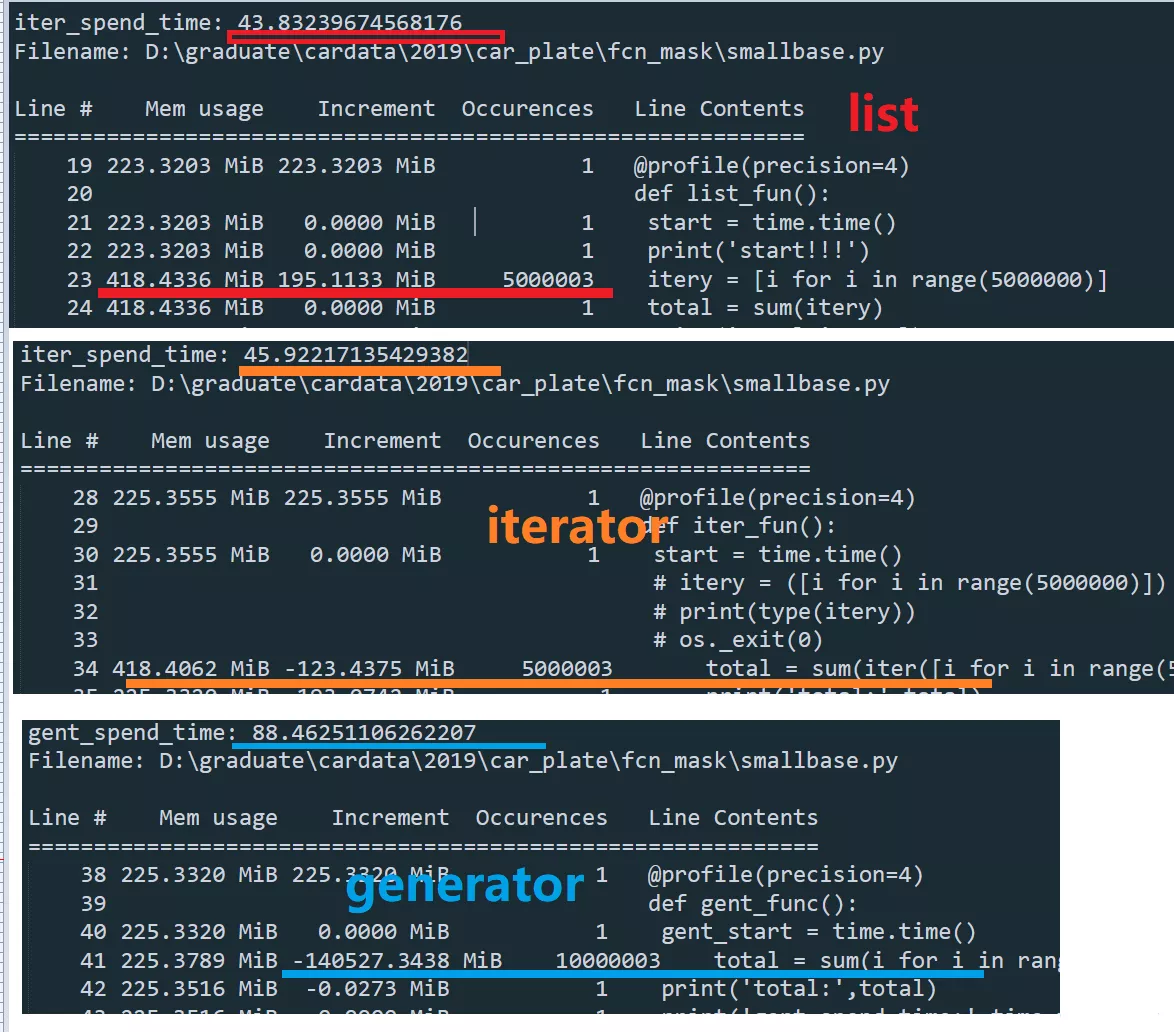

Time comparison: a list, an iterator, and a generator combined with `sum` over 5,000,000 values

Summary: list + sum and iterator + sum take about the same time, but generator + sum takes almost twice as long.

```python
import time
from memory_profiler import profile

@profile(precision=4)
def list_fun():
    start = time.time()
    print('start!!!')
    # Build the full list, then sum it.
    list_data = [i for i in range(5000000)]
    total = sum(list_data)
    print('iter_spend_time:', time.time() - start)

@profile(precision=4)
def iter_fun():
    start = time.time()
    # Same full list, but consumed through an explicit iterator.
    total = sum(iter([i for i in range(5000000)]))
    print('total:', total)
    print('iter_spend_time:', time.time() - start)

@profile(precision=4)
def gent_func():
    gent_start = time.time()
    # Values are generated lazily and summed one at a time.
    total = sum(i for i in range(5000000))
    print('total:', total)
    print('gent_spend_time:', time.time() - gent_start)

list_fun()
iter_fun()
gent_func()
```
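The timings above are taken with `time.time()` inside functions decorated with `@profile`, so they may include memory_profiler's own line-by-line tracing overhead. As a cross-check, here is a sketch that times the same three variants with the standard `timeit` module and no profiler attached, assuming N = 1,000,000 to keep the run short. The relative numbers depend on the Python version and the machine, so treat them as something to measure rather than a fixed ratio.

```python
import timeit

N = 1_000_000  # the article uses 5,000,000

def list_sum():
    # Build the whole list, then sum it.
    return sum([i for i in range(N)])

def iter_sum():
    # Same list, wrapped in an explicit iterator.
    return sum(iter([i for i in range(N)]))

def gen_sum():
    # Values produced lazily and consumed one at a time by sum().
    return sum(i for i in range(N))

timings = {}
for name, fn in [("list", list_sum), ("iter", iter_sum), ("gen", gen_sum)]:
    # Best of 3 runs of one call each; min() reduces noise from other processes.
    timings[name] = min(timeit.repeat(fn, number=1, repeat=3))
    print(f"{name}: {timings[name]:.3f} s")
```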
