Having covered multithreading and multiprocessing in Python concurrent programming, let's take a look at asynchronous IO programming with asyncio: coroutines.

01

A brief introduction to coroutines

Coroutines, also called tasklets or fibers, are neither processes nor threads. Their execution resembles a Python function call. In the asynchronous IO programming framework implemented by Python's asyncio module, a coroutine is the result of calling an asynchronous function defined with the async keyword.

A process contains multiple threads, much as a tissue in the human body contains many kinds of working cells. Likewise, a program can contain multiple coroutines. Threads are relatively independent of one another, and switching between them is controlled by the system. Coroutines are also relatively independent, but switching between them is controlled by the program itself.

02

A simple example

Let's use a simple example to understand coroutines. First look at the following code:

import time

def display(num):
    time.sleep(1)
    print(num)

for num in range(10):
    display(num)

This is easy to follow: the program outputs the numbers 0 to 9, one per second, so the whole run takes about 10 seconds. Note that because neither multithreading nor multiprocessing (concurrency) is used, the program has only one execution unit (a single thread), and time.sleep(1) stalls that entire thread for 1 second.

For the code above, the CPU sits idle during that time, doing nothing.

Let's take a look at what happens when we use a coroutine:

import asyncio

async def display(num):  # the async keyword before def makes this an asynchronous function
    await asyncio.sleep(1)
    print(num)

Asynchronous functions differ from ordinary functions: calling an ordinary function gives you its return value, while calling an asynchronous function gives you a coroutine object. The coroutine object has to be placed into an event loop so that it can cooperate with other coroutine objects, because the event loop is responsible for switching between subroutines.

Simply put, a blocked subroutine gives up the CPU to subroutines that are ready to run.
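To make the contrast with the time.sleep version concrete, here is a minimal sketch that places ten display coroutines into one event loop. The helper names run_all and timed_run and the delay parameter are introduced here for illustration and are not part of the original code. With the default delay of one second, the whole run should take roughly one second rather than ten, because every coroutine waits at the same time.

```python
import asyncio
import time


async def display(num, delay=1.0):
    # asyncio.sleep suspends only this coroutine; the event loop runs others meanwhile
    await asyncio.sleep(delay)
    print(num)
    return num


async def run_all(count=10, delay=1.0):
    # gather schedules every coroutine on the running loop and waits for all of them
    return await asyncio.gather(*(display(n, delay) for n in range(count)))


def timed_run(count=10, delay=1.0):
    # returns the collected results together with the wall-clock time of the run
    start = time.perf_counter()
    results = asyncio.run(run_all(count, delay))
    return results, time.perf_counter() - start


if __name__ == "__main__":
    results, elapsed = timed_run()
    print(results, f"{elapsed:.2f}s")
```

asyncio.run is available from Python 3.7 onward; it creates the event loop, runs the coroutine, and closes the loop afterwards.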

03

Basic concepts

Asynchronous IO means that after a program initiates an IO operation (which would otherwise block while waiting), it does not have to wait for the operation to finish and can carry on with other work. When the IO operation finishes, the program is notified and resumes from that point. Asynchronous IO programming is one way to achieve concurrency and is suited to IO-intensive tasks.

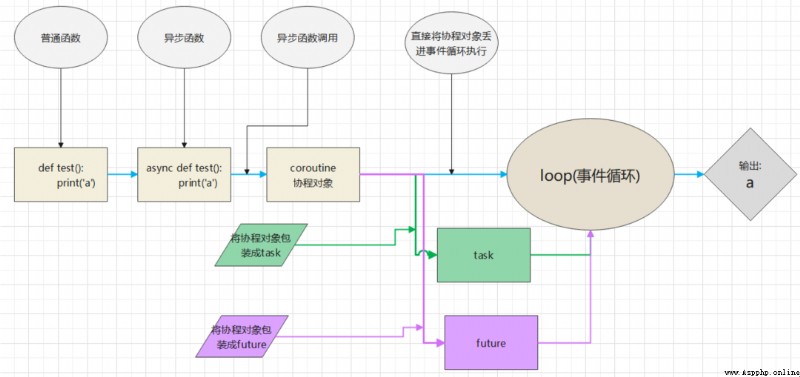

Python's asyncio module provides an asynchronous programming framework. The overall flow chart is as follows:

The following describes each concept at the code level.

async: defines a method (function). Calling such a method does not execute it immediately; the call returns a coroutine object instead.

async def test():
    print('hello asynchronous')

test()  # call the asynchronous function

Output: RuntimeWarning: coroutine 'test' was never awaited

coroutine: the coroutine object. It can be added to an event loop, which will then call it.

async def test():
    print('hello asynchronous')

c = test()  # calling the asynchronous function returns the coroutine object --> c
print(c)

Output: <coroutine object test at 0x0000023FD05AA360>

event_loop: the event loop, which works like an infinite loop. Functions can be registered with it; they do not execute immediately, but are executed by the loop once certain conditions are met.

import asyncio

async def test():
    print('hello asynchronous')

c = test()  # calling the asynchronous function returns the coroutine object --> c
loop = asyncio.get_event_loop()  # get an event loop object
loop.run_until_complete(c)  # hand the coroutine object to the loop, which runs the function body

Output: hello asynchronous
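On Python 3.7 and later, asyncio.run is the usual shortcut for the get_event_loop / run_until_complete pair shown above. The following is a minimal sketch of the same example in that style; the return value 'done' is added here only so there is something to inspect.

```python
import asyncio


async def test():
    print('hello asynchronous')
    return 'done'


# asyncio.run creates a new event loop, runs the coroutine to completion, then closes the loop
result = asyncio.run(test())
print(result)
```

This form avoids managing the loop object by hand, which is convenient for scripts with a single entry point.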

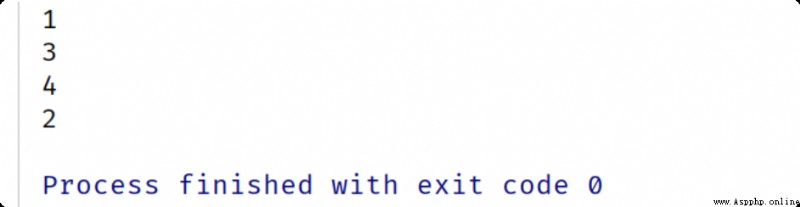

await: suspends the current coroutine until the awaited (potentially blocking) operation completes.

import asyncio

def running1():
    async def test1():
        print('1')
        await test2()
        print('2')

    async def test2():
        print('3')
        print('4')

    loop = asyncio.get_event_loop()
    loop.run_until_complete(test1())

if __name__ == '__main__':
    running1()

Output:

1

3

4

2
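In the example above, await test2() simply runs test2 to completion inside test1. The suspension becomes visible once several coroutines share the loop. The sketch below assumes two hypothetical workers, a and b, that record their progress in a shared list: while a is suspended in asyncio.sleep, b gets to start and finish.

```python
import asyncio


async def worker(name, delay, log):
    log.append(f'{name} start')
    await asyncio.sleep(delay)   # suspension point: control returns to the event loop
    log.append(f'{name} end')


async def main():
    log = []
    # both workers run on the same loop; a waits longer than b
    await asyncio.gather(worker('a', 0.2, log), worker('b', 0.1, log))
    return log


log = asyncio.run(main())
print(log)  # ['a start', 'b start', 'b end', 'a end']
```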

task: a task, which is a further encapsulation of the coroutine object and holds the state of the task.

import asyncio

async def test():
    print('hello asynchronous')

c = test()  # calling the asynchronous function returns the coroutine object --> c
loop = asyncio.get_event_loop()  # get an event loop object
task = loop.create_task(c)  # create the task
print(task)
loop.run_until_complete(task)  # run the task

Output:

<Task pending coro=<test() running at D: /xxxx.py>> # the task object

hello asynchronous # printed by the body of the asynchronous function

future: represents a task that will be executed in the future or has not been executed yet. It is essentially no different from a task. No code demonstration is given here.
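For readers who would still like to see a future on its own, here is a minimal sketch. It assumes a bare Future created with loop.create_future(), whose result is filled in a little later by the loop; the value 'ready' and the 0.05 second delay are arbitrary choices for illustration.

```python
import asyncio


async def main():
    loop = asyncio.get_running_loop()
    fut = loop.create_future()          # a bare Future: no coroutine attached
    states = [fut.done()]               # False: no result yet
    # ask the loop to fill in the result a little later
    loop.call_later(0.05, fut.set_result, 'ready')
    value = await fut                   # suspends until set_result is called
    states.append(fut.done())           # True: the result is now available
    return value, states


value, states = asyncio.run(main())
print(value, states)
```

A Task behaves the same way from the caller's side, except that its result comes from running a coroutine rather than from an explicit set_result call.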

First, create a function in the ordinary way:

def func(url):
    print(f'Sending request to {url}:')
    print(f'Request to {url} succeeded!')

func('www.baidu.com')

The results are shown below:

Sending request to www.baidu.com:

Request to www.baidu.com succeeded!

04

Basic operation

An asynchronous function is defined with the async keyword, and calling it returns a coroutine object.

An asynchronous function can be suspended partway through so that other asynchronous functions can run. Once the condition it is waiting on (for example, await asyncio.sleep(n)) has passed, execution returns to it. Let's modify the code above:

async def func(url):
    print(f'Sending request to {url}:')
    print(f'Request to {url} succeeded!')

func('www.baidu.com')

The result is as follows:

RuntimeWarning: coroutine 'func' was never awaited

As mentioned earlier, with the async keyword the function call yields a coroutine object. The coroutine cannot run directly; it needs to be added to an event loop, which calls it at the appropriate time.

A task object is a further encapsulation of the coroutine object:

import asyncio

async def func(url):
    print(f'Sending request to {url}:')
    print(f'Request to {url} succeeded!')

c = func('www.baidu.com')  # the function call yields a coroutine object --> c
loop = asyncio.get_event_loop()  # get an event loop object
task = loop.create_task(c)
loop.run_until_complete(task)  # register and start
print(task)

The result is as follows:

Sending request to www.baidu.com:

Request to www.baidu.com succeeded!

<Task finished coro=<func() done, defined at D:/data_/test.py:10> result=None>

As mentioned earlier, future and task are essentially the same:

import asyncio

async def func(url):
    print(f'Sending request to {url}:')
    print(f'Request to {url} succeeded!')

c = func('www.baidu.com')  # the function call yields a coroutine object --> c
loop = asyncio.get_event_loop()  # get an event loop object
future_task = asyncio.ensure_future(c)
print(future_task, 'not yet executed')
loop.run_until_complete(future_task)  # register and start
print(future_task, 'executed')

The result is as follows:

<Task pending coro=<func() running at D:/data/test.py:10>> not yet executed

Sending request to www.baidu.com:

Request to www.baidu.com succeeded!

<Task finished coro=<func() done, defined at D:/data/test.py:10> result=None> executed
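The printed objects above are both of type Task even though they were created with ensure_future. A small sketch can make the relationship explicit: in asyncio, Task is a subclass of Future. The func below is a stand-in that returns a made-up string instead of issuing a real request.

```python
import asyncio


async def func(url):
    # stand-in for a real request; the returned text is invented for this example
    return f'fetched {url}'


async def main():
    task = asyncio.ensure_future(func('www.baidu.com'))
    is_future = isinstance(task, asyncio.Future)   # Task is a subclass of Future
    before = task.done()                           # False: scheduled but not yet run
    result = await task
    return is_future, before, task.done(), result


summary = asyncio.run(main())
print(summary)
```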

Inside an asynchronous function, the await keyword can be used to suspend on time-consuming operations (IO operations such as network requests or file reads). When an asynchronous program reaches a step that requires a long wait, it suspends there and other asynchronous functions run in the meantime.

import asyncio, time

async def do_some_work(n):  # define an asynchronous function with the async keyword
    print('Waiting: {} seconds'.format(n))
    await asyncio.sleep(n)  # sleep for a while
    return 'Returned after {} seconds'.format(n)

start_time = time.time()  # start time
coro = do_some_work(2)
loop = asyncio.get_event_loop()  # get an event loop object
loop.run_until_complete(coro)
print('Elapsed time:', time.time() - start_time)

The result is as follows:

Waiting: 2 seconds

Elapsed time: 2.00131201744079605

05

Multi-task coroutines

A Task object wraps a coroutine object and keeps track of its running state. The run_until_complete() method registers the task with the event loop.

To run multiple tasks, we need to register a whole list of tasks at once, which can be done with run_until_complete(asyncio.wait(tasks)). Here tasks is a sequence of tasks (usually a list).

Multiple tasks can also be registered with run_until_complete(asyncio.gather(*tasks)).

import asyncio, time

async def do_some_work(i, n):  # define an asynchronous function with the async keyword
    print('Task {} waiting: {} seconds'.format(i, n))
    await asyncio.sleep(n)  # sleep for a while
    return 'Task {} returned after {} seconds'.format(i, n)

start_time = time.time()  # start time
tasks = [asyncio.ensure_future(do_some_work(1, 2)),
         asyncio.ensure_future(do_some_work(2, 1)),
         asyncio.ensure_future(do_some_work(3, 3))]
loop = asyncio.get_event_loop()
loop.run_until_complete(asyncio.wait(tasks))
for task in tasks:
    print('Task result:', task.result())
print('Elapsed time:', time.time() - start_time)

The result is as follows:

Task 1 waiting: 2 seconds

Task 2 waiting: 1 seconds

Task 3 waiting: 3 seconds

Task result: Task 1 returned after 2 seconds

Task result: Task 2 returned after 1 seconds

Task result: Task 3 returned after 3 seconds

Elapsed time: 3.0028676986694336
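The gather variant mentioned earlier can be sketched as follows. This is an illustration rather than the article's code: the delays are shortened to fractions of a second, and the jobs list is a hypothetical way of passing the (task number, delay) pairs. gather returns the results in the order the coroutines were passed in, regardless of which one finishes first.

```python
import asyncio
import time


async def do_some_work(i, n):
    await asyncio.sleep(n)
    return f'Task {i} returned after {n} seconds'


async def main(jobs):
    # gather returns results in the order the awaitables were passed in
    return await asyncio.gather(*(do_some_work(i, n) for i, n in jobs))


start = time.perf_counter()
results = asyncio.run(main([(1, 0.2), (2, 0.1), (3, 0.3)]))
elapsed = time.perf_counter() - start

for line in results:
    print(line)
print(f'Elapsed time: {elapsed:.2f}')
```

As in the wait version, the total time should be close to the longest single delay rather than the sum of all three.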

06

Hands-on | Crawling LOL skins

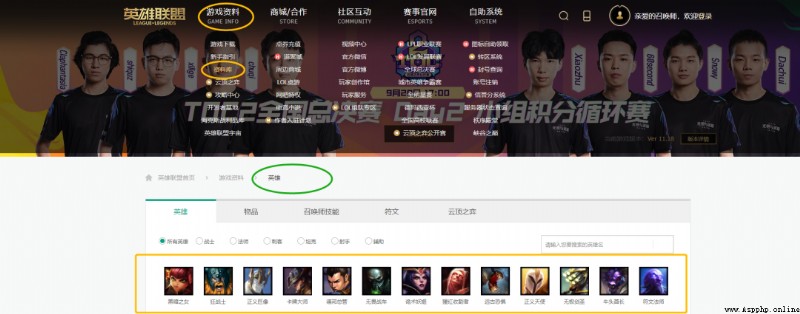

First, open the official website :

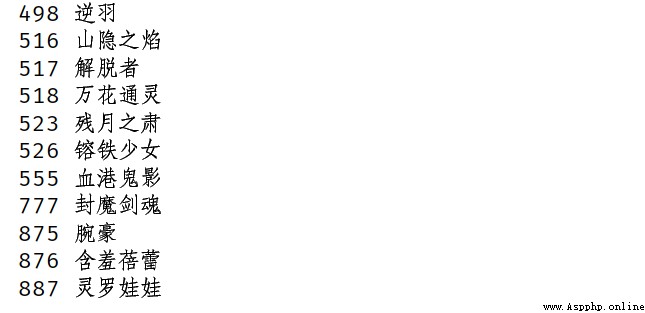

You can see the list of heroes, which is not shown in detail here. Each hero has many skins; our goal is to crawl all the skins of every hero and save them to the corresponding folders.

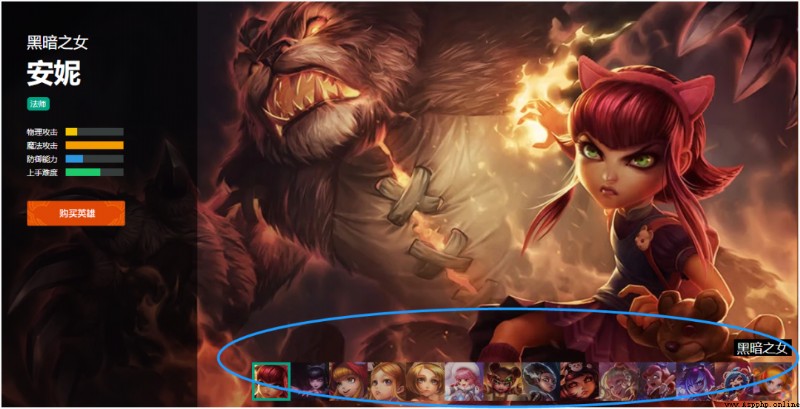

Open a hero's skin page, as shown below:

This is the Daughter of Darkness; the small images below correspond to this hero's skins. By inspecting the network panel, we find that the skin data is stored in a js file.

We then found the pattern in the urls where the hero skins are stored:

url1 = 'https://game.gtimg.cn/images/lol/act/img/js/hero/1.js'

url2 = 'https://game.gtimg.cn/images/lol/act/img/js/hero/2.js'

url3 = 'https://game.gtimg.cn/images/lol/act/img/js/hero/3.js'

Only the id parameter changes from one url to the next, so the pattern is:

'https://game.gtimg.cn/images/lol/act/img/js/hero/{}.js'.format(i)

However, only the first ids are consecutive. The id of each hero can be found on the page that lists all heroes.

The ids of the last few heroes, captured here, show that crawling every hero would require setting up the full id list first. Because the leading ids are consecutive, here we crawl the skins of the first 20 heroes.

Those ids are in order, so range(1, 21) can be used to construct the urls dynamically:

def get_page():
    page_urls = []
    for i in range(1, 21):
        url = 'https://game.gtimg.cn/images/lol/act/img/js/hero/{}.js'.format(i)
        print(url)
        page_urls.append(url)
    return page_urls

Then parse each page to get the url addresses of the skin images:

import json
import requests

# headers: the request headers dict, defined elsewhere in the original script (not shown)

def get_img():
    img_urls = []
    page_urls = get_page()
    for page_url in page_urls:
        res = requests.get(page_url, headers=headers)
        result = res.content.decode('utf-8')
        res_dict = json.loads(result)
        skins = res_dict["skins"]
        for hero in skins:
            item = {}
            item['name'] = hero["heroName"]
            item['skin_name'] = hero["name"]
            if hero["mainImg"] == '':
                continue  # skip skins without a main image
            item['imgLink'] = hero["mainImg"]
            print(item)
            img_urls.append(item)
    return img_urls
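The filtering step inside get_img can be tried without any network access. The sketch below pulls that logic into a hypothetical helper, extract_skins, and feeds it made-up sample data that only mimics the three fields used above (heroName, name, mainImg); the real js files contain more fields and different values.

```python
def extract_skins(res_dict):
    """Collect name, skin name and image link for every skin that has a main image."""
    items = []
    for skin in res_dict.get("skins", []):
        if not skin.get("mainImg"):
            continue  # skip entries without a main image
        items.append({
            "name": skin["heroName"],
            "skin_name": skin["name"],
            "imgLink": skin["mainImg"],
        })
    return items


# Hypothetical sample data shaped like the fields used above; all values are made up.
sample = {
    "skins": [
        {"heroName": "HeroA", "name": "HeroA Classic", "mainImg": "https://example.com/a0.jpg"},
        {"heroName": "HeroA", "name": "HeroA Chroma", "mainImg": ""},
    ]
}
items = extract_skins(sample)
print(items)
```

Keeping the parsing separate from the download makes it easier to check the empty mainImg case on its own.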

Explanation:

res_dict = json.loads(result): converts the json-format string that was fetched into a dictionary;

heroName: the hero's name (this is the same for all of a hero's skins, which makes it convenient to create a folder per hero later);

name: the full name, including the skin name (this differs for each skin);

Some entries have an empty 'mainImg', so we need to check for that.

Here we create a folder based on the hero's name. Pay attention to how the image files are named, and don't forget the / separator, so that the directory structure is built correctly.
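The article's own save_img is not shown, so the following is only a guess at its shape, written to match the save_img(index, item) call used in the main function. Everything beyond that signature is an assumption: the root folder name, the .jpg extension, replacing "/" in skin names, and the use of the standard library's urllib in a worker thread in place of whatever HTTP client the original used. The root and fetch parameters are hypothetical additions so the sketch can be exercised without network access.

```python
import asyncio
import os
import urllib.request


def fetch_bytes(url):
    # Blocking download with the standard library (a stand-in for the article's unseen code)
    with urllib.request.urlopen(url) as resp:
        return resp.read()


async def save_img(index, item, root="skins", fetch=fetch_bytes):
    # index is accepted only to mirror the call in main; this sketch does not use it
    folder = os.path.join(root, item["name"])          # one folder per hero
    os.makedirs(folder, exist_ok=True)
    # a "/" inside a skin name would otherwise be read as a directory separator
    file_name = item["skin_name"].replace("/", "_") + ".jpg"
    path = os.path.join(folder, file_name)
    loop = asyncio.get_running_loop()
    # run the blocking download in a worker thread so the event loop stays free
    data = await loop.run_in_executor(None, fetch, item["imgLink"])
    with open(path, "wb") as f:
        f.write(data)
    return path
```

Passing a fake fetch function, for example one that returns fixed bytes, is enough to check the folder and file naming before pointing the code at real urls.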

The main function:

import asyncio
import time

def main():
    loop = asyncio.get_event_loop()
    img_urls = get_img()
    print(len(img_urls))
    # one coroutine per image: save_img(index, item)
    tasks = [save_img(i, img) for i, img in enumerate(img_urls)]
    try:
        # gather accepts coroutine objects directly; asyncio.wait no longer does on Python 3.11+
        loop.run_until_complete(asyncio.gather(*tasks))
    finally:
        loop.close()

if __name__ == '__main__':
    start = time.time()
    main()
    end = time.time()
    print(end - start)

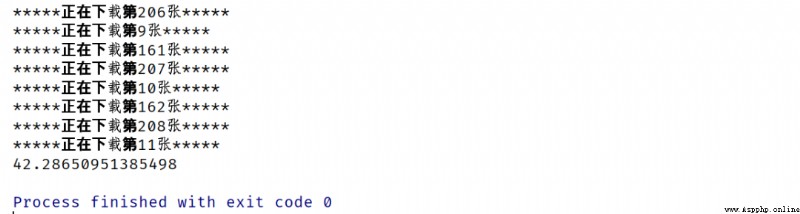

Running results:

Downloading 233 pictures took 42 s, which is a decent speed. The resulting file directory is as follows:

After crawling the pictures asynchronously, we also crawl the data synchronously with requests to compare efficiency. The original code is modified for this; the details are skipped here because everything else stays the same. The only change is replacing the event loop part with an ordinary loop:

img_urls = get_img()
print(len(img_urls))
for i, img_url in enumerate(img_urls):
    save_img(i, img_url)

We can see that using coroutines is somewhat faster than using requests synchronously.

That is all for this article. Interested readers can type in the code themselves.
