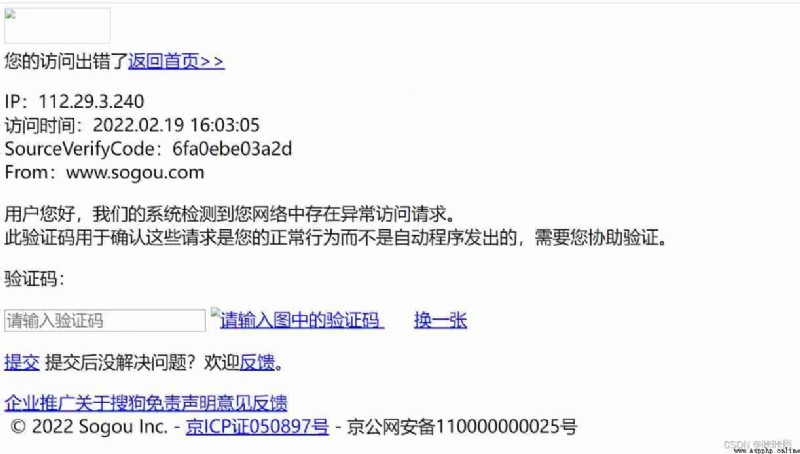

Case study 1: Crawl the Sogou search results page for a given keyword (a simple web page collector)

import requests

url = "https://www.sogou.com/web"

# Handle the parameters carried by the URL
kw = input("Enter the keyword to search: ")
param = {
    'query': kw
}

# Send a request to the URL; requests encodes the parameters into the query string
response = requests.get(url=url, params=param)
page_text = response.text

# Save the returned page under the keyword's name
fileName = kw + '.html'
with open(fileName, 'w', encoding='utf-8') as fp:
    fp.write(page_text)
print(fileName + " saved successfully!")
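What `params` does can be inspected without sending a request. requests URL-encodes the dictionary into the query string; the sketch below uses the standard library's `urllib.parse.urlencode`, which applies the same style of encoding, to show roughly what the final URL looks like. The keyword here is a sample chosen for illustration, not part of the original script.

```python
from urllib.parse import urlencode, urlsplit, parse_qs

base_url = "https://www.sogou.com/web"
param = {"query": "python 爬虫"}  # sample keyword, chosen for illustration

# Spaces and non-ASCII characters are encoded so the URL stays valid.
full_url = base_url + "?" + urlencode(param)
print(full_url)

# Decoding the query string recovers the original keyword.
decoded = parse_qs(urlsplit(full_url).query)
print(decoded)
```

Passing the dictionary through `params` is usually safer than concatenating the keyword into the URL by hand, because the encoding is handled for you.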

Anti-crawling countermeasure: User-Agent (UA) spoofing

# UA spoofing: send a browser's User-Agent string along with the request
headers = {
    "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/98.0.4758.102 Safari/537.36"
}
response = requests.get(url=url, params=param, headers=headers)
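Why UA spoofing helps can be illustrated with a toy version of the check a site might run. The function `is_blocked` and its list of markers are hypothetical; real sites use their own, usually more elaborate, rules. By default requests identifies itself as `python-requests/<version>`, so the version number below is only an example.

```python
def is_blocked(user_agent: str) -> bool:
    """Hypothetical server-side check: reject clients that look like scripts."""
    script_markers = ("python-requests", "python-urllib", "curl", "scrapy")
    ua = user_agent.lower()
    return any(marker in ua for marker in script_markers)


# The kind of User-Agent requests sends when no headers are supplied.
default_ua = "python-requests/2.27.1"

# The browser User-Agent used in the headers dictionary above.
browser_ua = (
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/98.0.4758.102 Safari/537.36"
)

print(is_blocked(default_ua))
print(is_blocked(browser_ua))
```

A request carrying the browser string passes this kind of filter, which is why the `headers` dictionary is sent with every request in the case studies that follow.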

Case study 2: Scrape Baidu Translate

import requests
import json

# 1. Specify the URL
post_url = "https://fanyi.baidu.com/langdetect"

# 2. UA spoofing
headers = {
    "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/98.0.4758.102 Safari/537.36"
}

# 3. Handle the POST parameters (done the same way as for a GET request)
data = {
    # 'from': 'en',
    # 'to': 'zh',
    'query': 'dog',
}

# 4. Send the request
response = requests.post(url=post_url, data=data, headers=headers)

# 5. Get the response data. json() returns a Python object; only call it
#    when the response body is known to be JSON.
dic_obj = response.json()
print(dic_obj)

# Persistent storage
with open('./dog.json', 'w', encoding='utf-8') as fp:
    json.dump(dic_obj, fp=fp, ensure_ascii=False)

print("over")

Case study 3: Scrape movie details from the Douban movie chart (https://movie.douban.com/)

# -*- coding: utf-8 -*-
# @Time : 2022/2/19 17:33
# @File : requests hands-on: Douban movies.py
# @software : PyCharm

import requests
import json

headers = {
    "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/98.0.4758.102 Safari/537.36"
}

url = "https://movie.douban.com/j/chart/top_list"

# Query parameters copied from the request the chart page sends
param = {
    'type': '24',
    'interval_id': '100:90',
    'action': '',
    'start': '40',
    'limit': '20'
}

response = requests.get(url=url, params=param, headers=headers)
list_data = response.json()

# Persistent storage
with open('./douban.json', 'w', encoding='utf-8') as fp:
    json.dump(list_data, fp=fp, ensure_ascii=False)

print("over")
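The `start` and `limit` values above fetch a single slice of the chart. Assuming `start` is a zero-based offset and `limit` is the number of movies per request (an inference from the parameter names, not something confirmed by Douban), consecutive pages could be built as sketched below. The helper `page_params` is hypothetical.

```python
def page_params(page: int, page_size: int = 20) -> dict:
    """Build the query parameters for one zero-based page of the chart."""
    return {
        "type": "24",
        "interval_id": "100:90",
        "action": "",
        "start": str(page * page_size),  # offset of the first movie on this page
        "limit": str(page_size),         # number of movies per request
    }


# Parameters for the first three pages: offsets 0, 20 and 40.
pages = [page_params(page) for page in range(3)]
for p in pages:
    print(p["start"], p["limit"])
```

Each dictionary can be passed as `params` to `requests.get`, and the returned lists appended together before saving. Under the stated assumption, `page_params(2)` reproduces the `start` of 40 used in the script above.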

Case study 4: Query the KFC restaurant finder (http://www.kfc.com.cn/kfccda/index.aspx) for the restaurants in a given location

# -*- coding: utf-8 -*-
# @Time : 2022/2/19 18:01
# @File : requests hands-on: KFC.py
# @software : PyCharm

import requests
import json

headers = {
    "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/98.0.4758.102 Safari/537.36"
}

url = "http://www.kfc.com.cn/kfccda/ashx/GetStoreList.ashx?op=keyword"

kw = input("Enter the name of the city to query: ")
param = {
    'cname': '',
    'pid': '',
    'keyword': kw,
    'pageIndex': '1',
    'pageSize': '10'
}

response = requests.get(url=url, params=param, headers=headers)
page_text = response.text

# Persistent storage
with open('./kfc.text', 'w', encoding='utf-8') as fp:
    fp.write(page_text)

print("over")

Case study 5: Crawl the cosmetics production license data published by the National Medical Products Administration (NMPA)

http://scxk.nmpa.gov.cn:81/xk/

# -*- coding: utf-8 -*-
# @Time : 2022/2/19 19:08
# @File : requests hands-on: crawling NMPA data.py
# @software : PyCharm

import requests
import json

if __name__ == "__main__":
    id_list = []        # enterprise IDs
    all_data_list = []  # details of every enterprise

    headers = {
        "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/98.0.4758.102 Safari/537.36"
    }

    # Step 1: collect the enterprise IDs in batches from the paginated list
    url = "http://scxk.nmpa.gov.cn:81/xk/itownet/portalAction.do?method=getXkzsList"
    for page in range(1, 6):
        # Form parameters for one page of results
        data = {
            'on': 'true',
            'page': str(page),
            'pageSize': '15',
            'productName': '',
            'conditionType': '1',
            'applyname': '',
            'applysn': '',
        }
        json_ids = requests.post(url=url, headers=headers, data=data).json()
        for dic in json_ids['list']:
            id_list.append(dic['ID'])

    # Step 2: fetch the details of each enterprise.
    # Every detail request uses the same URL; only the id parameter differs.
    url_xq = "http://scxk.nmpa.gov.cn:81/xk/itownet/portalAction.do?method=getXkzsById"
    for enterprise_id in id_list:
        data_xq = {
            "id": enterprise_id
        }
        json_xq = requests.post(url=url_xq, headers=headers, data=data_xq).json()
        print(json_xq)
        all_data_list.append(json_xq)
        # fp = open('.json', 'w', encoding='utf-8')
        # json.dump(json_xq, fp=fp, ensure_ascii=False)
        # fp.close()

    # Persistent storage; the with block closes the file after writing
    with open('./allData.json', 'w', encoding='utf-8') as fp:
        json.dump(all_data_list, fp=fp, ensure_ascii=False)

    print("over")
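The script above follows a two-stage pattern: first page through a list endpoint to gather IDs, then request the details for each ID. A minimal sketch of that pattern, with the network call swapped for a stand-in so it runs offline, might look like this. The names `collect_ids`, `collect_details` and `fake_post_json` are hypothetical, and the canned data is invented purely for illustration.

```python
def collect_ids(post_json, pages):
    """Stage 1: gather the ID of every record on the given list pages."""
    ids = []
    for page in pages:
        listing = post_json("list", {"page": str(page), "pageSize": "15"})
        ids.extend(item["ID"] for item in listing["list"])
    return ids


def collect_details(post_json, ids):
    """Stage 2: fetch one detail record per ID."""
    return [post_json("detail", {"id": record_id}) for record_id in ids]


def fake_post_json(kind, data):
    """Stand-in for requests.post(...).json(); returns canned, made-up data."""
    if kind == "list":
        page = int(data["page"])
        return {"list": [{"ID": f"id-{page}-{n}"} for n in range(2)]}
    return {"id": data["id"], "name": "placeholder"}


ids = collect_ids(fake_post_json, range(1, 3))
details = collect_details(fake_post_json, ids)
print(ids)
print(len(details))
```

Keeping the request function as a parameter is one possible design choice: the same two functions could be driven by a small wrapper around `requests.post` in real use, and by the stand-in when checking the logic without touching the site.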