Operating system: Ubuntu 20.04

Spark version: 3.2.1

Hadoop version: 3.3.1

Python version: 3.8.10

Java version: 1.8.202

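```python
from pyspark import SparkConf, SparkContext

# Run locally with a single worker thread; "WordCount" is the application name
conf = SparkConf().setAppName("WordCount").setMaster("local")
sc = SparkContext(conf=conf)

# Read the input text file from HDFS (filesystem at localhost:9000)
inputFile = "hdfs://localhost:9000/user/way/word.txt"
textFile = sc.textFile(inputFile)
```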

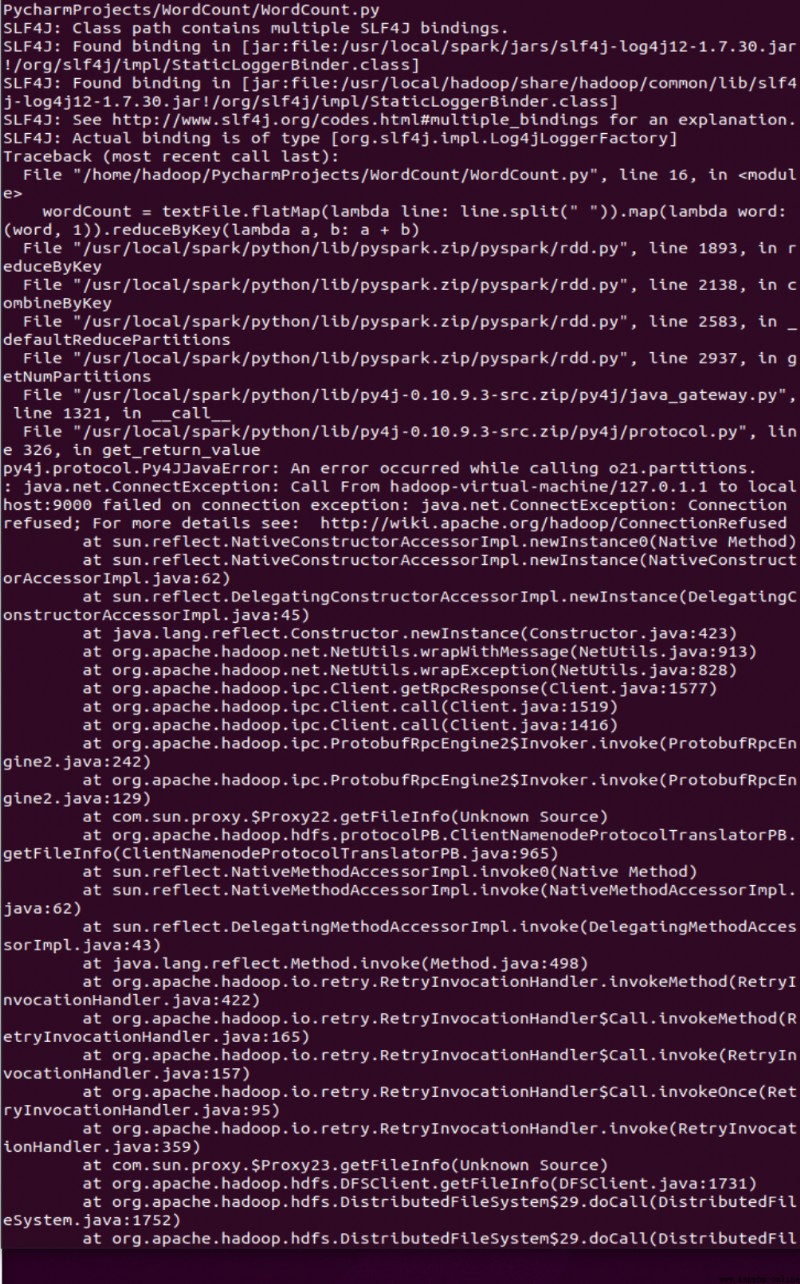

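```python
# Split each line into words, pair each word with 1, then sum the counts per word
wordCount = textFile.flatMap(lambda line: line.split(" ")).map(lambda word: (word, 1)).reduceByKey(lambda a, b: a + b)
wordCount.foreach(print)
# Release the SparkContext when done
sc.stop()
```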
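To make clear what the `flatMap` → `map` → `reduceByKey` chain computes, here is a minimal plain-Python sketch of the same logic that needs no Spark installation. The function name `word_count` and the sample lines are hypothetical, chosen only for illustration. Like the Spark code, it splits on a single space, so consecutive spaces produce an empty-string "word".

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def word_count(lines: Iterable[str]) -> Dict[str, int]:
    """Plain-Python mirror of flatMap -> map -> reduceByKey (hypothetical helper, no Spark needed)."""
    # flatMap: split every line on a single space and flatten into one stream of words
    words: List[str] = [word for line in lines for word in line.split(" ")]
    # map: pair each word with the count 1
    pairs: List[Tuple[str, int]] = [(word, 1) for word in words]
    # reduceByKey: combine the values of identical keys with a + b
    counts: Dict[str, int] = defaultdict(int)
    for word, one in pairs:
        counts[word] = counts[word] + one
    return dict(counts)


if __name__ == "__main__":
    # Hypothetical sample input standing in for the lines of word.txt
    sample = ["hello spark", "hello hadoop"]
    for pair in word_count(sample).items():
        print(pair)
```

Running the pure-Python version first is a quick way to confirm the counting logic itself is fine, so that a failure in the Spark version points to the environment rather than the code.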

Running it in PyCharm fails with `Process finished with exit code 1`:

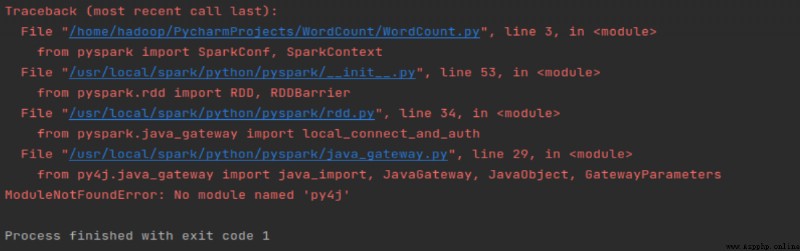

At first I thought the problem was in the py4j file directory, but that turned out not to be the case. Later I found that the error was caused by the package files imported in PyCharm, possibly because of a version compatibility issue.

Once the package import problem was fixed, the code ran normally.
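Since the cause appears to be a version mismatch, one way to catch it early is to compare the version of the `pyspark` package that PyCharm imports with the version of the installed Spark. In a real session these values can be read from `pyspark.__version__` and `sc.version`. The sketch below assumes, as a rule of thumb, that the two should share the same major.minor version; the helper names `major_minor` and `pyspark_matches_spark` are hypothetical, and the mismatched version `3.3.0` is an illustrative value, not one from this post.

```python
from typing import Tuple


def major_minor(version: str) -> Tuple[int, int]:
    """Extract (major, minor) from a version string such as '3.2.1' (hypothetical helper)."""
    parts = version.strip().split(".")
    return int(parts[0]), int(parts[1])


def pyspark_matches_spark(pyspark_version: str, spark_version: str) -> bool:
    """Assumed rule: the imported pyspark package should share major.minor with the installed Spark."""
    return major_minor(pyspark_version) == major_minor(spark_version)


if __name__ == "__main__":
    # In a live session, pass pyspark.__version__ and sc.version instead of these literals
    installed_spark = "3.2.1"  # Spark version from the environment listed above
    for candidate in ("3.2.1", "3.3.0"):
        print(candidate, pyspark_matches_spark(candidate, installed_spark))
```

Comparing only major.minor is a deliberate simplification; patch releases usually differ only in fixes, while a minor-version gap is where package incompatibilities are more likely to appear.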