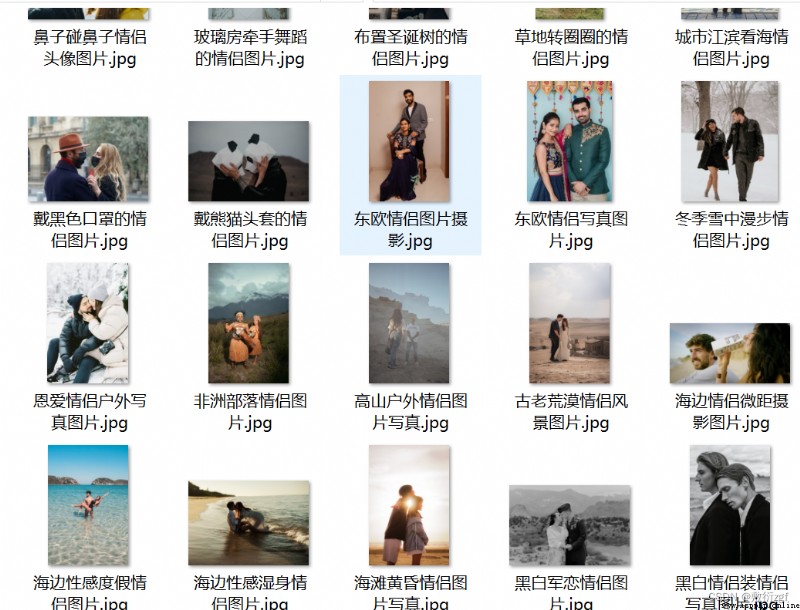

# Requirement: download the images from the first ten pages

# Page 1: https://sc.chinaz.com/tupian/qinglvtupian.html

# Page 2: https://sc.chinaz.com/tupian/qinglvtupian_2.html

import urllib.request

from lxml import etree

def creat_request(page):
    # Page 1 has no numeric suffix; later pages end in _<page>.html
    if page == 1:
        url = 'https://sc.chinaz.com/tupian/qinglvtupian.html'
    else:
        url = 'https://sc.chinaz.com/tupian/qinglvtupian_' + str(page) + '.html'
    headers = {
        'user-agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/102.0.5005.124 Safari/537.36 Edg/102.0.1245.44'
    }
    request = urllib.request.Request(url=url, headers=headers)
    return request

def get_content(request):
    response = urllib.request.urlopen(request)
    content = response.read().decode('utf-8')
    return content

def down_load(content):
    # Download the pictures
    tree = etree.HTML(content)
    name_list = tree.xpath("//div[@id='container']//a/img/@alt")
    # Image-heavy sites often lazy-load, so the real URL may sit in an attribute other than src
    src_list = tree.xpath("//div[@id='container']//a/img/@src")
    # print(len(name_list), len(src_list))
    for i in range(len(name_list)):
        name = name_list[i]
        src = src_list[i]
        url = 'https:' + src
        # Removing '_s' from the link gives the HD version of the picture
        url = url.replace('_s', '')
        # The ./file/ directory must already exist
        urllib.request.urlretrieve(url=url, filename='./file/' + name + '.jpg')

if __name__ == '__main__':
    start_page = int(input('Please enter the starting page number: '))
    end_page = int(input('Please enter the end page number: '))
    for page in range(start_page, end_page + 1):
        # (1) Build the customized request object
        request = creat_request(page)
        # (2) Get the page source
        content = get_content(request)
        # (3) Download
        down_load(content)
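The download step builds each URL with `'https:' + src` and `url.replace('_s', '')`, which also removes any other `_s` in the path, and it uses the `alt` text directly as a file name. The sketch below shows one more defensive way to do both. The helper names `full_size_url` and `safe_filename` and the `img.example.com` address are made up for illustration, and it assumes the thumbnail suffix sits directly before the file extension.

```python
import re
from urllib.parse import urljoin

# Hypothetical helpers for illustration; not part of the original script.
PAGE_URL = 'https://sc.chinaz.com/tupian/qinglvtupian.html'

def full_size_url(src, page_url=PAGE_URL):
    """Resolve a protocol-relative src and drop a trailing '_s' thumbnail suffix."""
    absolute = urljoin(page_url, src)
    # Only strip '_s' when it sits directly before the file extension.
    return re.sub(r'_s(\.\w+)$', r'\1', absolute)

def safe_filename(name, ext='.jpg'):
    """Replace characters that are not allowed in file names on common platforms."""
    cleaned = re.sub(r'[\\/:*?"<>|\s]+', '_', name).strip('_')
    return (cleaned or 'image') + ext

print(full_size_url('//img.example.com/pic_small/couple_s.jpg'))
print(safe_filename('couple: sunset/beach'))
```

With these helpers, the `pic_small` directory in the path stays intact, whereas a plain `replace('_s', '')` would turn it into `picmall`.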

1. Install jsonpath

pip install jsonpath -i https://pypi.douban.com/simple

2. JSONPath parsing case study

JSON data

{
    "store": {
        "book": [
            {
                "category": "Fix true",
                "author": "Six ways",
                "title": "How bad guys are made",
                "price": 8.95
            },
            {
                "category": "Fix true",
                "author": "I eat tomatoes",
                "title": "Devour the stars",
                "price": 9.96
            },
            {
                "category": "Fix true",
                "author": "Three little Tang family",
                "title": "Doulo land",
                "price": 9.45
            },
            {
                "category": "Fix true",
                "author": "The third uncle of Nanpai",
                "title": "Xincheng substation",
                "price": 8.72
            }
        ],
        "bicycle": {
            "color": "black",
            "price": 19.95
        }
    }
}

(1) Get the authors of all books in the bookstore

import json
import jsonpath

obj = json.load(open('file/jsonpath.json', 'r', encoding='utf-8'))
# book[*] selects every book; book[0] would select only the first one
author_list = jsonpath.jsonpath(obj, '$.store.book[*].author')
print(author_list)

(2) Get all authors

# '..' matches author at any depth of the document
author_list = jsonpath.jsonpath(obj, '$..author')
print(author_list)
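To make the `..` operator concrete, here is a plain-Python sketch of the recursive search it performs. The helper `find_all_keys` is hypothetical and is not part of the jsonpath package; it only mimics what `$..author` or `$..price` select on a trimmed-down copy of the bookstore data.

```python
def find_all_keys(node, key):
    """Collect every value stored under `key` at any depth of a parsed JSON value."""
    found = []
    if isinstance(node, dict):
        for k, v in node.items():
            if k == key:
                found.append(v)
            # Keep descending: nested objects may contain the key again.
            found.extend(find_all_keys(v, key))
    elif isinstance(node, list):
        for item in node:
            found.extend(find_all_keys(item, key))
    return found

# Trimmed-down sample in the same shape as the bookstore data.
sample = {
    "store": {
        "book": [
            {"author": "Six ways", "price": 8.95},
            {"author": "I eat tomatoes", "price": 9.96},
        ],
        "bicycle": {"color": "black", "price": 19.95},
    }
}

print(find_all_keys(sample, 'author'))
print(find_all_keys(sample, 'price'))
```

The price search also picks up the bicycle, which is why `$.store..price` returns one more value than there are books.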

(3) All elements under store

# All elements under store
tag_list = jsonpath.jsonpath(obj, '$.store.*')
print(tag_list)

(4) All prices under store

# All prices under store
price_list = jsonpath.jsonpath(obj, '$.store..price')
print(price_list)

(5) The third book

# The third book
book = jsonpath.jsonpath(obj, '$..book[2]')
print(book)

(6) The last book

# The last book
book = jsonpath.jsonpath(obj, '$..book[(@.length - 1)]')
print(book)

(7) The first two books

# The first two books
book_list = jsonpath.jsonpath(obj, '$..book[0,1]')  # Equivalent to the next expression
book_list = jsonpath.jsonpath(obj, '$..book[:2]')
print(book_list)

(8) Filter the books that have an isbn field (a conditional filter needs a question mark before the parentheses)

# Books that have an isbn field; conditional filters take the form ?(...)
book_list = jsonpath.jsonpath(obj, '$..book[?(@.isbn)]')
print(book_list)

(9) Books that cost more than 9.8

# Books whose price is greater than 9.8
book_list = jsonpath.jsonpath(obj, '$..book[?(@.price>9.8)]')
print(book_list)
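For readers more familiar with Python than with JSONPath, the index, slice, and filter expressions in (5) through (9) correspond roughly to the list operations sketched below. This is only an analogy on a made-up list (the titles and the isbn value are invented), not how the jsonpath package is implemented.

```python
# Made-up sample data; only the second book carries an isbn field.
books = [
    {"title": "Book A", "price": 8.95},
    {"title": "Book B", "price": 9.96, "isbn": "0-000-00000-0"},
    {"title": "Book C", "price": 9.45},
]

third_book = books[2]                                # like $..book[2]
last_book = books[-1]                                # like $..book[(@.length - 1)]
first_two = books[:2]                                # like $..book[0,1] or $..book[:2]
with_isbn = [b for b in books if 'isbn' in b]        # like $..book[?(@.isbn)]
over_9_8 = [b for b in books if b['price'] > 9.8]    # like $..book[?(@.price>9.8)]

print([b['title'] for b in first_two])
print([b['title'] for b in with_isbn])
print([b['title'] for b in over_9_8])
```

One difference worth knowing: as far as I know, the jsonpath package returns `False` rather than an empty list when nothing matches, so example (8) would print `False` for the bookstore data above, which contains no isbn field.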

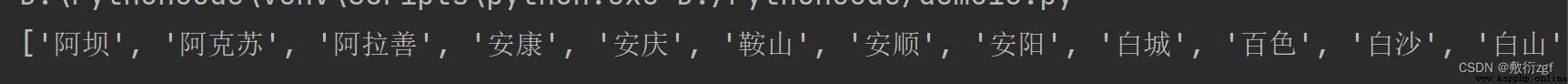

import urllib.request

url = 'http://dianying.taobao.com/cityAction.json?activityId&_ksTS=1656042784573_63&jsoncallback=jsonp64&action=cityAction&n_s=new&event_submit_doGetAllRegion=true'

headers = {
    # ':authority': ' dianying.taobao.com',
    # ':method': ' GET',
    # ':path': ' /cityAction.json?activityId&_ksTS=1656042784573_63&jsoncallback=jsonp64&action=cityAction&n_s=new&event_submit_doGetAllRegion=true',
    # ':scheme': ' https',
    'accept':'*/*',
    # 'accept-encoding': ' gzip, deflate, br',
    'accept-language':'zh-CN,zh;q=0.9,en;q=0.8,en-GB;q=0.7,en-US;q=0.6',
    'cookie':'cna=zIsvG8QofGgCAXAc0HQF5jMC;ariaDefaultTheme=undefined;xlly_s=1;t=9ac1f71719420207d1f87d27eb676a4c;cookie2=1780e3cc3bb6e7514cd141e9f837bf83;v=0;_tb_token_=fb13e3ee13e77;_m_h5_tk=e38bb4bac8606d14f4e3e90d0499f94a_1656050157762;_m_h5_tk_enc=76f353efff1883eec471912a42ecc783;tfstk=cfOCBVmvikqQn3HzTegZLGZ2rC15Z9ic58j9RcpoM5T02MTCixVVccfNfkfPyN1..;l=eBMAoWzqL6O1ZKDhBOfwhurza77OGIRAguPzaNbMiOCP_gCp5jA1W6b5MMT9CnGVhsT2R3uKagAwBeYBqI2jjqjqnZ2bjbkmn;isg=BMrKoAG5nLBFjxABGbp50JlLG7Bsu04VAQVWEFQDG52oB2rBPEsiJUJxF3Pb98at',
    'referer':'https://www.taobao.com/',
    'sec-ch-ua': ' "Not A;Brand";v="99", "Chromium";v="102", "Microsoft Edge";v="102"',
    'sec-ch-ua-mobile': '?0',
    'sec-ch-ua-platform': '"Windows"',
    'sec-fetch-dest': 'script',
    'sec-fetch-mode': 'no-cors',
    'sec-fetch-site': 'same-site',
    'user-agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/102.0.5005.124 Safari/537.36 Edg/102.0.1245.44',
}

request = urllib.request.Request(url=url,headers=headers)

response = urllib.request.urlopen(request)

content = response.read().decode('utf-8')

# The response is JSONP, i.e. jsonp64(...); keep only the JSON payload between the parentheses
content = content.split('(')[1].split(')')[0]

with open('./file/jsonpath Analyze the ticket washing .json', 'w', encoding='utf-8') as fp:
    fp.write(content)

import json
import jsonpath

obj = json.load(open('./file/jsonpath Analyze the ticket washing .json', 'r', encoding='utf-8'))
city_list = jsonpath.jsonpath(obj, '$..regionName')
print(city_list)
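Splitting on `(` and `)` works as long as the payload itself contains no parentheses. A slightly more robust way to unwrap a JSONP response is sketched below; the helper `strip_jsonp` and the sample payload are assumptions made for illustration, since the real structure of the city response is not shown here.

```python
import json
import re

def strip_jsonp(text):
    """Return the parsed JSON payload of a JSONP string such as 'callback({...});'."""
    # Greedy match: everything between the first '(' after the callback name
    # and the last ')' in the text is treated as the payload.
    match = re.match(r'^\s*[\w$.]+\s*\((.*)\)\s*;?\s*$', text, re.DOTALL)
    if match is None:
        raise ValueError('not a JSONP response')
    return json.loads(match.group(1))

# Hypothetical payload: a region name that itself contains parentheses.
sample = 'jsonp64({"returnValue": {"A": [{"regionName": "Anshan (city)"}]}});'

data = strip_jsonp(sample)
print(data['returnValue']['A'][0]['regionName'])

# The split-based approach cuts this payload off at the inner '('.
print(sample.split('(')[1].split(')')[0])
```

Because the helper returns a parsed object, the result could be passed straight to `jsonpath.jsonpath` without writing an intermediate file.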

Abbreviation: bs4

What it is: BeautifulSoup, like lxml, is an HTML parser whose main job is to parse a document and extract data from it

Advantage: a user-friendly interface that is easy to use

Disadvantage: less efficient than lxml

[Usage steps]

1. Install: pip install bs4 -i https://pypi.douban.com/simple

2. Import: from bs4 import BeautifulSoup

3. Create the object

From a server response: soup = BeautifulSoup(response.read().decode(),'lxml')

From a local file: soup = BeautifulSoup(open('1.html'),'lxml')

Case study:

from bs4 import BeautifulSoup

# 1. Learn the basic bs4 syntax by parsing a local file
# open() uses the platform's default encoding (gbk on Chinese-locale Windows), so specify the encoding explicitly
soup = BeautifulSoup(open('file/bs4 Parsing local files .html', encoding='utf-8'), 'lxml')

# 1. Find a node by its tag name
print(soup.a)  # Returns the first matching tag
print(soup.a.attrs)  # Gets the tag's attributes and their values

# Some bs4 functions
# (1) find
# Returns the first matching tag
print(soup.find('a'))
# Find the tag object by its title
print(soup.find('a', title='a2'))
# Find the tag object by its class; note the trailing underscore in class_
print(soup.find('a', class_='a1'))

# (2) find_all returns a list containing all a tags
print(soup.find_all('a'))
# To match several tag names at once, pass a list to find_all
print(soup.find_all(['a', 'span']))
# limit restricts the result to the first few matches
print(soup.find_all('li', limit=2))

# (3) select (recommended)
# select returns a list and may contain several elements
print(soup.select('a'))
# A leading . stands for a class; this is called a class selector
print(soup.select('.a1'))
# A leading # stands for an id
print(soup.select('#l1'))

# Attribute selector: find the li tags that have an id attribute
print(soup.select('li[id]'))
# Find the li tags whose id is l2
print(soup.select('li[id="l2"]'))

# Hierarchy selectors
# Descendant selector: find every li anywhere below a div
print(soup.select('div li'))
# Child selector: only the direct children of a tag; in bs4 the spaces around > are optional
print(soup.select('div > ul > li'))
# Find all a tags and all li tags
print(soup.select('a,li'))
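The difference between the descendant and child selectors is easiest to see on a small document. The sketch below uses an inline HTML string made up for this purpose and the built-in `html.parser` backend, so it does not depend on the local file used above.

```python
from bs4 import BeautifulSoup

# Made-up markup: two li directly under div > ul, one li nested deeper.
html = (
    '<div>'
    '<ul><li id="l1">one</li><li id="l2">two</li></ul>'
    '<section><ul><li>three</li></ul></section>'
    '</div>'
)
demo = BeautifulSoup(html, 'html.parser')

descendants = demo.select('div li')        # every li anywhere below div
children = demo.select('div > ul > li')    # only li whose ul is a direct child of div
with_id = demo.select('li[id]')            # li tags that carry an id attribute
second = demo.select('li[id="l2"]')        # the li whose id is exactly l2

print(len(descendants), len(children), len(with_id))
print(second[0].get_text())
```

The nested `three` item is reached by the descendant selector but not by the child selector, because its `ul` sits inside a `section`.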

Node information: getting a node's content

obj = soup.select('#d1')[0]
# If the tag contains only text, both string and get_text() return that text
# If the tag contains other tags besides text, string returns None while get_text() still returns the text
# In general, get_text() is recommended
print(obj.string)
print(obj.get_text())

# Properties of a node
# Why the [0]: select returns a list, and a list has no name or attrs attribute
obj = soup.select('#p1')[0]
# name is the name of the tag
print(obj.name)
# attrs returns the attributes and their values as a dictionary
print(obj.attrs)

# Get an attribute of the node (three ways)
obj = soup.select('#p1')[0]
print(obj.attrs.get('class'))
print(obj.get('class'))
print(obj['class'])
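The behaviour of `string`, `get_text()`, and the attribute accessors can be checked on a tiny inline document. The markup below is invented for the demonstration (it reuses the `d1` and `p1` ids from above but is not the original local file), and it is parsed with the built-in `html.parser`.

```python
from bs4 import BeautifulSoup

# Made-up markup: the div mixes a child tag with text, the p holds text only.
html = (
    '<div id="d1"><span>hello</span> world</div>'
    '<p id="p1" class="c1 c2">text only</p>'
)
demo = BeautifulSoup(html, 'html.parser')

div = demo.select('#d1')[0]
p = demo.select('#p1')[0]

print(div.string)      # None, because the div has more than one child node
print(div.get_text())  # text of the div and all of its descendants
print(p.string)        # the p contains a single string, so it is returned
print(p.name)
print(p.attrs)
print(p.get('class'), p['class'])
```

Note that `class` comes back as a list because HTML allows several classes on one tag, and that `p['missing']` would raise a `KeyError` while `p.get('missing')` returns `None`.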

Shortcut to open the XPath plugin: Ctrl+Shift+X

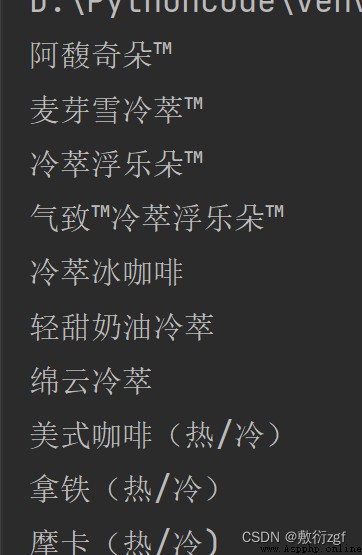

import urllib.request

url = 'https://www.starbucks.com.cn/menu/'

response = urllib.request.urlopen(url)

content = response.read().decode('utf-8')

from bs4 import BeautifulSoup

soup = BeautifulSoup(content,'lxml')

# Equivalent XPath: //ul[@class='grid padded-3 product']//strong/text()
name_list = soup.select('ul[class="grid padded-3 product"] strong')
for name in name_list:
    print(name.get_text())