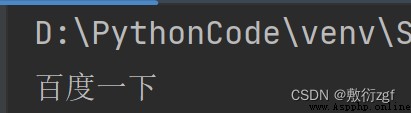

一、ajax請求豆瓣電影第一頁

# get請求

# 獲取豆瓣電影的第一頁數據並保存

import urllib.request

url = 'https://movie.douban.com/j/chart/top_list?type=5&interval_id=100%3A90&action=&start=0&limit=20'

headers = {

'user-agent' : 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/102.0.5005.124 Safari/537.36 Edg/102.0.1245.44'

}

# 請求對象的定制

request =urllib.request.Request(url=url,headers=headers)

# 獲取響應的數據

response = urllib.request.urlopen(request)

content = response.read().decode('utf-8')

# print(content)

# 數據下載到本地

# open方法默認情況下使用gbk編碼,若想保存漢字,則需要在open方法中指定編碼格式為utf-8

# fp = open('douban.json','w',encoding='utf-8')

# fp.write(content)

fp = open('douban.json','w',encoding='utf-8')

fp.write(content)

# 這兩行等價於

with open('douban1.json','w',encoding='utf-8') as fp:

fp.write(content)

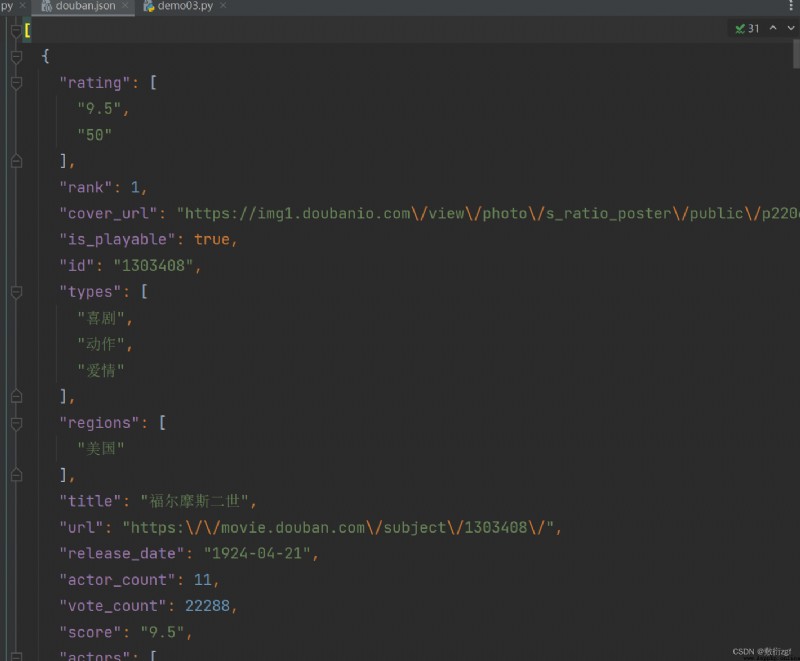

二、ajax請求豆瓣電影前十頁

# 豆瓣電影前十頁

# https://movie.douban.com/j/chart/top_list?type=5&interval_id=100%3A90&action=&

# start=0&limit=20

# https://movie.douban.com/j/chart/top_list?type=5&interval_id=100%3A90&action=&

# start=20&limit=20

# https://movie.douban.com/j/chart/top_list?type=5&interval_id=100%3A90&action=&

# start=40&limit=20

# page 1 2 3 4

# start 0 20 40 60 start = (page - 1) * 20

import urllib.parse

def create_request(page):

base_url = 'https://movie.douban.com/j/chart/top_list?type=5&interval_id=100%3A90&action=&'

data = {

'start' : (page - 1)*20,

'limit' : 20 ,

}

data = urllib.parse.urlencode(data)

url = base_url + data

print(url)

headers = {

'user-agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/102.0.5005.124 Safari/537.36 Edg/102.0.1245.44'

}

# 1.請求對象的定制

# request = urllib.request.Request()

# 程序入口

if __name__ == '__main__':

start_page = int(input('請輸入起始頁碼'))

end_page = int(input('請輸入結束頁碼'))

for page in range (start_page , end_page + 1):

# 每一頁都有請求對象的定制

create_request(page)

# print(page)

完整案例:

import urllib.parse

import urllib.request

def create_request(page):

base_url = 'https://movie.douban.com/j/chart/top_list?type=5&interval_id=100%3A90&action=&'

data = {

'start' : (page - 1)*20,

'limit' : 20 ,

}

data = urllib.parse.urlencode(data)

url = base_url + data

print(url)

headers = {

'user-agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/102.0.5005.124 Safari/537.36 Edg/102.0.1245.44'

}

# 1.請求對象的定制

request = urllib.request.Request(url = url , headers = headers)

return request

def get_content(request):

response = urllib.request.urlopen(request)

content = response.read().decode('utf-8')

return content

def down_load(page,content):

with open('douban_' + str(page)+'.json' ,'w',encoding='utf-8') as fp:

fp.write(content)

# 程序入口

if __name__ == '__main__':

start_page = int(input('請輸入起始頁碼'))

end_page = int(input('請輸入結束頁碼'))

for page in range (start_page , end_page + 1):

# 每一頁都有請求對象的定制

request = create_request(page)

# 2.獲取響應的數據

content = get_content(request)

# 3.下載數據

down_load(page,content)

# print(page)

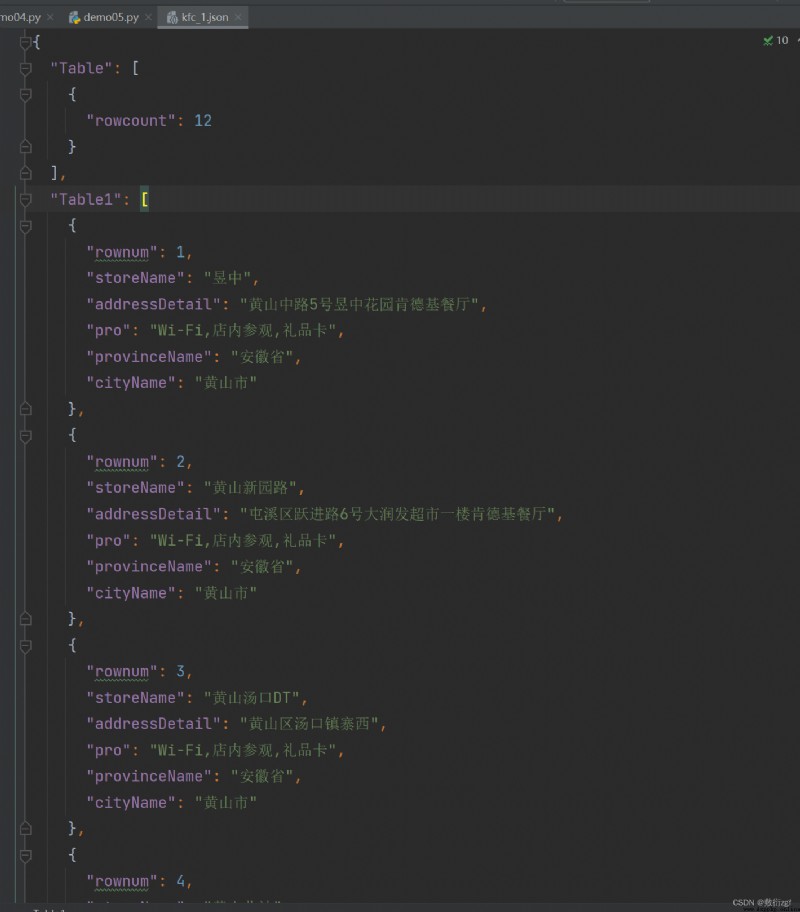

三、ajax的post請求肯德基官網

# 第一頁

# https://www.kfc.com.cn/kfccda/ashx/GetStoreList.ashx?op=cname

# post

# cname: 黃山

# pid:

# pageIndex: 1

# pageSize: 10

# 第二頁

# https://www.kfc.com.cn/kfccda/ashx/GetStoreList.ashx?op=cname

# post

# cname: 黃山

# pid:

# pageIndex: 2

# pageSize: 10

import urllib.request

import urllib.parse

def creat_request(page):

base_url = 'https://www.kfc.com.cn/kfccda/ashx/GetStoreList.ashx?op=cname'

data = {

'cname': '黃山',

'pid': '',

'pageIndex': page,

'pageSize': '10'

}

data = urllib.parse.urlencode(data).encode('utf-8')

headers = {

'user-agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/102.0.5005.124 Safari/537.36 Edg/102.0.1245.44'

}

request = urllib.request.Request(url=base_url,headers=headers,data=data)

return request

def get_content(request):

response = urllib.request.urlopen(request)

content = response.read().decode('utf-8')

return content

def down_load(page,content):

with open('kfc_'+str(page)+'.json','w',encoding='utf-8') as fp:

fp.write(content)

if __name__ == '__main__':

start_page = int(input('請輸入起始頁'))

end_page = int(input('請輸入結束頁'))

for page in range(start_page,end_page + 1):

# 請求對象定制

request = creat_request(page)

# 獲取網頁源碼

content = get_content(request)

# 下載

down_load(page,content)

四、urllib異常

URLError\HTTPError

import urllib.request

import urllib.error

url = 'https://blog.csdn.net/sulixu/article/details/1198189491'

headers = {

'user-agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/102.0.5005.124 Safari/537.36 Edg/102.0.1245.44'

}

try:

request = urllib.request.Request(url=url ,headers=headers)

response = urllib.request.urlopen(request)

content = response.read().decode('utf-8')

print(content)

except urllib.error.HTTPError:

print('系統正在升級')

except urllib.error.URLError:

print('系統還在升級...')

五、微博的cookie登錄

# 個人信息頁面是utf-8,但還是報編碼錯誤,由於是沒有進入到個人信息頁面,網頁攔截到登錄頁面

# 而登錄頁面並非utf-8編碼

import urllib.request

url = 'https://weibo.com/u/6574284471'

headers = {

# ':authority':' weibo.com',

# ':method':' GET',

# ':path':' /u/6574284471',

# ':scheme':' https',

'accept':' text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,image/apng,*/*;q=0.8,application/signed-exchange;v=b3;q=0.9',

# 'accept-encoding':' gzip, deflate, br',

'accept-language':' zh-CN,zh;q=0.9,en;q=0.8,en-GB;q=0.7,en-US;q=0.6',

'cache-control':' max-age=0',

'cookie: XSRF-TOKEN=6ma7fyurg-D7srMvPHSBXnd7; PC_TOKEN=c80929a33d; SUB=_2A25Pt6gfDeRhGeBL7FYT-CrIzD2IHXVsxJ7XrDV8PUNbmtANLU_ikW9NRsq_VXzy15yBjKrXXuLy01cvv2Vl9GaI; SUBP=0033WrSXqPxfM725Ws9jqgMF55529P9D9WWh0duRqerzYUFYCVXfeaq95JpX5KzhUgL.FoqfS0BE1hBXS022dJLoIp-LxKqL1K-LBoMLxKnLBK2L12xA9cqt; ALF=1687489486; SSOLoginState=1655953487; _s_tentry=weibo.com; Apache=4088119873839.28.1655954158255; SINAGLOBAL=4088119873839.28.1655954158255; ULV=1655954158291:1:1:1:4088119873839.28.1655954158255':'; WBPSESS=jKyskQ8JC9Xst5B1mV_fu6PgU8yZ2Wz8GqZ7KvsizlaQYIWJEyF7NSFv2ZP4uCpwz4tKG2BL44ACE6phIx2TUnD3W1v9mxLa_MQC4u4f2UaPhXf55kpgp85_A2VrDQjuAtgDgiAhD-DP14cuzq0UDA==',

#referer 判斷當前路徑是不是由上一個路徑進來的,一般情況下,制作用於圖片防盜鏈

'referer: https':'//weibo.com/newlogin?tabtype=weibo&gid=102803&openLoginLayer=0&url=https%3A%2F%2Fweibo.com%2F',

'sec-ch-ua':' " Not A;Brand";v="99", "Chromium";v="102", "Microsoft Edge";v="102"',

'sec-ch-ua-mobile':' ?0',

'sec-ch-ua-platform':' "Windows"',

'sec-fetch-dest':' document',

'sec-fetch-mode':' navigate',

'sec-fetch-site':' same-origin',

'sec-fetch-user':' ?1',

'upgrade-insecure-requests':' 1',

'user-agent':' Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/102.0.5005.124 Safari/537.36 Edg/102.0.1245.44',

}

# 請求對象的定制

request = urllib.request.Request(url=url,headers=headers)

# 模擬浏覽器向服務器發送請求

response = urllib.request.urlopen((request))

# 獲取響應的數據

content = response.read().decode('utf-8')

# print(content)

# 將數據保存到本地

with open('file/weibo.html','w',encoding='utf-8') as fp:

fp.write(content)

六、Handler處理器的基本使用

作用:

urllib.request.urlopen(url)—>無法定制請求頭

request = urllib.request.Request(url=url,headers=headers,data=data)—>可以定制請求頭

Handler—>定制更高級的請求頭(隨著業務邏輯的拓展,請求對象的定制已經滿足不了我們的需求,例如:動態Cookie和代理不能使用請求對象的定制i)

# 需求:使用handler訪問百度獲取網頁源碼

import urllib.request

url = 'http://www.baidu.com'

headers = {

'user-agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/102.0.5005.124 Safari/537.36 Edg/102.0.1245.44'

}

request = urllib.request.Request(url=url,headers=headers)

# handler build_opener open

# (1) 獲取handler對象

handler = urllib.request.HTTPHandler()

# (2) 獲取opener對象

opener = urllib.request.build_opener(handler)

# (3) 調用open方法

response = opener = open(request)

content = response.read().decode('utf-8')

print(content)

七、代理服務器

在快代理https://free.kuaidaili.com/free/獲取免費IP和端口號

import urllib.request

url = 'http://www.baidu.com/s?wd=ip'

headers = {

'user-agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/102.0.5005.124 Safari/537.36 Edg/102.0.1245.44'

}

request = urllib.request.Request(url=url,headers=headers)

# response = urllib.request.urlopen(request)

# handler builder_open open

posix = {

'http': '103.37.141.69:80'

}

handler = urllib.request.ProxyHandler(proxies = posix)

opener = urllib.request.build_opener(handler)

response = opener.open(request)

content = response.read().decode('utf-8')

with open('file/daili.html','w',encoding='utf-8') as fp:

fp.write(content)

八、代理池

import urllib.request

import random

url = 'http://www.baidu.com/s?wd=ip'

headers = {

'user-agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/102.0.5005.124 Safari/537.36 Edg/102.0.1245.44'

}

proxies_pool = [

{

'http': '103.37.141.69:8011' },

{

'http': '103.37.141.69:8022' },

{

'http': '103.37.141.69:8033' }

]

proxies = random.choice(proxies_pool) //隨機選擇IP地址

# print(proxies)

request = urllib.request.Request(url=url,headers=headers)

handler = urllib.request.ProxyHandler(proxies = proxies)

opener = urllib.request.build_opener(handler)

response = opener.open(request)

content = response.read().decode('utf-8')

with open('file/daili.html','w',encoding='utf-8') as fp:

fp.write(content)

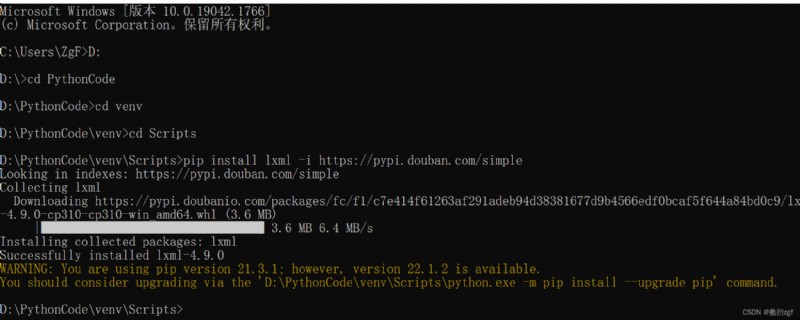

九、xpath插件

1.安裝xpath插件:https://www.aliyundrive.com/s/YCtumb2D2J3 提取碼: o4t2

2.安裝lxml庫

pip install lxml -i https://pypi.douban.com/simple

3.案例解析xpath

①解析本地文件 etree.parse

## 解析xpath 幫助用戶獲取網頁部分源碼的一種方式

from lxml import etree

# 一、解析本地文件 etree.parse

tree = etree.parse('file/xpath解析本地文件.html')

# print(tree)

# tree.xpath('xpath路徑')

# 1.查找ul下面的li

# //:查找所有子孫節點,不考慮層級關系

# /:找直接子節點

# li_list = tree.xpath('//body/ul/li')

# print(li_list)

# 判斷列表長度

# print(len(li_list))

# 2.查找所有有id屬性的li標簽

# li_list = tree.xpath('//ul/li[@id]')

# text()可以獲取標簽中的內容

# li_list = tree.xpath('//ul/li[@id]/text()')

# 查找id為l1的li標簽 注意添加引號

# li_list = tree.xpath('//ul/li[@id="l1"]/text()')

# 查找到id為l1的li標簽的class的屬性值

# li_list = tree.xpath('//ul/li[@id="l1"]/@class')

# 模糊查詢 id中包含l的標簽

# li_list = tree.xpath('//ul/li[contains(@id,"l")]/text()')

# 查詢id的至以l開頭的li標簽

# li_list = tree.xpath('//ul/li[starts-with(@id,"l")]/text()')

# 查詢id為l1和class為c1的 邏輯運算

li_list = tree.xpath('//ul/li[@id="l1" and @class="c1"]/text()')

li_list = tree.xpath('//ul/li[@id="l1"]/text() | //ul/li[@id="l2"]/text() ')

print(len(li_list))

print(li_list)

②服務器響應的數據 response.read().decode(‘utf-8’) etree.HTML()

import urllib.request

url = 'http://www.baidu.com/'

headers = {

'user-agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/102.0.5005.124 Safari/537.36 Edg/102.0.1245.44'

}

# 請求對象的定制

request = urllib.request.Request(url=url,headers=headers)

# 模擬浏覽器訪問服務器

response = urllib.request.urlopen(request)

# 獲取網頁源碼

content = response.read().decode('utf-8')

# 解析網頁源碼 來獲取想要的數據

from lxml import etree

# 解析服務器響應的文件

tree = etree.HTML(content)

# 獲取想要的數據 xpath的返回值是一個列表類型的數據

result = tree.xpath('//input[@id ="su"]/@value')[0]

print(result)