NeuralNetwork-Numpy

Code download link: The code download

Please refer to main.ipynb

net.py

conv.py

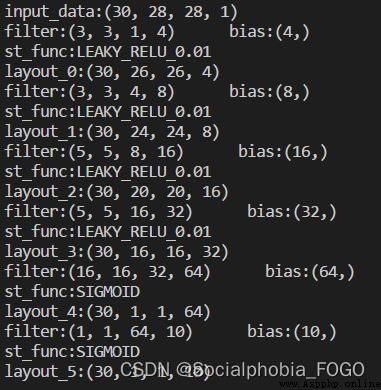

Defining the network

    from net import ConvNet

    # MODEL_PATH and init_type must be defined before this point.
    net = ConvNet()

    # Build the network only if no saved weights could be loaded.
    if not net.load(MODEL_PATH):
        # Four convolution layers
        net.addConvLayout([3,3,1,4], bias=True, padding='VAILD', init_type=init_type, st_func='LEAKY_RELU_0.01')
        net.addConvLayout([3,3,4,8], bias=True, padding='VAILD', init_type=init_type, st_func='LEAKY_RELU_0.01')
        net.addConvLayout([5,5,8,16], bias=True, padding='VAILD', init_type=init_type, st_func='LEAKY_RELU_0.01')
        net.addConvLayout([5,5,16,32], bias=True, padding='VAILD', init_type=init_type, st_func='LEAKY_RELU_0.01')
        # Two fully connected layers (see the model description below)
        net.addConvLayout([16,16,32,64], bias=True, padding='VAILD', st_func='SIGMOID', init_type=init_type)
        net.addConvLayout([1,1,64,10], bias=True, padding='VAILD', st_func='SIGMOID', init_type=init_type)
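The kernel sizes in the definition above can be checked by tracing the feature-map size through the network. The sketch below assumes a 28x28 single-channel MNIST input, stride 1, no padding, and kernel shapes read as [height, width, in_channels, out_channels]. None of these assumptions is confirmed here by the library's code, and `valid_output_size` and `trace_shapes` are illustrative names, not part of net.py.

```python
def valid_output_size(size, kernel, stride=1):
    """Spatial output size of a convolution without padding."""
    return (size - kernel) // stride + 1

# Kernel shapes copied from the network definition, read as
# [kernel_h, kernel_w, in_channels, out_channels] (an assumption).
LAYERS = [
    [3, 3, 1, 4],
    [3, 3, 4, 8],
    [5, 5, 8, 16],
    [5, 5, 16, 32],
    [16, 16, 32, 64],
    [1, 1, 64, 10],
]

def trace_shapes(height, width, layers=LAYERS):
    """Return the (height, width, channels) shape after each layer."""
    shapes = []
    for kh, kw, _cin, cout in layers:
        height = valid_output_size(height, kh)
        width = valid_output_size(width, kw)
        shapes.append((height, width, cout))
    return shapes

shapes = trace_shapes(28, 28)  # 28x28 is the MNIST image size
for layer, shape in zip(LAYERS, shapes):
    print(layer, "->", shape)
```

Under these assumptions the 16x16 kernel covers the whole 16x16 feature map left by the fourth layer, so the last two layers act as fully connected layers with 64 and 10 outputs.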

addData(): Add data

addConvLayout(): Append a layer to the end of the current network

Supports convolution layers, fully connected layers, and Batch Normalization layers

Supported activation functions are sigmoid and leaky_relu_alpha, where alpha can be any value (for example LEAKY_RELU_0.01)
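For reference, the two activation functions can be written in NumPy as follows. This is a minimal sketch, not the code in net.py. The helper `parse_activation` is hypothetical and only illustrates how a name such as 'LEAKY_RELU_0.01' could carry the alpha value; how the library actually reads the name is not shown here.

```python
import numpy as np

def sigmoid(x):
    """Logistic sigmoid, written with tanh so large |x| cannot overflow exp."""
    x = np.asarray(x, dtype=float)
    return 0.5 * (1.0 + np.tanh(0.5 * x))

def sigmoid_grad(x):
    """Derivative of the sigmoid with respect to its input."""
    s = sigmoid(x)
    return s * (1.0 - s)

def leaky_relu(x, alpha=0.01):
    """x for positive inputs, alpha * x otherwise."""
    x = np.asarray(x, dtype=float)
    return np.where(x > 0, x, alpha * x)

def leaky_relu_grad(x, alpha=0.01):
    """Derivative of leaky ReLU: 1 for positive inputs, alpha otherwise."""
    x = np.asarray(x, dtype=float)
    return np.where(x > 0, 1.0, alpha)

def parse_activation(name):
    """Hypothetical helper: split 'LEAKY_RELU_0.01' into ('LEAKY_RELU', 0.01)."""
    prefix = "LEAKY_RELU_"
    if name.startswith(prefix):
        return "LEAKY_RELU", float(name[len(prefix):])
    return name, None

kind, alpha = parse_activation("LEAKY_RELU_0.01")
print(kind, alpha, leaky_relu(np.array([-2.0, 3.0]), alpha))
```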

regress(): Regression (train the network)

Supports MSE and cross-entropy losses, and multiple optimizers (SGD, Nesterov, RMSProp)
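The losses and optimizers named above follow standard formulas, sketched here in NumPy. This is an illustration under assumptions, not the code behind regress(): the function names, the default hyperparameters, and the choice of a per-output (binary) cross entropy for sigmoid outputs are all mine.

```python
import numpy as np

def mse_loss(pred, target):
    """Mean squared error and its gradient with respect to pred."""
    diff = pred - target
    return np.mean(diff ** 2), 2.0 * diff / diff.size

def cross_entropy_loss(prob, target, eps=1e-12):
    """Binary cross entropy for outputs in (0, 1), with gradient w.r.t. prob."""
    p = np.clip(prob, eps, 1.0 - eps)
    loss = -np.mean(target * np.log(p) + (1 - target) * np.log(1 - p))
    grad = (p - target) / (p * (1 - p)) / p.size
    return loss, grad

def sgd_step(w, grad, lr=0.1):
    """Plain gradient descent."""
    return w - lr * grad

def nesterov_step(w, velocity, grad_fn, lr=0.1, momentum=0.9):
    """Nesterov momentum: the gradient is evaluated at the look-ahead point."""
    lookahead = w + momentum * velocity
    velocity = momentum * velocity - lr * grad_fn(lookahead)
    return w + velocity, velocity

def rmsprop_step(w, cache, grad, lr=0.01, decay=0.9, eps=1e-8):
    """RMSProp: scale each step by a running average of squared gradients."""
    cache = decay * cache + (1 - decay) * grad ** 2
    return w - lr * grad / (np.sqrt(cache) + eps), cache

# Toy problem: move w towards a fixed target by minimising the MSE.
target = np.array([1.0, -2.0])
grad_fn = lambda w: mse_loss(w, target)[1]

w_sgd = np.zeros(2)
w_nag, velocity = np.zeros(2), np.zeros(2)
w_rms, cache = np.zeros(2), np.zeros(2)
for _ in range(1000):
    w_sgd = sgd_step(w_sgd, grad_fn(w_sgd))
    w_nag, velocity = nesterov_step(w_nag, velocity, grad_fn)
    w_rms, cache = rmsprop_step(w_rms, cache, grad_fn(w_rms))

print(w_sgd, w_nag, w_rms)
```

On this toy problem SGD and Nesterov settle on the target, while RMSProp keeps taking steps of roughly the learning rate and therefore hovers close to it.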

count(): Compute the output of each layer (forward pass)
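A forward pass of this kind can be sketched with a naive NumPy convolution. The code below is not the implementation in conv.py. It assumes stride 1, no padding, inputs shaped (height, width, channels), and kernels shaped (kh, kw, in_channels, out_channels); `conv2d_valid` and `forward` are illustrative names.

```python
import numpy as np

def conv2d_valid(x, kernel, bias=None):
    """Convolve x (H, W, C_in) with kernel (kh, kw, C_in, C_out), no padding."""
    kh, kw, cin, cout = kernel.shape
    h, w, c = x.shape
    assert c == cin, "input channels must match the kernel"
    out_h, out_w = h - kh + 1, w - kw + 1
    out = np.zeros((out_h, out_w, cout))
    for i in range(out_h):
        for j in range(out_w):
            patch = x[i:i + kh, j:j + kw, :]
            # Sum over kernel height, width and input channels.
            out[i, j] = np.tensordot(patch, kernel, axes=([0, 1, 2], [0, 1, 2]))
    if bias is not None:
        out += bias
    return out

def forward(x, layers):
    """Return the output of every layer, in order."""
    outputs = []
    for kernel, bias, activation in layers:
        x = activation(conv2d_valid(x, kernel, bias))
        outputs.append(x)
    return outputs

identity = lambda z: z
layers = [
    (np.ones((3, 3, 1, 2)), np.zeros(2), identity),
    (np.ones((2, 2, 2, 1)), np.zeros(1), identity),
]
outputs = forward(np.ones((4, 4, 1)), layers)
print([o.shape for o in outputs])
```

The explicit loops keep the sketch readable; a practical implementation would vectorise the patch extraction.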

save(): Save weights

load(): Load weights
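One simple way to persist a list of NumPy weight arrays is a single .npz file. The sketch below is an assumption about how such a save/load pair could look, not the file format used by net.py; `save_weights` and `load_weights` are hypothetical names. Returning None for a missing file mirrors the `if not net.load(MODEL_PATH):` pattern used when defining the network.

```python
import os
import tempfile

import numpy as np

def save_weights(path, weights):
    """Write a list of arrays to one .npz file (stored as arr_0, arr_1, ...)."""
    np.savez(path, *weights)

def load_weights(path):
    """Return the list of arrays, or None if the file does not exist."""
    if not os.path.exists(path):
        return None
    with np.load(path) as data:
        return [data[f"arr_{i}"] for i in range(len(data.files))]

path = os.path.join(tempfile.mkdtemp(), "model.npz")
weights = [np.ones((3, 3, 1, 4)), np.zeros(4)]

missing = load_weights(path)   # nothing saved yet
save_weights(path, weights)
restored = load_weights(path)
print(missing, [w.shape for w in restored])
```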

print(net): Print the network structure

Note: before this, addData() must be called and count() run once

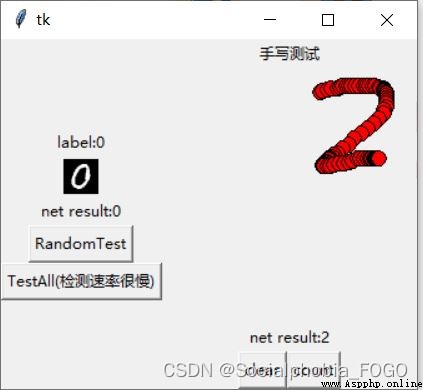

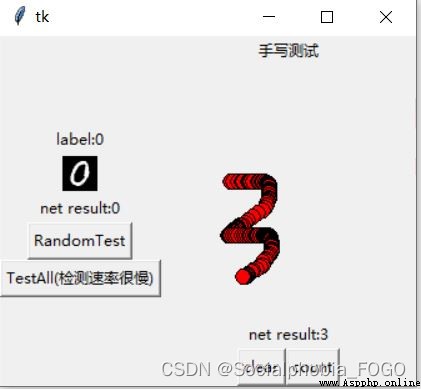

Run mnist_visual_test.py to test on the MNIST dataset

The model folder contains a model trained on MNIST. The latest model was trained for only 400 iterations with batch_size=30 and reaches 95.38% accuracy on the test set. The model structure is the one shown in the figure above: 4 convolution layers followed by 2 fully connected layers.
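Test accuracy for a 10-class output is usually computed by comparing the highest-scoring class with the label. The snippet below is a generic sketch with made-up toy scores. It does not reproduce the figure quoted above, and `accuracy` is an illustrative name rather than a function from this repository.

```python
import numpy as np

def accuracy(scores, labels):
    """Fraction of samples whose highest-scoring class equals the label."""
    return float(np.mean(np.argmax(scores, axis=1) == labels))

# Toy data: 3 samples, 2 classes.
scores = np.array([[0.1, 0.9], [0.8, 0.2], [0.3, 0.7]])
labels = np.array([1, 0, 0])
acc = accuracy(scores, labels)
print(acc)
```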

Run main.ipynb for custom testing