Understanding shape() in Python

When I debug deep learning code, I often see output of the form shape(). It describes the form of the data. The numbers inside shape() vary, and values such as shape(?, 64, 512) come up often. I looked up some material online, and this post is an easier-to-follow explanation:

```python
import numpy as np

a = np.array([[[1,2,3],[4,5,6]]])

print(a.shape)
# (1, 2, 3)
```

This means the array contains 1 element, and that element is an array with 2 rows and 3 columns. Each number in shape corresponds to one level of brackets in the array. The first number, 1, corresponds to the outermost brackets. The second number, 2, corresponds to the second level, and the third number, 3, corresponds to the innermost brackets. If you remove the outermost brackets when defining the array, shape becomes (2, 3).

The number of bracket levels is the number of dimensions of the array. It is also the number of entries in the shape result.

The example above has three levels of brackets. It is therefore a 3-dimensional array, and shape() contains 3 numbers.
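The bracket-counting rule can be checked directly in NumPy. `ndim` gives the number of dimensions, which always equals `len(shape)`, and indexing with `[0]` peels off the outermost brackets. The second array `b` is an illustration added here: it is the same data written without the outer brackets.

```python
import numpy as np

a = np.array([[[1, 2, 3], [4, 5, 6]]])  # three levels of brackets
b = np.array([[1, 2, 3], [4, 5, 6]])    # outermost brackets removed

# ndim is the number of bracket levels, which is also len(shape)
print(a.ndim, a.shape)  # 3 (1, 2, 3)
print(b.ndim, b.shape)  # 2 (2, 3)

# Indexing the first axis strips the outermost brackets
print(a[0].shape)       # (2, 3)
```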

```python
a = np.array([1,2])      # a.shape is (2,): a 1-D array with 2 elements
b = np.array([[1],[2]])  # b.shape is (2, 1): a 2-D array with 2 rows of 1 element each
c = np.array([[1,2]])    # c.shape is (1, 2): a 2-D array with 1 row of 2 elements
```
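All three arrays hold the same two numbers. Only the bracket nesting differs. The following sketch shows one way to convert between these shapes with the standard NumPy `reshape` and `np.newaxis`. The variable `d` is an extra illustration that is not in the original.

```python
import numpy as np

a = np.array([1, 2])   # shape (2,)
b = a.reshape(2, 1)    # 2 rows, 1 element each
c = a.reshape(1, 2)    # 1 row with 2 elements
d = a[np.newaxis, :]   # same as c: inserts a new leading axis

print(a.shape, b.shape, c.shape, d.shape)  # (2,) (2, 1) (1, 2) (1, 2)
```

Reshaping never changes the element count. `a.size`, `b.size` and `c.size` are all 2.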

When debugging such programs, you may see a shape like (?, 2, 3). This means each sample is an array with 2 rows and 3 columns. The leading "?" is the batch size: if it is 1 there is one sample, and if it is 2 there are two. The batch size is not known while the model is being built, so it is displayed as "?". In Keras model summaries it appears as None.
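A small NumPy sketch of where the leading batch axis comes from. Stacking samples of shape (2, 3) adds a first axis whose length is the number of samples. The batch sizes 1 and 4 are arbitrary choices for illustration. A model graph leaves this axis unspecified, and that is why it shows up as "?" or None.

```python
import numpy as np

# One sample: a 2x3 array (2 rows, 3 columns)
sample = np.array([[1, 2, 3], [4, 5, 6]])

# Stacking samples adds a leading batch axis; its length is the sample count
batch_of_1 = np.stack([sample])
batch_of_4 = np.stack([sample] * 4)

print(batch_of_1.shape)  # (1, 2, 3)
print(batch_of_4.shape)  # (4, 2, 3)
```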

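```python
from keras.layers import Input, Conv2D, TimeDistributed
from keras.models import Model

# 12 time steps, each a 32x32 image with 3 channels (the batch size is not given here)
input_ = Input(shape=(12, 32, 32, 3))

# The same Conv2D layer is applied to each of the 12 time steps
out = TimeDistributed(Conv2D(filters=32, kernel_size=(3, 3), padding='same'))(input_)

model = Model(inputs=input_, outputs=out)
model.summary()
```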

In this example, shape() contains four numbers. The first, 12, is the number of time steps. The values 32, 32 and 3 are the height, width and number of channels. Wrapping the convolution in TimeDistributed means all 12 time steps share a single set of convolution parameters. The total parameter count therefore does not depend on the number of time steps. There are 896 parameters in total: the convolution kernel has 3×3×3×32 = 864 weights, and there are 32 biases.

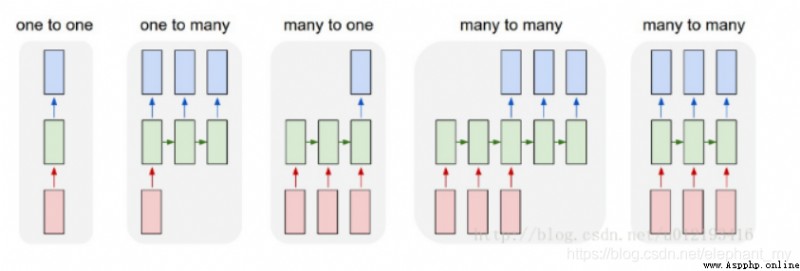
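The 896 figure can be reproduced with simple arithmetic. The helper `conv2d_param_count` is a hypothetical function written for this post, not a Keras API. It counts one kh × kw × in_channels kernel per filter plus one bias per filter. The `separate` value is a hypothetical comparison case in which each time step has its own Conv2D. It shows what sharing the weights saves.

```python
def conv2d_param_count(kernel_h, kernel_w, in_channels, filters, use_bias=True):
    """Trainable parameters of a standard 2D convolution (hypothetical helper)."""
    weights = kernel_h * kernel_w * in_channels * filters  # one kernel per filter
    biases = filters if use_bias else 0                     # one bias per filter
    return weights + biases

per_step = conv2d_param_count(3, 3, 3, 32)
print(per_step)                    # 896 (864 weights + 32 biases)

time_steps = 12
shared = per_step                  # TimeDistributed: one layer reused at every step
separate = per_step * time_steps   # hypothetical: a separate Conv2D per time step
print(shared, separate)            # 896 10752
```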

Here is a well-known example that explains TimeDistributed:

Consider a batch of 32 samples, where each sample is a sequence of 10 vectors with 16 dimensions each. The batch input shape of the layer is then (32, 10, 16).

One way to picture this is to treat the input as an equation, X1 + X2 + … + X10 = Y. From a matrix point of view, pulling out the unknowns gives 10 vectors. Each vector has 16 dimensions, and these 16 dimensions are the 16 feature directions used to evaluate Y.

The TimeDistributed layer applies the Dense layer to each of these 10 vectors, running one Dense operation per vector. For example:

```python
from keras.models import Sequential
from keras.layers import Dense, TimeDistributed

model = Sequential()
model.add(TimeDistributed(Dense(8), input_shape=(10, 16)))
# model.output_shape is (None, 10, 8); None is the batch size
```

The output is still 10 vectors, but each vector's dimension changes from 16 to 8. For a batch of 32, the output shape is (32, 10, 8).
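The following NumPy sketch shows what `TimeDistributed(Dense(8))` computes, assuming random weights and no activation (Keras `Dense` uses no activation by default). It is an illustration, not Keras source code. `dense`, `W`, `b`, `looped` and `folded` are hypothetical names. The loop applies the same weight matrix to each of the 10 vectors. The reshape version folds the time axis into the batch axis, applies the layer once, and unfolds the result. Both give the same output.

```python
import numpy as np

rng = np.random.default_rng(0)

batch, steps, in_dim, out_dim = 32, 10, 16, 8
x = rng.normal(size=(batch, steps, in_dim))  # batch input shape (32, 10, 16)

# One Dense(8) layer: a single weight matrix and bias shared by all 10 steps
W = rng.normal(size=(in_dim, out_dim))
b = rng.normal(size=(out_dim,))

def dense(v):
    """Dense layer without activation: v @ W + b over the last axis."""
    return v @ W + b

# Per-step loop: apply the same dense() to each of the 10 vectors
looped = np.stack([dense(x[:, t, :]) for t in range(steps)], axis=1)

# Reshape trick: fold time into batch, apply once, then unfold
folded = dense(x.reshape(batch * steps, in_dim)).reshape(batch, steps, out_dim)

print(looped.shape)                 # (32, 10, 8)
print(np.allclose(looped, folded))  # True
```

Only `W` and `b` are trainable here: 16 × 8 + 8 = 136 values, regardless of how many time steps there are.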

The TimeDistributed layer gives a model one-to-many and many-to-many capability by adding a time dimension to the layer it wraps.