1. Porting Goal

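Port an H.264 decoder to the OPhone operating system (NDK + C), and write a test program (OPhone SDK + Java) to check that the decoding library works correctly. Below is a screenshot taken during decoding: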

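Like the Windows Mobile emulator, the OPhone emulator emulates ARM instructions, whereas the Symbian emulator runs native code. Decoding in the OPhone emulator is therefore slower than on a real phone. This decoder had already been optimized to the point of streaming playback on the Nokia 6600, a very low-end phone whose CPU runs at only 104 MHz.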

2. Intended Audience

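This article is for developers who have some mobile application development experience (e.g., S60/Windows Mobile/MTK) and some experience porting code across mobile platforms. It shows how a company's core library (C/C++) can be ported to OPhone.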

3. Assumptions

1) You are familiar with Java/C/C++;

2) You are familiar with Java JNI;

3) You have some experience porting code across mobile platforms;

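4) You have a source code library to port. Here an H.264 decoding library serves as the example; to protect our intellectual property, only its header file can be published: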

```cpp
#ifndef __H264DECODE_H__
#define __H264DECODE_H__

#if defined(__SYMBIAN32__) // S60 2nd/3rd Edition, UIQ
#include <e32base.h>
#include <libc\stdio.h>
#include <libc\stdlib.h>
#include <libc\string.h>
#else // Windows/Windows Mobile/MTK/OPhone
#include <stdio.h>
#include <stdlib.h>
#include <string.h>
#endif

class H264Decode
{
public:
    /***************************************************************************/
    /* Construct a decoder                                                      */
    /* @return an H264Decode decoder instance                                   */
    /***************************************************************************/
    static H264Decode *H264DecodeConstruct();

    /***************************************************************************/
    /* Decode one frame                                                         */
    /* @pInBuffer  pointer to the H.264 video stream                            */
    /* @iInSize    size of the H.264 video stream in bytes                      */
    /* @pOutBuffer receives the decoded video frame                             */
    /* @iOutSize   receives the size of the decoded frame                       */
    /* @return     number of bytes of the H.264 stream that were decoded        */
    /***************************************************************************/
    int DecodeOneFrame(unsigned char *pInBuffer, unsigned int iInSize, unsigned char *pOutBuffer, unsigned int &iOutSize);

    ~H264Decode();
};

#endif // __H264DECODE_H__
```

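You don't need to know the OPhone platform beforehand; everything here starts from scratch, since I didn't know it either before this project.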

4. Development Environment (see: http://www.ophonesdn.com/documentation/)

5. Porting Process

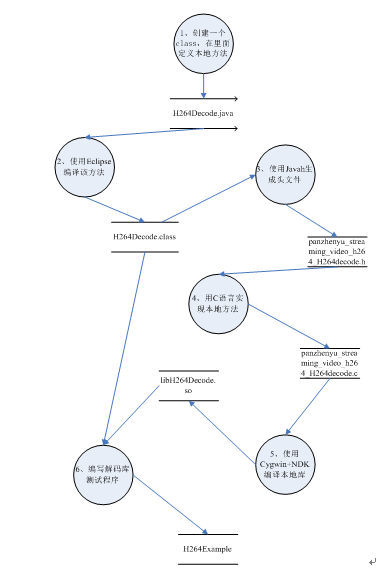

5.1 Porting Workflow

5.2 Wrapping the Java Interface

The functions to be ported are the ones declared in the header under "Assumptions". Next we write the Java Native Interface (JNI) wrapper for them.

```java
package ophone.streaming.video.h264;

import java.nio.ByteBuffer;

public class H264decode {

    // Pointer to the native H.264 decoder. Java has no pointers, so the address is
    // stored in a 64-bit long, which is wide enough for a 32-bit or 64-bit pointer.
    private long H264decode = 0;

    static {
        // Loads libH264Decode.so
        System.loadLibrary("H264Decode");
    }

    public H264decode() {
        this.H264decode = Initialize();
    }

    public void Cleanup() {
        // Release the native decoder only once, to avoid a double free
        if (H264decode != 0) {
            Destroy(H264decode);
            H264decode = 0;
        }
    }

    public int DecodeOneFrame(ByteBuffer pInBuffer, ByteBuffer pOutBuffer) {
        return DecodeOneFrame(H264decode, pInBuffer, pOutBuffer);
    }

    private native static int DecodeOneFrame(long H264decode, ByteBuffer pInBuffer, ByteBuffer pOutBuffer);

    private native static long Initialize();

    private native static void Destroy(long H264decode);
}
```

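There is not much to say here: it is a thin interface layer that mirrors the functions of the H.264 decoding library, and if you know Java JNI it will look very familiar. A brief aside: I have always believed that technologies are interconnected. There are only a handful of fundamental techniques underneath, and once you master them, everything else follows.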
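The wrapper keeps the native pointer in a `long`, so calling `Cleanup()` twice, or decoding after cleanup, would hand a dangling pointer to native code. The sketch below shows one way to make the release idempotent. To keep it runnable without `libH264Decode.so`, the three native methods are replaced by a hypothetical `NativeOps` interface; `NativeOps` and `DecoderHandle` are illustrative names, not part of the original code.

```java
import java.nio.ByteBuffer;

// Hypothetical stand-in for the native methods Initialize/DecodeOneFrame/Destroy,
// so the lifecycle logic can run without the native library.
interface NativeOps {
    long initialize();
    int decodeOneFrame(long handle, ByteBuffer in, ByteBuffer out);
    void destroy(long handle);
}

// Sketch of a wrapper that owns a native handle and releases it exactly once.
final class DecoderHandle {
    private final NativeOps ops;
    private long handle; // native pointer as a 64-bit value; 0 means "released"

    DecoderHandle(NativeOps ops) {
        this.ops = ops;
        this.handle = ops.initialize();
        if (handle == 0) {
            throw new IllegalStateException("native decoder construction failed");
        }
    }

    int decodeOneFrame(ByteBuffer in, ByteBuffer out) {
        if (handle == 0) {
            throw new IllegalStateException("decoder already cleaned up");
        }
        return ops.decodeOneFrame(handle, in, out);
    }

    // Safe to call repeatedly: the handle is zeroed after the first destroy.
    void cleanup() {
        if (handle != 0) {
            ops.destroy(handle);
            handle = 0;
        }
    }
}
```

Failing fast on a zero handle turns a potential native crash into a Java exception, which is much easier to diagnose on the emulator.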

5.3 Implementing the Native Methods in C/C++

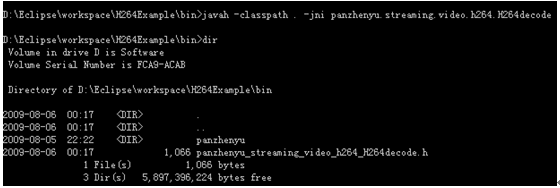

5.3.1 Generating the Header File

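Use the javah command to generate the JNI header. Note that javah must be pointed at the compiled classes (the class path), not at the source path, and the class must be given with its full package name: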

This generates ophone_streaming_video_h264_H264decode.h. Let's open it:

```cpp
#include <jni.h>

#ifndef _Included_ophone_streaming_video_h264_H264decode
#define _Included_ophone_streaming_video_h264_H264decode

#ifdef __cplusplus
extern "C" {
#endif

JNIEXPORT jint JNICALL Java_ophone_streaming_video_h264_H264decode_DecodeOneFrame
  (JNIEnv *, jclass, jlong, jobject, jobject);

JNIEXPORT jlong JNICALL Java_ophone_streaming_video_h264_H264decode_Initialize
  (JNIEnv *, jclass);

JNIEXPORT void JNICALL Java_ophone_streaming_video_h264_H264decode_Destroy
  (JNIEnv *, jclass, jlong);

#ifdef __cplusplus
}
#endif

#endif
```
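The exported names above are not arbitrary: javah builds each one from the package, class, and method following the JNI specification's short-name mangling rule. The name is `Java_`, then the fully qualified class name with `.` replaced by `_`, then `_` and the method name; a literal `_` is escaped as `_1`, and a non-ASCII character as `_0` plus four lowercase hex digits. This is why renaming the package or class means regenerating the header and renaming the C functions. The hypothetical `JniNames` helper below only illustrates that rule (javah already does it for you) and covers short names, not the overloaded form that appends the argument signature.

```java
// Illustration of JNI short-name mangling: Java_ + mangled class + _ + mangled method.
final class JniNames {
    static String mangle(String s) {
        StringBuilder sb = new StringBuilder();
        for (int i = 0; i < s.length(); i++) {
            char c = s.charAt(i);
            if (c == '.' || c == '/') {
                sb.append('_');                          // package separator
            } else if (c == '_') {
                sb.append("_1");                         // escaped underscore
            } else if (c == ';') {
                sb.append("_2");
            } else if (c == '[') {
                sb.append("_3");
            } else if ((c >= 'a' && c <= 'z') || (c >= 'A' && c <= 'Z') || (c >= '0' && c <= '9')) {
                sb.append(c);                            // ASCII alphanumerics pass through
            } else {
                sb.append(String.format("_0%04x", (int) c)); // other characters as _0xxxx
            }
        }
        return sb.toString();
    }

    static String shortName(String className, String methodName) {
        return "Java_" + mangle(className) + "_" + mangle(methodName);
    }
}
```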

5.3.2 Implementing the Native Methods

With the JNI header generated, all that remains is to implement the functions it declares. Here is the implementation for the H.264 decoder:

```cpp
#include <stdint.h>
#include "ophone_streaming_video_h264_H264decode.h"
#include "H264Decode.h"

JNIEXPORT jint JNICALL Java_ophone_streaming_video_h264_H264decode_DecodeOneFrame
(JNIEnv *env, jclass clazz, jlong decode, jobject pInBuffer, jobject pOutBuffer) {
    // Cast through intptr_t so the jlong <-> pointer conversion is clean on 32-bit ARM
    H264Decode *pDecode = (H264Decode *)(intptr_t)decode;
    unsigned char *In = NULL;
    unsigned char *Out = NULL;
    unsigned int InPosition = 0;
    unsigned int InRemaining = 0;
    unsigned int OutSize = 0;
    jint DecodeSize = -1;
    jbyte *InJbyte = NULL;
    jbyte *OutJbyte = NULL;
    jbyteArray InByteArray = NULL;
    jbyteArray OutByteArray = NULL;

    if (pDecode == NULL) {
        return -1; // decoder not initialized or already destroyed
    }

    // Read the input/output ByteBuffer attributes.
    // Both buffers must be array-backed (ByteBuffer.allocate/wrap) because array() is used.
    {
        // Input
        {
            jclass ByteBufferClass = env->GetObjectClass(pInBuffer);
            jmethodID PositionMethodId = env->GetMethodID(ByteBufferClass, "position", "()I");
            jmethodID RemainingMethodId = env->GetMethodID(ByteBufferClass, "remaining", "()I");
            jmethodID ArrayMethodId = env->GetMethodID(ByteBufferClass, "array", "()[B");
            InPosition = env->CallIntMethod(pInBuffer, PositionMethodId);
            InRemaining = env->CallIntMethod(pInBuffer, RemainingMethodId);
            InByteArray = (jbyteArray)env->CallObjectMethod(pInBuffer, ArrayMethodId);
            InJbyte = env->GetByteArrayElements(InByteArray, NULL);
            // Assumes arrayOffset() == 0, which holds for allocate() and wrap(byte[])
            In = (unsigned char *)InJbyte + InPosition;
        }
        // Output
        {
            jclass ByteBufferClass = env->GetObjectClass(pOutBuffer);
            jmethodID ArrayMethodId = env->GetMethodID(ByteBufferClass, "array", "()[B");
            jmethodID ClearMethodId = env->GetMethodID(ByteBufferClass, "clear", "()Ljava/nio/Buffer;");
            // Clear the output buffer (position = 0, limit = capacity)
            env->CallObjectMethod(pOutBuffer, ClearMethodId);
            OutByteArray = (jbyteArray)env->CallObjectMethod(pOutBuffer, ArrayMethodId);
            OutJbyte = env->GetByteArrayElements(OutByteArray, NULL);
            Out = (unsigned char *)OutJbyte;
        }
    }

    // Decode one frame (only if both arrays were pinned or copied successfully)
    if (InJbyte != NULL && OutJbyte != NULL) {
        DecodeSize = pDecode->DecodeOneFrame(In, InRemaining, Out, OutSize);
    }

    // Update the input/output ByteBuffer positions, only when decoding succeeded
    if (DecodeSize >= 0) {
        // Input: advance past the bytes the decoder consumed
        {
            jclass ByteBufferClass = env->GetObjectClass(pInBuffer);
            jmethodID SetPositionMethodId = env->GetMethodID(ByteBufferClass, "position", "(I)Ljava/nio/Buffer;");
            env->CallObjectMethod(pInBuffer, SetPositionMethodId, (jint)(InPosition + DecodeSize));
        }
        // Output: position = size of the decoded frame
        {
            jclass ByteBufferClass = env->GetObjectClass(pOutBuffer);
            jmethodID SetPositionMethodId = env->GetMethodID(ByteBufferClass, "position", "(I)Ljava/nio/Buffer;");
            env->CallObjectMethod(pOutBuffer, SetPositionMethodId, (jint)OutSize);
        }
    }

    // Clean up: the input was only read, so JNI_ABORT skips the copy-back;
    // the output must be copied back into the Java array (mode 0)
    if (InJbyte != NULL) {
        env->ReleaseByteArrayElements(InByteArray, InJbyte, JNI_ABORT);
    }
    if (OutJbyte != NULL) {
        env->ReleaseByteArrayElements(OutByteArray, OutJbyte, 0);
    }

    return DecodeSize;
}

JNIEXPORT jlong JNICALL Java_ophone_streaming_video_h264_H264decode_Initialize
(JNIEnv *env, jclass clazz) {
    H264Decode *pDecode = H264Decode::H264DecodeConstruct();
    return (jlong)(intptr_t)pDecode;
}

JNIEXPORT void JNICALL Java_ophone_streaming_video_h264_H264decode_Destroy
(JNIEnv *env, jclass clazz, jlong decode) {
    H264Decode *pDecode = (H264Decode *)(intptr_t)decode;
    if (pDecode != NULL) {
        delete pDecode;
    }
}
```
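The native code locates the first unread byte as `array() + position()`. That is only correct when the buffer's `arrayOffset()` is 0, which holds for `ByteBuffer.allocate()` and `ByteBuffer.wrap(byte[])` but not for a buffer produced by `slice()`. Likewise, the standard library makes no promise that a direct buffer has an accessible array at all. The hypothetical `BackingIndex` helper below shows, in Java, the index the native side would need to use in general; it is a sketch for checking buffers before handing them to `DecodeOneFrame`, not part of the original code.

```java
import java.nio.ByteBuffer;

final class BackingIndex {
    // Index into buf.array() of the first unread byte. The native code above
    // assumes arrayOffset() == 0; a sliced buffer would need this correction.
    static int firstUnreadIndex(ByteBuffer buf) {
        if (!buf.hasArray()) {
            throw new IllegalArgumentException("buffer has no accessible backing array");
        }
        return buf.arrayOffset() + buf.position();
    }
}
```

Passing only `allocate()`/`wrap(byte[])` buffers from Java keeps the simpler native arithmetic valid.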

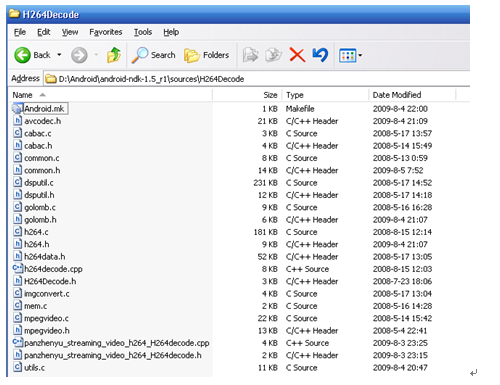

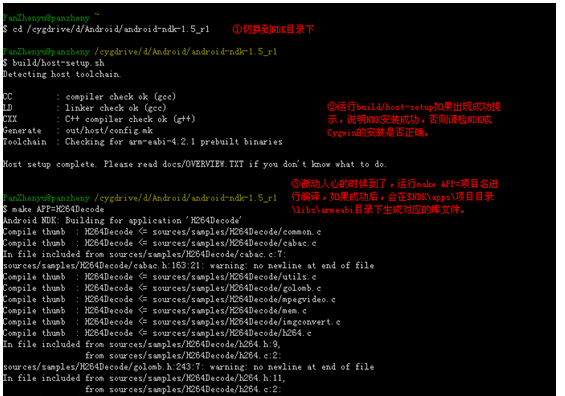

5.3.3 Compiling the Native Methods

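Next, compile the native methods into a shared library. If the library you are porting has previously been ported to Symbian, this step is fairly easy, because the NDK, like the Symbian toolchain, uses GCC as its cross-compiler.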

First, create a project directory under `$NDK/apps`; here it is named `H264Decode`. In that directory, create an `Application.mk` file:

```makefile
APP_PROJECT_PATH := $(call my-dir)
APP_MODULES      := H264Decode
```

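Next, create a source directory under `$NDK/source` whose name matches the project directory created above; here it is also `H264Decode`. Copy the generated JNI header, your native-method implementation, and the library's headers and source files into this directory.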

Then create and edit the `Android.mk` file in this source directory:

```makefile
LOCAL_PATH := $(call my-dir)

include $(CLEAR_VARS)

LOCAL_MODULE    := H264Decode
LOCAL_SRC_FILES := common.c cabac.c utils.c golomb.c mpegvideo.c mem.c imgconvert.c \
                   h264decode.cpp h264.c dsputil.c ophone_streaming_video_h264_H264decode.cpp

include $(BUILD_SHARED_LIBRARY)
```

Each field in `Android.mk` is explained in detail in OPHONE-MK.TXT and OVERVIEW.TXT under `$NDK/doc`.

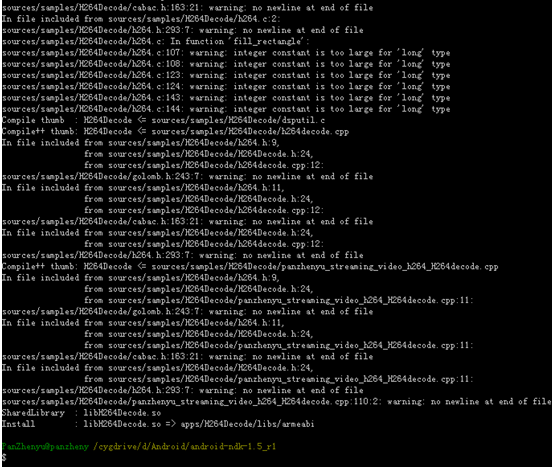

Finally, start Cygwin and build:

If the build output contains an `Install:` line, the library has been built successfully.

FAQ 2:

What should I do if the build fails with the following error?

error: redefinition of typedef 'int8_t'

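Comment out `typedef signed char int8_t;` in your code, because the toolchain's system headers already define `int8_t`. You are quite likely to hit this if your code was previously ported to Windows Mobile or Symbian.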

5.4 Writing the Library Test Program

Create an OPhone project in Eclipse and enter the following code in the entry (Activity) class:

```java
/**
 * @author ophone
 * @email 3751624@qq.com
 */
package ophone.streaming.video.h264;

import java.io.File;
import java.io.FileInputStream;
import java.io.InputStream;
import java.nio.ByteBuffer;

import android.app.Activity;
import android.graphics.BitmapFactory;
import android.os.Bundle;
import android.os.Handler;
import android.os.Message;
import android.widget.ImageView;
import android.widget.TextView;

public class H264Example extends Activity {

    private static final int VideoWidth = 352;   // CIF width
    private static final int VideoHeight = 288;  // CIF height
    private ImageView ImageLayout = null;
    private TextView FPSLayout = null;
    private H264decode Decode = null;
    private Handler H = null;
    private byte[] Buffer = null;   // BMP bytes of the most recently decoded frame
    private int DecodeCount = 0;    // number of frames decoded so far
    private long StartTime = 0;

    public void onCreate(Bundle savedInstanceState) {
        super.onCreate(savedInstanceState);
        setContentView(R.layout.main);
        ImageLayout = (ImageView) findViewById(R.id.ImageView);
        FPSLayout = (TextView) findViewById(R.id.TextView);
        Decode = new H264decode();
        StartTime = System.currentTimeMillis();
        new Thread(new Runnable() {
            public void run() {
                // Decode on a worker thread. Note: H is assigned only after this
                // thread starts, so StartDecode() must not use H before it is set.
                StartDecode();
            }
        }).start();
        H = new Handler() {
            public void handleMessage(Message msg) {
                ImageLayout.invalidate();
                ImageLayout.setImageBitmap(BitmapFactory.decodeByteArray(Buffer, 0, Buffer.length));
                long Time = (System.currentTimeMillis() - StartTime) / 1000;
                if (Time > 0) {
                    FPSLayout.setText("Time spent
```

BMPImage is a utility class that converts a sequence of RGB pixels into a BMP image for display:

```java
/**
 * @author ophone
 * @email 3751624@qq.com
 */
package ophone.streaming.video.h264;

import java.nio.ByteBuffer;

public class BMPImage {

    // --- Private constants
    private final static int BITMAPFILEHEADER_SIZE = 14;
    private final static int BITMAPINFOHEADER_SIZE = 40;

    // --- Bitmap file header
    private byte bfType[] = { 'B', 'M' };
    private int bfSize = 0;
    private int bfReserved1 = 0;
    private int bfReserved2 = 0;
    private int bfOffBits = BITMAPFILEHEADER_SIZE + BITMAPINFOHEADER_SIZE;

    // --- Bitmap info header
    private int biSize = BITMAPINFOHEADER_SIZE;
    private int biWidth = 176;
    private int biHeight = 144;
    private int biPlanes = 1;
    private int biBitCount = 24;
    private int biCompression = 0;   // BI_RGB (uncompressed)
    private int biSizeImage = biWidth * biHeight * 3;
    private int biXPelsPerMeter = 0x0;
    private int biYPelsPerMeter = 0x0;
    private int biClrUsed = 0;
    private int biClrImportant = 0;

    ByteBuffer bmpBuffer = null;

    // Data holds Width*Height pixels of 3 bytes each, rows stored without padding.
    // A 24-bit BMP stores pixels as B,G,R and, with a positive height, bottom row first.
    public BMPImage(byte[] Data, int Width, int Height) {
        biWidth = Width;
        biHeight = Height;
        int rowBytes = biWidth * 3;
        int paddedRowBytes = (rowBytes + 3) & ~3;  // BMP rows are padded to a multiple of 4 bytes
        biSizeImage = paddedRowBytes * biHeight;
        bfSize = BITMAPFILEHEADER_SIZE + BITMAPINFOHEADER_SIZE + biSizeImage;
        bmpBuffer = ByteBuffer.allocate(bfSize);
        writeBitmapFileHeader();
        writeBitmapInfoHeader();
        for (int y = 0; y < biHeight; y++) {
            bmpBuffer.put(Data, y * rowBytes, rowBytes);
            for (int p = rowBytes; p < paddedRowBytes; p++) {
                bmpBuffer.put((byte) 0);
            }
        }
    }

    public byte[] getByte() {
        return bmpBuffer.array();
    }

    // Little-endian 16-bit value
    private byte[] intToWord(int parValue) {
        byte retValue[] = new byte[2];
        retValue[0] = (byte) (parValue & 0x00FF);
        retValue[1] = (byte) ((parValue >> 8) & 0x00FF);
        return (retValue);
    }

    // Little-endian 32-bit value
    private byte[] intToDWord(int parValue) {
        byte retValue[] = new byte[4];
        retValue[0] = (byte) (parValue & 0x00FF);
        retValue[1] = (byte) ((parValue >> 8) & 0x000000FF);
        retValue[2] = (byte) ((parValue >> 16) & 0x000000FF);
        retValue[3] = (byte) ((parValue >> 24) & 0x000000FF);
        return (retValue);
    }

    private void writeBitmapFileHeader() {
        bmpBuffer.put(bfType);
        bmpBuffer.put(intToDWord(bfSize));
        bmpBuffer.put(intToWord(bfReserved1));
        bmpBuffer.put(intToWord(bfReserved2));
        bmpBuffer.put(intToDWord(bfOffBits));
    }

    private void writeBitmapInfoHeader() {
        bmpBuffer.put(intToDWord(biSize));
        bmpBuffer.put(intToDWord(biWidth));
        bmpBuffer.put(intToDWord(biHeight));
        bmpBuffer.put(intToWord(biPlanes));
        bmpBuffer.put(intToWord(biBitCount));
        bmpBuffer.put(intToDWord(biCompression));
        bmpBuffer.put(intToDWord(biSizeImage));
        bmpBuffer.put(intToDWord(biXPelsPerMeter));
        bmpBuffer.put(intToDWord(biYPelsPerMeter));
        bmpBuffer.put(intToDWord(biClrUsed));
        bmpBuffer.put(intToDWord(biClrImportant));
    }
}
```
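BMPImage writes its 14-byte file header and 40-byte info header with hand-rolled `intToWord`/`intToDWord`, which produce little-endian values. The same 54-byte header can be written with `ByteBuffer.order(ByteOrder.LITTLE_ENDIAN)`, which also makes the field offsets easy to check. For a 352×288 frame at 24 bits per pixel, a row is 352 × 3 = 1056 bytes (already a multiple of 4), the pixel data is 1056 × 288 = 304128 bytes, and the file size is 54 + 304128 = 304182 bytes. The hypothetical `BmpHeader` class below is a sketch of this alternative, not the author's code.

```java
import java.nio.ByteBuffer;
import java.nio.ByteOrder;

final class BmpHeader {
    static final int FILE_HEADER_SIZE = 14;
    static final int INFO_HEADER_SIZE = 40;

    // Bytes per pixel row, rounded up to a multiple of 4 as the BMP format requires.
    static int paddedRowSize(int width) {
        return (width * 3 + 3) & ~3;
    }

    // Builds the 54-byte header of a 24-bit, uncompressed, bottom-up BMP.
    static byte[] build(int width, int height) {
        int imageSize = paddedRowSize(width) * height;
        ByteBuffer b = ByteBuffer.allocate(FILE_HEADER_SIZE + INFO_HEADER_SIZE)
                                 .order(ByteOrder.LITTLE_ENDIAN);
        // BITMAPFILEHEADER
        b.put((byte) 'B').put((byte) 'M');
        b.putInt(FILE_HEADER_SIZE + INFO_HEADER_SIZE + imageSize); // bfSize
        b.putShort((short) 0).putShort((short) 0);                 // bfReserved1, bfReserved2
        b.putInt(FILE_HEADER_SIZE + INFO_HEADER_SIZE);             // bfOffBits
        // BITMAPINFOHEADER
        b.putInt(INFO_HEADER_SIZE);   // biSize
        b.putInt(width);              // biWidth
        b.putInt(height);             // biHeight (positive = bottom-up rows)
        b.putShort((short) 1);        // biPlanes
        b.putShort((short) 24);       // biBitCount
        b.putInt(0);                  // biCompression = BI_RGB
        b.putInt(imageSize);          // biSizeImage
        b.putInt(0).putInt(0);        // biXPelsPerMeter, biYPelsPerMeter
        b.putInt(0).putInt(0);        // biClrUsed, biClrImportant
        return b.array();
    }
}
```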

The complete test project is not provided here.
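The H264Example listing stops before `StartDecode()`. As a hedged sketch of what such a loop could look like (not the author's code), the block below reads a whole `.264` file into a heap `ByteBuffer` (heap, because the native code calls `array()`), calls the decoder until the input is used up or it stops making progress, and computes an average frame rate. `FrameDecoder` and `DecodeLoop` are hypothetical names; the loop assumes the buffer contract of the native code in 5.3.2, where the input position advances by the consumed bytes and the output position is set to the decoded size.

```java
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.nio.ByteBuffer;

// Hypothetical interface with the same shape as H264decode.DecodeOneFrame,
// so the loop can be exercised without the native library.
interface FrameDecoder {
    int DecodeOneFrame(ByteBuffer in, ByteBuffer out);
}

final class DecodeLoop {
    // Reads the whole stream into an array-backed ByteBuffer.
    static ByteBuffer readAll(InputStream is) throws IOException {
        ByteArrayOutputStream bos = new ByteArrayOutputStream();
        byte[] chunk = new byte[8192];
        int n;
        while ((n = is.read(chunk)) != -1) {
            bos.write(chunk, 0, n);
        }
        return ByteBuffer.wrap(bos.toByteArray());
    }

    // Decodes until the input is exhausted, an error occurs, or no progress is made.
    // Returns the number of calls that produced output.
    static int decodeAll(FrameDecoder decoder, ByteBuffer in, ByteBuffer out) {
        int frames = 0;
        while (in.hasRemaining()) {
            int before = in.position();
            int consumed = decoder.DecodeOneFrame(in, out);
            if (consumed <= 0 || in.position() == before) {
                break; // error or stall: stop instead of looping forever
            }
            if (out.position() > 0) {
                frames++; // out.position() holds the decoded size after the call
            }
        }
        return frames;
    }

    // Average frames per second over an elapsed time in milliseconds.
    static double fps(int frames, long elapsedMillis) {
        return elapsedMillis > 0 ? frames * 1000.0 / elapsedMillis : 0.0;
    }
}
```

With the real wrapper, an anonymous `FrameDecoder` that forwards to `Decode.DecodeOneFrame(in, out)` could be passed in, and `readAll(new FileInputStream("/tmp/Demo.264"))` would load the video copied to the emulator in 5.5. The output buffer must be large enough for one decoded frame, for example `VideoWidth * VideoHeight * 3` bytes if the decoder emits 24-bit RGB (an assumption; the header does not document the output format). Measuring in milliseconds also avoids the whole-second rounding of the original handler.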

5.5 Integration Testing

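Integration testing has two prerequisites: before running the program, copy the shared library to the emulator's /system/lib directory, and upload the video to be decoded to the emulator's /tmp directory.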

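Frankly, neither the OPhone nor the Symbian emulator is user-friendly here. Copying a file into the Symbian emulator means navigating a deep directory tree, and OPhone is even more annoying, since files must be transferred with command-line tools. On this point alone, the Windows Mobile emulator is far friendlier.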

Commands:

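```bat
REM Make adb.exe from the OPhone SDK available
PATH=D:\OPhone\OPhone SDK\tools;%PATH%
REM Remount /system read-write so the library can be copied into it
adb.exe remount
adb.exe push D:\Eclipse\workspace\H264Example\libs\armeabi\libH264Decode.so /system/lib
adb.exe push D:\Eclipse\workspace\H264Example\Demo.264 /tmp
pause
```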

A note on the adb push command:

`adb push <local path> <remote path>`: copies a file or directory to the emulator.

Launch the library test program from Eclipse; the resulting screen is shown below:

FAQ 3:

What if the emulator shows a black screen?

This is probably because the emulator starts slowly, so wait a little longer. Hopefully the next release will improve this.