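Adding a node to RAC:

Environment:

OS: OEL 5.6

RAC: 10.2.0.1.0

The cluster originally has two nodes, rac1 and rac2; a third node, rac3, is now being added.

Procedure:

Edit the /etc/hosts file on all three nodes: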

192.168.90.2 rac1

192.168.90.5 rac2

192.168.90.6 rac3

192.168.91.3 rac1-priv

192.168.91.6 rac2-priv

192.168.91.7 rac3-priv

192.168.90.3 rac1-vip

192.168.90.4 rac2-vip

192.168.90.7 rac3-vip
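
Since the same entries must exist on all three nodes, it can help to check each file for the expected names after editing. The following is a minimal sketch of such a check; the function name `check_hosts` and its arguments are illustrative and not part of any Oracle tooling.

```shell
#!/bin/sh
# check_hosts FILE NAME... (hypothetical helper)
# Prints "NAME IP" for each name that has an entry in FILE,
# or "NAME MISSING" when no entry is found.
check_hosts() {
    file=$1
    shift
    for name in "$@"; do
        # Skip comment lines, then look for the name in any column after the IP.
        ip=$(awk -v n="$name" '
            /^[ \t]*#/ { next }
            { for (i = 2; i <= NF; i++) if ($i == n) { print $1; exit } }
        ' "$file")
        if [ -n "$ip" ]; then
            echo "$name $ip"
        else
            echo "$name MISSING"
        fi
    done
}
```

Running `check_hosts /etc/hosts rac1 rac2 rac3 rac1-priv rac2-priv rac3-priv rac1-vip rac2-vip rac3-vip` on each node and comparing the output is one way to spot a missing or mistyped entry.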

Install the required RPM packages on rac3. This lab environment runs OEL, so the oracle-validated package is used.

[root@rac3 ~]# mount /dev/cdrom /mnt

mount: block device /dev/cdrom is write-protected, mounting read-only

[root@rac3 ~]# vi /etc/yum.repos.d/public-yum-el5.repo

[oel5]

name = Enterprise Linux 5.6 DVD

baseurl=file:///mnt/Server/

gpgcheck=0

enabled=1

[root@rac3 ~]# yum install oracle-validated

Loaded plugins: rhnplugin, security

This system is not registered with ULN.

ULN support will be disabled.

...... (output omitted)

Install the ASMLib packages:

[root@rac3 ~]# cd /mnt/Server

[root@rac3 Server]# rpm -ivh oracleasm-support-2.1.4-1.el5.i386.rpm

warning: oracleasm-support-2.1.4-1.el5.i386.rpm: Header V3 DSA signature: NOKEY, key ID 1e5e0159

Preparing... ########################################### [100%]

1:oracleasm-support ########################################### [100%]

[root@rac3 Server]# rpm -ivh oracleasm-2.6.18-238.el5-2.0.5-1.el5.i686.rpm

warning: oracleasm-2.6.18-238.el5-2.0.5-1.el5.i686.rpm: Header V3 DSA signature: NOKEY, key ID 1e5e0159

Preparing... ########################################### [100%]

1:oracleasm-2.6.18-238.el########################################### [100%]

Configure SSH user equivalence between node 3 and nodes 1 and 2:

On node 3:

[oracle@rac3 ~]$ ssh-keygen -t rsa

Generating public/private rsa key pair.

Enter file in which to save the key (/home/oracle/.ssh/id_rsa):

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /home/oracle/.ssh/id_rsa.

Your public key has been saved in /home/oracle/.ssh/id_rsa.pub.

The key fingerprint is:

18:d5:6a:94:4c:12:90:8a:19:ca:96:4d:97:cc:fe:f0 oracle@rac3

On node 1:

[oracle@rac1 .ssh]$ ssh 192.168.90.6 'cat ~/.ssh/*.pub' >> authorized_keys

oracle@192.168.90.6's password:

[oracle@rac1 .ssh]$ scp authorized_keys 192.168.90.6:~/.ssh/.

oracle@192.168.90.6's password:

authorized_keys 100% 1780 1.7KB/s 00:00

[oracle@rac1 .ssh]$ scp authorized_keys 192.168.90.5:~/.ssh/.

authorized_keys 100% 1780 1.7KB/s 00:00

Run the following commands on every node to test user equivalence for the oracle user:

ssh rac1 date

ssh rac2 date

ssh rac3 date

ssh rac1-priv date

ssh rac2-priv date

ssh rac3-priv date
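
The six commands above can also be run as a loop that reports which names still prompt or fail. This is a sketch only: the function name `check_equivalence` and the `SSH_CMD` override are hypothetical, and `BatchMode=yes` is the standard OpenSSH option that makes `ssh` fail instead of asking for a password.

```shell
#!/bin/sh
# check_equivalence (hypothetical helper)
# Runs "date" over SSH against every public and private name in this setup
# and prints "HOST ok" or "HOST FAILED" for each one.
# SSH_CMD is an assumed override hook, useful for dry runs.
check_equivalence() {
    for host in rac1 rac2 rac3 rac1-priv rac2-priv rac3-priv; do
        # BatchMode=yes turns a password or host-key prompt into a failure.
        if ${SSH_CMD:-ssh -o BatchMode=yes} "$host" date >/dev/null 2>&1; then
            echo "$host ok"
        else
            echo "$host FAILED"
        fi
    done
}
```

Because batch mode also refuses to answer host-key prompts, a name that has never been contacted from this node may show as FAILED until it has been accepted once interactively.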

Configure time synchronization:

[root@rac3 Server]# ntpdate 192.168.90.2

22 Aug 10:45:31 ntpdate[9511]: adjust time server 192.168.90.2 offset -0.109663 sec

[root@rac3 Server]# crontab -e

* * * * * /usr/sbin/ntpdate 192.168.90.2 >> /ntp.log

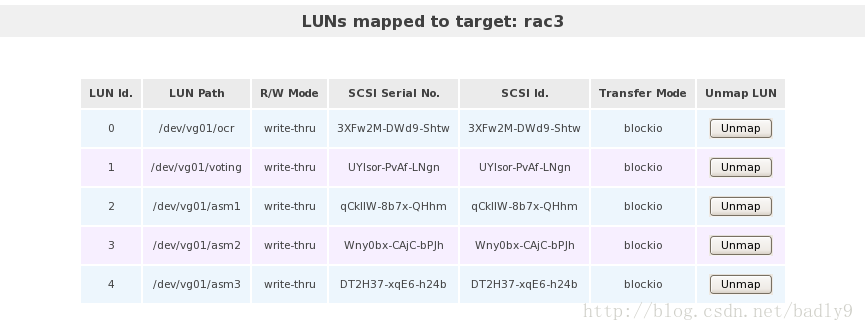

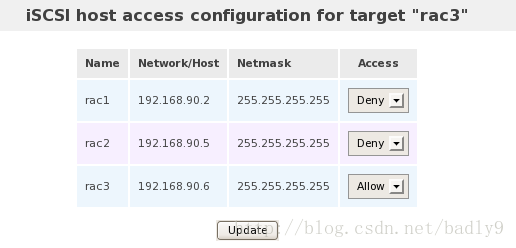

Because Openfiler is used as the storage, the Openfiler configuration steps are included here:

Then configure the iSCSI initiator on rac3:

[root@rac3 ~]# vi /etc/iscsi/initiatorname.iscsi

#InitiatorName=iqn.1988-12.com.oracle:48354521e5f2

InitiatorName=rac3

[root@rac3 ~]# iscsiadm -m discovery -t st -p 192.168.90.8

192.168.90.8:3260,1 rac3

[root@rac3 ~]# service iscsi restart

Stopping iSCSI daemon:

iscsid is stopped [ OK ]

Starting iSCSI daemon: [ OK ]

[ OK ]

Setting up iSCSI targets: Logging in to [iface: default, target: rac3, portal: 192.168.90.8,3260]

Login to [iface: default, target: rac3, portal: 192.168.90.8,3260] successful.

[ OK ]

Then check whether the disks were attached successfully:

[root@rac3 ~]# fdisk -l

Disk /dev/sda: 42.9 GB, 42949672960 bytes

255 heads, 63 sectors/track, 5221 cylinders

Units = cylinders of 16065 * 512 = 8225280 bytes

Device Boot Start End Blocks Id System

/dev/sda1 * 1 13 104391 83 Linux

/dev/sda2 14 5221 41833260 8e Linux LVM

Disk /dev/dm-0: 39.5 GB, 39594229760 bytes

255 heads, 63 sectors/track, 4813 cylinders

Units = cylinders of 16065 * 512 = 8225280 bytes

Disk /dev/dm-0 doesn't contain a valid partition table

Disk /dev/dm-1: 3221 MB, 3221225472 bytes

255 heads, 63 sectors/track, 391 cylinders

Units = cylinders of 16065 * 512 = 8225280 bytes

Disk /dev/dm-1 doesn't contain a valid partition table

Disk /dev/sdb: 1073 MB, 1073741824 bytes

34 heads, 61 sectors/track, 1011 cylinders

Units = cylinders of 2074 * 512 = 1061888 bytes

Device Boot Start End Blocks Id System

/dev/sdb1 1 1011 1048376+ 83 Linux

Disk /dev/sdc: 1073 MB, 1073741824 bytes

34 heads, 61 sectors/track, 1011 cylinders

Units = cylinders of 2074 * 512 = 1061888 bytes

Device Boot Start End Blocks Id System

/dev/sdc1 1 1011 1048376+ 83 Linux

Disk /dev/sdd: 10.7 GB, 10737418240 bytes

64 heads, 32 sectors/track, 10240 cylinders

Units = cylinders of 2048 * 512 = 1048576 bytes

Device Boot Start End Blocks Id System

/dev/sdd1 1 10240 10485744 83 Linux

Disk /dev/sde: 10.7 GB, 10737418240 bytes

64 heads, 32 sectors/track, 10240 cylinders

Units = cylinders of 2048 * 512 = 1048576 bytes

Device Boot Start End Blocks Id System

/dev/sde1 1 10240 10485744 83 Linux

Disk /dev/sdf: 10.7 GB, 10737418240 bytes

64 heads, 32 sectors/track, 10240 cylinders

Units = cylinders of 2048 * 512 = 1048576 bytes

Device Boot Start End Blocks Id System

/dev/sdf1 1 10240 10485744 83 Linux

Once the disks are attached, make the raw device bindings persistent:

[root@rac3 ~]# raw /dev/raw/raw1 /dev/sdb1

/dev/raw/raw1: bound to major 8, minor 17

[root@rac3 ~]# raw /dev/raw/raw2 /dev/sdc1

/dev/raw/raw2: bound to major 8, minor 33

[root@rac3 ~]# raw /dev/raw/raw3 /dev/sdd1

/dev/raw/raw3: bound to major 8, minor 49

[root@rac3 ~]# raw /dev/raw/raw4 /dev/sde1

/dev/raw/raw4: bound to major 8, minor 65

[root@rac3 ~]# raw /dev/raw/raw5 /dev/sdf1

/dev/raw/raw5: bound to major 8, minor 81

[root@rac3 ~]# vi /etc/udev/rules.d/60-raw.rules

Add the following lines:

ACTION=="add", KERNEL=="sdb1", RUN+="/bin/raw /dev/raw/raw1 %N"

ACTION=="add", ENV{MAJOR}=="8", ENV{MINOR}=="17", RUN+="/bin/raw /dev/raw/raw1 %M %m"

ACTION=="add", KERNEL=="sdc1", RUN+="/bin/raw /dev/raw/raw2 %N"

ACTION=="add", ENV{MAJOR}=="8", ENV{MINOR}=="33", RUN+="/bin/raw /dev/raw/raw2 %M %m"

ACTION=="add", KERNEL=="sdd1", RUN+="/bin/raw /dev/raw/raw3 %N"

ACTION=="add", ENV{MAJOR}=="8", ENV{MINOR}=="49", RUN+="/bin/raw /dev/raw/raw3 %M %m"

ACTION=="add", KERNEL=="sde1", RUN+="/bin/raw /dev/raw/raw4 %N"

ACTION=="add", ENV{MAJOR}=="8", ENV{MINOR}=="65", RUN+="/bin/raw /dev/raw/raw4 %M %m"

ACTION=="add", KERNEL=="sdf1", RUN+="/bin/raw /dev/raw/raw5 %N"

ACTION=="add", ENV{MAJOR}=="8", ENV{MINOR}=="81", RUN+="/bin/raw /dev/raw/raw5 %M %m"

KERNEL=="raw[1-5]", OWNER="oracle", GROUP="oinstall", MODE="640"
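
Each raw device needs the same pair of rules, so they can be generated from a short mapping instead of typed by hand. The sketch below assumes the raw number, kernel device name, major and minor reported by the `raw` commands earlier; the function name `gen_raw_rules` is hypothetical. The kernel name is given without the `/dev/` prefix, which is the form the udev `KERNEL` key matches against.

```shell
#!/bin/sh
# gen_raw_rules (hypothetical helper)
# Reads lines of "RAWNUM KERNELNAME MAJOR MINOR" on stdin and prints
# the two udev rules that bind /dev/raw/rawRAWNUM to that device.
gen_raw_rules() {
    while read -r n dev major minor; do
        # Ignore blank lines in the input.
        [ -n "$n" ] || continue
        printf 'ACTION=="add", KERNEL=="%s", RUN+="/bin/raw /dev/raw/raw%s %%N"\n' \
            "$dev" "$n"
        printf 'ACTION=="add", ENV{MAJOR}=="%s", ENV{MINOR}=="%s", RUN+="/bin/raw /dev/raw/raw%s %%M %%m"\n' \
            "$major" "$minor" "$n"
    done
}
```

For example, `printf '1 sdb1 8 17\n2 sdc1 8 33\n' | gen_raw_rules` prints the four rules for raw1 and raw2, which can then be reviewed and appended to /etc/udev/rules.d/60-raw.rules.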

Configure ASMLib:

[root@rac3 ~]# /etc/init.d/oracleasm configure

Configuring the Oracle ASM library driver.

This will configure the on-boot properties of the Oracle ASM library

driver. The following questions will determine whether the driver is

loaded on boot and what permissions it will have. The current values

will be shown in brackets ('[]'). Hitting <ENTER> without typing an

answer will keep that current value. Ctrl-C will abort.

Default user to own the driver interface []: oracle

Default group to own the driver interface []: dba

Start Oracle ASM library driver on boot (y/n) [n]: y

Scan for Oracle ASM disks on boot (y/n) [y]: y

Writing Oracle ASM library driver configuration: done

Initializing the Oracle ASMLib driver: [ OK ]

Scanning the system for Oracle ASMLib disks: [ OK ]

[root@rac3 ~]# /etc/init.d/oracleasm listdisks

ASM01

ASM02

ASM03

On rac3, create the /u01 directory and give ownership of it to the oracle user:

[root@rac3 ~]# mkdir /u01

[root@rac3 ~]# chown oracle:oinstall /u01

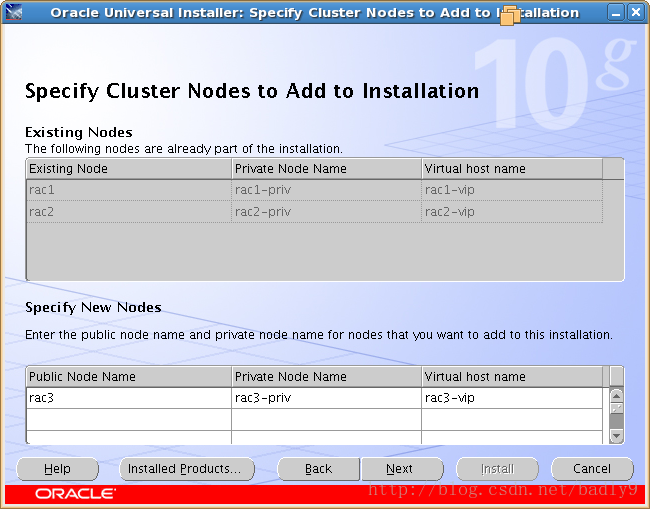

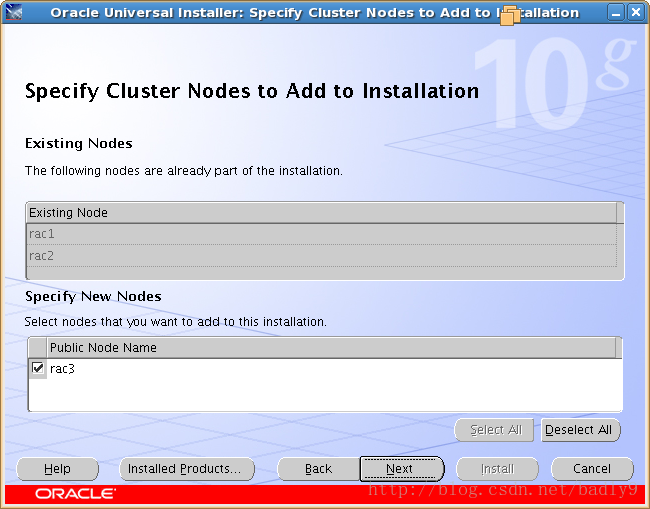

On node 1:

[oracle@rac1 ~]$ cd $CRS_HOME/oui/bin/

[oracle@rac1 bin]$ ls

addLangs.sh lsnodes resource runInstaller

addNode.sh ouica.sh runConfig.sh runInstaller.sh

[oracle@rac1 bin]$ sh addNode.sh

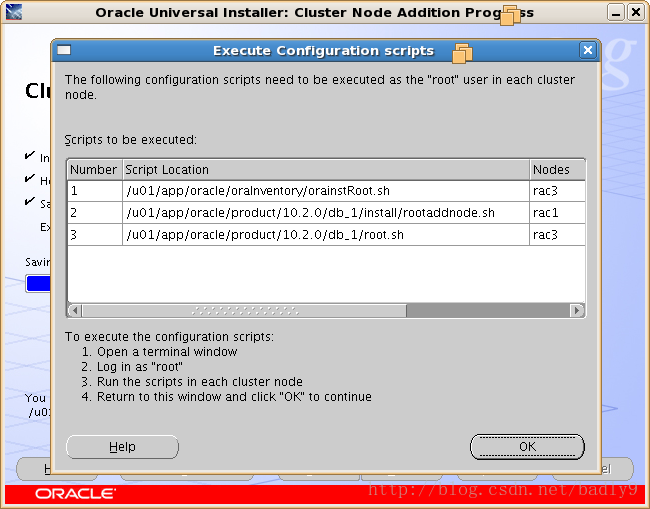

[root@rac3 ~]# /u01/app/oracle/oraInventory/orainstRoot.sh

Changing permissions of /u01/app/oracle/oraInventory to 770.

Changing groupname of /u01/app/oracle/oraInventory to oinstall.

The execution of the script is complete

[root@rac1 ~]# /u01/app/oracle/product/10.2.0/db_1/install/rootaddnode.sh

clscfg: EXISTING configuration version 3 detected.

clscfg: version 3 is 10G Release 2.

Attempting to add 1 new nodes to the configuration

Using ports: CSS=49895 CRS=49896 EVMC=49898 and EVMR=49897.

node <nodenumber>: <nodename> <private interconnect name> <hostname>

node 3: rac3 rac3-priv rac3

Creating OCR keys for user 'root', privgrp 'root'..

Operation successful.

/u01/app/oracle/product/10.2.0/db_1/bin/srvctl add nodeapps -n rac3 -A rac3-vip/255.255.255.0/eth0 -o /u01/app/oracle/product/10.2.0/db_1

[root@rac3 ~]# /u01/app/oracle/product/10.2.0/db_1/root.sh

WARNING: directory '/u01/app/oracle/product/10.2.0' is not owned by root

WARNING: directory '/u01/app/oracle/product' is not owned by root

WARNING: directory '/u01/app/oracle' is not owned by root

WARNING: directory '/u01/app' is not owned by root

WARNING: directory '/u01' is not owned by root

Checking to see if Oracle CRS stack is already configured

/etc/oracle does not exist. Creating it now.

OCR LOCATIONS = /dev/raw/raw1

OCR backup directory '/u01/app/oracle/product/10.2.0/db_1/cdata/crs' does not exist. Creating now

Setting the permissions on OCR backup directory

Setting up NS directories

Oracle Cluster Registry configuration upgraded successfully

WARNING: directory '/u01/app/oracle/product/10.2.0' is not owned by root

WARNING: directory '/u01/app/oracle/product' is not owned by root

WARNING: directory '/u01/app/oracle' is not owned by root

WARNING: directory '/u01/app' is not owned by root

WARNING: directory '/u01' is not owned by root

clscfg: EXISTING configuration version 3 detected.

clscfg: version 3 is 10G Release 2.

assigning default hostname rac1 for node 1.

assigning default hostname rac2 for node 2.

Successfully accumulated necessary OCR keys.

Using ports: CSS=49895 CRS=49896 EVMC=49898 and EVMR=49897.

node <nodenumber>: <nodename> <private interconnect name> <hostname>

node 1: rac1 rac1-priv rac1

node 2: rac2 rac2-priv rac2

clscfg: Arguments check out successfully.

NO KEYS WERE WRITTEN. Supply -force parameter to override.

-force is destructive and will destroy any previous cluster

configuration.

Oracle Cluster Registry for cluster has already been initialized

Startup will be queued to init within 90 seconds.

Adding daemons to inittab

Expecting the CRS daemons to be up within 600 seconds.

CSS is active on these nodes.

rac1

rac2

rac3

CSS is active on all nodes.

Waiting for the Oracle CRSD and EVMD to start

Waiting for the Oracle CRSD and EVMD to start

Oracle CRS stack installed and running under init(1M)

Running vipca(silent) for configuring nodeapps

/u01/app/oracle/product/10.2.0/db_1/jdk/jre//bin/java: error while loading shared libraries: libpthread.so.0: cannot open shared object file: No such file or directory
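
The log stops at this error without stating how it was resolved. A commonly reported cause on 10.2.0.1 with EL5 kernels is the `LD_ASSUME_KERNEL` setting exported by the `vipca` and `srvctl` wrapper scripts; the usual workaround is to unset it and then rerun `vipca` as root. The following is a sketch of that workaround under that assumption, not necessarily what was done here; the function name `neutralize_ld_assume_kernel` is hypothetical.

```shell
#!/bin/sh
# neutralize_ld_assume_kernel SCRIPT (hypothetical helper)
# Inserts "unset LD_ASSUME_KERNEL" right after every
# "export LD_ASSUME_KERNEL" line in SCRIPT, keeping a .bak copy.
neutralize_ld_assume_kernel() {
    script=$1
    # Already patched: do nothing, so reruns are harmless.
    if grep -q '^unset LD_ASSUME_KERNEL$' "$script"; then
        return 0
    fi
    cp -p "$script" "$script.bak" || return 1
    # Rewrite in place from the backup so permissions on SCRIPT are kept.
    awk '
        { print }
        /^[ \t]*export[ \t]+LD_ASSUME_KERNEL/ { print "unset LD_ASSUME_KERNEL" }
    ' "$script.bak" > "$script"
}
```

Under this assumption it would be applied to the affected wrapper scripts, for example `vipca` and `srvctl` in the `bin` directory of the CRS home, before starting `vipca` again.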

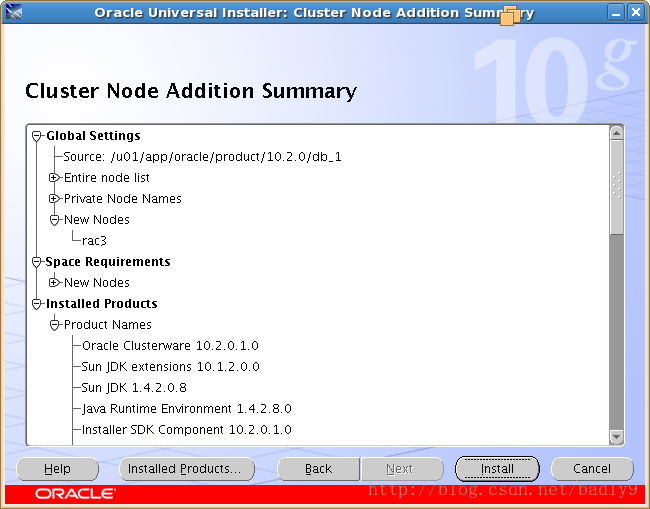

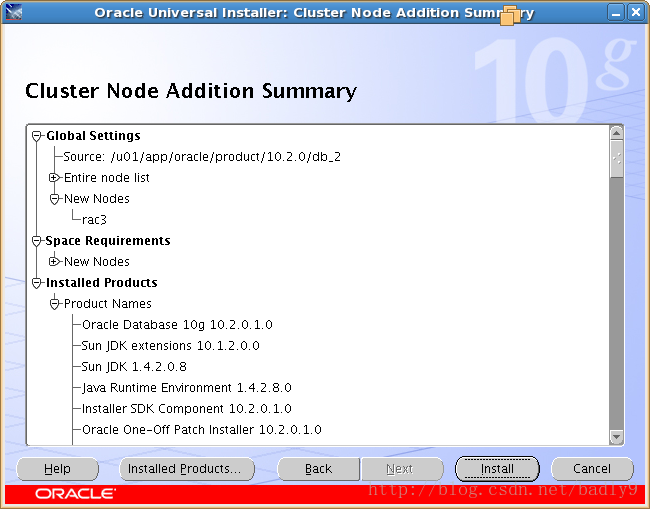

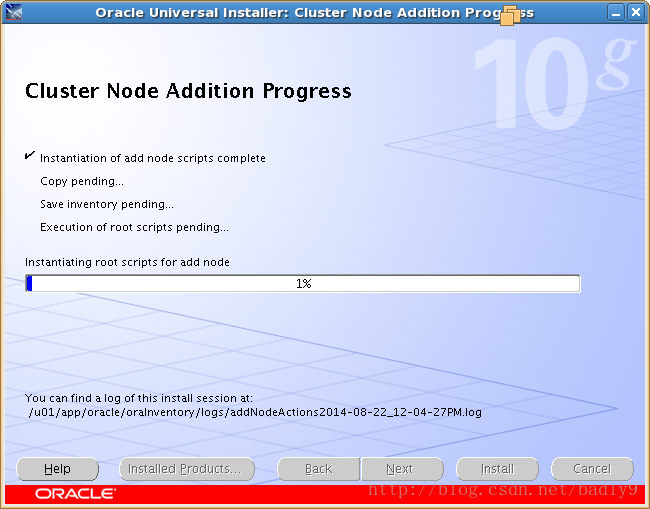

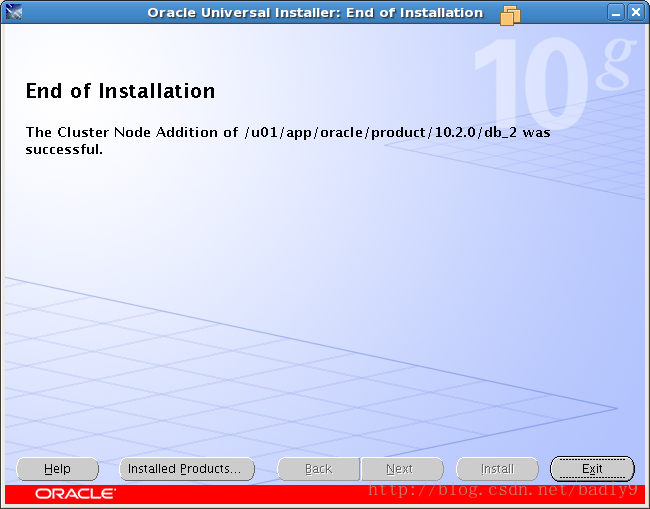

Install the database software on rac3:

On rac1, run $ORACLE_HOME/oui/bin/addNode.sh as the oracle user:

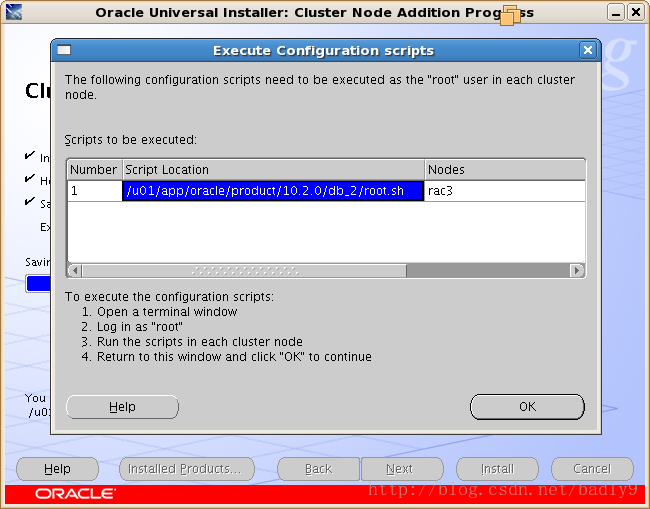

[root@rac3 ~]# /u01/app/oracle/product/10.2.0/db_2/root.sh

Running Oracle10 root.sh script...

The following environment variables are set as:

ORACLE_OWNER= oracle

ORACLE_HOME= /u01/app/oracle/product/10.2.0/db_2

Enter the full pathname of the local bin directory: [/usr/local/bin]:

Copying dbhome to /usr/local/bin ...

Copying oraenv to /usr/local/bin ...

Copying coraenv to /usr/local/bin ...

Creating /etc/oratab file...

Entries will be added to the /etc/oratab file as needed by

Database Configuration Assistant when a database is created

Finished running generic part of root.sh script.

Now product-specific root actions will be performed.

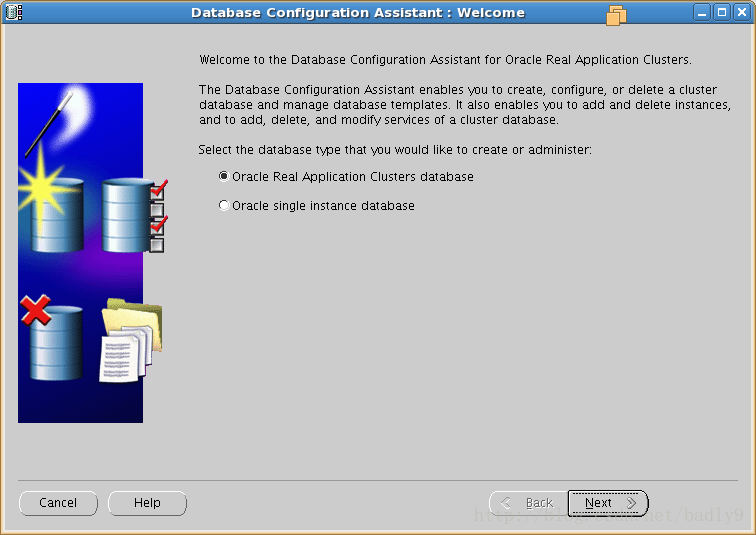

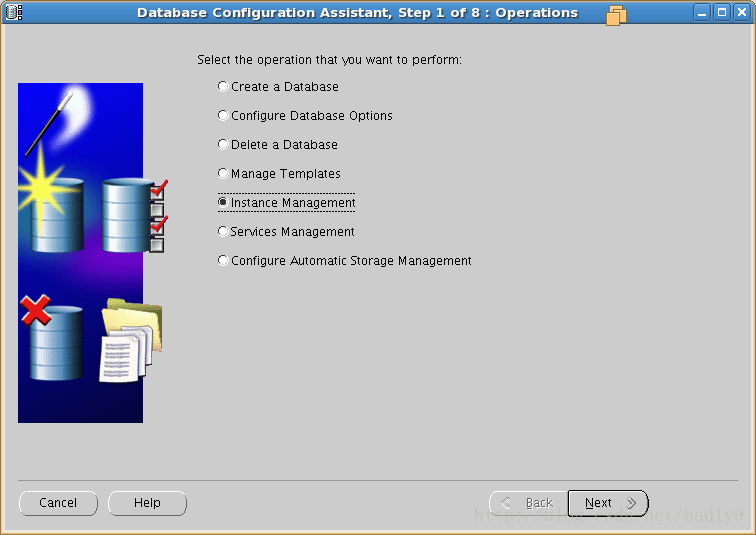

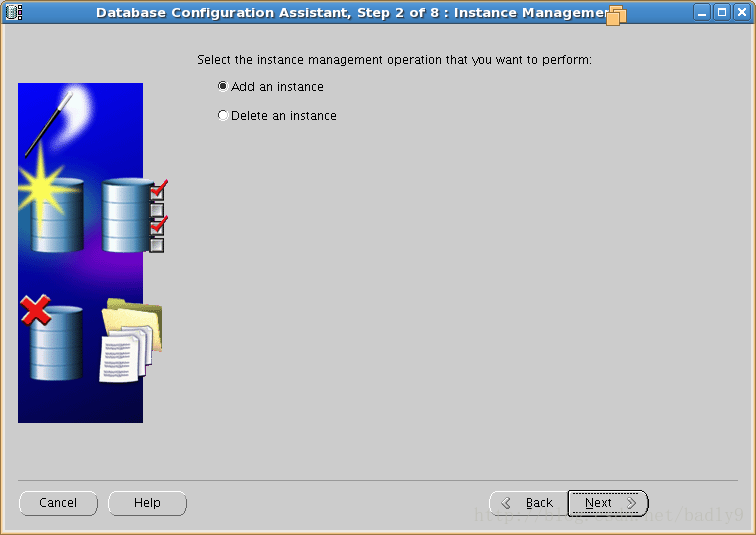

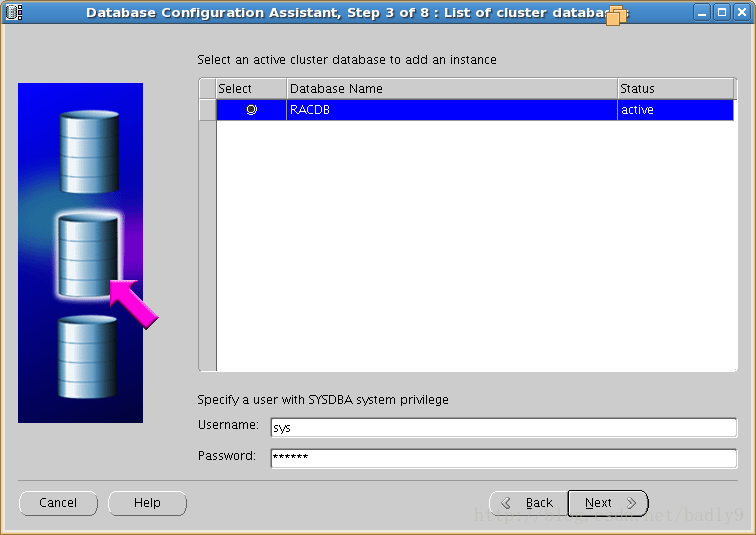

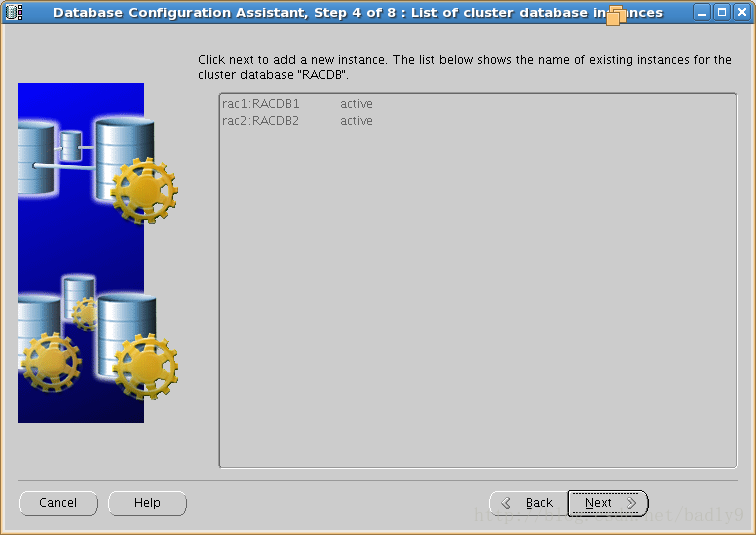

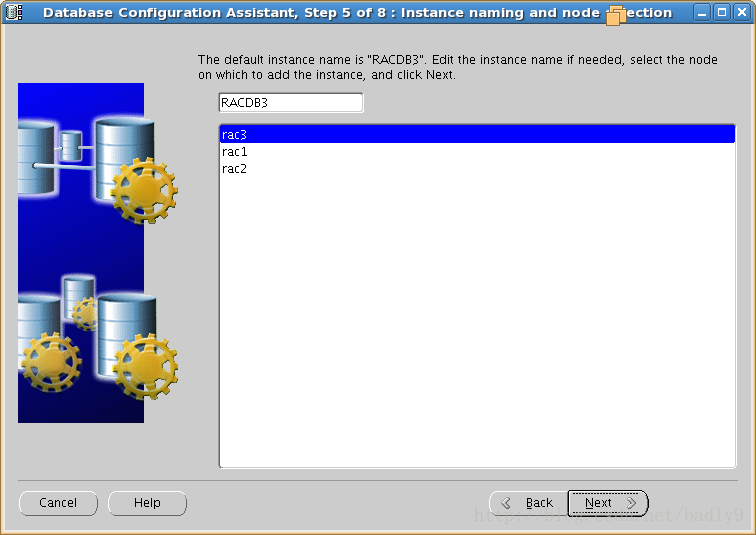

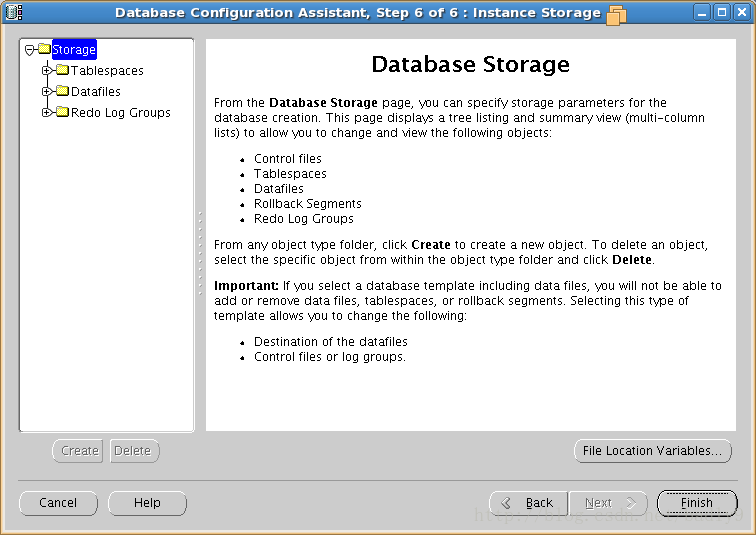

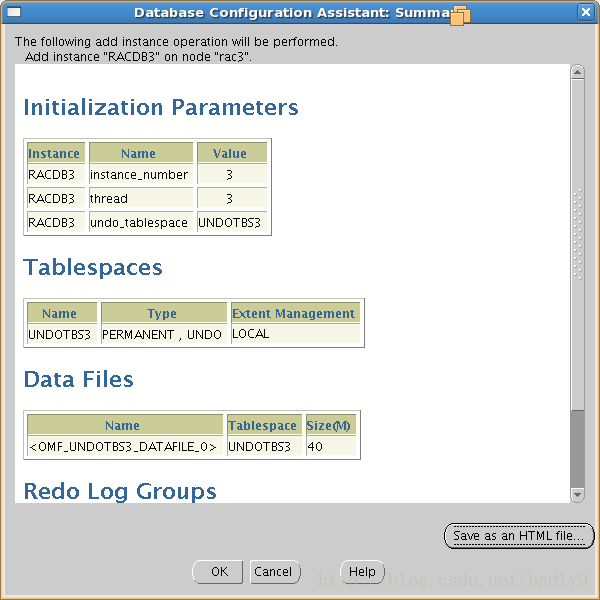

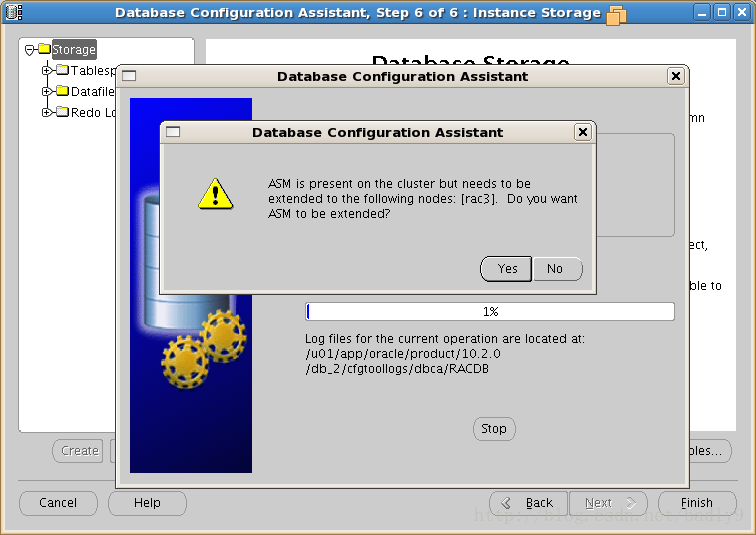

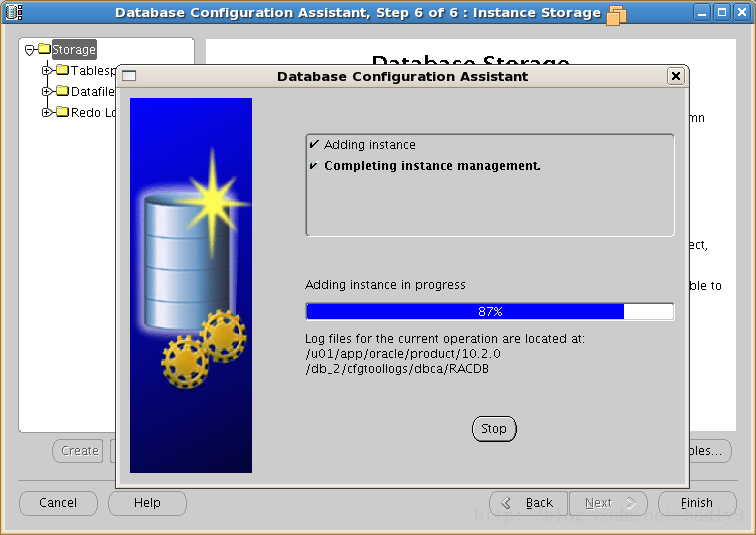

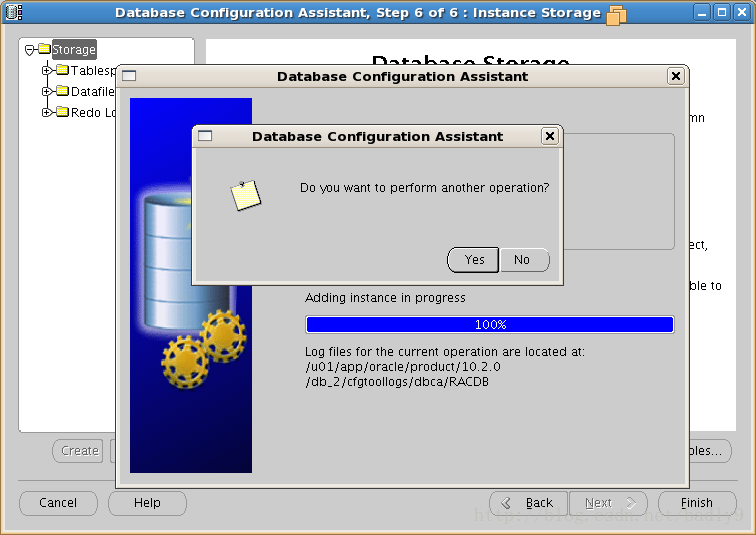

Then use dbca on rac1 to add the instance:

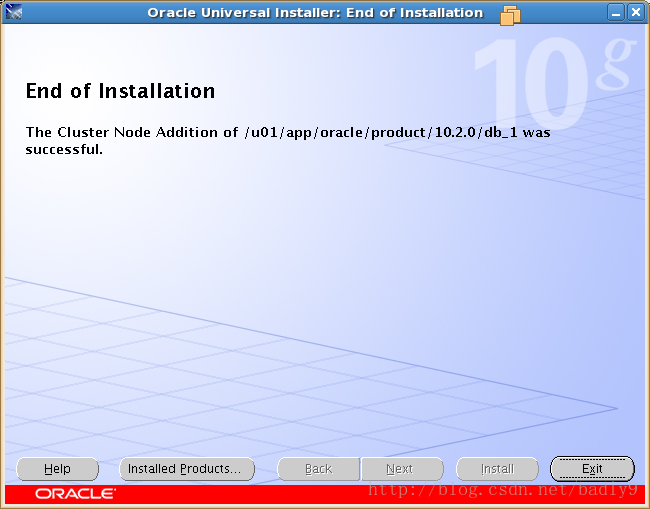

The node has now been added:

[oracle@rac1 crsd]$ crs_stat -t

Name Type Target State Host

------------------------------------------------------------

ora....B1.inst application ONLINE ONLINE rac1

ora....B2.inst application ONLINE ONLINE rac2

ora....B3.inst application ONLINE ONLINE rac3

ora.RACDB.db application ONLINE ONLINE rac2

ora....SM1.asm application ONLINE ONLINE rac1

ora....C1.lsnr application ONLINE ONLINE rac1

ora.rac1.gsd application ONLINE ONLINE rac1

ora.rac1.ons application ONLINE ONLINE rac1

ora.rac1.vip application ONLINE ONLINE rac1

ora....SM2.asm application ONLINE ONLINE rac2

ora....C2.lsnr application ONLINE ONLINE rac2

ora.rac2.gsd application ONLINE ONLINE rac2

ora.rac2.ons application ONLINE ONLINE rac2

ora.rac2.vip application ONLINE ONLINE rac2

ora....SM3.asm application ONLINE ONLINE rac3

ora....C3.lsnr application ONLINE ONLINE rac3

ora.rac3.gsd application ONLINE ONLINE rac3

ora.rac3.ons application ONLINE ONLINE rac3

ora.rac3.vip application ONLINE ONLINE rac3
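
With this many resources it is easy to overlook one that did not come up. The sketch below filters `crs_stat -t` output and prints only resources whose Target or State is not ONLINE; the function name `offline_resources` is hypothetical.

```shell
#!/bin/sh
# offline_resources (hypothetical helper)
# Reads "crs_stat -t" output on stdin and prints the name of every
# resource whose Target or State column is not ONLINE.
offline_resources() {
    # Resource rows start with "ora"; the Host column may be empty
    # when a resource is offline, so only four fields are required.
    awk 'NF >= 4 && $1 ~ /^ora/ && ($3 != "ONLINE" || $4 != "ONLINE") { print $1 }'
}
```

Used as `crs_stat -t | offline_resources`, it prints nothing when every listed resource is ONLINE, as in the output above.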