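Installing Oracle 11.2.0.3 Grid Infrastructure on CentOS 6.5 x86_64

1. Pre-installation preparation

1.1 Configure node1

1.1.1 Configure the virtual machines and install CentOS

Install node1-----

1. Install node1

1.1 Add a 30 GB hard disk, 1 GB of memory, 2 processors, and two NAT network adapters

1.2 Set the hostname and configure the network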

node1.localdomain 192.168.0.3/255.255.255.0/192.168.0.1 eth0

node1-priv.localdomain 10.10.10.31/255.255.255.0/10.10.10.1 eth1

1.3 Create the partition layout

/boot-256m /-8G /tmp-2G swap-3G /u01-10G

Grid installation requirements:

memory-1.5G swap-2.7G tmp-1G

1.4 Minimal installation

209 packages installed

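1.5 Command line (the firewall and SELinux were not disabled)

With the network adapters set to host-only, ping works

Problems:

(1) One of the network adapters was not saved

Add a host-only network adapter

# ifconfig -a ##an extra HWaddr appears

# cd /etc/sysconfig/network-scripts/

# cp /etc/sysconfig/network-scripts/ifcfg-eth0 ifcfg-eth1 ##then set DEVICE=eth1, the new HWaddr and IPADDR=10.10.10.31 in the copy

# ping 10.10.10.31 ##ping works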
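A copied ifcfg-eth1 still describes eth0 until its identifying keys are changed. The following is a minimal sketch of that edit, assuming the usual CentOS 6 ifcfg keys (DEVICE, HWADDR, UUID, IPADDR, NETMASK) and GNU `sed -i`; the function `retarget_ifcfg` is hypothetical, and the MAC address passed to it must be the new HWaddr reported by `ifconfig -a`.

```shell
#!/bin/sh
# Hypothetical helper: turn a copied ifcfg file into the definition of another interface.
# Usage: retarget_ifcfg FILE DEVICE HWADDR IPADDR NETMASK
retarget_ifcfg() {
    file=$1
    # Remove the keys that still identify the old interface; the UUID belongs
    # to eth0's connection, so it is dropped rather than reused.
    sed -i -e '/^DEVICE=/d' -e '/^HWADDR=/d' -e '/^UUID=/d' \
           -e '/^IPADDR=/d' -e '/^NETMASK=/d' "$file"
    # Append the values for the new interface; other keys (ONBOOT, BOOTPROTO) are kept.
    printf 'DEVICE=%s\nHWADDR=%s\nIPADDR=%s\nNETMASK=%s\n' "$2" "$3" "$4" "$5" >> "$file"
}
```

For example, `retarget_ifcfg ifcfg-eth1 eth1 <new HWaddr> 10.10.10.31 255.255.255.0`, followed by `service network restart`. Deleting and re-appending the keys, instead of editing them in place, keeps the result correct even when a key is missing from the copy.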

Install node2-----

1. Install node2

1.1 Add a 30 GB hard disk, 1 GB of memory, 2 processors, and two host-only network adapters

1.2 Set the hostname and configure the network

node2.localdomain 192.168.0.4/255.255.255.0/192.168.0.1 eth0

node2-priv.localdomain 10.10.10.32/255.255.255.0/10.10.10.1 eth1

1.3 Create the partition layout

/boot-256m /-8G /tmp-2G swap-3G /u01-10G

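1.4 Minimal installation

209 packages installed

1.5 Command line

Host-only adapters: after a reboot, ping 192.168.0.4 works directly

1.1.2 node1: configure yum and install X Window and Desktop

1. node1 and node2 ping each other; every address responds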

[root@node1 ~]# ping 192.168.0.3

[root@node1 ~]# ping 192.168.0.4

[root@node1 ~]# ping 10.10.10.31

[root@node1 ~]# ping 10.10.10.32

[root@node2 ~]# ping 10.10.10.31

[root@node2 ~]# ping 10.10.10.32

[root@node2 ~]# ping 192.168.0.3

[root@node2 ~]# ping 192.168.0.4

2. Configure yum on node1 and install the X Window System and the desktop

# mount /dev/cdrom /mnt

# cd /etc/yum.repos.d/

# mv CentOS-Base.repo CentOS-Base.repo.bak

# cp CentOS-Base.repo.bak CentOS-Base.repo

# vi CentOS-Base.repo

[base]

name=CentOS-$releasever - Base

#mirrorlist=http://mirrorlist.centos.org/?release=$releasever&arch=$basearch&repo=os

baseurl=file:///mnt/Packages

gpgcheck=1

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-CentOS-6

# yum clean all

# rm CentOS-Base.repo

# cp CentOS-Media.repo CentOS-Media.repo.bak

# vi CentOS-Media.repo ##only baseurl needs to change

[c6-media]

name=CentOS-$releasever - Media

baseurl=file:///mnt/

gpgcheck=1

enabled=0

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-CentOS-6

# yum --disablerepo=* --enablerepo=c6-media update

Loaded plugins: fastestmirror

Determining fastest mirrors

c6-media | 4.0 kB 00:00 ...

c6-media/primary_db | 4.4 MB 00:00 ...

Setting up Update Process

No Packages marked for Update

# yum --disablerepo=* --enablerepo=c6-media clean all

# yum --disablerepo=* --enablerepo=c6-media makecache

# yum --disablerepo=* --enablerepo=c6-media groupinstall "Desktop" ##294 packages to install; all system-provided .repo repositories are disabled

# yum --disablerepo=* --enablerepo=c6-media groupinstall "X Window System"
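Because the media repository is defined with `enabled=0`, every yum command above needs `--disablerepo=* --enablerepo=c6-media`. A sketch that writes the same repository definition non-interactively, assuming the DVD is mounted at the given directory; `write_media_repo` is a hypothetical name. Note that `baseurl` must point at the directory that contains `repodata/` (the DVD root), not at `Packages/`.

```shell
#!/bin/sh
# Hypothetical helper: write a yum repo definition for a mounted CentOS 6 DVD.
# Usage: write_media_repo OUTFILE MOUNTPOINT
write_media_repo() {
    out=$1
    mnt=$2
    # \$releasever stays literal so yum expands it; ${mnt} is expanded here.
    cat > "$out" <<EOF
[c6-media]
name=CentOS-\$releasever - Media
baseurl=file://${mnt}/
gpgcheck=1
enabled=0
gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-CentOS-6
EOF
}
```

For example, `write_media_repo /etc/yum.repos.d/CentOS-Media.repo /mnt`. Keeping `enabled=0` means the DVD is only used when it is explicitly enabled, so yum does not fail when the disc is not mounted.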

3. Install VNC

# rpm -qa |grep vnc

# yum install tigervnc tigervnc-server

Loaded plugins: fastestmirror, refresh-packagekit

Loading mirror speeds from cached hostfile

Setting up Install Process

No package tigervnc available.

No package tigervnc-server available.

# cd /mnt/Packages/

# rpm -ivh tigervnc*

error: Failed dependencies:

perl is needed by tigervnc-server-1.1.0-5.el6_4.1.x86_64

xorg-x11-fonts-misc is needed by tigervnc-server-1.1.0-5.el6_4.1.x86_64

# yum --disablerepo=* --enablerepo=c6-media install vnc

# rpm -qa |grep vnc

tigervnc-1.1.0-5.el6_4.1.x86_64 ##only tigervnc was installed

# yum --disablerepo=* --enablerepo=c6-media install tigervnc tigervnc-server

Running Transaction

Installing : 1:perl-Pod-Escapes-1.04-136.el6.x86_64 1/9

Installing : 4:perl-libs-5.10.1-136.el6.x86_64 2/9

Installing : 1:perl-Pod-Simple-3.13-136.el6.x86_64 3/9

Installing : 3:perl-version-0.77-136.el6.x86_64 4/9

Installing : 1:perl-Module-Pluggable-3.90-136.el6.x86_64 5/9

Installing : 4:perl-5.10.1-136.el6.x86_64 6/9

Installing : 1:xorg-x11-font-utils-7.2-11.el6.x86_64 7/9

Installing : xorg-x11-fonts-misc-7.2-9.1.el6.noarch 8/9

Installing : tigervnc-server-1.1.0-5.el6_4.1.x86_64

# vi /etc/sysconfig/vncservers

VNCSERVERS="1:root"

VNCSERVERARGS[1]="-geometry 1024x768 -nolisten tcp"

# vncpasswd

# service vncserver start

Starting VNC server: 1:root xauth: creating new authority file /root/.Xauthority

xauth: (stdin):1: bad display name "node1.localdomain:1" in "add" command

New 'node1.localdomain:1 (root)' desktop is node1.localdomain:1

Creating default startup script /root/.vnc/xstartup

Starting applications specified in /root/.vnc/xstartup

Log file is /root/.vnc/node1.localdomain:1.log

[ OK ]

# cd ~/.vnc/

# vi xstartup

#!/bin/sh

export LANG

#unset SESSION_MANAGER

#exec /etc/X11/xinit/xinitrc

[ -x /etc/vnc/xstartup ] && exec /etc/vnc/xstartup

[ -r $HOME/.Xresources ] && xrdb $HOME/.Xresources

xsetroot -solid grey

vncconfig -iconic &

xterm -geometry 80x24+10+10 -ls -title "$VNCDESKTOP Desktop" &

twm &

gnome-session &

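Required: when VNC Viewer connects to the CentOS server, make sure the corresponding firewall port is open (or the firewall is disabled); otherwise the viewer reports error 10060 (connection timed out). Use the following commands to add a rule that accepts TCP packets on that port: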

# iptables -I INPUT 1 -p tcp --dport 5901 -j ACCEPT

# service iptables save

# service iptables restart
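The port in the rule follows from the display number: a VNC server on display :N listens on TCP port 5900+N, so `1:root` in /etc/sysconfig/vncservers means port 5901. A small sketch of that arithmetic; the function names are hypothetical, and the rule is printed rather than executed.

```shell
#!/bin/sh
# Sketch: TCP port used by VNC display :N (5900 + N).
vnc_port() {
    echo $((5900 + $1))
}

# Print, without running it, the iptables rule that opens the port for display :N.
vnc_iptables_rule() {
    echo "iptables -I INPUT 1 -p tcp --dport $(vnc_port "$1") -j ACCEPT"
}
```

For a second display such as `2:grid`, `vnc_iptables_rule 2` prints the rule for port 5902.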

Problems

(1) Could not open/read file:///mnt/Packages/repodata/repomd.xml

Set baseurl to file:///mnt/

1.1.3 node1: edit the /etc/hosts file

1.

1.1

# vi /etc/hosts

#node1

192.168.0.3 node1.localdomain node1

192.168.0.5 node1-vip.localdomain node1-vip

10.10.10.31 node1-priv.localdomain node1-priv

#node2

192.168.0.4 node2.localdomain node2

192.168.0.6 node2-vip.localdomain node2-vip

10.10.10.32 node2-priv.localdomain node2-priv

#scan-ip

192.168.0.8 scan-cluster.localdomain scan-cluster

******Repeat the same steps on node2
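The entries follow a fixed pattern per node (public name, `-vip`, `-priv`), plus one SCAN line. A sketch that prints them from the addresses in the table, assuming that naming scheme; `print_node_hosts` and `print_scan_host` are hypothetical helpers. The output can be reviewed and then appended to /etc/hosts on both nodes.

```shell
#!/bin/sh
# Sketch: print the /etc/hosts block for one RAC node.
# Usage: print_node_hosts DOMAIN NAME PUBLIC_IP VIP PRIVATE_IP
print_node_hosts() {
    echo "#$2"
    echo "$3 $2.$1 $2"
    echo "$4 $2-vip.$1 $2-vip"
    echo "$5 $2-priv.$1 $2-priv"
}

# Sketch: print the SCAN entry.
# Usage: print_scan_host DOMAIN SCAN_NAME SCAN_IP
print_scan_host() {
    echo "#scan-ip"
    echo "$3 $2.$1 $2"
}
```

For example, `print_node_hosts localdomain node2 192.168.0.4 192.168.0.6 10.10.10.32` produces the node2 block, including the correctly spelled `node2-priv` alias.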

1.1.4 node1: configure DNS so that the SCAN IP can be resolved

1.

1.1 Install the bind service

# ping 192.168.0.5 ##no response

# ping 192.168.0.15 ##no response

[root@node1 ~]# yum --disablerepo=* --enablerepo=c6-media install bind

1.2 Configure the /var/named/chroot/etc/named.conf file

*After looking into it: DNS only needs bind, bind-chroot and bind-utils

# yum --disablerepo=* --enablerepo=c6-media remove bind*

# yum --disablerepo=* --enablerepo=c6-media install bind bind-chroot bind-utils

1.3 Configure /etc/named.conf (not /var/named/chroot/etc/named.conf)

1.3.1 Edit the main configuration file

# cp -p /etc/named.conf named.conf.bak

# vi /etc/named.conf

listen-on port 53 { any; };

allow-query { any; };

# cp -p /etc/named.rfc1912.zones named.rfc1912.zones.bak

1.3.2 Configure the zone files

# vi /etc/named.rfc1912.zones ##append the following

zone "localdomain" IN {

type master;

file "forward.zone";

allow-update { none; };

};

zone "0.168.192.in-addr.arpa" IN {

type master;

file "reverse.zone";

allow-update { none; };

};

1.3.3 Create the forward lookup zone file

# cd /var/named/

# cp -p named.localhost forward.zone

# vi forward.zone ##add the following to the end of the forward zone file

$TTL 1D

@ IN SOA node1.localdomain. root.localdomain. (

0 ; serial

1D ; refresh

1H ; retry

1W ; expire

3H ) ; minimum

IN NS node1.localdomain.

node1 IN A 192.168.0.3

scan-cluster.localdomain IN A 192.168.0.8

scan-cluster IN A 192.168.0.8


1.3.4 Create the reverse lookup zone file

# cp -p named.loopback reverse.zone

# vi reverse.zone ##add the following to the end of the reverse zone file

$TTL 1D

@ IN SOA node1.localdomain. root.localdomain. (

0 ; serial

1D ; refresh

1H ; retry

1W ; expire

3H ) ; minimum

IN NS node1.localdomain.

3 IN PTR node1.localdomain.

8 IN PTR scan-cluster.localdomain.

8 IN PTR scan-cluster.

# service named restart

# vi /etc/resolv.conf ##append the following

search localdomain

nameserver 192.168.0.3
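The reverse zone name is the first three octets of the /24 network written backwards under `in-addr.arpa`, and each PTR record is named by the last octet, so 192.168.0.8 becomes `8` in zone `0.168.192.in-addr.arpa`. A sketch of that mapping, assuming a /24 network as used here; the function names are hypothetical.

```shell
#!/bin/sh
# Sketch: reverse zone for the /24 network that contains an IPv4 address.
reverse_zone() {
    echo "$1" | awk -F. '{ print $3 "." $2 "." $1 ".in-addr.arpa" }'
}

# Sketch: PTR record name (last octet) inside that zone.
ptr_label() {
    echo "$1" | awk -F. '{ print $4 }'
}
```

After `service named restart`, `nslookup scan-cluster` and `nslookup 192.168.0.8` (from bind-utils) can confirm that both directions resolve.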

1.1.5 Check the software requirements

1.1 Check the OS version

# cat /etc/issue

CentOS release 6.5 (Final)

Kernel \r on an \m

# cat /proc/version

Linux version 2.6.32-431.el6.x86_64 ([email protected]) (gcc version 4.4.7 20120313 (Red Hat 4.4.7-4) (GCC) ) #1 SMP Fri Nov 22 03:15:09 UTC 2013

1.2 Check the kernel

# uname -r

2.6.32-431.el6.x86_64

1.3 Check the required packages

binutils-2.20.51.0.2-5.11.el6 (x86_64)

compat-libcap1-1.10-1 (x86_64)

compat-libstdc++-33-3.2.3-69.el6 (x86_64)

compat-libstdc++-33-3.2.3-69.el6.i686

gcc-4.4.4-13.el6 (x86_64)

gcc-c++-4.4.4-13.el6 (x86_64)

glibc-2.12-1.7.el6 (i686)

glibc-2.12-1.7.el6 (x86_64)

glibc-devel-2.12-1.7.el6 (x86_64)

glibc-devel-2.12-1.7.el6.i686

ksh

libgcc-4.4.4-13.el6 (i686)

libgcc-4.4.4-13.el6 (x86_64)

libstdc++-4.4.4-13.el6 (x86_64)

libstdc++-4.4.4-13.el6.i686

libstdc++-devel-4.4.4-13.el6 (x86_64)

libstdc++-devel-4.4.4-13.el6.i686

libaio-0.3.107-10.el6 (x86_64)

libaio-0.3.107-10.el6.i686

libaio-devel-0.3.107-10.el6 (x86_64)

libaio-devel-0.3.107-10.el6.i686

make-3.81-19.el6

sysstat-9.0.4-11.el6 (x86_64)

# rpm -qa |grep binutils

binutils-2.20.51.0.2-5.36.el6.x86_64

# rpm -qa |grep compat-libcap

# yum --disablerepo=* --enablerepo=c6-media install compat-libcap

Loaded plugins: fastestmirror, refresh-packagekit

Loading mirror speeds from cached hostfile

Setting up Install Process

No package compat-libcap available.

Error: Nothing to do

# yum --disablerepo=* --enablerepo=c6-media install compat-libcap1

# rpm -qa |grep compat-libstdc++

# yum --disablerepo=* --enablerepo=c6-media install compat-libstdc++*

# rpm -qa |grep gcc

libgcc-4.4.7-4.el6.x86_64

libgcc-4.4.7-4.el6.i686

# yum --disablerepo=* --enablerepo=c6-media install gcc

# yum --disablerepo=* --enablerepo=c6-media install gcc-c++

# rpm -qa |grep gcc

gcc-4.4.7-4.el6.x86_64

libgcc-4.4.7-4.el6.x86_64

libgcc-4.4.7-4.el6.i686

gcc-c++-4.4.7-4.el6.x86_64

# rpm -qa |grep glibc

glibc-common-2.12-1.132.el6.x86_64

glibc-2.12-1.132.el6.x86_64

glibc-2.12-1.132.el6.i686

glibc-devel-2.12-1.132.el6.x86_64

glibc-headers-2.12-1.132.el6.x86_64

# yum --disablerepo=* --enablerepo=c6-media install glibc-devel

Loaded plugins: fastestmirror, refresh-packagekit

Loading mirror speeds from cached hostfile

Setting up Install Process

Package glibc-devel-2.12-1.132.el6.x86_64 already installed and latest version

Nothing to do

# yum --disablerepo=* --enablerepo=c6-media install glibc-devel.i686 *****

# rpm -qa |grep libgcc

libgcc-4.4.7-4.el6.x86_64

libgcc-4.4.7-4.el6.i686

# rpm -qa |grep libstdc++

libstdc++-4.4.7-4.el6.x86_64

compat-libstdc++-296-2.96-144.el6.i686

libstdc++-devel-4.4.7-4.el6.x86_64

compat-libstdc++-33-3.2.3-69.el6.x86_64

# yum --disablerepo=* --enablerepo=c6-media install libstdc++.i686

# yum --disablerepo=* --enablerepo=c6-media install libstdc++-devel.i686

# rpm -qa |grep libaio ##note the installation approach below

# yum --disablerepo=* --enablerepo=c6-media install libaio*

# rpm -qa |grep libaio

libaio-devel-0.3.107-10.el6.x86_64

libaio-0.3.107-10.el6.x86_64

# yum --disablerepo=* --enablerepo=c6-media install libaio.i686

# yum --disablerepo=* --enablerepo=c6-media install libaio-devel.i686

# rpm -qa |grep ksh

# yum --disablerepo=* --enablerepo=c6-media install ksh

# rpm -qa |grep make

make-3.81-20.el6.x86_64

# yum --disablerepo=* --enablerepo=c6-media install libXp.i686

# yum --disablerepo=* --enablerepo=c6-media install libXt.i686
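Instead of grepping for each package one at a time, the required list can be compared against the installed NAME.ARCH list in one pass. A sketch, assuming the installed list comes from `rpm -qa --queryformat '%{NAME}.%{ARCH}\n'`; the `REQUIRED` variable (copied from the list above without versions, with ksh assumed to be x86_64) and `missing_packages` are hypothetical. Only presence is checked, not versions.

```shell
#!/bin/sh
# Required NAME.ARCH entries, taken from the list in 1.3 (versions omitted).
REQUIRED="binutils.x86_64 compat-libcap1.x86_64 compat-libstdc++-33.x86_64
compat-libstdc++-33.i686 gcc.x86_64 gcc-c++.x86_64 glibc.i686 glibc.x86_64
glibc-devel.x86_64 glibc-devel.i686 ksh.x86_64 libgcc.i686 libgcc.x86_64
libstdc++.x86_64 libstdc++.i686 libstdc++-devel.x86_64 libstdc++-devel.i686
libaio.x86_64 libaio.i686 libaio-devel.x86_64 libaio-devel.i686
make.x86_64 sysstat.x86_64"

# Read installed NAME.ARCH lines on stdin; print each required entry not found.
missing_packages() {
    installed=$(cat)
    for pkg in $REQUIRED; do
        printf '%s\n' "$installed" | grep -qxF "$pkg" || echo "$pkg"
    done
}
```

Usage: `rpm -qa --queryformat '%{NAME}.%{ARCH}\n' | missing_packages`; an empty result means every listed package is present.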

1.1.6 Configure kernel parameters

# vi /etc/sysctl.conf ##the default shmmax/shmall values are larger

fs.aio-max-nr = 1048576

fs.file-max = 6815744

kernel.shmall = 2097152

kernel.shmmax = 536870912

kernel.shmmni = 4096

kernel.sem = 250 32000 100 128

net.ipv4.ip_local_port_range = 9000 65500

net.core.rmem_default = 262144

net.core.rmem_max = 4194304

net.core.wmem_default = 262144

net.core.wmem_max = 1048576

# /sbin/sysctl –p ##the "–" here is an en dash pasted from a web page, not a hyphen, hence the error below

error: "–p" is an unknown key

# sysctl -p
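`sysctl -p` reports what it loaded but not whether each value meets the required minimum. A sketch that checks single-valued keys in a sysctl.conf-style file, assuming `key = value` lines where the last occurrence wins; `check_sysctl_min` is a hypothetical helper, and multi-valued keys such as `kernel.sem` and `net.ipv4.ip_local_port_range` are out of scope.

```shell
#!/bin/sh
# Sketch: check that KEY in FILE is at least MIN.
# Prints "OK key value", "LOW key value (< min)" or "MISSING key".
# Usage: check_sysctl_min FILE KEY MIN
check_sysctl_min() {
    # Split on "=", strip blanks, and keep the last matching value.
    val=$(awk -F= -v k="$2" '
        { gsub(/[ \t]/, "", $1); if ($1 == k) { v = $2 } }
        END { gsub(/[ \t]/, "", v); print v }' "$1")
    if [ -z "$val" ]; then
        echo "MISSING $2"
        return 1
    elif [ "$val" -ge "$3" ]; then
        echo "OK $2 $val"
    else
        echo "LOW $2 $val (< $3)"
        return 1
    fi
}
```

Usage: `check_sysctl_min /etc/sysctl.conf fs.file-max 6815744`. Commented-out lines are ignored because their key starts with `#`.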

1.1.7 Configure session limits

# vi /etc/pam.d/login ##append at the end

session required pam_limits.so

1.1.8 Resource limits for the software installation users

# vi /etc/security/limits.conf

oracle soft nproc 204800

oracle hard nproc 204800

oracle soft nofile 204800

oracle hard nofile 204800

oracle soft stack 204800

grid soft nproc 204800

grid hard nproc 204800

grid soft nofile 204800

grid hard nofile 204800

grid soft stack 204800
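The oracle and grid entries share one pattern: soft and hard `nproc` and `nofile`, plus a soft `stack`, all at 204800. A sketch that prints that block for any list of users; `limits_lines` is hypothetical, and its output is meant to be reviewed before it is appended to /etc/security/limits.conf.

```shell
#!/bin/sh
# Sketch: print limits.conf lines for each user.
# Usage: limits_lines VALUE USER...
limits_lines() {
    value=$1
    shift
    for user in "$@"; do
        for item in nproc nofile; do
            echo "$user soft $item $value"
            echo "$user hard $item $value"
        done
        # Only a soft stack limit is set, as in the listing above.
        echo "$user soft stack $value"
    done
}
```

Usage: `limits_lines 204800 oracle grid`. Generating both users from one function avoids the kind of omission where one user ends up without a `soft nproc` line.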

1.1.9 Create groups and users, and set environment variables

# groupadd -g 1000 oinstall

# groupadd -g 1200 asmadmin

# groupadd -g 1201 asmdba

# groupadd -g 1202 asmoper

# useradd -u 1100 -g oinstall -G asmadmin,asmdba,asmoper -d /home/grid -s /bin/bash grid

# passwd grid

# vi /home/grid/.bash_profile

# Get the aliases and functions

if [ -f ~/.bashrc ]; then

. ~/.bashrc

fi

# User specific environment and startup programs

export PATH=$PATH:$HOME/bin

export PS1="`/bin/hostname -s`->"

export TMP=/tmp

export TMPDIR=$TMP

export ORACLE_SID=+ASM1

export ORACLE_BASE=/u01/app/grid

export ORACLE_HOME=/u01/app/11.2.0/grid

export TNS_ADMIN=$ORACLE_HOME/network/admin

export PATH=/usr/sbin:$PATH

export PATH=$PATH:$ORACLE_HOME/bin

export LD_LIBRARY_PATH=$ORACLE_HOME/lib:/lib:/usr/lib

# source /home/grid/.bash_profile

# groupadd -g 1300 dba

# groupadd -g 1301 oper

# useradd -u 1101 -g oinstall -G dba,oper,asmdba -d /home/oracle -s /bin/bash oracle

# passwd oracle

# vi /home/oracle/.bash_profile

# Get the aliases and functions

if [ -f ~/.bashrc ]; then

. ~/.bashrc

fi

# User specific environment and startup programs

export PATH=$PATH:$HOME/bin

export PS1="`/bin/hostname -s`->"

export TMP=/tmp

export TMPDIR=$TMP

export ORACLE_HOSTNAME=node1.localdomain

export ORACLE_SID=devdb1

export ORACLE_UNQNAME=devdb

export ORACLE_BASE=/u01/app/oracle

export ORACLE_HOME=$ORACLE_BASE/product/11.2.0/db_1

export TNS_ADMIN=$ORACLE_HOME/network/admin

export PATH=/usr/sbin:$PATH

export PATH=$PATH:$ORACLE_HOME/bin

export LD_LIBRARY_PATH=$ORACLE_HOME/lib:/lib:/usr/lib

# source /home/oracle/.bash_profile

1.1.10 Create directories and set permissions

# mkdir -p /u01/app/grid ##-p also creates missing parent directories

# mkdir -p /u01/app/11.2.0/grid

# chown -R grid:oinstall /u01/app/grid

# chown -R grid:oinstall /u01/app/11.2.0/grid

# mkdir -p /u01/app/oracle

# chown -R oracle:oinstall /u01/app/oracle

Finally, run:

# chmod -R 775 /u01

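1.1.11 Stop the ntp service (a check added in 11gR2): when /etc/ntp.conf is absent, Oracle Cluster Time Synchronization Service (CTSS) runs in active mode and keeps the node clocks in sync itself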

# service ntpd status

ntpd is stopped

# chkconfig ntpd off

#

# mv /etc/ntp.conf /etc/ntp.conf.bak

1.2 Configure node2

On node2, repeat steps 1.1.2 to 1.1.11 from node1, using +ASM2/devdb2 when setting the environment variables
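Only a few variables differ between the nodes: ORACLE_SID (+ASM1/+ASM2 for grid, devdb1/devdb2 for oracle) and, for the oracle user, ORACLE_HOSTNAME. A sketch that prints those lines for a given node number, assuming the nodeN.localdomain naming used here; `grid_env` and `oracle_env` are hypothetical helpers.

```shell
#!/bin/sh
# Sketch: node-specific lines of the grid user's .bash_profile.
# Usage: grid_env NODE_NUMBER
grid_env() {
    echo "export ORACLE_SID=+ASM$1"
    echo "export ORACLE_BASE=/u01/app/grid"
    echo "export ORACLE_HOME=/u01/app/11.2.0/grid"
}

# Sketch: node-specific lines of the oracle user's .bash_profile.
# Usage: oracle_env NODE_NUMBER
oracle_env() {
    echo "export ORACLE_HOSTNAME=node$1.localdomain"
    echo "export ORACLE_SID=devdb$1"
    echo "export ORACLE_UNQNAME=devdb"
    echo "export ORACLE_BASE=/u01/app/oracle"
    # Single quotes keep $ORACLE_BASE unexpanded, as in the profile above.
    echo 'export ORACLE_HOME=$ORACLE_BASE/product/11.2.0/db_1'
}
```

On node2, `oracle_env 2` yields `ORACLE_HOSTNAME=node2.localdomain`, which is easy to get wrong when the node1 profile is copied by hand.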

1.3 Configure SSH user equivalence for the grid and oracle users

##node1

node1->env |grep ORA

ORACLE_UNQNAME=devdb

ORACLE_SID=devdb1

ORACLE_BASE=/u01/app/oracle

ORACLE_HOSTNAME=node1.localdomain

ORACLE_HOME=/u01/app/oracle/product/11.2.0/db_1

##node2

node2->env |grep ORA

ORACLE_UNQNAME=devdb

ORACLE_SID=devdb2

ORACLE_BASE=/u01/app/oracle

ORACLE_HOSTNAME=node1.localdomain ##wrong on node2: set ORACLE_HOSTNAME=node2.localdomain in /home/oracle/.bash_profile

ORACLE_HOME=/u01/app/oracle/product/11.2.0/db_1

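During the Cluster Ready Services (CRS) and RAC installation, Oracle Universal Installer (OUI) must be able to copy the software to all RAC nodes as the oracle user without being prompted for a password.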

##node1

# su - oracle

node1->mkdir ~/.ssh

node1-> chmod 700 ~/.ssh

node1->ssh-keygen -t rsa ##press Enter at every prompt

Generating public/private rsa key pair.

Enter file in which to save the key (/home/oracle/.ssh/id_rsa):

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /home/oracle/.ssh/id_rsa.

Your public key has been saved in /home/oracle/.ssh/id_rsa.pub.

The key fingerprint is:

1f:a5:6f:8b:c9:e9:a3:04:4d:99:27:6a:3d:77:4d:1e oracle@node1.localdomain

node1->ssh-keygen -t dsa

Generating public/private dsa key pair.

Enter file in which to save the key (/home/oracle/.ssh/id_dsa):

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /home/oracle/.ssh/id_dsa.

Your public key has been saved in /home/oracle/.ssh/id_dsa.pub.

The key fingerprint is:

fc:83:1c:73:37:52:50:90:50:aa:8b:41:9f:61:b9:6b oracle@node1.localdomain

##node2

# su - oracle

node2->mkdir ~/.ssh

node2->chmod 700 ~/.ssh

node2->ssh-keygen -t rsa

Generating public/private rsa key pair.

Enter file in which to save the key (/home/oracle/.ssh/id_rsa):

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /home/oracle/.ssh/id_rsa.

Your public key has been saved in /home/oracle/.ssh/id_rsa.pub.

The key fingerprint is:

e9:fd:b0:9b:1e:57:56:07:51:9c:2e:99:33:dc:0d:03 oracle@node2.localdomain

node2->ssh-keygen -t dsa

Generating public/private dsa key pair.

Enter file in which to save the key (/home/oracle/.ssh/id_dsa):

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /home/oracle/.ssh/id_dsa.

Your public key has been saved in /home/oracle/.ssh/id_dsa.pub.

The key fingerprint is:

67:f7:9a:09:e1:9e:c2:4f:6b:9a:e3:71:ce:c4:eb:08 oracle@node2.localdomain

##back on node1

node1->cd ~

node1->ll -a

node1->cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

node1->cat ~/.ssh/id_dsa.pub >> ~/.ssh/authorized_keys

node1->ssh node2 cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

The authenticity of host 'node2 (192.168.0.4)' can't be established.

RSA key fingerprint is 36:5f:59:0d:52:d3:24:68:7d:60:59:da:10:37:b1:47.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added 'node2,192.168.0.4' (RSA) to the list of known hosts.

oracle@node2's password:

node1->ssh node2 cat ~/.ssh/id_dsa.pub >> ~/.ssh/authorized_keys

oracle@node2's password:

*****copy the authorized_keys file to node2

node1->scp ~/.ssh/authorized_keys node2:~/.ssh/authorized_keys

oracle@node2's password:

authorized_keys 100% 2040 2.0KB/s 00:00

##verify oracle user equivalence

Run the following commands on each node; from the second run on, no password should be requested

ssh node1 date

ssh node2 date

ssh node1-priv date

ssh node2-priv date

ssh node1.localdomain date

ssh node2.localdomain date

ssh node1-priv.localdomain date

ssh node2-priv.localdomain date

Configure equivalence for the grid user in the same way
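Each of the eight names has to work for both users, and the first connection to each name has to be accepted once. A sketch that builds the name list and tests each entry, assuming OpenSSH; `ssh_targets` and `verify_ssh` are hypothetical helpers. `BatchMode=yes` makes ssh fail instead of prompting for a password, and `StrictHostKeyChecking=no` records unknown host keys without asking (equivalent to answering "yes" by hand), so it is only appropriate on a trusted private network like this lab.

```shell
#!/bin/sh
# Sketch: every name the installer may use (short/FQDN, public/private).
# Usage: ssh_targets DOMAIN NODE...
ssh_targets() {
    domain=$1
    shift
    for n in "$@"; do
        for h in "$n" "$n-priv"; do
            echo "$h"
            echo "$h.$domain"
        done
    done
}

# Sketch: run "date" on each target without ever prompting for a password.
# Usage: verify_ssh DOMAIN NODE...
verify_ssh() {
    domain=$1
    shift
    for h in $(ssh_targets "$domain" "$@"); do
        if ssh -o BatchMode=yes -o StrictHostKeyChecking=no "$h" date >/dev/null 2>&1; then
            echo "OK   $h"
        else
            echo "FAIL $h"
        fi
    done
}
```

Run `verify_ssh localdomain node1 node2` as oracle and as grid on both nodes; every line should start with OK.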

1.4 Configure the shared disks

C:\Program Files (x86)\VMware\VMware Workstation>vmware-vdiskmanager.exe -c -s 0.5GB -a lsilogic -t 2 "F:\Virtual Machines\share disk\OCRDISK.vmdk"

C:\Program Files (x86)\VMware\VMware Workstation>vmware-vdiskmanager.exe -c -s 0.5GB -a lsilogic -t 2 "F:\Virtual Machines\share disk\VOTINGDISK.vmdk"

C:\Program Files (x86)\VMware\VMware Workstation>vmware-vdiskmanager.exe -c -s 3GB -a lsilogic -t 2 "F:\Virtual Machines\share disk\ASMDISK1.vmdk"

C:\Program Files (x86)\VMware\VMware Workstation>vmware-vdiskmanager.exe -c -s 3GB -a lsilogic -t 2 "F:\Virtual Machines\share disk\ASMDISK2.vmdk"

C:\Program Files (x86)\VMware\VMware Workstation>vmware-vdiskmanager.exe -c -s 3GB -a lsilogic -t 2 "F:\Virtual Machines\share disk\ASMFLASH.vmdk"

Edit F:\Virtual Machines\node2\node2.vmx with UltraEdit, add the following entries, save and exit, then start node2; with these entries only one hard disk is recognized

disk.locking = "FALSE"

diskLib.dataCacheMaxSize = "0"

scsi1.sharedBus = "virtual"

scsi1:0.present = "TRUE"

scsi1:0.fileName = "F:\Virtual Machines\share disk\OCRDISK.vmdk"

scsi1:0.mode = "independent-persistent"

scsi1:0.deviceType = "disk"

scsi1:1.present = "TRUE"

scsi1:1.fileName = "F:\Virtual Machines\share disk\VOTINGDISK.vmdk"

scsi1:1.mode = "independent-persistent"

scsi1:1.deviceType = "disk"

scsi2:0.present = "TRUE"

scsi2:0.fileName = "F:\Virtual Machines\share disk\ASMDISK1.vmdk"

scsi2:0.mode = "independent-persistent"

scsi2:0.deviceType = "disk"

scsi2:1.present = "TRUE"

scsi2:1.fileName = "F:\Virtual Machines\share disk\ASMDISK2.vmdk"

scsi2:1.mode = "independent-persistent"

scsi2:1.deviceType = "disk"

scsi2:2.present = "TRUE"

scsi2:2.fileName = "F:\Virtual Machines\share disk\ASMFLASH.vmdk"

scsi2:2.mode = "independent-persistent"

scsi2:2.deviceType = "disk"

scsi1.virtualDev = "lsilogic"

After changing them to the following, all 6 hard disks are recognized:

disk.locking = "FALSE"

diskLib.dataCacheMaxSize = "0"

scsi1.sharedBus = "virtual"

scsi1.present = "TRUE"

scsi1:0.present = "TRUE"

scsi1:0.fileName = "F:\Virtual Machines\share disk\OCRDISK.vmdk"

scsi1:0.mode = "independent-persistent"

scsi1:0.deviceType = "disk"

scsi1:1.present = "TRUE"

scsi1:1.fileName = "F:\Virtual Machines\share disk\VOTINGDISK.vmdk"

scsi1:1.mode = "independent-persistent"

scsi1:1.deviceType = "disk"

scsi1:2.present = "TRUE"

scsi1:2.fileName = "F:\Virtual Machines\share disk\ASMDISK1.vmdk"

scsi1:2.mode = "independent-persistent"

scsi1:2.deviceType = "disk"

scsi1:3.present = "TRUE"

scsi1:3.fileName = "F:\Virtual Machines\share disk\ASMDISK2.vmdk"

scsi1:3.mode = "independent-persistent"

scsi1:3.deviceType = "disk"

scsi1:4.present = "TRUE"

scsi1:4.fileName = "F:\Virtual Machines\share disk\ASMFLASH.vmdk"

scsi1:4.mode = "independent-persistent"

scsi1:4.deviceType = "disk"

scsi1.virtualDev = "lsilogic"

# fdisk -l ##6 hard disks
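The working layout puts all shared disks on the single controller `scsi1`, with `scsi1.present` set. A sketch that prints that section for any list of disk files, assuming the same settings as above; `vmx_shared_disks` is hypothetical, and its output is meant to be pasted into each node's .vmx while the VM is powered off.

```shell
#!/bin/sh
# Sketch: print the shared-disk section of a .vmx file, one disk per
# scsi1 slot (scsi1:0, scsi1:1, ...).
# Usage: vmx_shared_disks DISK_PATH...
vmx_shared_disks() {
    echo 'disk.locking = "FALSE"'
    echo 'diskLib.dataCacheMaxSize = "0"'
    echo 'scsi1.present = "TRUE"'
    echo 'scsi1.sharedBus = "virtual"'
    echo 'scsi1.virtualDev = "lsilogic"'
    i=0
    for disk in "$@"; do
        # printf keeps the backslashes in Windows paths intact.
        printf 'scsi1:%s.present = "TRUE"\n' "$i"
        printf 'scsi1:%s.fileName = "%s"\n' "$i" "$disk"
        printf 'scsi1:%s.mode = "independent-persistent"\n' "$i"
        printf 'scsi1:%s.deviceType = "disk"\n' "$i"
        i=$((i + 1))
    done
}
```

Passing the five .vmdk paths in the order OCRDISK, VOTINGDISK, ASMDISK1, ASMDISK2, ASMFLASH reproduces the slot numbering of the working configuration.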

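1.5 Install the rpm packages and configure the ASM disks

1. Partition the shared disks (on one node only, either one)

2. Install the ASMLib packages (on every node)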

# rpm -ivh kmod-oracleasm-2.0.6.rh1-3.el6.x86_64.rpm

# rpm -ivh oracleasm-support-2.1.8-1.el5.x86_64.rpm

# rpm -ivh oracleasmlib-2.0.4-1.el5.x86_64.rpm

# oracleasm configure -i

# oracleasm init

3. Wipe the ASM disk headers

# dd if=/dev/zero of=/dev/sdb1 bs=8192 count=12800

12800+0 records in

12800+0 records out

104857600 bytes (105 MB) copied, 2.18097 s, 48.1 MB/s

# dd if=/dev/zero of=/dev/sdc1 bs=8192 count=12800

12800+0 records in

12800+0 records out

104857600 bytes (105 MB) copied, 1.29817 s, 80.8 MB/s

# dd if=/dev/zero of=/dev/sdd1 bs=8192 count=12800

12800+0 records in

12800+0 records out

104857600 bytes (105 MB) copied, 1.31786 s, 79.6 MB/s

# dd if=/dev/zero of=/dev/sde1 bs=8192 count=12800

12800+0 records in

12800+0 records out

104857600 bytes (105 MB) copied, 1.43146 s, 73.3 MB/s

# dd if=/dev/zero of=/dev/sdf1 bs=8192 count=12800

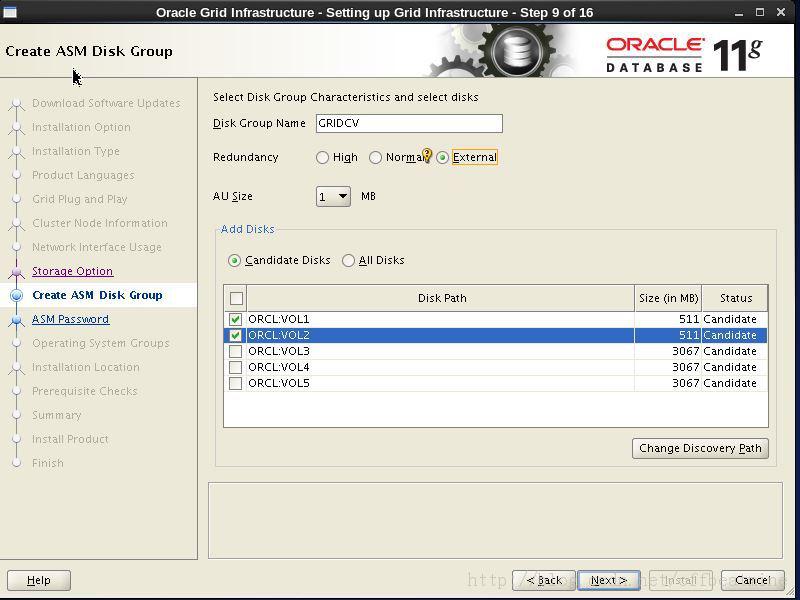
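The five dd commands differ only in the device name. A sketch that prints them for a list of partitions so they can be reviewed before anything is overwritten; `wipe_cmds` is hypothetical. Each command zeroes 8192 x 12800 = 104857600 bytes, which matches the 105 MB that dd reports.

```shell
#!/bin/sh
# Sketch: print (do not run) the dd commands that zero the first
# 104857600 bytes of each listed partition.
# Usage: wipe_cmds PARTITION...
wipe_cmds() {
    for part in "$@"; do
        echo "dd if=/dev/zero of=$part bs=8192 count=12800"
    done
}
```

Usage: `wipe_cmds /dev/sdb1 /dev/sdc1 /dev/sdd1 /dev/sde1 /dev/sdf1`, then pipe the reviewed output to `sh`. Printing first is deliberate: dd destroys whatever it overwrites, so a wrong device name is caught before it does damage.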

4. Map the partitions to ASM disks (on one node only, either one)

# oracleasm scandisks ##refresh the ASM disk information, otherwise it may be out of date

# oracleasm createdisk VOL1 /dev/sdb1

Writing disk header: done

Instantiating disk: done

# oracleasm createdisk VOL2 /dev/sdc1

Writing disk header: done

Instantiating disk: done

# oracleasm createdisk VOL3 /dev/sdd1

Writing disk header: done

# oracleasm createdisk VOL4 /dev/sde1

Writing disk header: done

Instantiating disk: done

# oracleasm createdisk VOL5 /dev/sdf1
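Likewise, the createdisk commands label the partitions VOL1 to VOL5 in order. A sketch that prints those commands; `createdisk_cmds` is hypothetical. After they run on one node, the other node still needs `oracleasm scandisks` before `oracleasm listdisks` shows the disks there.

```shell
#!/bin/sh
# Sketch: print the oracleasm createdisk commands that label the given
# partitions VOL1, VOL2, ... in the order given.
# Usage: createdisk_cmds PARTITION...
createdisk_cmds() {
    i=1
    for part in "$@"; do
        echo "oracleasm createdisk VOL$i $part"
        i=$((i + 1))
    done
}
```

Usage: `createdisk_cmds /dev/sdb1 /dev/sdc1 /dev/sdd1 /dev/sde1 /dev/sdf1`, reviewed and then run as root on one node.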

5. Disable NetworkManager

# chkconfig --level 123456 NetworkManager off

# service NetworkManager stop

Stopping NetworkManager daemon: [ OK ]

1.6 Install the cvuqdisk package, verify the Grid environment, and fix the failed checks

1. Install cvuqdisk (on every node)

# CVUQDISK_GRP=oinstall; export CVUQDISK_GRP

# rpm -qa |grep cvuqdisk

cvuqdisk-1.0.9-1.x86_64

# ll /home/grid/grid/rpm

total 12

-rwxr-xr-x 1 root root 8551 Sep 22 2011 cvuqdisk-1.0.9-1.rpm

# ls -l /usr/sbin/cvuqdisk

-rwsr-xr-x 1 root oinstall 10928 Sep 4 2011 /usr/sbin/cvuqdisk

2. Verify the Grid environment

node1->find /home/grid/grid/ -name runcluvfy.sh

/home/grid/grid/runcluvfy.sh

Checking ASMLib configuration.

Node Name Status

------------------------------------ ------------------------

node1 passed

node2 (failed) ASMLib does not list the disks "[VOL2, VOL1, VOL5, VOL4, VOL3]"

node1->./runcluvfy.sh stage -pre crsinst -fixup -n node1,node2 -verbose >> /home/grid/runcluvfy.check

Check: Package existence for "sysstat"

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

node2 missing sysstat-5.0.5 failed

node1 missing sysstat-5.0.5 failed

Result: Package existence check failed for "sysstat"

Check: Package existence for "pdksh"

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

node2 missing pdksh-5.2.14 failed

node1 missing pdksh-5.2.14 failed

Result: Default user file creation mask check passed

Checking consistency of file "/etc/resolv.conf" across nodes

Checking the file "/etc/resolv.conf" to make sure only one of domain and search entries is defined

File "/etc/resolv.conf" does not have both domain and search entries defined

Checking if domain entry in file "/etc/resolv.conf" is consistent across the nodes...

domain entry in file "/etc/resolv.conf" is consistent across nodes

Checking if search entry in file "/etc/resolv.conf" is consistent across the nodes...

search entry in file "/etc/resolv.conf" is consistent across nodes

Checking file "/etc/resolv.conf" to make sure that only one search entry is defined

All nodes have one search entry defined in file "/etc/resolv.conf"

Checking all nodes to make sure that search entry is "localdomain" as found on node "node2"

All nodes of the cluster have same value for 'search'

Checking DNS response time for an unreachable node

Node Name Status

------------------------------------ ------------------------

node2 failed

node1 failed

PRVF-5636 : The DNS response time for an unreachable node exceeded "15000" ms on following nodes: node2,node1
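With `-verbose`, the log is long and the failures are scattered through it. A sketch that pulls out only the lines mentioning `failed` or a PRVF/PRVG error code, assuming the log format shown above; `cluvfy_failures` is hypothetical, and a check that fails without either marker on its own line would be missed.

```shell
#!/bin/sh
# Sketch: print the lines of a runcluvfy.sh log that report a failure.
# Usage: cluvfy_failures LOGFILE
cluvfy_failures() {
    grep -E 'failed|PRV[FG]-[0-9]+' "$1"
}
```

Usage: `cluvfy_failures /home/grid/runcluvfy.check`. Rerunning it after each fix shows which failures remain.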

(1) The other node must run oracleasm scandisks

# oracleasm scandisks

Reloading disk partitions: done

Cleaning any stale ASM disks...

Scanning system for ASM disks...

Instantiating disk "VOL1"

Instantiating disk "VOL3"

Instantiating disk "VOL2"

Instantiating disk "VOL4"

Instantiating disk "VOL5"

# oracleasm listdisks

VOL1

VOL2

VOL3

VOL4

VOL5

(2) Installing sysstat is optional

# yum --disablerepo=* --enablerepo=c6-media install sysstat -y

(3) Remove ksh and install pdksh

# yum --disablerepo=* --enablerepo=c6-media install pdksh

Loaded plugins: fastestmirror, refresh-packagekit

Loading mirror speeds from cached hostfile

c6-media | 4.0 kB 00:00 ...

Setting up Install Process

No package pdksh available.

Error: Nothing to do

# rpm -e ksh-20120801-10.el6.x86_64

# rpm -ivh pdksh-5.2.14-30.x86_64.rpm

warning: pdksh-5.2.14-30.x86_64.rpm: Header V3 DSA/SHA1 Signature, key ID 73307de6: NOKEY

Preparing... ########################################### [100%]

1:pdksh ########################################### [100%]

(4) PRVF-5636 and PRVF-5637 are caused by a bug on RHEL 6 and later, fixed in 11.2.0.4

# sed -i 's/recursion yes/recursion no/1' /etc/named.conf ##fixes PRVF-5636

# service named restart

Checking the file "/etc/resolv.conf" to make sure only one of domain and search entries is defined

File "/etc/resolv.conf" does not have both domain and search entries defined

Checking if domain entry in file "/etc/resolv.conf" is consistent across the nodes...

domain entry in file "/etc/resolv.conf" is consistent across nodes

Checking if search entry in file "/etc/resolv.conf" is consistent across the nodes...

search entry in file "/etc/resolv.conf" is consistent across nodes

Checking file "/etc/resolv.conf" to make sure that only one search entry is defined

All nodes have one search entry defined in file "/etc/resolv.conf"

Checking all nodes to make sure that search entry is "localdomain" as found on node "node2"

All nodes of the cluster have same value for 'search'

Checking DNS response time for an unreachable node

Node Name Status

------------------------------------ ------------------------

node2 failed

node1 failed

PRVF-5637 : DNS response time could not be checked on following nodes: node2,node1

##add the line

# sed -i 's/allow-query { any; };/&\n\ allow-query-cache\ { any; };/' /etc/named.conf ##fixes PRVF-5637

# vi /etc/named.conf ##only these 3 lines change

allow-query { any; };

allow-query-cache { any; };

recursion no;

# service named restart

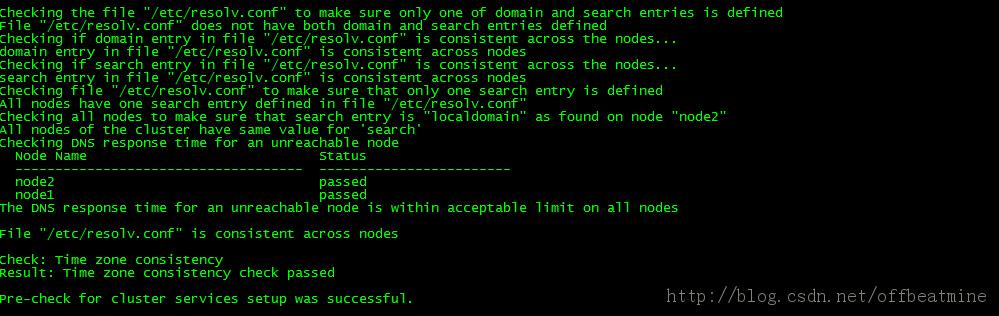

Checking the file "/etc/resolv.conf" to make sure only one of domain and search entries is defined

File "/etc/resolv.conf" does not have both domain and search entries defined

Checking if domain entry in file "/etc/resolv.conf" is consistent across the nodes...

domain entry in file "/etc/resolv.conf" is consistent across nodes

Checking if search entry in file "/etc/resolv.conf" is consistent across the nodes...

search entry in file "/etc/resolv.conf" is consistent across nodes

Checking file "/etc/resolv.conf" to make sure that only one search entry is defined

All nodes have one search entry defined in file "/etc/resolv.conf"

Checking all nodes to make sure that search entry is "localdomain" as found on node "node2"

All nodes of the cluster have same value for 'search'

Checking DNS response time for an unreachable node

Node Name Status

------------------------------------ ------------------------

node2 passed

node1 passed

The DNS response time for an unreachable node is within acceptable limit on all nodes

File "/etc/resolv.conf" is consistent across nodes
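The three named.conf edits can be scripted so that running them twice does no harm. A sketch assuming GNU sed and a named.conf in which `allow-query { any; };` and `recursion yes;` already appear as in this setup; `fix_named_conf` is hypothetical. Try it on a copy first, then run `service named restart`.

```shell
#!/bin/sh
# Sketch: idempotently apply "recursion no" and add
# "allow-query-cache { any; };" right after the allow-query line.
# Usage: fix_named_conf FILE
fix_named_conf() {
    f=$1
    sed -i 's/recursion yes;/recursion no;/' "$f"
    # Add allow-query-cache only once, reusing the allow-query indentation.
    if ! grep -q 'allow-query-cache' "$f"; then
        sed -i 's/^\([[:space:]]*\)allow-query[[:space:]]*{ any; };/&\n\1allow-query-cache { any; };/' "$f"
    fi
}
```

Unlike the one-off sed commands above, a second run leaves the file unchanged instead of adding a duplicate `allow-query-cache` line.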

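1.7 Reboot the VMs and take snapshots

(1) Check resolv.conf

With NetworkManager disabled, there is no longer a conflict (NetworkManager no longer rewrites the file)

(2) Check whether the ASM disks are mounted

They are mounted automatically

(3) Enable named at boot

# chkconfig --level 345 named on

(4) Clear /tmp

(5) ./runcluvfy.sh stage -pre crsinst -fixup -n node1,node2 -verbose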

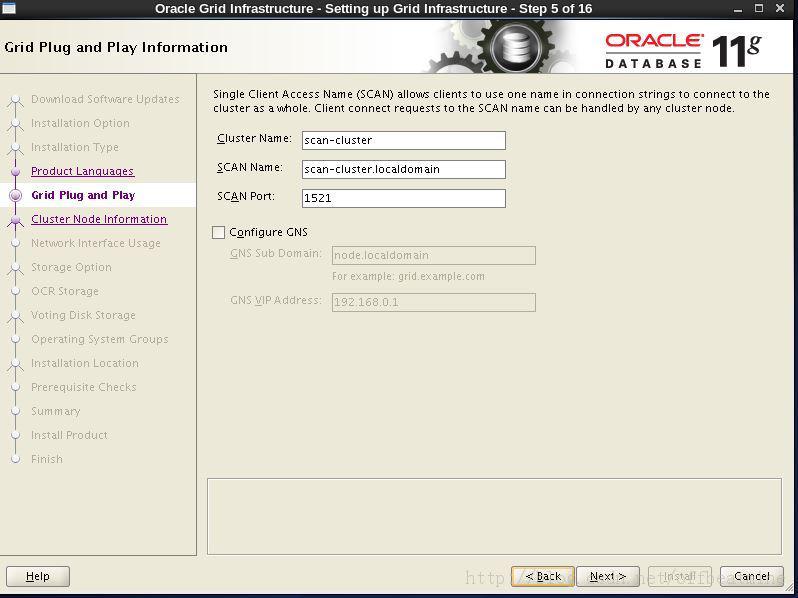

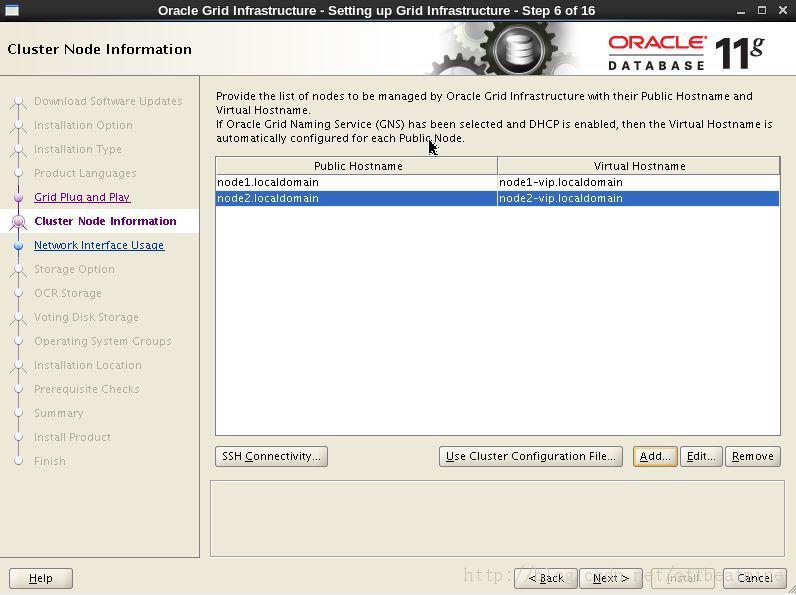

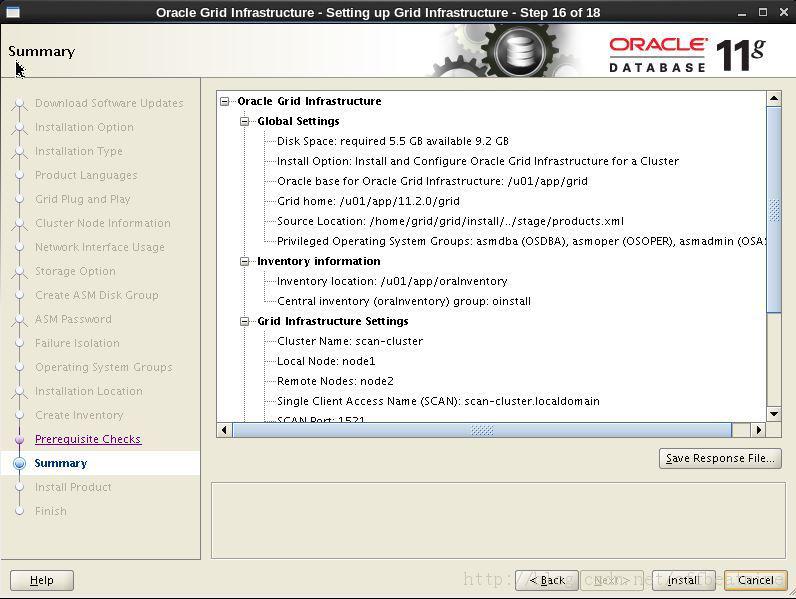

2. Start the Grid installation